Beyond silicon: We discover the processors of your future tech

From stacked CPUs to organic and quantum processing

Note: Our beyond silicon feature has been fully updated. This article was first published in October 2007.

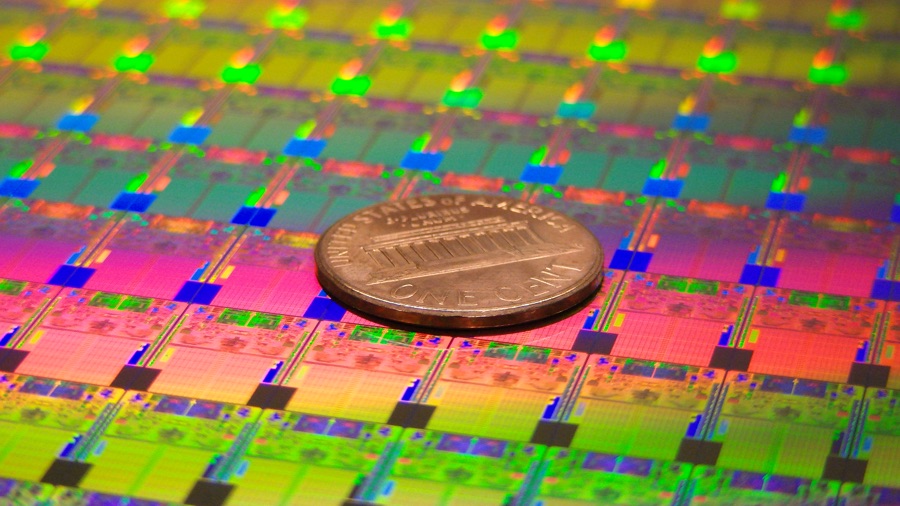

Today's processors are made from silicon, which itself is fashioned from one of the most abundant materials on earth: sand. But it's getting harder and harder to make ever smaller circuits. Processor technology has moved from 90nm fabrication in the mid-2000s to 14nm now, and that is predicted to shrink further to a barely believable 7nm or even 5nm by 2021. So chipmakers are looking for alternatives: not just new materials, but maybe even biological components.

A little bit of history

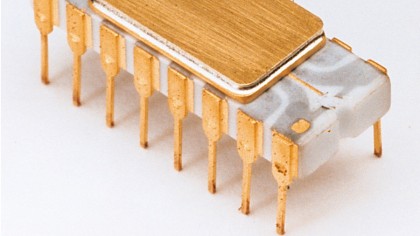

Intel's first microprocessor, the 4004, had 2,300 transistors. Modern processors have several billion. That's been achieved by cramming ever more transistors into the same amount of silicon, but as you do that the laws of physics kick in and your processor starts generating heat – and the more power you want, the more heat you generate. The fastest Pentium 4 processors could be overclocked beyond 8GHz, but to achieve that you needed liquid nitrogen to stop them burning up.

Today's processors are much more complicated than the single-core Pentiums, with multiple cores and three-dimensional architectures that are incredible feats of engineering, but sooner or later silicon will hit a wall. It won't be able to provide the exponential growth in processing power we're used to, because we'll be down to components that are only a few atoms wide.

What happens then?

Rack 'em and stack 'em

One option is to stick with silicon but to use it in different ways. For example, today's processors are largely flat – rather than try to cram ever more transistors into the same amount of space, we could take an architectural approach and build up to make the silicon equivalent of skyscrapers (hopefully avoiding the silicon equivalent of The Towering Inferno).

Or we could take what's known as a III-V approach. Silicon sits in group IV of the periodic table, so this means using materials made from the elements on either side of it, in groups III and V, in layers above the silicon. This would reduce the amount of power needed to move electrons around, which should make it possible to make transistors smaller and pack them in more tightly. The favourite candidate for III-V manufacturing is gallium nitride, which has been used in LEDs for a few decades and can operate at much higher temperatures than the previous favourite, gallium arsenide.

Another option is to rethink the CPU itself. Intel, Nvidia and AMD are all moving in the same direction here: look at the processor die of any chip with integrated graphics and you'll see more and more of its real estate being handed over to the GPU.

Traditionally the CPU has done the difficult stuff while the GPU has handled the graphics (in a game, for example, the CPU runs the AI), but the GPU's ability to do simpler tasks in massively parallel ways means designers are increasingly looking at splitting the overall workload between CPU and GPU according to which is better suited to each job.

The more the GPU can do the better, because GPUs are massively parallel circuits, which is another way of saying they're fairly simple components crammed together in big numbers: thousands of cores compared to the handful in a CPU. Unlike CPUs, which are already pushing the limits of miniaturisation, GPUs gain performance largely by adding more of those simple cores, so they have a long way to go before the laws of physics ruin their exponential growth.

Carbon dating

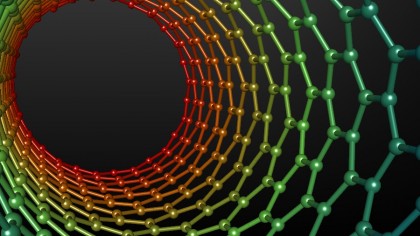

What if silicon runs out of road? Carbon could come to the forefront instead, in the form of carbon nanotubes, which are essentially sheets of the "miracle material" graphene rolled into tubes. In October, IBM published a paper in the journal Science describing a new way of making electrical contacts to carbon nanotubes.

Unlike previous attempts, IBM's method didn't encounter increasing electrical resistance as contact sizes were reduced. IBM says that we may see carbon nanotube processors "within the decade".

However, as IBM's Shu-Jen Han explains on IBM's blog, those processors are still a long way from reality. "We've developed a way for carbon nanotubes to self-assemble and bind to specialised molecules on a wafer. The next step is to push the density of these placed nanotubes (to 10nm apart) and reproducibility across an entire wafer," he says.

But when IBM finally cracks it, the potential is enormous. "Better transistors can offer higher speed while consume less power. Plus, carbon nanotubes are flexible and transparent. They could be used in futuristic 'more than Moore' applications, such as flexible and stretchable electronics or sensors embedded in wearables that actually attach to skin – and are not just bracelets, watches, or eyewear," Han says.

Top Image Credit: Intel

Contributor

Writer, broadcaster, musician and kitchen gadget obsessive Carrie Marshall has been writing about tech since 1998, contributing sage advice and odd opinions to all kinds of magazines and websites as well as writing more than twenty books. Her latest, a love letter to music titled Small Town Joy, is on sale now. She is the singer in spectacularly obscure Glaswegian rock band Unquiet Mind.