Nvidia's latest supercomputer is like 'a datacenter in a box'

Long-awaited Pascal graphics start inside the DGX-1

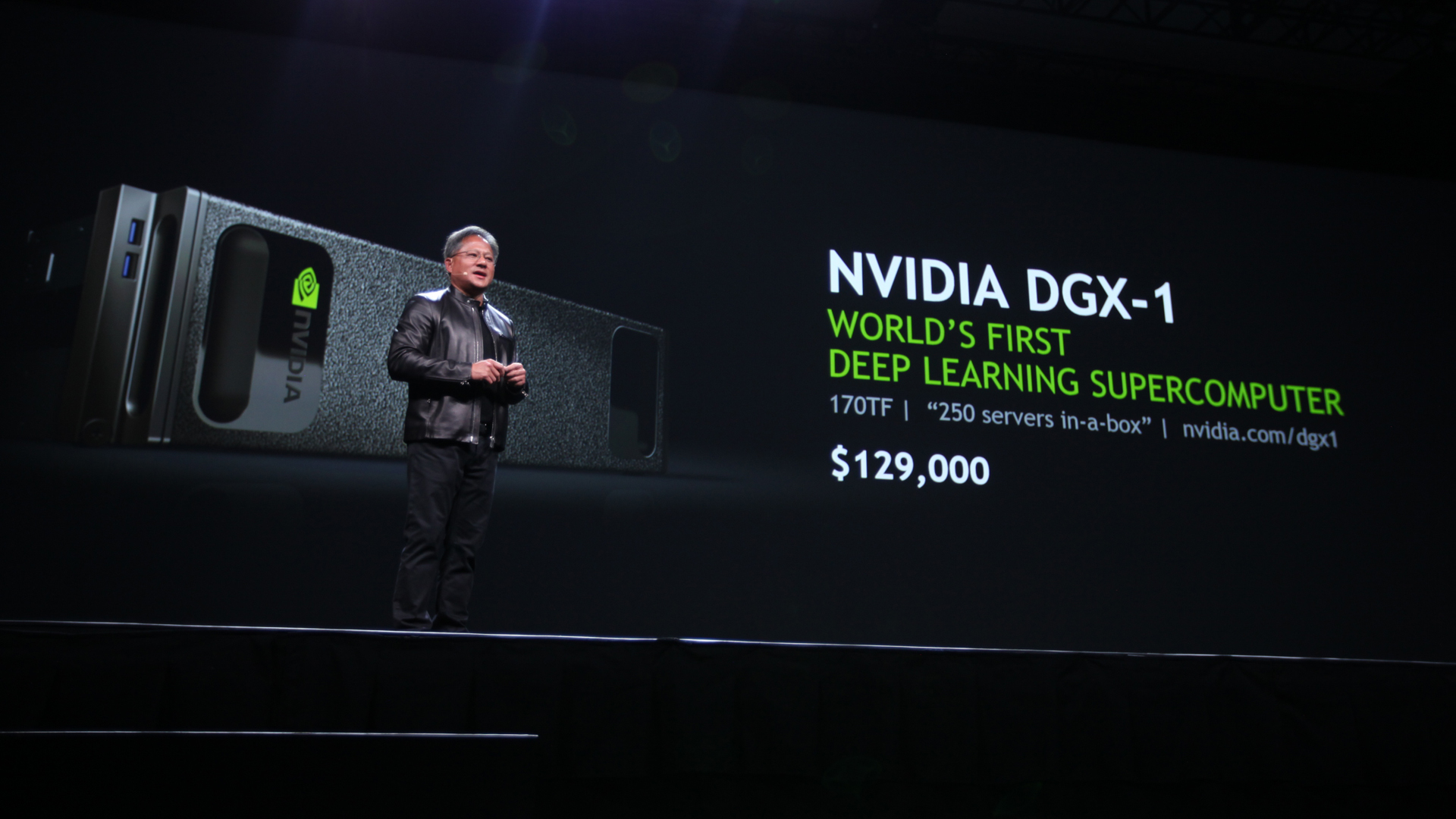

Move over, Watson – if you haven't already. Nvidia has just unveiled the DGX-1, the "world's first deep learning supercomputer" built on the firm's newly announced Pascal architecture.

Designed to power the machine learning and artificial intelligence efforts of businesses through applying GPU-accelerated computing, the DGX-1 delivers throughput equal to that of 250 servers running Intel Xeon processors.

Specifically, the DGX-1 can pump out 170 teraflops – that's 170 trillion floating operations per second – with its eight 16GB Tesla P100 graphics chips. By comparison, and while built for a different purpose, IBM's Watson supercomputer is clocked at 80 teraflops.

"It's like having a data center in a box," Nvidia CEO and co-founder Jen-Hsun Huang said on stage during his GPU Technology Conference (GTC) keynote address.

As an example, Huang used the time taken to train AlexNet, a popular neural network for computer image recognition developed by University of Toronto graduate Alex Krizhevsky. These neural networks have to be "trained" by powerful computers to properly recognize images – or whatever their primary function is – on their own.

For the 250 servers running on Intel Xeon chips, that would take 150 hours of computation time. The DGX-1 can do the work in two hours with its single cluster of Tesla P100 chips.

The implications this has for researchers' ability to more quickly test their findings in AI, 3D mapping or even better image search on Google is – frankly – astounding. Well, at least until Nvidia outdoes itself again next year by a factor of 10.

Are you a pro? Subscribe to our newsletter

Sign up to the TechRadar Pro newsletter to get all the top news, opinion, features and guidance your business needs to succeed!

(The DGX-1 is 12 times as powerful as Nvidia's previous GPU-based supercomputer, unveiled at GTC 2015.)

Feel the power of Pascal

Named after yet another storied contributor to science, Nvidia's newest graphics processing architecture, Pascal, finds its first home inside the DGX-1 as the Tesla P100 datacenter accelerator. That system accelerator is, naturally, based on Nvidia's Pascal GP100 GPU.

Pascal doesn't just add more on top of what the previous Maxwell architecture did, but rather makes it more efficient. For instance, the Pascal chip's streaming multiprocessors (SMs) have half as many CUDA computing cores inside than previous models: 64 CUDA cores and four texture units. (That makes 3,840 computing cores and 240 texture units for the GP100.)

However, because Pascal contains more than twice as many SMs as before, it is in turn more powerful than previous generations.

In short, supercomputers or servers running on the Pascal-powered Tesla P100 can do more on their own than before, potentially meaning smaller – or at least more efficient – datacenters and more easily approachable neural network and AI development.

Huang expects to see servers from IBM, Hewlett Packard Enterprise, Dell and Cray in the first quarter of 2017, and one will retail for a cool $129,000 (about £91,130, AU$171,161).

What will Pascal mean for folks like you and I? Well, later this year we can expect to see another leap in consumer-grade graphics chips, likely focused on powering truly high-quality virtual reality more efficiently than throwing a few Titans at it and calling it a day.

- These are the best VR laptops right now

Lead Image Credit: Nvidia Corporation (Flickr)

Joe Osborne is the Senior Technology Editor at Insider Inc. His role is to leads the technology coverage team for the Business Insider Shopping team, facilitating expert reviews, comprehensive buying guides, snap deals news and more. Previously, Joe was TechRadar's US computing editor, leading reviews of everything from gaming PCs to internal components and accessories. In his spare time, Joe is a renowned Dungeons and Dragons dungeon master – and arguably the nicest man in tech.

Most Popular