How AI can restore our forgotten past

Intelligent preservation

AI will eradicate 70% of jobs, and quite possibly end the human race. These are two recurring themes in media coverage of artificial intelligence.

It’s scary stuff, but AI also has an important part to play in mapping our past and present. Put together machine learning, neural networks and a vat full of data, give them a stir, and you can get amazing results. Today you can restore photos, but one day we may be able to use AI to map out the past in VR.

Here we take a look at some of the current research into AI that's exploring some promising, and far less threatening, uses for the technology.

Restoring images

Rips and creases in historical pics? Scratched out eyes in a photo of your ex from 10 years ago? AI can fix all that.

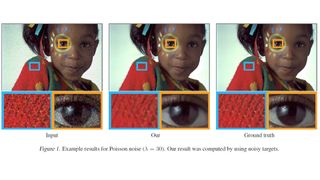

Several AI and machine learning projects that are in the works take a photo that’s noisy, ripped or blurred and make it pristine using restoration algorithms that do far more than you could with a photo editor, or a felt-tip pen and some Tipp-Ex.

Deep Image Prior, a neural network created by an Oxford University research team, and Nvidia’s image reconstruction, demonstrated in April of this year, show how AI can digitally restore a partially obliterated image.

Nvidia’s process involves training its AI by taking chunks of images from image libraries ImageNet, Places2 and CelebA-HQ, which are huge repositories of images of almost every kind of common object.

Just as you might learn to draw by sketching real-life objects, the image reconstruction algorithms here are finessed by re-drawing chunks of missing image data in these photos, then referring to the 'complete' original picture to see how accurate the attempt was.
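The hide, redraw and compare loop described above can be sketched in a few lines of Python. This is a toy illustration, not Nvidia's method: the function names (`mask_chunk`, `naive_fill`, `masked_error`) are made up for this example, and the 'reconstruction' simply fills the hole with the average of the visible pixels, where a real system would use a trained neural network.

```python
def mask_chunk(image, top, left, height, width):
    """Return a copy of the image with a rectangular chunk hidden (None)."""
    masked = [row[:] for row in image]
    for r in range(top, top + height):
        for c in range(left, left + width):
            masked[r][c] = None
    return masked


def naive_fill(masked):
    """Stand-in for the network: fill hidden pixels with the mean of visible ones."""
    visible = [p for row in masked for p in row if p is not None]
    mean = sum(visible) / len(visible)
    return [[mean if p is None else p for p in row] for row in masked]


def masked_error(original, masked, attempt):
    """Mean absolute error, measured only over the pixels that were hidden."""
    diffs = [
        abs(o - a)
        for o_row, m_row, a_row in zip(original, masked, attempt)
        for o, m, a in zip(o_row, m_row, a_row)
        if m is None
    ]
    return sum(diffs) / len(diffs)


# A tiny 4x4 greyscale "photo" (hypothetical pixel values, 0-255).
original = [
    [10, 10, 10, 10],
    [10, 50, 50, 10],
    [10, 50, 50, 10],
    [10, 10, 10, 10],
]
masked = mask_chunk(original, top=1, left=1, height=2, width=2)
attempt = naive_fill(masked)    # the "re-drawn" picture
error = masked_error(original, masked, attempt)  # how far off the attempt was
```

The naive fill does badly here because the hidden chunk is brighter than everything around it. In training, a score like `error` is the kind of number a network is adjusted to push down, over many images and many differently placed holes.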

Getting painterly

These algorithms use some of the same techniques as a restorer of oil paintings. We’re talking about 'in-painting'. This is where cracks and other damage in a painting are differentiated from deliberate texture or brush-strokes, and then filled in to make the picture look as it would have when first painted.

Restorers use X-rays, which reveal the different layers of paint, to do this manually. AI replicates some of the effect with machine learning.

Let’s not let loose robots with paint brushes on any old masters just yet, but AI could also be used to reveal what a painting looked like hundreds of years ago, without the need to spend tens of thousands of dollars on restoration.

Think of a famous painting. It’s probably in a fancy art gallery behind a sheet of glass. Maybe it’s not even the original on show, because the curators know how many idiot children with fingers caked in crisp dust wander about the place on school holidays.

Even the well-maintained paintings you see in famed art galleries are affected by age. Thick layers of varnish on paintings from the 1500s will have dimmed them over the years. Van Gogh was too skint to afford decent oils, so the reds are gradually retreating from many of his works and the yellows are turning brown.

However, what about an old master found in a basement, or a painting so old it’s more canvas than paint? A bad restoration job can turn an old masterwork into something that looks like it was made by an 8-year-old, like the Ecce Homo, re-painted into oblivion by an 81-year-old amateur. AI could avoid this.

Spotting forgeries

The big names in AI have sensibly stayed away from suggesting they can improve a painting that's worth millions. However, artificial intelligence is already being used to make sure we’re not sold a fake version of art history.

In 2017 researchers from Rutgers University in New Jersey published a paper outlining software that could be used to tell a forgery from an authentic painting. It claims to do the job better than the professionals.

Line drawings by Pablo Picasso, Henri Matisse and Egon Schiele were analyzed at the stroke level to build a stylistic fingerprint for each artist. The authors claim it works “with accuracy 100% for detecting fakes in most settings”.

This is one way AI can get involved in the art world without letting any robot retouchers loose on paintings worth tens of millions.

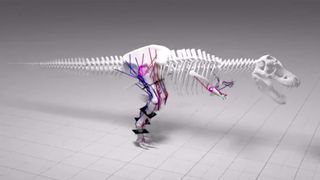

Working out dinosaurs

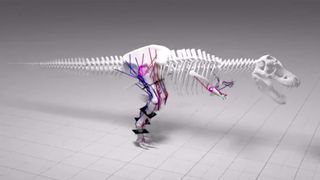

In uncovering the truth, sometimes AI bursts a few bubbles. Among other things it's telling us that the Tyrannosaurus Rex we know from Jurassic Park bears little resemblance to what the dinosaur was actually like.

One current theory is that such large dinosaurs may have been feathered rather than scaly, and an AI model from the University of Manchester now suggests a T-Rex couldn’t out-run a jeep either.

The researchers mapped out the T-Rex’s bone and muscular structure, then used machine learning to see how fast this creature could get from point A to point B without breaking any bones.

The findings? It was so big and heavy that a T-Rex could likely only walk, not run. Sprinting after some kids and scientists in search of dinner would simply put too much stress on its body.

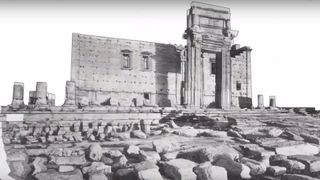

Microsoft historical site mapping

Iconem is a startup specializing in 'heritage activism' – recreating historic sites that are under threat from war, or simply time, in 3D, powered by Microsoft AI. It creates photo-realistic recreations of places like the Alamut fortress in Iran and the El-Kurru royal burial ground in Sudan.

The artificial intelligence element enters in the way the 3D models are constructed. Iconem uses photogrammetry, enabling 3D modeling of objects using 'flat' photos.
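To give a rough feel for how depth can be recovered from flat photos, here is the textbook two-camera relationship: a point that shifts a lot between two side-by-side photos is close, and one that barely moves is far away. This is a simplified sketch and not Iconem's pipeline, which isn't detailed here; the function name and the numbers are illustrative assumptions.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth (metres) of a point seen in two photos taken side by side.

    focal_length_px: camera focal length, in pixels
    baseline_m: distance between the two camera positions, in metres
    disparity_px: how far the point shifts between the two photos, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical values: 1000 px focal length, photos taken 0.5 m apart.
near = depth_from_disparity(1000, 0.5, 100)  # large shift, close point
far = depth_from_disparity(1000, 0.5, 10)    # small shift, distant point
```

Full photogrammetry works with many photos from arbitrary angles rather than one tidy pair, but the underlying idea of turning shifts between images into distances is the same.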

Its team took 50,000 photos of the ancient city of Palmyra, which had been occupied by Islamic State fighters in 2015. They used drones to avoid landmines, digitally recording not just what was left of the site, but also the damage caused by the occupiers.

Isis returned to Palmyra after Iconem mapped the site and, in 2017, destroyed part of the Roman theater at the site. There’s a vital immediacy to the work; it’s easy to think of historical sites as frozen, sure to exist as they are in perpetuity, but Iconem’s work shows this is not the case.

You can check out some of its 3D views of Palmyra on YouTube, as part of a collaboration with Google Arts & Culture. If Iconem’s work isn’t good content for VR, nothing is.

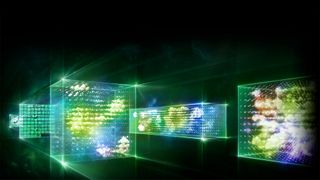

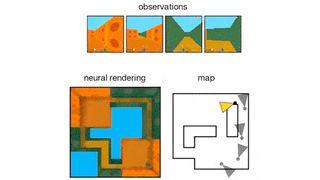

Bring the past to life

VR and AI are better pals than you might think. Google’s DeepMind AI lab has devised a neural network that can construct a 3D environment from as little as a single image. It extrapolates or 'imagines' the 3D scene based on recognition of objects and their most likely shapes. The more images it has to work with, the more faithful a replication of the real environment it can make.

In its demonstration, DeepMind’s AI creates a 3D labyrinth from a handful of flat images. The AI part is used to discern close planes from far ones, something we naturally take for granted in our recognition of scenes.

This tech has a future worth day-dreaming about. Imagine being able to recreate the home in which you grew up, in VR, using pictures from an old photo album. Or crashing your parents’ wedding as if you were a time-traveller.

DeepMind’s researchers detailed this project in the June 2018 issue of Science. The 3D maze the AI made had a visual quality that roughly matched that of the source images. It’s pretty rough-looking.

However, it doesn’t take a grand leap to imagine how it too could be enhanced using AI. Let’s go back to the idea of mapping your parents’ wedding day. Their faces are vague, just a small part of a scan of an old slice of 35mm film. But there are hundreds of photos of them in the cloud, uploaded over the years, that can be used to enhance the render, even if most were taken decades later.

Their wedding car is an Austin Healey, which the AI recognises, replacing it with a high-polygon rendering of the same model. Flat cobblestone textures are replaced with photorealistic ones and the AI recognises the church in the background. Not only is there a Google wireframe mesh of the building, the AI also pulls in thousands of photos uploaded near the same location to map out the surrounding area.

It’s the perfect storm of machine learning and big data. And, hey presto: we have a holodeck for your past and half-forgotten memories. Are we there yet? Of course not – but it makes for a satisfying tech-fueled daydream.

TechRadar's Next Up series is brought to you in association with Honor

Andrew is a freelance journalist and has been writing and editing for some of the UK's top tech and lifestyle publications including TrustedReviews, Stuff, T3, TechRadar, Lifehacker and others.