Mankind vs machine: Can our bodies keep up with the evolution of gadgets?

Our gadgets are evolving more quickly than we are. Should we be worried?

In June 2010, during the launch of the iPhone 4, Steve Jobs first introduced the 'retina display', saying: "It turns out there's a magic number right around 300 pixels per inch, that when you hold something around 10 to 12 inches away from your eyes, is the limit of the human retina to differentiate the pixels."

His words caused an immediate fuss among vision experts. Wired quoted Raymond Soneira, president of DisplayMate Technologies, complaining that it was a misleading marketing term. Phil Plait at Discover Magazine disagreed, arguing that the claim applied to the average human, not those with perfect vision.

Soneira shot back, writing: "Steve Jobs claimed that the iPhone 4 has a resolution higher than the Retina – that's not right. The iPhone 4 has significantly lower resolution than the retina. It actually needs a resolution significantly higher than the retina in order to deliver an image that appears perfect to the retina."
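The arithmetic behind the dispute is easy to sketch. The snippet below is a rough illustration rather than anything Jobs, Soneira or Plait published: it assumes the conventional definition of 20/20 vision as resolving detail about one arcminute across, and the function name `threshold_ppi` and the sharper 0.6-arcminute figure are hypothetical values chosen for the example.

```python
import math

ARCMIN_PER_DEGREE = 60


def threshold_ppi(distance_inches, acuity_arcmin=1.0):
    """Pixel density at which a single pixel subtends `acuity_arcmin`
    when viewed from `distance_inches` away.

    Assumption: 1 arcminute is the conventional figure for 20/20 vision.
    """
    angle_rad = math.radians(acuity_arcmin / ARCMIN_PER_DEGREE)
    pixel_pitch_inches = distance_inches * math.tan(angle_rad)
    return 1.0 / pixel_pitch_inches


# Jobs's quoted viewing distances, with 20/20 vision
for distance in (10, 12):
    print(f"{distance} in: {threshold_ppi(distance):.0f} ppi")

# Illustrative sharper-than-average eye (0.6 arcminutes is an assumed value)
print(f"12 in, sharper eye: {threshold_ppi(12, acuity_arcmin=0.6):.0f} ppi")
```

Under those assumptions the threshold works out to roughly 344 ppi at 10 inches and 286 ppi at 12 inches, which is why "around 300" is defensible for average eyesight, while a sharper eye would need a noticeably denser screen at the same distance.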

Today, the debate is somewhat moot. Whether or not you could see the edges of the iPhone 4's pixels, you certainly can't see those of the market's newer smartphones. The iPhone 7 Plus goes up to 401 ppi. The LG G3 manages 534 ppi, the Samsung Galaxy S6 Edge 577 ppi and the Sony Z5 Premium a ridiculous 806 ppi.

When you consider that magazines are printed at 300 dpi and that fine art printers aim for 720 dpi, you quickly realise that even mainstream display technology has far exceeded what the human eye is capable of discerning.

The world of technology moves quickly. Our gadgets are evolving more quickly than we are. But should we be worried - or just accept it as the latest stage in the evolution of our species?

A history of change

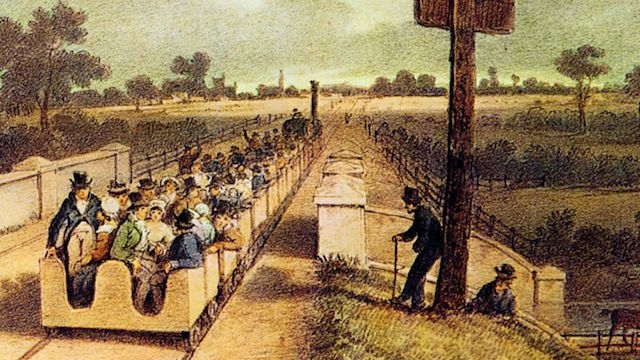

To answer that question, we should remember that it's hardly the first time this has happened. In 1830, before the opening of the first major railway line between Liverpool and Manchester, people feared that they wouldn't be able to breathe while travelling at such a speed (17mph), or that their eyes would be damaged trying to perceive the motion of the world outside the carriage.

Their fears were quickly allayed, but several passengers no doubt experienced their first taste of motion sickness instead - caused by the sensory conflict between our eyes seeing a non-moving carriage and our balance system telling us we're definitely moving.

It's not clear why some people are affected more than others, or exactly how the mechanism works, but motion sickness has likely existed since the first boats took to the ocean about 800,000 years ago. Our earliest record of the condition is from Greek physician Hippocrates, who wrote around 400BC that "sailing on the sea proves that motion disorders the body".

In the coming years, motion sickness is likely to become a rather larger part of our lives. With self-driving cars on the march, we'll have more time to read, work or play games without having to focus our attention on the road ahead. A study at the University of Michigan in 2015 found that six to twelve percent of adults riding in self-driving vehicles would be expected to experience moderate or severe motion sickness at some time.

Study author Michael Sivak explains: "The reason is that the three main factors contributing to motion sickness—conflict between vestibular (balance) and visual inputs, inability to anticipate the direction of motion and lack of control over the direction of motion—are elevated in self-driving vehicles."

One British firm is trying to alleviate this problem. Ansible Motion, based in Norwich, is building driving simulators that car manufacturers can use to test different factors that might make people feel less queasy.

"Our own simulation methodology, by default, inserts a layer of controllable sensory content - for motion, vision, haptic feedback, and so on," explains Ansible Motion's Technical Liaison, Phil Morse.

"This can be a useful way to explore human sensitivities while people are engaged in different tasks inside a car. And then the understanding of these sensitivities can wrap back around and inform the real vehicle design."

Body clock blues

Feel groggy when you wake up in the morning? You're noticing another example of our bodies struggling to keep pace with technological change.

Artificial lighting has only been around for a couple of hundred years, and before that people spent their evenings in darkness. Now, in much of the world, the sky at night glows orange rather than black, with the stars replaced by millions of tiny squares of blue light in the hands of the people below.

Different colours of light have different effects on our body. Blue wavelengths boost attention, reaction times and mood in the daytime - but are disruptive to our sleep at night.

As more and more people spend their evenings staring at televisions, tablets and smartphones, we're knocking our body's biological clock out of sync.

Multiple studies have linked light exposure at night to different types of cancer, diabetes, heart disease and obesity. It's not clear exactly what mechanism is causing that damage, but we know that exposure to light suppresses the secretion of melatonin, and some preliminary experimental evidence indicates that lower melatonin levels are associated with cancer. Meanwhile, short sleep has also been linked to increased risk for depression, diabetes (again) and cardiovascular problems.

There are some solutions. F.lux has long offered software that adapts the colour of your computer's display to the time of day, and last year Apple added a feature called Night Shift to iOS that does pretty much the same thing. But the most effective way to ensure you get a good night's sleep is to avoid looking at bright screens for the two to three hours immediately preceding bedtime, and maximise the amount of bright light you get during the day. At least until our caveman bodies adjust to the new, illuminated reality.

The shift to social

But perhaps the most fascinating way that technology has outpaced humans is in social networks. In 1993, British anthropologist Robin Dunbar published a paper showing that there's a correlation between the size of a primate's brain and the average size of its social groups. In humans, the number ranges from 100 to 250 depending on the individual - with a commonly used mid-point of 150 known as Dunbar's number. In a nutshell, the average human can only maintain stable social relationships with about 150 other people before some begin to decay.

Facebook and other social networks, however, have left Dunbar's number in the dust. At the time of writing, I've got 728 friends on Facebook. The average among adult Americans in 2014 was 338, with younger users having significantly more than older users. It's true that not all of those will be the "stable social relationships" that Dunbar is talking about, but Facebook allows us a safety net for our friendships - an easy way of rekindling them when the need arises.

In a way, that makes Facebook a kind of prosthesis for the parts of our brain that look after social interaction. The same could be said about Google and Wikipedia acting as a prosthesis for our memory, or calculators performing the same task for mathematical operations, or dating sites outsourcing our most basic evolutionary desires.

In fact, if you look around you, you're surrounded by hardware and software tools that we've built to handle things our brains used to take care of.

Philosopher and writer Luis de Miranda believes that this is just the latest step in humans slowly becoming what he calls "anthrobots".

"We've been anthrobots since we started to cooperate and organize our tasks," he explained in an email to me.

"As anthrobots, we're a hybrid unity made of flesh and protocols, creation and creature. It wouldn't make much sense to speak of a human species without technology."

De Miranda argues that we shouldn't fear this process, as it's inevitable - and besides, our brains are far more adaptable than we realise.

"If we have new possibilities, we'll use them," he wrote. "The more the techno-capitalist society multiplies points of contact between each of us and machines, the more we will be constantly mobilized to produce data. This can become alienating, because we're not here on Earth merely to produce data. What's positive is the [potential for the] democratisation of world-shaping via technology."

Creative collapse

But others argue that we should be paying more attention to what's happening to society in the process. One of the biggest critics of our modern relationship with technology is Nicholas Carr, who has authored multiple books on the subject. His latest, "Utopia is Creepy", collects blog posts from the past twelve years that document the negative influence that technology has on our lives.

"My goal is simply to say that I think this technology is imposing on us a certain way of being, a certain way of thinking, a certain way of acting," he explains. "We haven't really questioned it, and it's probably a good idea to start questioning it."

Carr's argument is two-pronged. The first prong is that the default mode we switch into when interacting with technology, which he describes as "very fast-paced, very distracted, multitasking, gathering lots of information very quickly", doesn't allow enough time for higher forms of human thinking - conceptual, creative, critical thinking. The second is that as we become more dependent on computers and software to do things, we become less likely to develop deep talents of our own.

"The idea is that we'll get greater convenience and we'll be freed up to make higher thoughts," he says. "But the reality is that we just become more and more dependent on the computers and the software to do things and hence lose those opportunities to develop rich skills, and engage with the world and with work in ways that are very fulfilling."

He doesn't put much stock in the argument that this is just the latest stage in our evolution.

"The argument that we've always adapted to technology and we'll adapt to this technology, I think is absolutely true, and it's also what scares me," he adds.

"Because we're very adaptable, and adaptation is a process of change. We never ask ourselves: Are we changing for the better, are we changing for the worse, or is it neutral?

"Simply saying we're good at adapting seems to me to avoid the hard question."