The world’s largest SSD is getting much bigger and smarter - here’s how

CEO of Nimbus Data gazes into the SSD crystal ball

Back in 2018, analyst house IDC predicted that the global datasphere (the total amount of data created by humanity) would reach 175 zettabytes by 2025. As of now, we don’t have the capacity to quench that thirst for storage.

Until we find the storage equivalent of the holy grail, there will be three distinct ways to handle the gargantuan amount of bits generated by humankind: tape, hard disk drives and solid state drives.

To discuss the burgeoning need for storage, we sat down (virtually) with Thomas Isakovich, founder and CEO of Nimbus Data - the company behind the world’s largest SSD (the ExaDrive DC100) to see how he views the enterprise storage market evolving in the years to come and his plans to address storage demand.

The Nimbus Data ExaDrive DC 100TB SSD was launched in 2018 and yet none of the big vendors have released anything above 32TB. Why do you think this is?

Regarding why the big SSD vendors allowed an upstart to take the crown for the highest capacity SSD and most energy efficient SSD ever made – well, we see two reasons. First is technology. Nimbus Data has a patent pending on the scale-out architecture of our SSD that gives us a fundamental advantage that will be tough for the other vendors to work around.

This scale-out architecture is key, since building a single ASIC flash controller capable of addressing such a large amount of flash would be very costly. The second reason is market size – the big vendors are chasing flash sales volume, and that’s in mobile phones, notebooks, and tablets, as well as boot drives for servers. That said, not too long ago the thought of a 16TB HDD sounded insane, when 2TB HDDs were “all the storage you would ever need”. Times change rapidly, and we expect our early focus on high-capacity SSDs to prove prescient.
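The interview does not describe how Nimbus Data's patent-pending scale-out architecture actually works. Purely as an illustration of the general idea, the Python sketch below shows one textbook way to present several smaller flash controllers as a single device: striping logical block addresses (LBAs) round-robin across them, so that no single controller has to address all of the flash. The `Location` type, the `map_lba` function and its `num_controllers` and `stripe_blocks` parameters are hypothetical and are not taken from ExaDrive.

```python
# Hypothetical sketch: a front end that presents N independent back-end
# flash controllers as one logical block device by striping LBAs.
# This is NOT Nimbus Data's patent-pending design; all names are illustrative.
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    controller: int  # which back-end controller owns the block
    local_lba: int   # block address within that controller's flash


def map_lba(lba: int, num_controllers: int, stripe_blocks: int) -> Location:
    """Map a device-wide LBA to (controller, local LBA) with round-robin striping."""
    if lba < 0:
        raise ValueError("LBA must be non-negative")
    stripe = lba // stripe_blocks          # which stripe unit the LBA falls in
    offset = lba % stripe_blocks           # position inside that stripe unit
    controller = stripe % num_controllers  # stripe units rotate across controllers
    local_stripe = stripe // num_controllers
    return Location(controller, local_stripe * stripe_blocks + offset)
```

With 4 controllers and 8-block stripe units, LBAs 0 to 7 land on controller 0, LBAs 8 to 15 on controller 1, and so on, wrapping back to controller 0 at LBA 32. Each controller then only manages a quarter of the address space, which is the kind of property that could let a scale-out design avoid one very large, costly controller ASIC.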

What are your views on computational storage when it comes to solid state drives?

When it comes to computational SSDs, I am bearish. Computational SSDs have proprietary architectures that require applications to be rewritten to take advantage of them, which will discourage mainstream adoption. Another drawback is that computational SSDs bind the “processor” to the “flash”, creating lock-in at a time when customers want to disaggregate processors and flash for both control and cost reasons (ed: Check out this storage processor from Pliops).

For example, major cloud providers are trying to make SSDs leaner, not heavier, by allowing direct access to the flash (e.g. Open-Channel SSDs). This gives these customers supreme control over cost, exactly the opposite of what computational SSDs propose. Finally, every application will require a different processor-to-flash ratio. There is no one size fits all, yet computational SSDs do not allow flash capacity and processing to be scaled independently.

That said, we do see potential for “intelligent SSDs”, where some storage system functionality, such as compression, or specific protocols, such as a key-value store interface, is handled by the SSD directly. We call this an “intelligent SSD” because the processor inside the SSD is used exclusively for SSD/storage-related functions and is not externally addressable by an application.
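To make the distinction concrete, here is a toy Python model of an “intelligent SSD” in the sense described above, assuming a key-value interface and zlib compression stand in for the drive's internal functions. The class `IntelligentSSD` and its methods are hypothetical; the point is that the host can only issue storage operations (`put`, `get`), while compression runs on the drive and is never exposed as a general-purpose processor.

```python
# Hypothetical toy model of an "intelligent SSD": the host sees only
# storage-level operations (put/get by key); compression runs inside the
# drive and is never exposed to the application. Class and method names
# are illustrative assumptions, not a real drive's API.
import zlib


class IntelligentSSD:
    def __init__(self) -> None:
        self._media: dict[bytes, bytes] = {}  # stands in for the flash media

    def put(self, key: bytes, value: bytes) -> None:
        # Compression happens on the drive, transparently to the host.
        self._media[key] = zlib.compress(value)

    def get(self, key: bytes) -> bytes:
        # The host gets back exactly what it stored; decompression is internal.
        return zlib.decompress(self._media[key])

    def physical_bytes(self) -> int:
        # Bytes actually consumed on the (simulated) media.
        return sum(len(v) for v in self._media.values())
```

Unlike a computational SSD, nothing here lets the application upload its own code to the drive; the processor's work is fixed to storage functions.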

What would it take for SSDs to replace tape drives for archiving?

SSDs replacing tape will not happen any time soon, and I say this despite being a flash vendor that wants to see flash everywhere. QLC flash continues to be at least 4x the cost/bit of nearline HDDs. And nearline HDDs still command a considerable cost premium over tape.

The sheer volume of data being created cannot be absorbed by existing flash manufacturing capacity – not even close. There is no one size fits all – different tiers and storage media will be used for different use cases for the foreseeable future. PLC flash will potentially provide another step function in cost reduction for SSDs, but HDDs will match this step function with their own innovation. In my view, tape, HDD, and SSD will coexist for at least a decade, likely longer.

Your drives use a 3.5-inch form factor. Why not embrace either the 2.5-inch or the Ruler form factor?

Regarding why ExaDrive uses a 3.5-inch form factor, it is because our SSDs are targeting (a) high density and (b) HDD to flash migration.

Over 20 years of R&D by enclosure vendors and rack makers has resulted in ultra-dense 3.5-inch storage enclosures. Today, 4U enclosures can hold 100 3.5-inch devices, enabling unparalleled storage density that is at least 4x better than what can be achieved with 2.5-inch based enclosures.

The Ruler form factor is promising, but Ruler’s goal of 1PB per 1U has been beaten by 3.5-inch and ExaDrive already. You can get 2.5PB per U today with 100 x 100 TB ExaDrives in 4U. Also keep in mind that there are over 1 billion 3.5-inch slots in production today. These HDD users will want to move to SSD selectively – and will want to mix-and-match HDD and SSD as part of a hybrid or tiered storage architecture to cost-optimize. 3.5-inch SSDs like ExaDrive enable that – whereas Ruler would require the customer to buy a totally new enclosure/server that is flash-only, making hybrid storage much more expensive and complex to achieve.
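The 2.5PB-per-U figure is straightforward arithmetic on the numbers quoted. A short Python check, assuming decimal units (1 PB = 1,000 TB) and counting raw capacity only:

```python
# Rack-density arithmetic from the interview: 100 x 100 TB ExaDrives in a
# 4U enclosure, compared with Ruler's stated goal of 1 PB per 1U.
# Decimal units (1 PB = 1,000 TB) are assumed; raw capacity only.
DRIVES_PER_ENCLOSURE = 100
TB_PER_DRIVE = 100
ENCLOSURE_HEIGHT_U = 4
RULER_GOAL_PB_PER_U = 1.0


def pb_per_u(drives: int, tb_per_drive: float, height_u: int) -> float:
    """Raw capacity per rack unit, in PB."""
    total_pb = drives * tb_per_drive / 1000  # TB -> PB
    return total_pb / height_u


density = pb_per_u(DRIVES_PER_ENCLOSURE, TB_PER_DRIVE, ENCLOSURE_HEIGHT_U)
print(f"{density} PB per U vs Ruler goal of {RULER_GOAL_PB_PER_U} PB per U")
# prints "2.5 PB per U vs Ruler goal of 1.0 PB per U"
```

The 10 PB in the enclosure divided over 4U gives 2.5 PB per U, or 2.5 times the Ruler target.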

Can you give us more details on what's inside your 100TB SSD? (controller, NAND etc.)

The 100 TB ExaDrive DC has 3,072 flash dies in a single device – in fact, that’s about 8 full wafers of flash memory in a single SSD device. The achievement of ExaDrive is to present all this capacity as a single device, using the SATA or SAS protocol, and to then power and cool it within the limited power and thermal envelope of nearline HDDs. That’s where our secret sauce lies. The sheer mechanical achievement alone is something to behold, candidly. Our USP is the patent-pending scale-out architecture, management SOC, power management firmware, and the mechanical/thermal design to pack that much flash into such a small form factor.
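The die and wafer counts can be sanity-checked the same way. This sketch assumes decimal units and divides the 100 TB user capacity evenly across the 3,072 dies and 8 wafers quoted; it ignores overprovisioning and spare area, so the actual raw capacity per die is probably somewhat higher.

```python
# Back-of-the-envelope check on the ExaDrive DC figures quoted above:
# 100 TB spread across 3,072 dies, described as "about 8 full wafers".
# Decimal units assumed; overprovisioning and spare area are ignored,
# so real raw capacity per die is likely somewhat higher than this estimate.
CAPACITY_TB = 100
DIES = 3_072
WAFERS = 8

gb_per_die = CAPACITY_TB * 1000 / DIES  # about 32.6 GB of user capacity per die
gbit_per_die = gb_per_die * 8           # about 260 Gbit per die
dies_per_wafer = DIES / WAFERS          # 384 dies per wafer

print(f"{gb_per_die:.1f} GB/die, {gbit_per_die:.0f} Gbit/die, {dies_per_wafer:.0f} dies/wafer")
# prints "32.6 GB/die, 260 Gbit/die, 384 dies/wafer"
```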

How do you see the future of high-capacity flash SSDs in the enterprise? (mixing and matching NAND types, other interfaces, etc.)

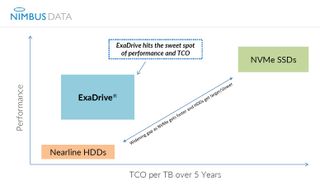

We see “capacity flash” and “performance flash” becoming mainstream in the enterprise, much like you had capacity HDDs (5,400 and 7,200 rpm) and performance HDDs (10K and 15K rpm) back in the day. The reality is that the performance gap between HDDs and NVMe SSDs is widening, so a capacity SSD like ExaDrive fills this gap perfectly. The attached chart tells the story.

What would it take to achieve a 1PB SSD (400TB with 2.5:1 compression)?

Well, I love a challenge. Candidly, this is quite doable with the ExaDrive architecture. Higher-density NAND will be needed, along with embedded processing to handle compression. Ask me again in a couple of years. We can do 200 TB in 2021 and likely 400 TB by 2023, depending on the timing of NAND density gains. That said, there are thermal aspects to consider: the higher the density and the lower the grade of the flash, the higher the power consumption and the greater the heat, so even if the density is achievable, other factors will come into play.

As for using the tape compression standard of 2.5:1, well, that’s a marketing question. A lot of new data being created is incompressible data, or data that has been compressed elsewhere before arriving at the SSD. It’s best to be transparent and honest about real capacity versus “potential capacity”.
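The gap between “potential capacity” and real capacity is easy to show. In the Python sketch below, the 2.5:1 ratio is the tape-style marketing assumption from the question, while random bytes from `os.urandom` stand in for data that was already compressed before reaching the SSD. The helper names `effective_tb` and `measured_ratio` are hypothetical, and clamping ratios below 1.0 assumes a drive would store incompressible data as-is rather than expand it.

```python
# Effective capacity under compression. The 2.5:1 figure is the tape
# convention from the question, not a measured ratio; random bytes model
# data that was already compressed elsewhere.
import os
import zlib


def effective_tb(raw_tb: float, ratio: float) -> float:
    """Host-visible capacity if data compresses at `ratio`:1.

    Assumption: ratios below 1.0 are clamped to 1.0, i.e. the drive
    stores incompressible data uncompressed instead of expanding it.
    """
    return raw_tb * max(ratio, 1.0)


def measured_ratio(sample: bytes) -> float:
    """Compression ratio zlib actually achieves on a data sample."""
    return len(sample) / len(zlib.compress(sample))


marketing = effective_tb(400, 2.5)                          # 1000.0 TB, i.e. 1 PB
random_data = os.urandom(1 << 20)                           # 1 MiB of incompressible bytes
realistic = effective_tb(400, measured_ratio(random_data))  # stays at 400.0 TB
```

On the random sample, zlib cannot shrink the data at all, so the 400 TB drive remains a 400 TB drive; the 1 PB figure only holds if the workload actually compresses at 2.5:1.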

What are the differences in the second generation ExaDrive DC and do you see NVMe or Ethernet being on your radar soon to match the capacity increase?

We further improved the thermal design to better support ultra-high-density top-loading storage enclosures (4U 90-bay+). We also added compatibility with Broadcom’s latest tri-mode SAS/SATA/NVMe hardware RAID controllers. As for the second part of the question, we are eyeing both technologies – I can’t share much more publicly right now, though.

Désiré has been musing and writing about technology during a career spanning four decades. He dabbled in website builders and web hosting when DHTML and frames were in vogue and began exploring the impact of technology on society just before the start of the Y2K hysteria at the turn of the last millennium.