Adobe Firefly's latest image model looks like a generational leap and I can't wait to try it

Detail, precision control, and the end of 'blank canvas syndrome' coming from Adobe

Adobe Firefly is already a hit with Photoshop users of all stripes, but this next iteration could make the generative image tool, which does not rely on publicly scraped images, indispensable.

This week at Adobe Max, London, the creative software company announced an update to its Photoshop Firefly generative AI utility that deepens the integration and vastly expands its capabilities through the adoption of a new model: Firefly Image 3 Model.

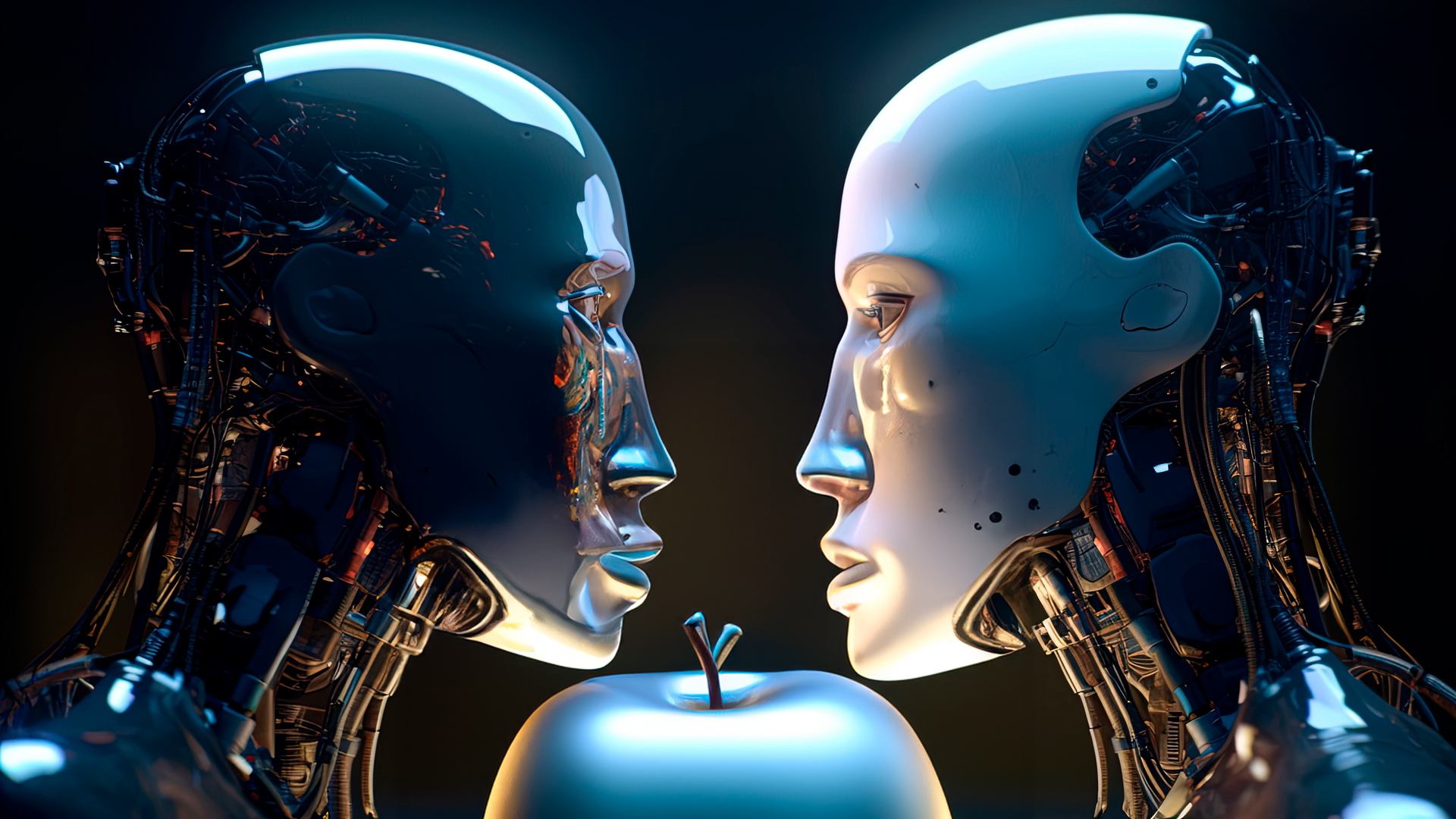

I've been using Adobe Firefly almost since its introduction and while its power is impressive, its use is simple. You select a photo, area, or image object in Photoshop, and then in the text prompt area describe what you want to appear. I've used it to extend table tops to help fill out a 16:9 aspect-ratio image and to add a metallic apple to this Getty Image (below).

Firefly Image 3 Model, however, will add much finer control and new image workflows to Firefly's generative arsenal. Among the key enhancements are:

- Reference Image

- Generate Background

- Generate Similar

- Enhance Detail

Perhaps most useful is Firefly's ability to help virtually anyone fill a blank canvas with pro-level imagery.

In a demonstration I watched, Adobe executives started with a blank canvas and then described in a prompt the scene they wanted: A bear playing guitar by a campfire. What's new here, though, is how the enhanced generative tools include the ability to use reference images, set a content type, and add effects, all before generation begins.

It still takes about 20-25 seconds for a render, but there are now more options, and the image detail, even when zoomed in, is far superior to what I've seen from Firefly Image 1 or 2 models.

The new reference image skills are especially powerful. With the bear, we selected its acoustic guitar and from a series of reference guitar images chose an electric one and then had Firefly replace the original guitar. Firefly not only put the new guitar in place, but it properly lit it and adjusted the bear image so that its grip matched the fresh instrument.

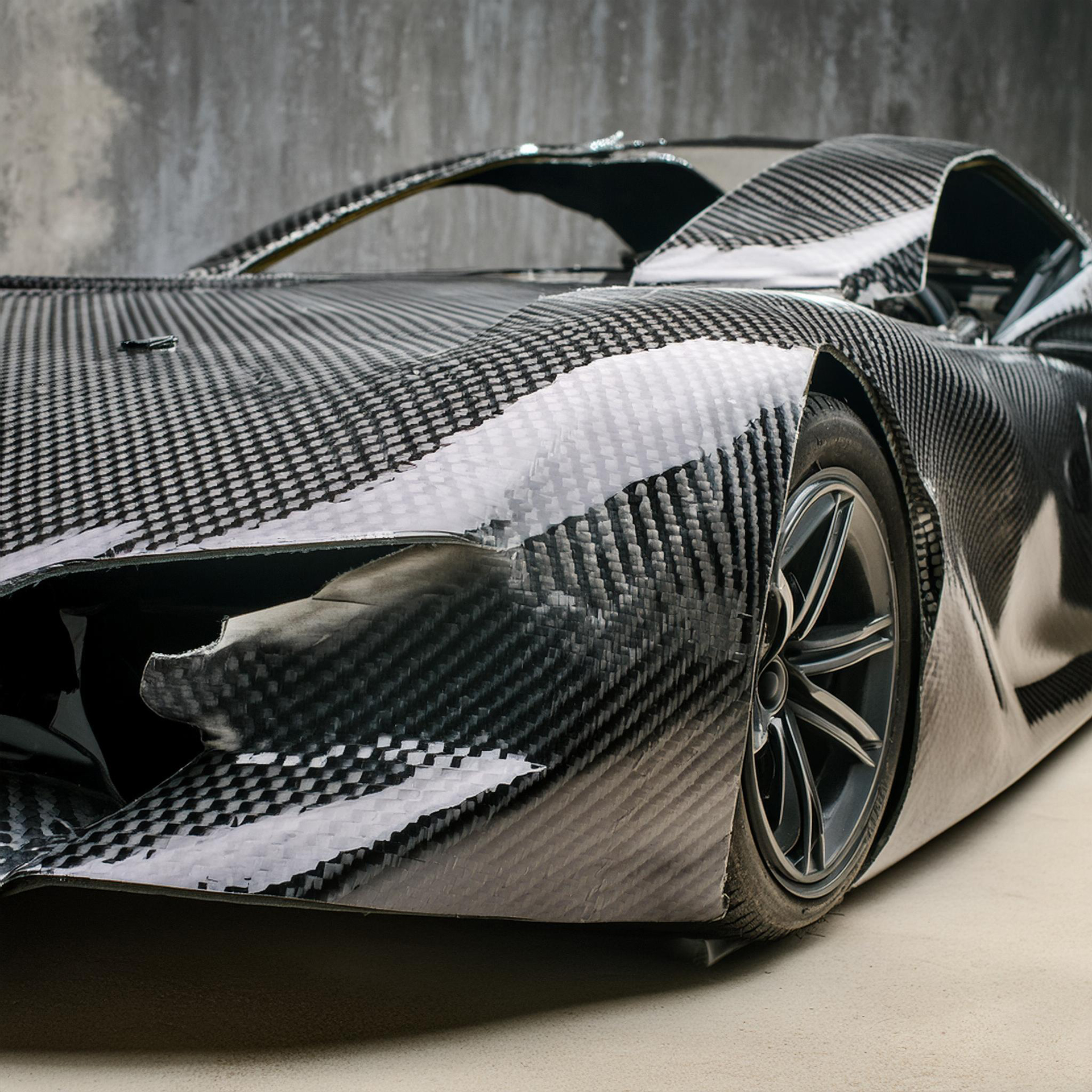

It's also now just as easy to swap out a background for something new. We started with a bottle of pink perfume and then removed the background and asked Firefly to put it in pink water. The result was a dramatically lit bottle floating on a sea of pink water. Because the background is just an object, swapping it for snow or sand was also easy. I noticed how in each instance, the lighting and blend between the bottle and the new backgrounds were visually perfect.

Reference images mean that once you have an idea of how you want to replace a fill, surface, or object, you can use them to drive each of those iterations. Firefly just makes it all look natural and good.

The new Generate Similar looks like a great way to iterate on an initial image idea.

Adobe's VP of Generative AI, Alexandru Costin, told me the new model is now better at understanding longer prompts and takes advantage of an improved style engine. The results are also more natural because Firefly Image 3 Model doesn't default to what might look most aesthetically pleasing, like producing all portraits in "golden hour light."

It can use references to match not only styles but also image structure, and then build countless variations.

Firefly Image 3 Model will arrive first in the Adobe Photoshop desktop beta and the Firefly web app beta today (April 23, 2024). General availability is expected in Photoshop and Firefly later this year.

A 38-year industry veteran and award-winning journalist, Lance has covered technology since PCs were the size of suitcases and “on line” meant “waiting.” He’s a former Lifewire Editor-in-Chief, Mashable Editor-in-Chief, and, before that, Editor in Chief of PCMag.com and Senior Vice President of Content for Ziff Davis, Inc. He also wrote a popular, weekly tech column for Medium called The Upgrade.

Lance Ulanoff makes frequent appearances on national, international, and local news programs including Live with Kelly and Mark, the Today Show, Good Morning America, CNBC, CNN, and the BBC.