Apple's careful approach is killing my interest in Apple Intelligence – here's what I want Apple to do

Get in the race, Apple

Apple has arguably delivered a lot of artificial intelligence in the roughly 8 months since it unveiled Apple Intelligence at WWDC 2024, but it's also failed to deliver the most promising aspect of its AI strategy: a smarter, more useful, and far more aware Siri.

Siri hasn't been entirely left out. It's more conversational, but the limits of its capabilities are still painfully apparent. Virtually all difficult questions are handed off to ChatGPT, OpenAI's far more powerful generative chatbot. And it's not even a particularly smooth or fast handover.

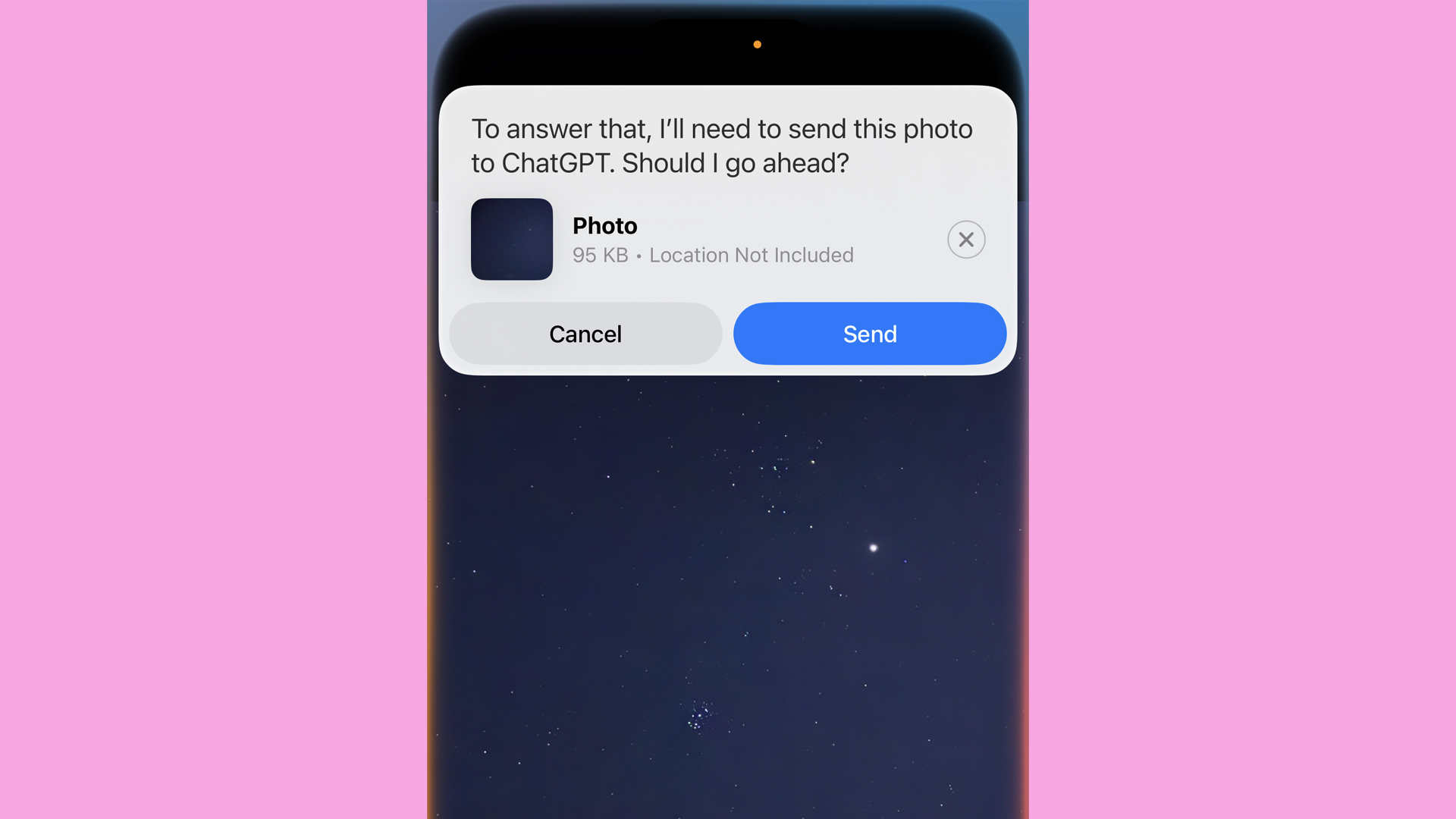

When I was on vacation, I started taking pictures of the stars with the iPhone 16 Pro Max and the Samsung Galaxy S25 Ultra. At one point I realized I couldn't easily identify some of the star clusters and constellations. I captured a screenshot and asked Siri to identify the stars in the image I shared. There was a pause and then Siri told me, "To answer that, I'll need to send this photo to ChatGPT. Should I go ahead?"

While I guess I'm glad Apple has a way to answer these questions, I still can't get over the fact that Apple hasn't figured out how to natively support them. I get that Apple is not a generative, large language model leader like OpenAI or Google (Gemini support has been promised but has yet to arrive), but this feels like it's not even trying.

Okay, perhaps I can get over the fact that Apple Intelligence will never produce one-stop shopping for all my generative AI needs, but my disappointment goes further than that.

The long wait

Apple promised that by this year we'd have all of Apple Intelligence's features including the ones I've been waiting for: Siri's situational awareness (using what it knows about you from the phone) and its ability to see what's going on on your screen, understand it, and act on your behalf based on that knowledge.

Some believed we might see the full realization of this promise with iOS 18.4, but that update appears delayed. No one knows why, and Apple certainly isn't talking about it, but I'm concerned that this is another by-product of Apple's overly cautious approach.

Yes, I get that Apple is the most privacy-aware and secure consumer platform and ecosystem. A portion of its AI strategy revolves around Private Cloud Compute. But what is that locked-down vapor actually doing for us? I worry that Apple is so afraid of breaking this ironclad security promise that it's falling way behind the AI competition, which happily runs roughshod over most of these privacy and data concerns, and usually does mop-up after the fact when someone cries foul. It is the "move fast and break things" style of development that Facebook (now Meta) once subscribed to and eventually left behind when the company grew up. But that was then and this is now – and by now, I mean the AI revolution. The only way to stay ahead of it is to move as fast as it's developing around you.

AI Time

Apple appears locked in an old model of long-term software upgrade cycles. I won't claim that Apple is working on the old 18-month model, but the promises for Apple Intelligence stretched out over almost a year. It's as if Apple doesn't fully comprehend the AI development pace.

OpenAI and its competitors are not working on 12-, 9-, or 6-month cycles. We're getting significant model updates every three months, and sometimes in bunches. It feels like a free-for-all because it is. I think everyone in this space understands this as a race and it's only Apple that appears stuck near the starting line.

You might argue that Apple has delivered a lot of Apple Intelligence since June. There are features like Genmojis, Image Playground, and Writing Tools that vary on the scale of utility. Most are not very useful at all. Why do I need to spend time creating silly images of me or my friends, or whimsical Genmojis featuring animals that I can share in messages? I'd honestly prefer Apple get to work on generative image creation that produces more usable images. Even Writing Tools is not something I tap into very often (if at all). And don't get me started on summaries, most of which are word salads of important information, slamming together disparate ideas in a way that makes them more confusing than actionable.

Meanwhile, Siri remains the disappointment it's long been, trailing so far behind Gemini and ChatGPT that it's clear they're not in the same class.

It's time for Apple to stop being so cautious and officially join the fight. I think Siri is still a massive opportunity for on-device intelligence, instant information, and automation. Now it's time for Apple to let go, hurry up, and do its thing.


A 38-year industry veteran and award-winning journalist, Lance has covered technology since PCs were the size of suitcases and “on line” meant “waiting.” He’s a former Lifewire Editor-in-Chief, Mashable Editor-in-Chief, and, before that, Editor in Chief of PCMag.com and Senior Vice President of Content for Ziff Davis, Inc. He also wrote a popular, weekly tech column for Medium called The Upgrade.

Lance Ulanoff makes frequent appearances on national, international, and local news programs including Live with Kelly and Mark, the Today Show, Good Morning America, CNBC, CNN, and the BBC.
