Can Grammarly's AI bloodhound sniff out text written by ChatGPT?

Grammarly Authorship watches you write in real time

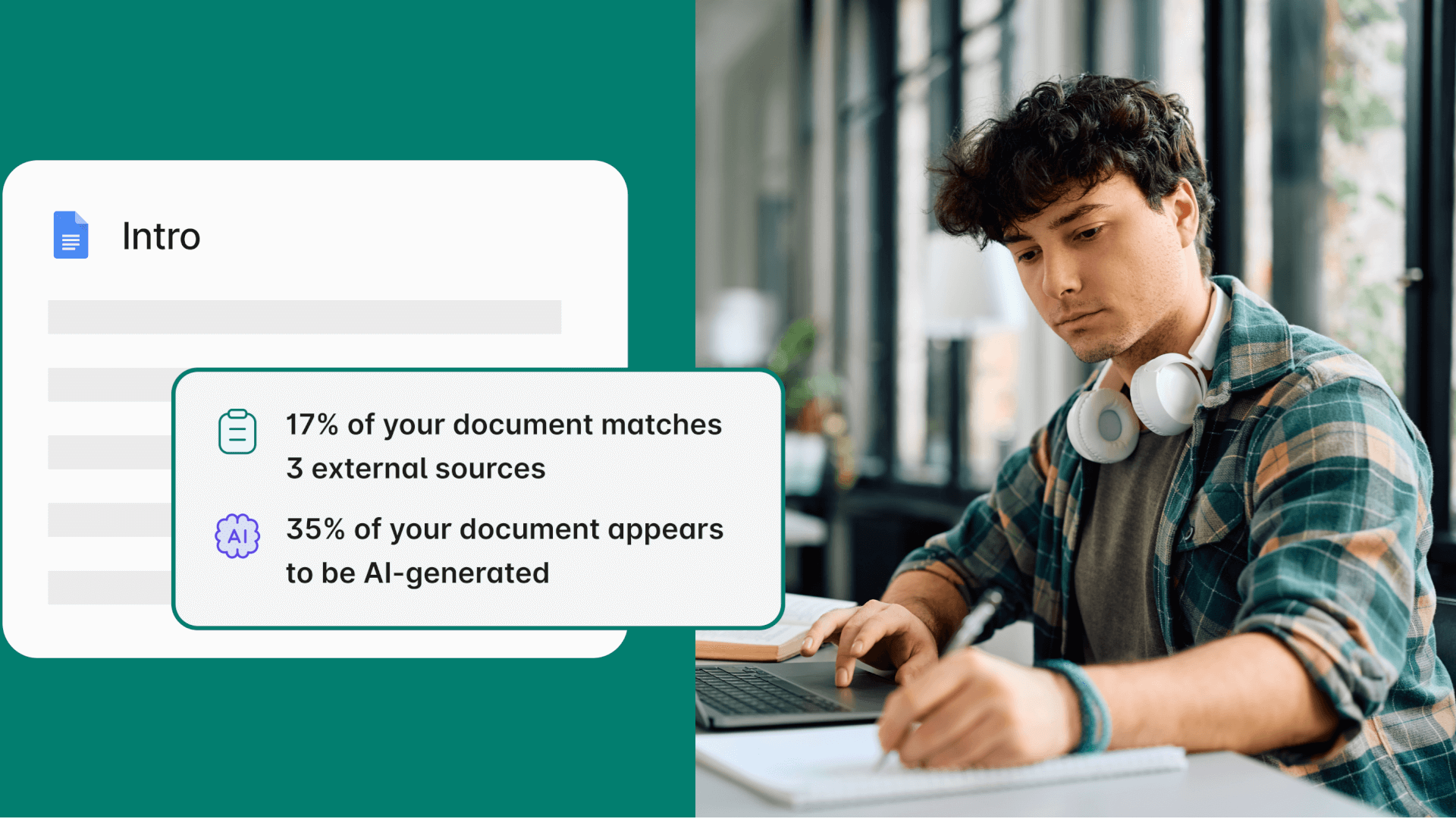

Grammarly's expansion from writing assistance to AI tools that can write for you continued this week with a new feature designed to spot AI-composed text. Grammarly Authorship is coming to Google Docs as a beta and aims to help educators in particular work out when students are using AI to write their assignments.

Teachers and education policymakers have long wrestled with how to tell when a piece of writing was produced with AI, but ChatGPT and its rivals have made the question far more urgent by rendering traditional methods unreliable. The problem is compounded by false positives and by the difficulty of distinguishing AI-generated text from the work of students who are still learning to write, especially those for whom English is a second language.

Grammarly Authorship attempts to address those problems by taking a different approach from many existing AI detection tools, which analyze a text only after it has been finished. Instead, Grammarly Authorship tracks the text as it is being written. According to Grammarly, it can tell whether a passage was typed, pasted from elsewhere, or generated on the page with AI. The tool will integrate with more than half a million applications and websites, with a focus on writing platforms like Google Docs, Microsoft Word, and Apple's Pages.

The feature tracks typing as it happens and notes when text is pasted in or when an AI tool, such as Google Gemini or Microsoft's Bing AI, generates or modifies text. Grammarly Authorship then produces a report that sorts the document into sections according to whether each part was typed, AI-generated, or pasted from elsewhere. It even offers a replay of the document being written to show exactly what happened.

Student AI Monitor

Individuals can purchase access to Grammarly Authorship, but educators are clearly the main market for the tool. AI-generated writing among students and in academic circles is a contentious issue and has led to teachers wrongly accusing students, even as other students have admitted to using AI to write papers that were never caught.

"As the school year begins, many institutions lack consistent and clear AI policies, even though half of people ages 14–22 say they have used generative AI at least once," Head of Grammarly for Education Jenny Maxwell explained. "This lack of clarity has contributed to an overreliance on imperfect AI detection tools, leading to an adversarial back-and-forth between professors and students when papers are flagged as AI-generated. What’s missing in the market is a tool that can facilitate a productive conversation about the role of AI in education. Authorship does just that by giving students an easy way to show how they wrote their paper, including if and how they interacted with AI tools."

Of course, that only matters if educators decide to trust Grammarly's new approach. The tool will have to meet its performance goals in real-world use, on top of competing with potential rivals. OpenAI has developed new tools that use a kind of watermark to detect content generated by ChatGPT and its other AI models, but the company has so far held off on releasing them, out of concern that they could cause problems even for people using AI for benign purposes. That caution follows the failure of its first AI text detector, which it shut down after just six months.

Though proof like the Authorship report would settle the question of whether a student really wrote their paper, there's an inherent privacy issue that may make some reluctant to use the tool. Students may not want to share all the false starts in their writing or every passage that didn't go well. Wanting to share only the final product doesn't mean there's something to hide. But they may not get a choice. Writing at home may soon resemble taking a test, with a proctor watching for cheating, where even innocent moves can arouse suspicion.

Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.