ChatGPT just got a massive upgrade that takes it to the next level – here are the 3 biggest changes

ChatGPT is now smarter than ever

- o3 and o4-mini are out now for Pro, Plus and Team users, and free users can try o4-mini, too

- They can combine and use every tool in ChatGPT's arsenal

- o3 and o4-mini give ChatGPT the ability to reason with images

OpenAI has just given ChatGPT a massive boost with the new o3 and o4-mini models, which are available to use right now for Pro, Plus, Team and even free tier users.

The new models significantly improve ChatGPT's performance and are much quicker at reasoning tasks than earlier OpenAI reasoning models such as o3-mini and o1.

Most importantly, they can intelligently decide which of OpenAI’s various tools to use to complete your query, including a new ability to reason with images.

OpenAI announced the release in a livestream.

Here are the three most important changes:

1. Combining tools

Both new reasoning models can agentically use and combine every tool within ChatGPT. That means they have access to ChatGPT's whole box of tricks, including web browsing, Python coding, image and file analysis, image generation, canvas, automations, file search and memory.

The important thing, though, is that ChatGPT now decides for itself whether it needs to use a tool, based on what you've asked.


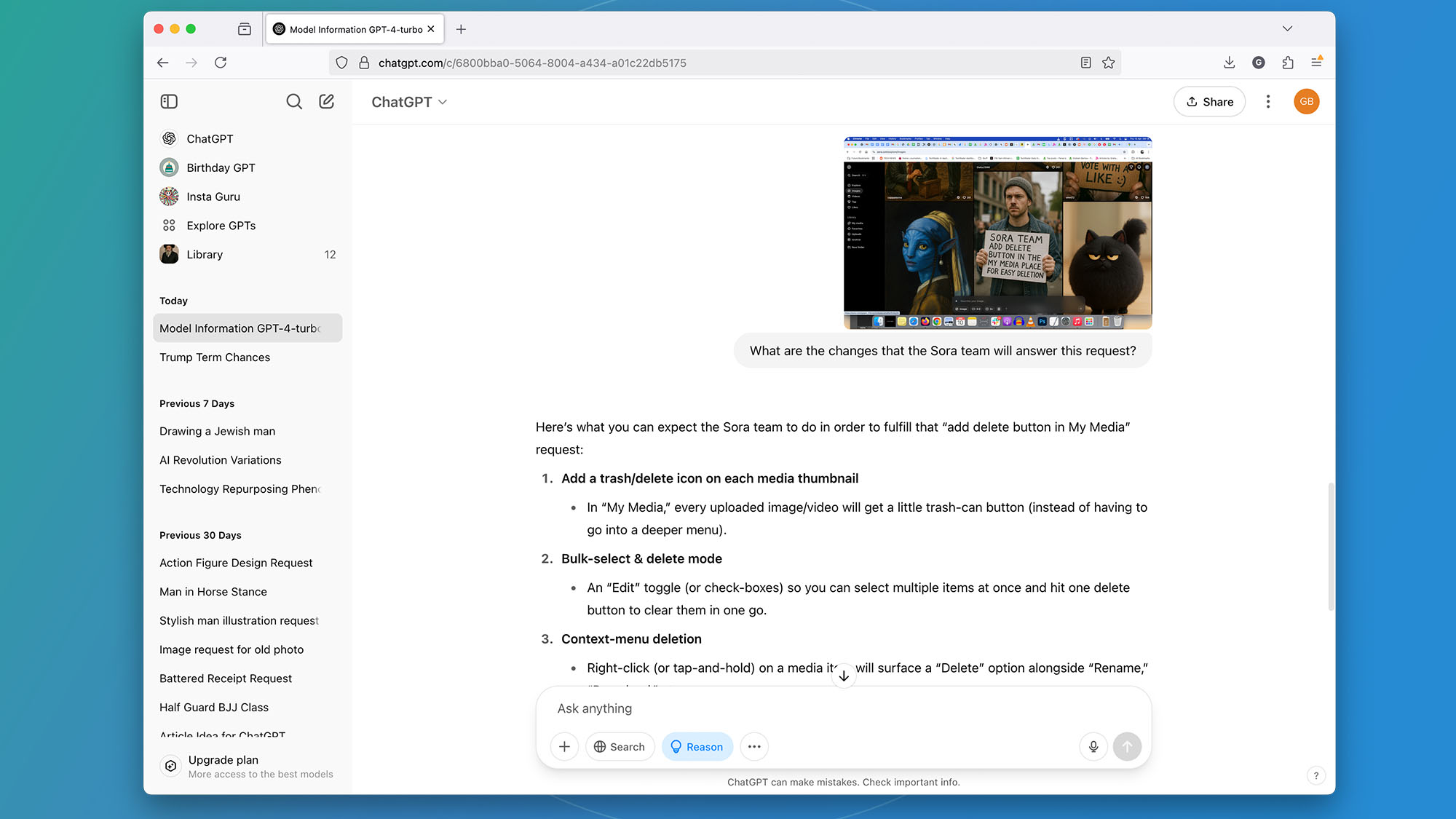

When you ask ChatGPT to do something complicated using the new models, it shows you each step it is taking, which tool it is using, and how it arrived at that decision.

Once it has finished its research, the notes on its working-out process disappear and you get a report on its conclusions.

2. Better performance

The way that o3 and o4-mini can intelligently decide which tools to use is a step towards the intelligent model switching we've been promised with GPT-5, whenever it finally arrives.

As you'd expect from advanced reasoning models, the report you get at the end is extremely detailed and contains links to all the sources used.

According to OpenAI, “The combined power of state-of-the-art reasoning with full tool access translates into significantly stronger performance across academic benchmarks and real-world tasks, setting a new standard in both intelligence and usefulness.”

The real-world result is that these models can tackle multi-faceted questions more effectively, so don't be afraid to ask them to perform several actions at once and produce an answer or report that combines several queries.

3. Reasoning with images

Both new models are the first released by OpenAI that integrate uploaded images into their chain of thought. They actually reason using the images, so, for example, you could upload a picture of some cars, ask for their make and model, and then ask how much of their retail value they will hold in five years' time.

This is the first time that ChatGPT has been able to integrate images into a reasoning chain, and it represents a real step forward for multimodal AI.

OpenAI announced the models on X on April 16, 2025: "Introducing OpenAI o3 and o4-mini, our smartest and most capable models to date. For the first time, our reasoning models can agentically use and combine every tool within ChatGPT, including web search, Python, image analysis, file interpretation, and image generation."

My verdict

I’ve tried the new models on the Plus tier and I’m impressed with both the speed and comprehensiveness of the answers to my queries. While I’ve always enjoyed the depth of reasoning that the o1 and o3-mini models have provided, it’s always meant waiting longer for a response.

o3 has now become my default model on Plus because it's fast enough that I don't feel like I'm waiting around too long for an answer, yet I still get a satisfying amount of detail.

In short, I'm impressed. The new models feel like a natural evolution of ChatGPT into something that's smarter and more capable. I also like the way they can decide which of ChatGPT's various tools they need to use to provide the best answer.

Trying it for yourself

Here’s how to try the new ChatGPT models for yourself:

Plus, Pro and Team users can select o3, o4-mini and o4-mini-high from the model drop-down menu inside ChatGPT. Free tier users can get access to o4-mini by selecting the Reason button in the composer before submitting their query. Edu users will gain access in one week.

There's no visual notification telling free tier users that they are now using the o4-mini reasoning model, but if you click the button and ask ChatGPT which LLM it is using, it now says o4-mini.

Free tier users will face a rate limit on how many times they can use the Reason feature; the limit for Plus users is much higher.

OpenAI say they expect to release o3-pro “in a few weeks”, with full tool support. Pro users can already access o1-pro.


Graham is the Senior Editor for AI at TechRadar. With over 25 years of experience in both online and print journalism, Graham has worked for various market-leading tech brands including Computeractive, PC Pro, iMore, MacFormat, Mac|Life, Maximum PC, and more. He specializes in reporting on everything to do with AI and has appeared on BBC TV shows like BBC One Breakfast and on Radio 4 commenting on the latest trends in tech. Graham has an honors degree in Computer Science and spends his spare time podcasting and blogging.
