Could you pass 'Humanity’s Last Exam'? Probably not, but neither can AI

Are you smarter than an AI model?

Did you know some of the smartest people on the planet create benchmarks to test AI’s capabilities at replicating human intelligence? Well, scarily enough, most AI benchmarks are easily completed by artificial intelligence models, showcasing just how smart the likes of OpenAI’s GPT-4o, Google’s Gemini 1.5, and even the new o3-mini really are.

In the quest to create the hardest benchmark possible, Scale AI and the Center for AI Safety (CAIS) have teamed up to create Humanity’s Last Exam, a test they're calling a “groundbreaking new AI benchmark that was designed to test the limits of AI knowledge at the frontiers of human expertise.”

I’m not a genius by any means, but I had a glance at some of these questions and let me tell you, they're ridiculously tough. So much so that probably only the brightest minds on the planet could answer them. This incredible degree of difficulty means that, in testing, current AI models were able to answer fewer than 10 percent of the questions correctly.

The original name for the test was 'Humanity’s Last Stand', but that was changed to Exam, just to take away the slightly terrifying nature of the concept. The questions were crowdsourced, with expert contributors from over 500 institutions across 50 countries coming up with the hardest reasoning questions possible.

The current Humanity’s Last Exam dataset consists of 3,000 questions, and we’ve selected a few samples below to show you just how tricky it is. Can you pass Humanity’s Last Exam? Good luck!

Are you smarter than an AI chatbot?

Question 1:

Hummingbirds within Apodiformes uniquely have a bilaterally paired oval bone, a sesamoid embedded in the caudolateral portion of the expanded, cruciate aponeurosis of insertion of m. depressor caudae. How many paired tendons are supported by this sesamoid bone? Answer with a number.

Question 2:

I am providing the standardized Biblical Hebrew source text from the Biblia Hebraica Stuttgartensia (Psalms 104:7). Your task is to distinguish between closed and open syllables. Please identify and list all closed syllables (ending in a consonant sound) based on the latest research on the Tiberian pronunciation tradition of Biblical Hebrew by scholars such as Geoffrey Khan, Aaron D. Hornkohl, Kim Phillips, and Benjamin Suchard. Medieval sources, such as the Karaite transcription manuscripts, have enabled modern researchers to better understand specific aspects of Biblical Hebrew pronunciation in the Tiberian tradition, including the qualities and functions of the shewa and which letters were pronounced as consonants at the ends of syllables.

מִן־גַּעֲרָ֣תְךָ֣ יְנוּס֑וּן מִן־ק֥וֹל רַֽ֝עַמְךָ֗ יֵחָפֵזֽוּן (Psalms 104:7) ?

Question 3:

In Greek mythology, who was Jason's maternal great-grandfather?

How did you do? There’s no shame in saying "not very well". I won’t lie – I don’t think I even understood what I was being asked in that second one.

When should we panic?

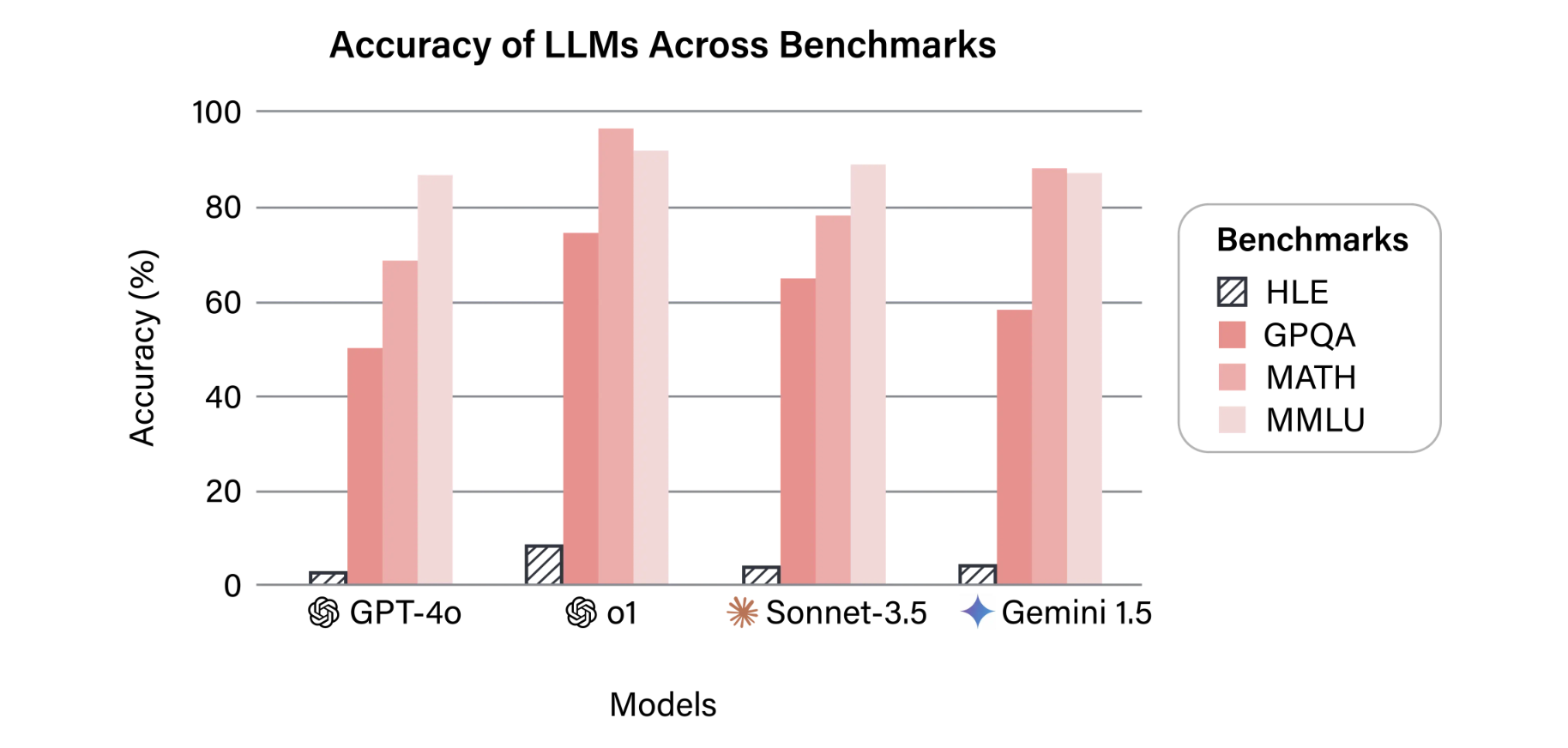

According to the initial results reported by CAIS and Scale AI, OpenAI’s GPT-4o achieved 3.3% accuracy on Humanity’s Last Exam, while Grok-2 achieved 3.8%, Claude 3.5 Sonnet 4.3%, Gemini 6.2%, o1 9.1%, and DeepSeek-R1 (tested on text only, as it’s not multimodal) 9.4%.

Interestingly, Humanity’s Last Exam is substantially harder for AI than any other benchmark out there, including the most popular options, GPQA, MATH, and MMLU.

So what does this all mean? Well, we’re still in the infancy of AI models with reasoning functionality, and while OpenAI’s brand-new o3 and o3-mini have yet to take on this incredibly difficult benchmark, it’s going to take a very long time for any LLM to come close to completing Humanity’s Last Exam.

It’s worth bearing in mind, however, that AI is evolving at a rapid rate, with new functionality being made available to users almost daily. Just this week OpenAI unveiled Operator, its first AI agent, which shows huge promise for a future where AI can automate tasks that would otherwise require human input. For now, no AI can come close to completing Humanity’s Last Exam, but when one does… well, we could be in trouble.

John-Anthony Disotto is TechRadar's Senior Writer, AI, bringing you the latest news on, and comprehensive coverage of, tech's biggest buzzword. An expert on all things Apple, he was previously iMore's How To Editor, and has a monthly column in MacFormat. John-Anthony has used the Apple ecosystem for over a decade, and is an award-winning journalist with years of experience in editorial.
