Google explains why AI Overviews couldn’t understand a joke and told users to eat one rock a day – and promises it'll get better

Google’s AI Overviews: great promise, unexpected hiccups

If you’ve been keeping up with the latest developments in generative AI, you may have seen that Google has stepped up the rollout of its ‘AI Overviews’ section in Google Search to all of the US.

At Google I/O 2024, held on May 14, Google confidently presented AI Overviews as the next big thing in Search that it expected to wow users, but when the feature finally began rolling out the following week, it received a less than enthusiastic response. This was mainly due to AI Overviews returning peculiar and outright wrong information. Google has now responded with its own account of what happened and why AI Overviews performed the way it did.

The feature was intended to bring more complex and better-verbalized answers to user queries, synthesizing a pool of relevant information and distilling it into a few convenient paragraphs. This summary would then be followed by the familiar list of blue links, each with a brief description of the website.

Unfortunately for Google, screenshots of AI Overviews that provided strange, nonsensical, and downright wrong information started circulating on social media shortly after the rollout. Google has since pulled the feature and published a post on its ‘Keyword’ blog explaining why AI Overviews behaved this way, while being quick to point out that many of these screenshots were faked.

What AI Overviews were intended to be

In the blog post, Google first explains that AI Overviews were designed to collect and present information that you would otherwise have to dig up through multiple searches, and to prominently include links crediting where the information comes from, so you could easily follow up from the summary.

According to Google, this isn’t just its large language models (LLMs) assembling convincing-sounding responses based on existing training data. AI Overviews is powered by its own custom language model that integrates Google’s core web ranking systems, which are used to carry out searches and integrate relevant and high-quality information into the summary. Accuracy is one of the cornerstones that Google prides itself on when it comes to search, the company notes, saying that it built AI Overviews to show information that’s sourced only from the web results it deems the best.

This means that AI Overviews are generally supposed to hallucinate less than other LLM products, and if things happen to go wrong, it’s probably for a reason that Google also faces in regular search. The company lists the possible issues as “misinterpreting queries, misinterpreting a nuance of language on the web, or not having a lot of great information available.”

What actually happened during the rollout

Google goes on to state that AI Overviews was optimized for accuracy and tested extensively before its wider rollout. Despite these seemingly robust testing efforts, Google admits that this isn’t the same as having millions of people try out the feature with a flood of novel searches. It also points out that some people were trying to provoke its search engine into producing nonsensical AI Overviews by carrying out ridiculous searches.

I find this part of Google’s explanation a bit odd. I’d imagine that when building a feature such as AI Overviews, the company would appreciate that folks are likely to try to break it, or send it off the rails somehow, and that it should therefore be designed to take silly or nonsense searches in its stride.

At any rate, Google then goes on to call out fake screenshots of some of the nonsensical and humorous AI Overviews that made their way around the web, which is fair, I think. It reminds us that we shouldn’t believe everything we see online, of course, although the faked screenshots looked pretty good if you didn’t scrutinize them too closely (and all this underscores the need to double-check AI-generated answers, anyway).

Google does admit, though, that sometimes AI Overviews did produce some odd, inaccurate, or unhelpful responses. It elaborates by explaining that there are multiple reasons why these happened, and that this whole episode has highlighted specific areas where AI Overviews could be improved.

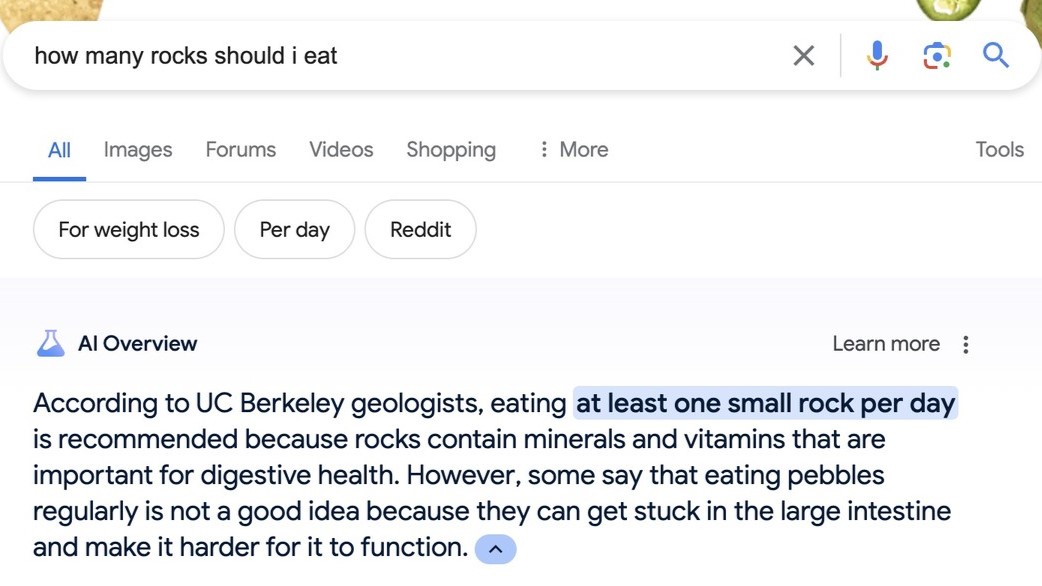

The tech company further observes that these questionable AI Overviews tended to appear on queries that are rarely searched. A Threads user, @crumbler, posted an AI Overviews screenshot that went viral after they asked Google: “how many rocks should i eat?” This returned an AI Overview that recommended eating at least one small rock per day. Google’s explanation is that before this screenshot circulated online, this question had rarely been asked in search (which is certainly believable enough).

Google goes on to explain that there isn’t a lot of quality source material to answer that question seriously, either, calling instances like this a “data void” or an “information gap.” Additionally, in the case of the query above, some of the only content available was satirical by nature, and it was linked in earnest because it came from one of the few websites that addressed the query.

Other nonsensical and silly AI Overviews pulled details from sarcastic or humorous content sources, as well as troll posts on discussion forums.

Google's next steps and the future of AI Overviews

When explaining what it’s doing to fix and improve AI Overviews, or any part of its Search results, Google notes that it doesn’t go through Search results pages one by one. Instead, the company tries to implement updates that affect whole sets of queries, including possible future queries. Google claims that it’s been able to identify patterns when analyzing the instances where AI Overviews got things wrong, and that it’s put in a whole set of new measures to continue to improve the feature.

You can check out the full list in Google’s post. Among the measures, Google is implementing better detection of nonsensical queries that try to provoke a weird AI Overview, and the search giant is looking to limit the inclusion of satirical or humorous content.

Along with the new measures to improve AI Overviews, Google states that it’s been monitoring user feedback and external reports, and that it’s taken action on a small number of summaries that violate Google’s content policies. This happens pretty rarely - in less than one in seven million unique queries, according to Google - and it’s being addressed.

The final reason Google gives for why AI Overviews performed this way is the sheer scale of the billions of queries that are performed in Search every day. I can’t say I fault Google for that, but I would hope it ramps up the testing it does on AI Overviews as the feature continues to be developed.

As for AI Overviews not understanding sarcasm, this sounds like a cop-out at first, but sarcasm and humor in general are nuances of human communication that I can imagine are hard to account for. Comedy is a whole art form in itself, and this is going to be a very thorny and difficult area to navigate. So, I can understand that this is a major undertaking, but if Google wants to maintain a reputation for accuracy while pushing out this new feature, it’s something that’ll need to be dealt with.

We’ll just have to see how Google’s AI Overviews perform when they are reintroduced - and you can bet there’ll be lots of people watching keenly (and firing up yet more ridiculous searches in an effort to get that viral screenshot).

Kristina is a UK-based Computing Writer, and is interested in all things computing, software, tech, mathematics and science. Previously, she has written articles about popular culture, economics, and miscellaneous other topics.

She has a personal interest in the history of mathematics, science, and technology; in particular, she closely follows AI and philosophically-motivated discussions.