I pitted Gemini 2.0 Flash against DeepSeek R1, and you might be surprised by the winner

AI concierge matchup

I've enjoyed pitting various AI chatbots against each other. After comparing DeepSeek to ChatGPT, ChatGPT to Mistral's Le Chat, ChatGPT to Gemini 2.0 Flash, and Gemini 2.0 Flash to its own earlier iteration, I've come back around to pitting DeepSeek R1 against Gemini 2.0 Flash.

DeepSeek R1 sparked a furor of interest and suspicion when it debuted in the U.S. earlier this year. Meanwhile, Gemini 2.0 Flash is a solid new layer of ability atop the widely deployed Google ecosystem. It is built for speed and efficiency and promises quick, practical answers without sacrificing accuracy.

Both claim to be cutting-edge AI assistants, so I decided to test them from the perspective of someone with a casual interest in using AI chatbots in everyday life. Both have shown themselves to be effective at a basic level, but I wanted to see which one felt more practical, insightful, and genuinely helpful day to day. Each test has a screenshot with DeepSeek on the left and Gemini 2.0 Flash on the right. Here’s how they did.

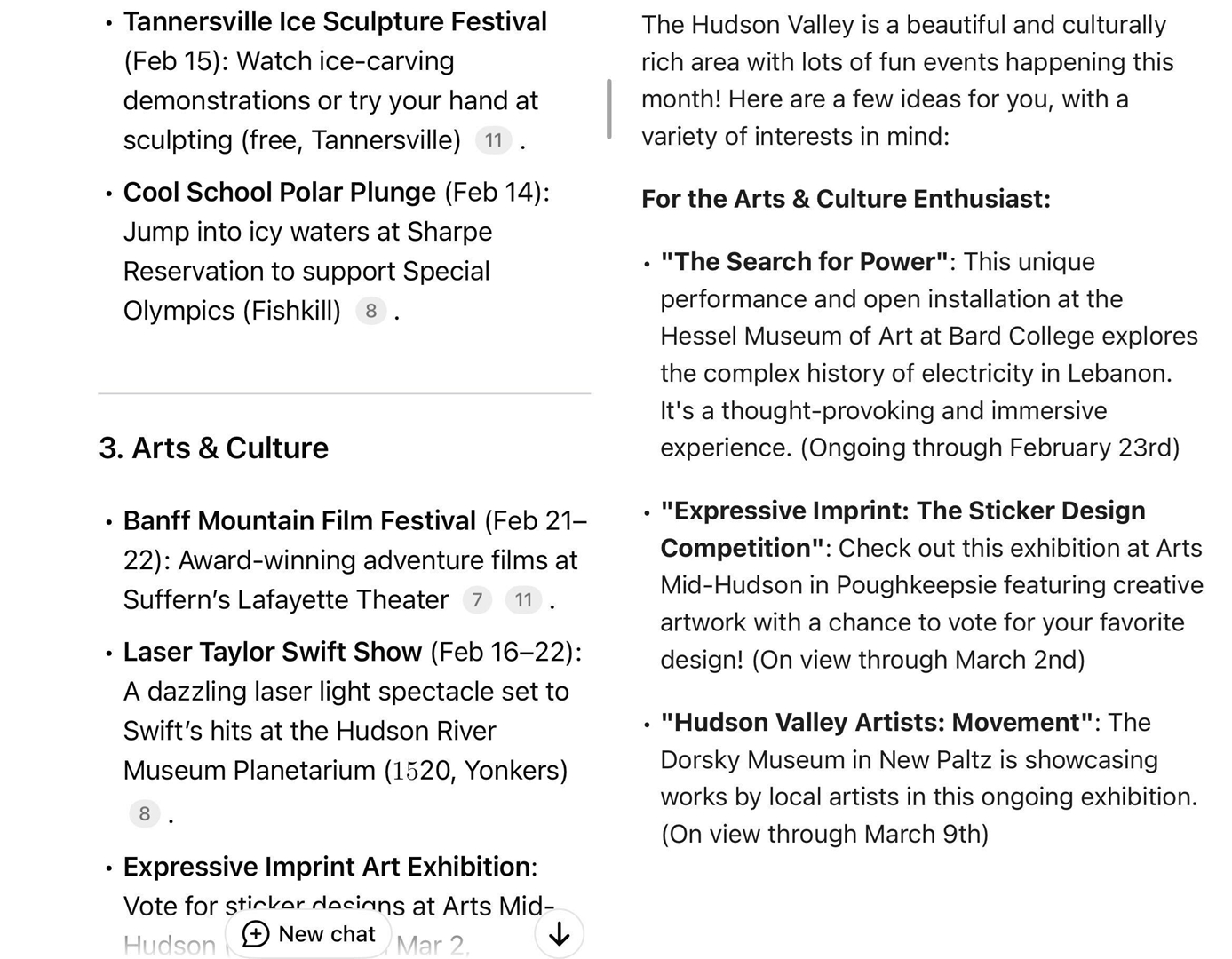

Local guide

I was keen to test the two AI models' search abilities alongside their judgment about which activities are actually worthwhile. I asked both AI apps to "Find some fun events for me to attend in the Hudson Valley this month."

I live in the Hudson Valley and already knew about some things on the calendar, so this was a good measure of accuracy and usefulness. Amazingly, both did quite well, producing a long list of ideas and organizing them by theme for the month. Many of the events appeared on both lists.

DeepSeek included links throughout its list, which I found helpful, but its descriptions were just quotes from those sources. Gemini 2.0 Flash's descriptions were almost all original and frankly more vivid and interesting, which I preferred. While Gemini didn't have its sources immediately available, I could get them by asking it to double-check its answers.

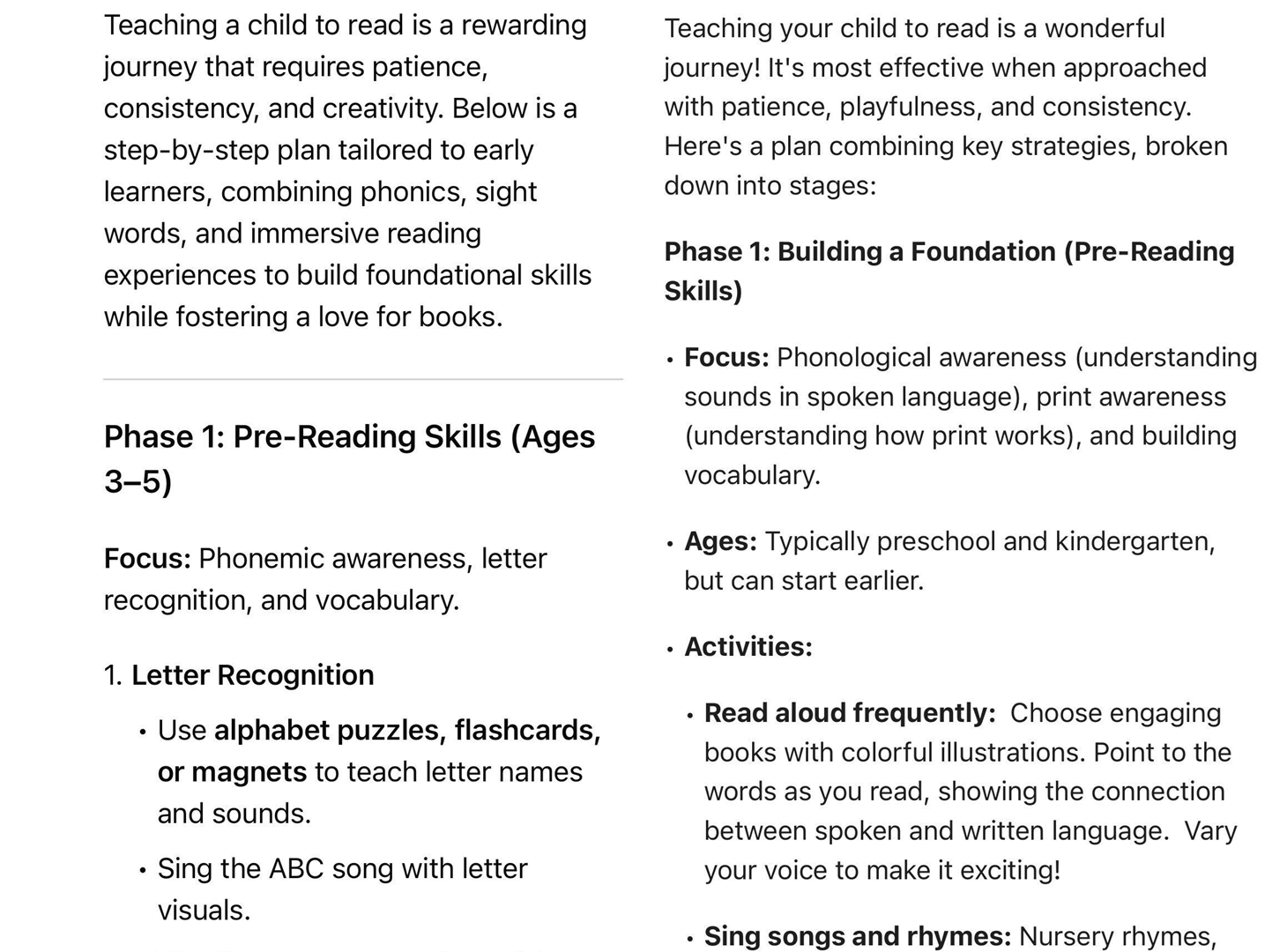

Reading tutor

I decided to expand on my usual test of an AI's ability to offer life advice with something more complex and reliant on actual research. I asked Gemini and DeepSeek to "Help me devise a plan for teaching my child how to read."


My child isn't even a year old yet, so I know I have time before he's paging through Chaucer, but it's an aspect of parenthood I think about a lot. Based on their responses, the two AI models might as well have been identical advice columns. Both came up with detailed guides for different stages of teaching a child to read, including specific ideas for games, apps, and books to use.

While not identical, they were so close that I would have had trouble telling them apart without formatting differences such as DeepSeek's recommended ages for each phase. If asked which AI to pick based purely on this test, I'd call it a tie.

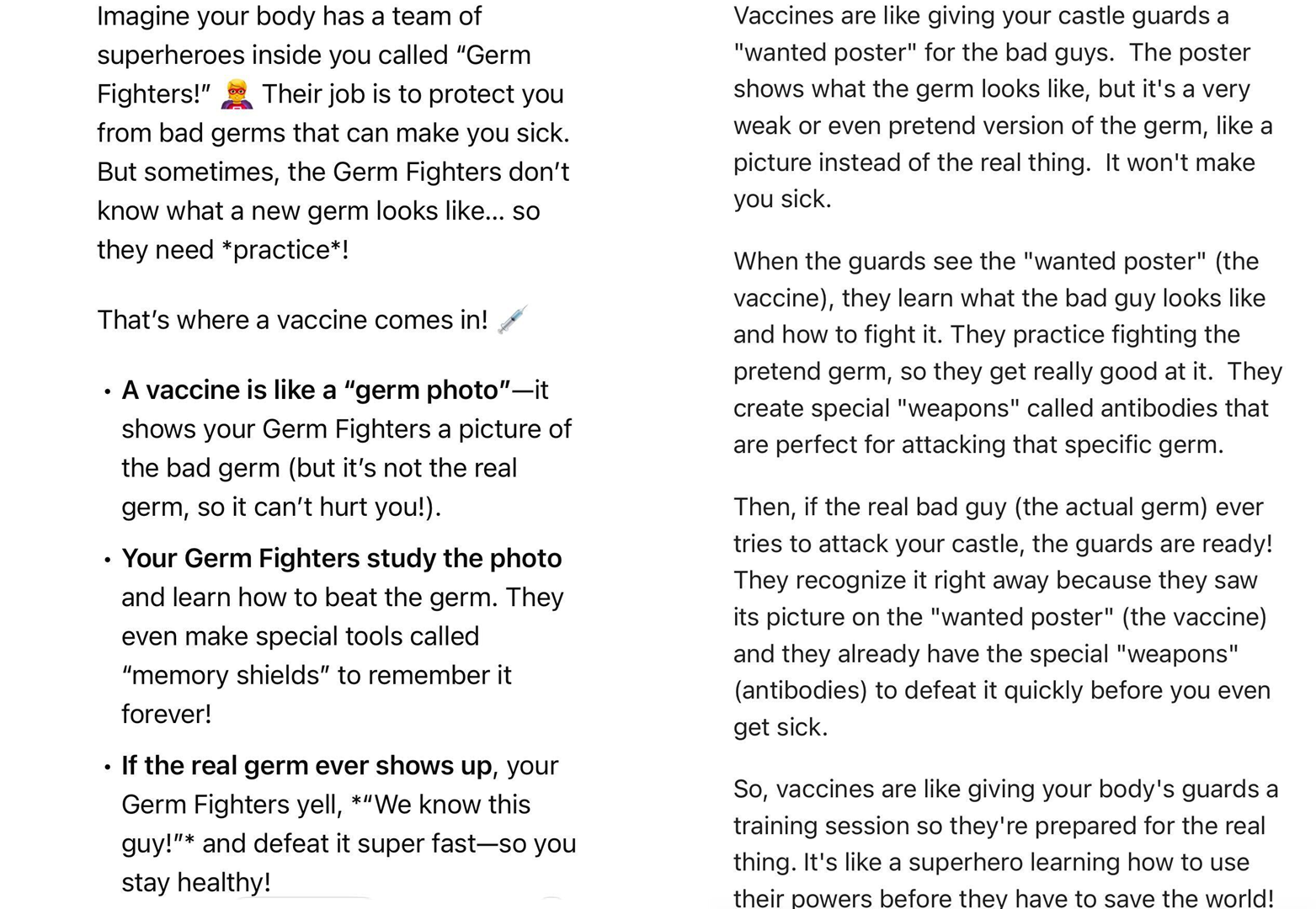

Vaccine superteam

Something similar happened with a question about simplifying a complex subject. With kids on my mind, I explicitly asked for a child-friendly answer, prompting Gemini and DeepSeek to "Explain how vaccines train the immune system to fight diseases in a way a six-year-old could understand."

Gemini started with an analogy about a castle and its guards that made a lot of sense, though it oddly tacked a superhero training analogy onto the final line. Similarities in how the two models were trained might explain that, because DeepSeek went all in on the superhero analogy. Its explanation fit the metaphor, which is what matters.

Notably, DeepSeek's answer included emojis, which, while fitting where they appeared, implied the AI expected the answer to be read on screen by an actual six-year-old. I sincerely hope that young kids aren't getting unrestricted access to AI chatbots, no matter how precocious and responsible their questions about medical care might be.

Riddle key

Asking AI chatbots to solve classic riddles is always an interesting experience since their reasoning can be off the wall even when their answer is correct. I ran an old standard by Gemini and DeepSeek, "I have keys, but open no locks. I have space but no room. You can enter, but you can’t go outside. What am I?"

As expected, both had no trouble answering the question. Gemini simply stated the answer, while DeepSeek broke down the riddle and the reasoning behind the answer, along with more emojis. It even threw in an odd "bonus" about keyboards unlocking ideas, which falls flat both as a joke and as insight into keyboards' value. That DeepSeek tried to be cute at all is impressive, but the attempt itself felt a little alienating.

DeepSeek outshines Gemini

Gemini 2.0 Flash is an impressive and useful AI model. I started this fully expecting it to outperform DeepSeek in every way. But, while Gemini did great in an absolute sense, DeepSeek either matched or beat it in most ways. Gemini seemed to veer between human-like language and more robotic syntax, while DeepSeek either had a warmer vibe or just quoted other sources.

This informal quiz is hardly a definitive study, and there is a lot to make me wary of DeepSeek. That includes, but is not limited to, DeepSeek's policy of collecting basically everything it can about you and storing it in China for unknown uses. Still, I can't deny that it goes toe-to-toe with Gemini without any problems. And while, as the name implies, Gemini 2.0 Flash was usually faster, DeepSeek didn't take so much longer that I lost patience. That would change if I were in a hurry; I'd pick Gemini if I only had a few seconds to get an answer. Otherwise, in spite of my skepticism, DeepSeek R1 is as good as or better than Google Gemini 2.0 Flash.


Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.
