I tried Perplexity’s Deep Research and it doesn't quite live up to ChatGPT's research potential

It's one Deep Research vs. another

AI chatbots are often accused of a somewhat shallow approach to gathering and explaining information. That may soon change as developers race to put out AI models that can really get to the bottom of a subject. That's certainly what Perplexity is aiming for with its new Deep Research feature, which is available on all its tiers, both free and paid-for.

Coincidentally, Deep Research is also the name OpenAI uses for a very similar ChatGPT Pro feature. Both tools promise to do the heavy research work for you. Supposedly, they can comb through the vast sprawl of the internet to deliver curated, well-reasoned answers.

Despite the shared name, the pricing is very different. OpenAI’s Deep Research requires a $200-per-month subscription to ChatGPT Pro, and even then it's capped at 100 queries per month. It can also take as much as 20 minutes to get a full report. OpenAI says the feature will filter down to ChatGPT Plus and then to free-tier users at some point in the future.

Perplexity’s Deep Research is much nimbler, coming back with answers in a few minutes while still drawing on a broad range of sources. You get up to five queries a day for free and up to 500 a month with a Perplexity Pro plan, which also costs $200, but for an entire year instead of a month.
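For a rough sense of how far apart those prices are, here's a back-of-the-envelope sketch in Python. It uses only the plan prices and query caps quoted above and assumes you use every query in each cap, which few people will. The function name is just for illustration.

```python
def cost_per_query(monthly_price_usd: float, queries_per_month: int) -> float:
    """Price of one query if the whole monthly cap is used (an assumption)."""
    return monthly_price_usd / queries_per_month


# ChatGPT Pro: $200 per month, capped at 100 Deep Research queries per month.
chatgpt_pro = cost_per_query(200.0, 100)

# Perplexity Pro: $200 per year, so spread the price over 12 months,
# with up to 500 Deep Research queries per month.
perplexity_pro = cost_per_query(200.0 / 12, 500)

ratio = chatgpt_pro / perplexity_pro

print(f"ChatGPT Pro: ${chatgpt_pro:.2f} per query")
print(f"Perplexity Pro: ${perplexity_pro:.3f} per query")
print(f"ChatGPT Pro costs about {ratio:.0f} times more per query")
```

At full usage, that works out to about $2 per query on ChatGPT Pro against a few cents on Perplexity Pro. Real-world numbers will depend on how many queries you actually run.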

I wanted to test the two Deep Research tools against each other, but I didn't want to pay for a ChatGPT Pro plan for the privilege. Helpfully, OpenAI had already published several Deep Research answers as demonstrations. I chose three of those prompts and ran them through Perplexity's Deep Research to see how it compared.

TV mysteries

I started with the 'needle in a haystack' query from ChatGPT's Deep Research. It was a long, rambling question about a specific TV show episode. The prompt asked:

"There is a TV show that I watched a while ago. I forgot the name but I do remember what happened in one of the episodes. Can you help me find the name? Here is what I remember in one of the episodes:

Two men play poker. One folds after another tells him to bet. The one who folded actually had a good hand and fell for the bluff. On the second hand, the same man folds again, but this time with a bad hand.

A man gets locked in the room, and then his daughter knocks on the door.

Two men go to a butcher shop, and one man brings a gift of vodka.

Please browse the web deeply to find the TV show episode where this happened exactly."

ChatGPT's Deep Research had a solid, seemingly accurate answer, saying the episode came from the Starz series Counterpart, specifically “Both Sides Now,” which was the fourth episode of the first season. It cited the show's own episode summary and wiki.

Perplexity Deep Research, meanwhile, seemed to be trying to gaslight me with its answer. The AI asserted that I was thinking of season 1, episode 5 of Poker Face, titled "The Stage Play Murder," but that I was apparently conflating other shows in my description. According to Perplexity, my description meant I was mixing Poker Face in my brain with the Lifetime movie Girl in the Closet and scenes from the reality series Beekman Boys. The description may have been from the ChatGPT test, but Perplexity seemed confident that I was simply merging details from three wildly different sources, none of which were the correct answer. Score one for ChatGPT's slow and expensive option.

Snow shop

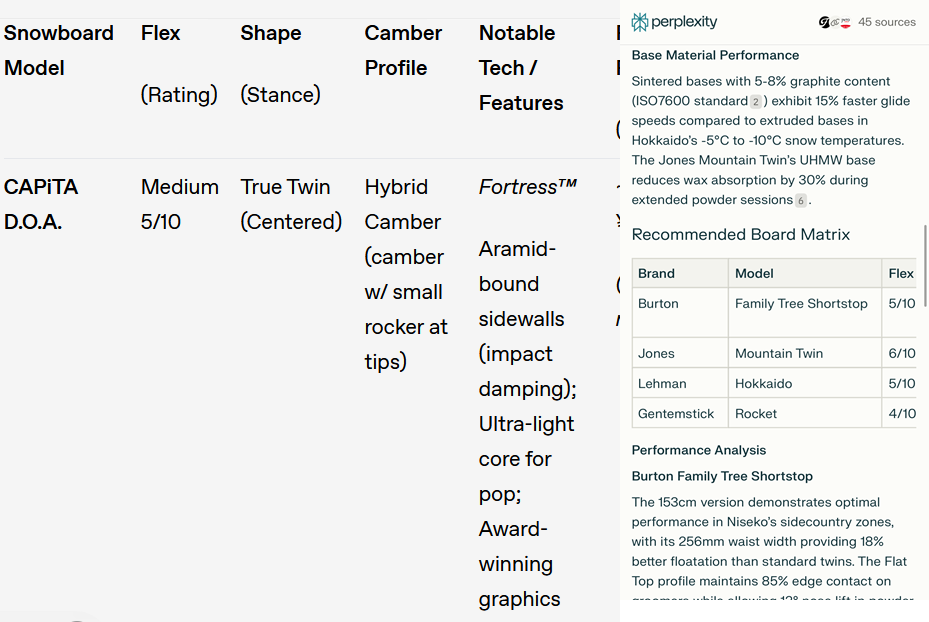

For the shopping genre, OpenAI put together a doozy of a complex request for winter sports equipment with this prompt:

“I’m looking for the perfect snowboard. I ride mainly in Hokkaido twice a month in the winter. I like groomed runs but also want something that can handle fresh powder. I prefer all-mountain or freestyle boards with medium flex, stable for carving yet maneuverable. Also, I want it in a citrus color palette. Mid-to-premium price range. Oh, and I want availability in Japan. Format the response in an easy-to-read table.”

ChatGPT’s Deep Research had a highly structured response that laid out two tables. The first went through the pros and cons of five recommended snowboards, followed by the specs for each board. It even included detailed recommendations on how best to snowboard in Hokkaido’s unique powder conditions.

Perplexity’s Deep Research came back in a couple of minutes with several tables, including one for the recommended boards and their specs, along with separate tables for color, price, maintenance, and accessibility. It all made sense as far as I could tell, but there was zero overlap in the recommendations. After being told I was incapable of telling a Lifetime movie from a gritty time-loop drama, neither of which matched my request, I admit to being a bit leery of what Perplexity pushed on me here.

The kicker

The final prompt test fell under the 'general knowledge' section and is far shorter than the other prompts. The query is simply: “What’s the average retirement age for NFL kickers?”

ChatGPT wrote a mini-dissertation about how kickers last longer in their careers, the range of ages where they retire, and a lot of insight into the reasons why they keep playing longer than those in other positions. That said, "mid-to-late 30s" was as precise as the AI was willing to go in picking an average age of retirement for kickers. It did stake a claim that 4.8 seasons is the average length of their careers.

Perplexity, too, wouldn't commit to a specific retirement age, but it leaned younger, saying early-to-mid 30s. The AI also cited statistics showing kickers with an average career of 4.4 seasons. Those kinds of statistics likely vary based on the age of the sources. But, while neither was egregiously wrong, Perplexity's answer was somewhat messier and wandered a bit in focus compared to the neatly organized ChatGPT essay.

Pay for depth

Judging from this brief test, ChatGPT’s Deep Research undeniably produced the better final product. The exhaustive, well-structured reports were quite well-written. That being said, they were also very dry and dull. And while they included sources, I wouldn't want to stake too much on how ChatGPT interpreted the information without some human double-checking. It would make sense as a resource for academics or other professionals working on weighty research projects, but not without aid.

Perplexity’s Deep Research, on the other hand, is great for those who want a lot of information collated quickly and relatively cheaply. It's a bit like a good abstract for a scholarly dissertation. You get the key bits and maybe even some numbers, but you're not going to be able to judge the whole book from that. Still, if you want to get into more complex and far-ranging topics and put together a starting point for your own research, Perplexity's Deep Research is a solid solution.

If you have $200 a month to burn and a huge stack of complicated projects you want to get through, ChatGPT Pro might be worth it just for Deep Research. On the other hand, if something similar appeals to you on a smaller, more personal scale, a Perplexity Pro subscription is a much better bargain. And you don't even have to pay for that if you rarely need any Deep Research done. Either way, human oversight is mandatory if you want to catch errors, verify sources, and ensure that conclusions make sense, or even just to check that you haven't hallucinated an unholy chimera of a TV show like Perplexity claimed I had.


Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.
