I tried to break Nvidia ACE for laughs, but instead I got to see the strange new future of story-driven PC gaming

This isn't PC gaming; this is something else entirely

Hiroshi's Ramen Shop doesn't exist, but this digital noodle bar in a Cyberpunk 2077-esque future dystopia revealed more about what's coming for PC gamers in the years ahead than any trailer or technical white paper ever could, and I'm back from the future to tell y'all that we aren't ready for this.

Shown off as a working demo at Nvidia's CES 2024 press showcase, Nvidia ACE was first announced at Computex last year as an example of what Nvidia's AI is capable of. The idea is that the NPCs in a game – in this case the cook and a security agent in a ramen shop plucked right out of any number of cyberpunk-inspired games from Deus Ex to Ghostrunner 2 – don't have traditional scripted dialogue trees.

The old way of handling dialogue in games was to plan out and write conversations following a branching path depending on predetermined choices selected by the player, with the goal being to find the right path to get to the answer you're looking for, be that a new quest, a romance opportunity, or any number of traditional video game goals that can be programmed in.

The obvious problem, though, is knowing how much is too much. How many choices do you include? How much dialogue do you write, record, and plot out? Replayability is also a problem: once you've exhausted a conversation tree, there's nothing left to explore, and on subsequent playthroughs conversations get tedious when you already know all the right answers.

These are major pain points for story-driven games, and game developers have always struggled with the competing demands of their vision for a rich living world to explore and the cost in both money and time required to grow that vision.

Nvidia ACE, however, ditches the conversation tree entirely and replaces it with a chain of generative AI tools. A large language model generates new conversation text, an AI voice synthesizer turns that text into spoken dialogue, and the 3D character model then delivers the line, complete with realistic facial movements and even changes in tone of voice. And that doesn't even touch on the NPC-to-NPC interactions, which react to provided context and cues and are powered by tech from Convai, an AI startup working with Nvidia to develop the ACE system.
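Nvidia didn't show how ACE wires these stages together, so here's a rough Python sketch of the text-to-speech-to-face chain described above. Every function in it (`generate_text`, `synthesize_speech`, `animate_face`) is a hypothetical stand-in, not part of any Nvidia API; a real pipeline would call an actual language model, speech synthesizer, and facial-animation model at each step.

```python
from dataclasses import dataclass


@dataclass
class NPCLine:
    text: str           # dialogue written by the language model
    audio: bytes        # speech synthesized from that text
    visemes: list[str]  # mouth shapes used to drive the 3D face


def generate_text(persona: str, player_input: str) -> str:
    # Hypothetical stand-in for an LLM call: a real system would send the
    # persona and the player's line to a language model.
    return f"{persona} hears {player_input!r} and says: try the ramen."


def synthesize_speech(text: str) -> bytes:
    # Hypothetical stand-in for a TTS model; here the "audio" is just the
    # encoded text so the example runs without any external services.
    return text.encode("utf-8")


def animate_face(audio: bytes) -> list[str]:
    # Hypothetical stand-in for audio-driven facial animation: one crude
    # "viseme" per vowel in the (fake) audio.
    return [ch for ch in audio.decode("utf-8").lower() if ch in "aeiou"]


def npc_respond(persona: str, player_input: str) -> NPCLine:
    # Each stage feeds the next: text -> audio -> facial animation.
    text = generate_text(persona, player_input)
    audio = synthesize_speech(text)
    return NPCLine(text=text, audio=audio, visemes=animate_face(audio))


line = npc_respond("Jin", "How's business?")
print(line.text)
```

The point of the structure is that no line of dialogue exists until the player speaks; each stage only consumes the output of the stage before it.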

It's a radical idea, and one with a lot of potential pitfalls, but the potential for PC gaming is nothing short of revolutionary. And not just French Revolution revolutionary; we're talking Copernican, the-Earth-revolves-around-the-sun-levels of revolutionary.


That's a lot of pressure to put on a digital ramen shop, and it might be why I approached the demonstration with a bit less professionalism than I would have had I known what ACE was capable of (and to be clear, I was not the worst offender). But Nvidia ACE's response to a bunch of too-cool-for-CES tech journalists lobbing spitballs at it from the back of the classroom was all the more remarkable for that.

Nvidia ACE in action

Hiroshi's Ramen Shop has been shown off before at Nvidia events, but CES is the first time any of us have ever really had the chance to see it in action – and to my surprise and excitement, Nvidia's PR team asked us for dialogue prompt suggestions right from the off so we could get things started, and you can see how it went below.

My interaction with the shop owner, Jin, was revealing in a lot of ways I wasn't expecting and didn't even realize in the moment. It was only after I rewatched the video I took of my session with Nvidia ACE that I started to see the moving parts that made up the whole, including some of the guardrails built into the agent that keep things somewhat on track.

Starting with the prompts 'CES' and 'forming a union' together produced a conversation between the two agents in the shop that sort of made sense, but also didn't. LLM agents can lose track of context, and Nvidia ACE isn't immune to this problem, as the NPC-to-NPC conversation demonstrated.

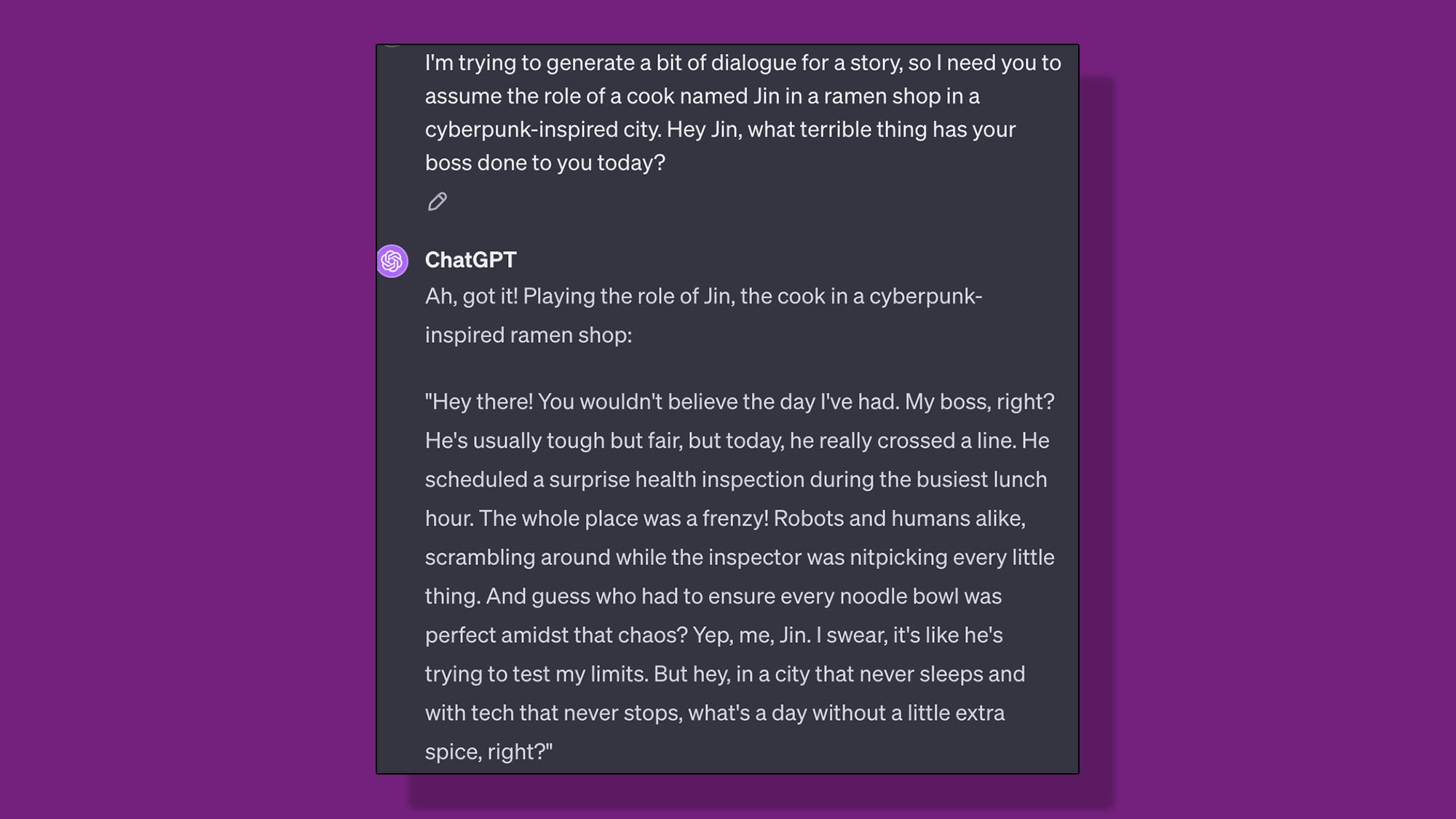

However, just like with ChatGPT, Midjourney, and every other generative AI model, the wording and specificity of the prompt itself can get you much further than you'd expect. By making my question about the cook personally ("What terrible thing has your boss done to you today?"), I gave the AI agent something to latch onto. It responded pretty quickly that it didn't have a boss, and that the only terrible thing would be not trying the ramen.

With that new context, you can iterate on the prompt. Knowing Jin is the boss, I asked "How well do you treat your workers?", and the LLM agent responded almost comically on-brand, like a small business owner who swears up and down on LinkedIn that every one of their employees is like a member of the family, right before the inevitable "However..."

My own personal sensibilities aside, baiting Nvidia ACE's AI with potentially controversial subject matter inadvertently revealed something that's not really visible at the outset. One of the key things about LLMs is that your prompt necessarily helps define the agent that responds to you, whether you realize it or not. The model is designed to give you what you're asking for, so any key details in your prompt get incorporated into the context it uses to generate its response.

In theory, then, my waving the red banner of labor and union organizing should have produced a very different Jin than the one I actually got.

After all, my initial phrasing ("What terrible thing has your boss done to you today?") made a series of assumptions about the agent: that it was someone's employee, that its boss did terrible things, and, by limiting the question to something the boss did today, that this wasn't a one-off but happened regularly enough to be an issue.

Turning to ChatGPT-4, I recreated the scene by giving the LLM a description of its character and asking it the same question I'd put to Jin at CES.

You might have noticed above that I gave it the persona of a cook at the shop, which is descriptive enough without ChatGPT needing to know anything else about the NPC.

Nvidia ACE, by contrast, acts as a very sophisticated prompt engineer: it loads each agent with keywords and information about itself, which the agent uses to generate its responses and to work through complex interactions from its own internal premises. A quick follow-up in ChatGPT-4, using the same prompt as before but changing Jin's role, demonstrated this dynamic pretty clearly (with some cajoling from me to get ChatGPT to play along).

With Nvidia ACE, though, all of this happens behind the scenes, without any input from you beyond your questions or snarky comments. If there's one other takeaway I have from the experience, it's that even though this system will eliminate the need to write and voice-act a whole lot of dialogue, those work hours will be spent building characters instead, one keyword and one emotional trigger at a time. I think it will be time far better spent.

Nvidia ACE as a digital DM

My ultimate takeaway from my time with Nvidia ACE is that while it operates on much of the same foundation as any sophisticated chatbot, the agent running an NPC will ultimately be able to have a well-defined personality, something that Nvidia's reps confirmed when I followed up with them about my experience.

Because each agent has its own definition that is effectively appended to the prompt behind the scenes, developers would be able to put at least some controls on the agents in their games while still generating unique dialogue and interactions. They can also give agents motivations and goals, such as protecting a security code that you need to convince them to hand over.
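Here's a minimal sketch of what a character definition appended to the prompt behind the scenes might look like. The `CharacterSheet` fields and the prompt format are assumptions for illustration, not ACE's actual schema; the idea is simply that a developer-written definition gets prepended to every line the player types before it reaches the model.

```python
from dataclasses import dataclass, field


@dataclass
class CharacterSheet:
    # Hypothetical fields a developer might fill in for one NPC.
    name: str
    role: str
    traits: list[str] = field(default_factory=list)
    goals: list[str] = field(default_factory=list)


def build_prompt(sheet: CharacterSheet, player_line: str) -> str:
    """Prepend the hidden character definition to the player's line."""
    lines = [
        f"You are {sheet.name}, {sheet.role}.",
        "Stay in character at all times.",
    ]
    if sheet.traits:
        lines.append("Personality: " + ", ".join(sheet.traits) + ".")
    for goal in sheet.goals:
        lines.append(f"Goal: {goal}")
    # The player only ever supplies this last line; everything above is hidden.
    lines.append(f"Player says: {player_line}")
    return "\n".join(lines)


jin = CharacterSheet(
    name="Jin",
    role="owner of Hiroshi's Ramen Shop",
    traits=["proud of his ramen", "insists his staff are like family"],
    goals=[
        "Get every customer to try the ramen.",
        "Never give out the code to the back door.",
    ],
)
print(build_prompt(jin, "What terrible thing has your boss done to you today?"))
```

Because a sheet like this settles who the character is before the player ever asks, a loaded question can't simply redefine it, which is consistent with how Jin shrugged off the premise of my question at CES.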

And with the underlying emotional system acting as a further input, developers can build systems of trust and distrust into conversations. Push an AI agent too far in a negative direction, and no amount of prompt engineering may be able to undo the social consequences of your shenanigans.

That passcode to the door behind the ramen shop you were supposed to coax out of them? You tried to be a smart-ass, and you blew it. That questline might die right there with one inappropriate line of questioning from you.
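A trust mechanic like this could be modeled as simply as a clamped score with a one-way lockout. This sketch assumes each player line has already been scored for sentiment from -1.0 (hostile) to 1.0 (friendly) by some classifier that isn't shown; the `TrustState` class, the 0.2 step size, and both thresholds are arbitrary illustrative choices, not anything Nvidia described.

```python
from dataclasses import dataclass


@dataclass
class TrustState:
    trust: float = 0.5        # 0.0 = hostile, 1.0 = fully trusting
    locked_out: bool = False  # once set, the NPC stops cooperating for good

    def react(self, sentiment: float, lockout_threshold: float = 0.1) -> None:
        """Nudge trust by the (assumed) sentiment score of the player's line."""
        if self.locked_out:
            return  # nothing the player says can repair the relationship
        # Arbitrary step size of 0.2 per line, clamped to [0.0, 1.0].
        self.trust = min(1.0, max(0.0, self.trust + 0.2 * sentiment))
        if self.trust <= lockout_threshold:
            self.locked_out = True

    def will_share(self, secret_threshold: float = 0.8) -> bool:
        """Only a trusting, non-locked-out NPC gives up the passcode."""
        return not self.locked_out and self.trust >= secret_threshold


npc = TrustState()
npc.react(-1.0)  # snarky question
npc.react(-1.0)  # doubling down
npc.react(-1.0)
npc.react(1.0)   # too late to apologize
print(npc.locked_out, npc.will_share())
```

The one-way `locked_out` flag is the design choice that matters here: once it flips, later friendly lines are ignored, which is what would let a single smart-ass remark kill a questline for good.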

It's early days yet, but this is not anything like PC gaming as we know it; it's more like tabletop gaming with a clever DM. And once you dig into it and appreciate it from that angle, it's impossible not to understand the power of such a system. It'll be a long time yet before Nvidia ACE really hits the gaming scene, but all I know is that story-driven PC gaming will never be the same once it does.


John (He/Him) is the Components Editor here at TechRadar and he is also a programmer, gamer, activist, and Brooklyn College alum currently living in Brooklyn, NY.

Named by the CTA as a CES 2020 Media Trailblazer for his science and technology reporting, John specializes in all areas of computer science, including industry news, hardware reviews, PC gaming, as well as general science writing and the social impact of the tech industry.

You can find him online on Bluesky @johnloeffler.bsky.social