Look for the AI disclaimer from Google on photos that look a little too good to be true

Google Photos adds a label to reveal AI edits behind the scenes

We’ve all been using photo filters and related tools for years to make our faces, food, and fall decor look their best. AI tools, however, arguably alter photos in more fundamental ways, going well beyond better lighting and red-eye removal.

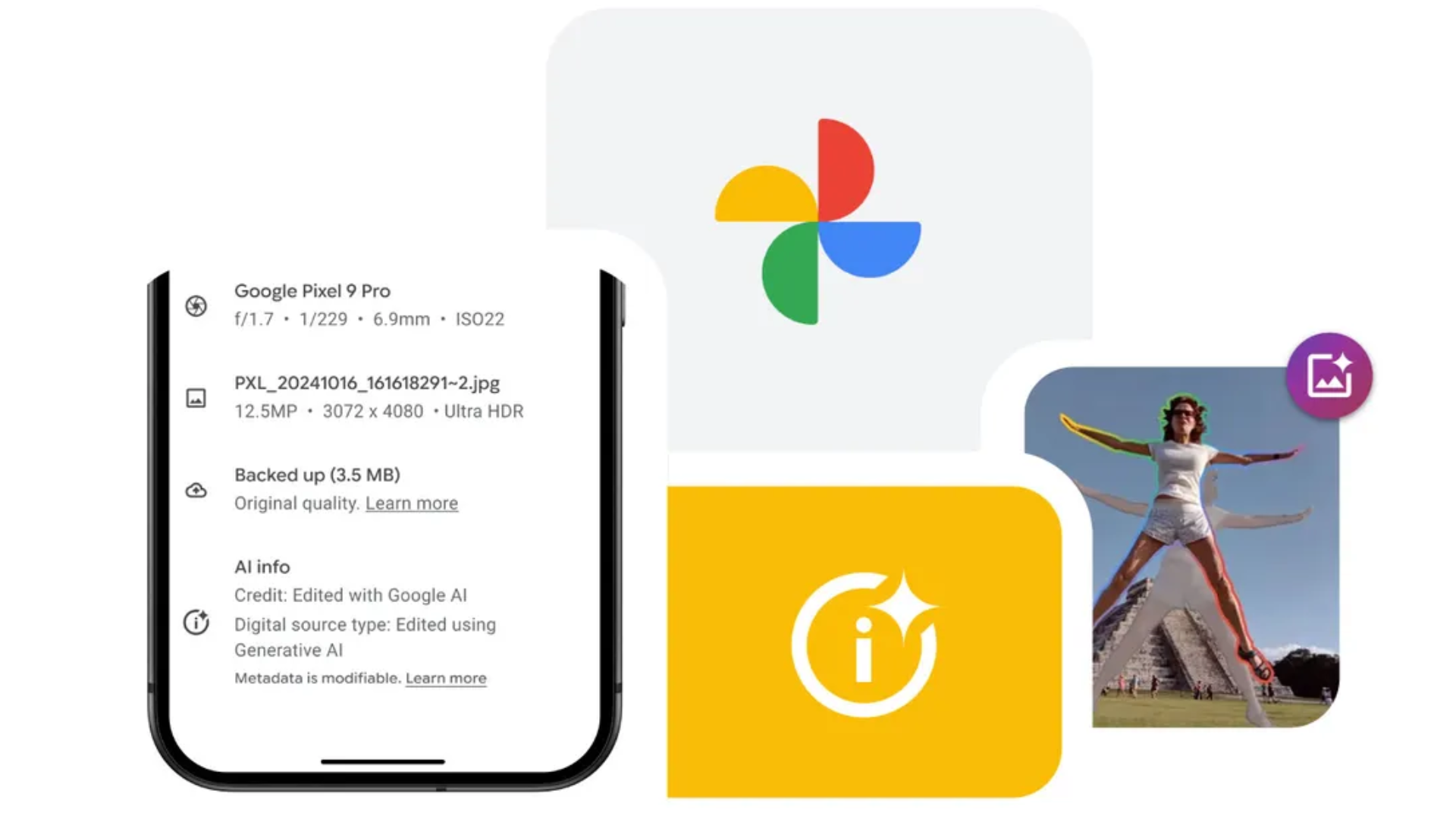

Google Photos has several generative AI features that can alter an image, and in the name of transparency, Google will now note on a photo when you’ve used those tools.

Starting next week, any photo edited with Google’s Magic Editor, Magic Eraser, or Zoom Enhance tools will show a disclaimer saying so within the Google Photos app. The idea is to offset how easy these tools make it to produce edits that are hard to spot with the naked eye. Google hopes the update will reduce confusion about image authenticity, whether an edit was innocent or made with malicious intent.

Google already records in a photo’s metadata when it has been edited with generative AI, following technical standards created by the International Press Telecommunications Council (IPTC). That metadata is visible only when you examine the data behind a photo, so until now it has mattered mainly for investigations and record-keeping. The update surfaces that bit of metadata alongside an image’s more mundane details, such as its file name and location.

AI image mania

Nor is Google limiting the transparency initiative to its generative AI tools. Any blended image will also carry a disclaimer. For instance, the Google Pixel 8 and Pixel 9 smartphones have two photo features, Best Take and Add Me. Best Take merges several shots of a group of people into one image that shows everyone at their most photogenic, while Add Me can make it look like someone was in a picture when they weren’t. Because these features edge into synthetic image creation, Google decided to tag such photos as built from multiple pictures, though not with AI tools.

You probably won’t notice the change unless you check a picture that seems a little too amazing, or unless you check everything you see out of well-founded caution. Professionals, however, will probably appreciate Google’s move, since it can spare them a credibility-damaging dispute over whether they used AI. Taking a photograph at face value isn’t always enough when AI tools are good enough to fool the eye. The presence or absence of Google’s tag might help establish trust in a photo.

Google’s move points to what may be the future of photography and digital media as AI tools grow more common. Of course, it’s also a marketing move. In many ways it’s a very minor change to Google Photos, but announcing it helps Google look responsible about AI while actually acting responsibly.


Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.