That mind-blowing Gemini AI demo was staged, Google admits

Real-time voice conversations not yet possible

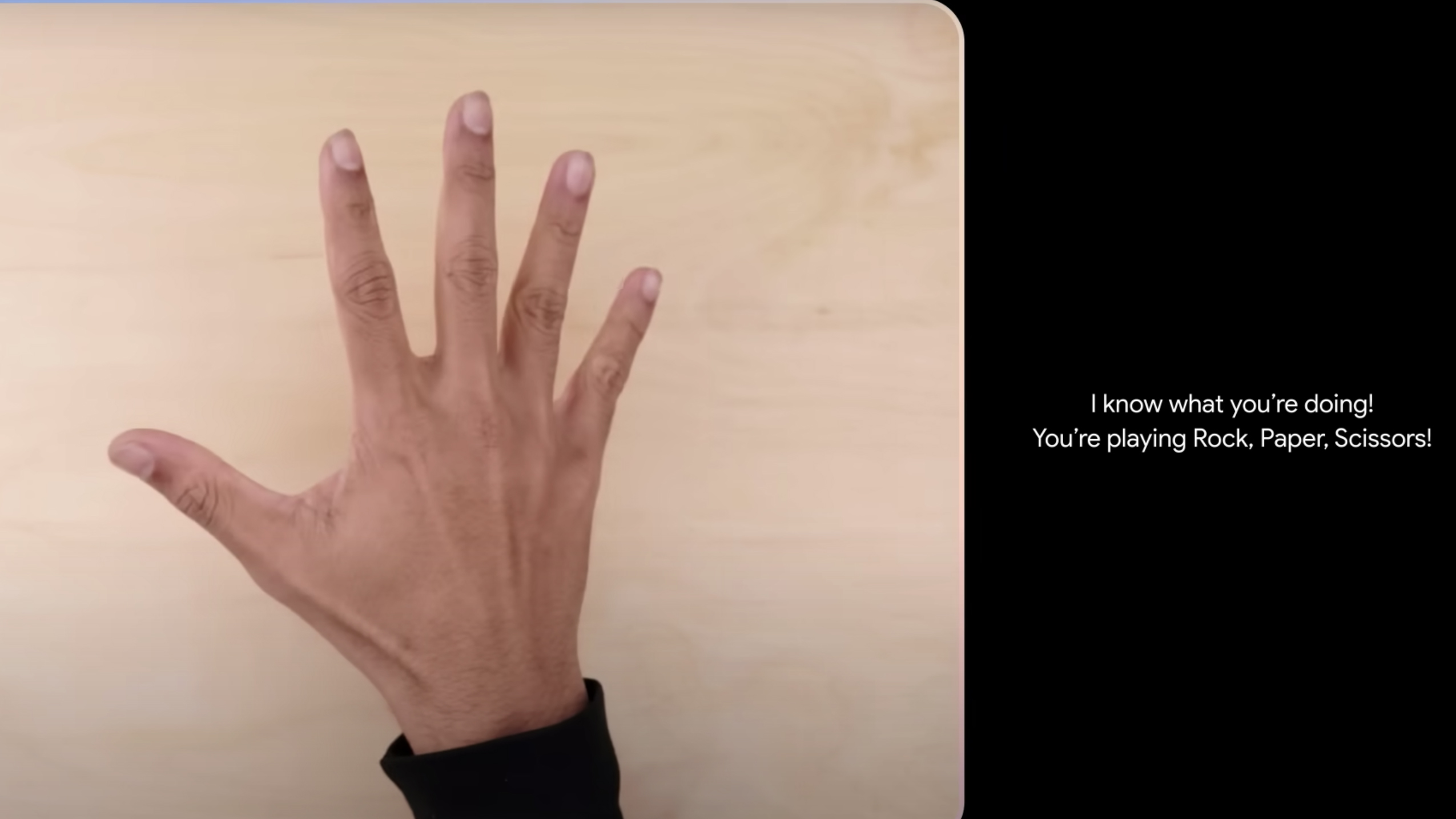

Earlier this week, Google unveiled its new Gemini artificial intelligence (AI) model, and it's safe to say the tool wowed the tech world. That was partly thanks to an impressive "hands-on" demo video that Google shared, yet it has now emerged that all was not as it seemed.

According to Bloomberg, Google modified its interactions with Gemini in numerous ways to create the demonstration. That raises questions about the chatbot's real abilities, and about how far Google has actually caught up with rival OpenAI and its ChatGPT product.

For instance, the video's YouTube description explains that "for the purposes of this demo, latency has been reduced and Gemini outputs have been shortened for brevity." In other words, Gemini probably takes a lot longer to respond to queries than the demo suggests.

Even the queries themselves have come under scrutiny. According to the Bloomberg report, the demo "wasn't carried out in real time or in voice." Instead, it was constructed from "still image frames from the footage, and prompting via text."

This means Gemini wasn't responding to live, real-world prompts as they happened; it was identifying what was shown in still images, prompted by typed text. Presenting that as a smooth, flowing conversation, as Google did, feels a little misleading.

A long way to go

That's not all. Google claimed that Gemini could outdo the rival GPT-4 model in almost every benchmark the two were tested on. Yet looking at the numbers, Gemini is ahead by only a few percentage points in many of those benchmarks, despite GPT-4 having been out for almost a year. That suggests Gemini has only just caught up with OpenAI's product, and the picture could look very different next year, or whenever GPT-5 arrives.

It doesn't take much to find other signs of discontent with Gemini Pro, the version currently powering Google Bard. Users on X (formerly Twitter) have shown that it is prone to the same kind of "hallucinations" seen in other chatbots. For instance, when one user asked Gemini for a six-letter word in French, it confidently produced a five-letter word instead, lending some weight to pre-launch rumors that Google's AI struggled with non-English languages.


Other users have expressed frustration with Gemini's inability to write accurate code and its reluctance to summarise sensitive news topics. Even simple tasks, such as naming the most recent Oscar winners, produced flat-out wrong answers.

All this suggests that, for now, Gemini may fall short of the lofty expectations set by Google's slick demo, and it serves as a timely reminder not to trust everything you see in a demo video. It also implies that Google still has a long way to go to catch up with OpenAI, despite the enormous resources at its disposal.


Alex Blake has been fooling around with computers since the early 1990s, and since that time he's learned a thing or two about tech. No more than two things, though. That's all his brain can hold. As well as TechRadar, Alex writes for iMore, Digital Trends and Creative Bloq, among others. He was previously commissioning editor at MacFormat magazine. That means he mostly covers the world of Apple and its latest products, but also Windows, computer peripherals, mobile apps, and much more beyond. When not writing, you can find him hiking the English countryside and gaming on his PC.