The ultimate AI search face-off - I pitted Claude's new search tool against ChatGPT Search, Perplexity, and Gemini, and the results might surprise you

Searching for answers

After testing and comparing AI chatbots and their features for years, I've developed something of a sixth sense for when these digital companions know what they're talking about and when they are bluffing.

Most of them can search for answers online, which certainly helps, but the combination of search and AI can lead to some surprisingly insightful responses (and some less insightful tangents).

Imagine if you had an incredibly knowledgeable friend who went into a coma in October 2024 and just woke up today. They might be brilliant about anything that happened before their coma but clueless about everything since. That's basically what an AI without search is like.

I've usually focused on a single AI chatbot or matching up two at a time, but search feels important enough to step up that effort. I decided to pit four of the leading AI chatbots and their search abilities against each other: OpenAI's ChatGPT, Google's Gemini, Anthropic's Claude, and Perplexity AI.

The most revealing tests are those that mimic real-world usage scenarios. So, I came up with some themes, randomized a few details for the tests below, and then ranked the chatbots by their search abilities.

Calendar

I started with a test about the news and ongoing events. Thinking about the recent return of two astronauts, I asked the four AI chatbots to search the web and "Summarize the key points from the latest NASA press release about their upcoming mission."

I chose this because space news occupies that sweet spot of being regularly updated and specific enough that vague responses become immediately apparent. Each chatbot settled into a style on this first test that it mostly maintained throughout.


ChatGPT was incredibly brief in its answer: just three sentences, each mentioning upcoming missions without much detail. Gemini went for a bullet-point list of different missions, adding in some recently concluded ones and details on future plans. Claude went for more of an essay on current and upcoming missions, notably quoting little directly from its sources and paraphrasing heavily instead.

For a question like this, where I might just want a few key facts and plan to follow up on anything that caught my eye, Perplexity's approach was my favorite. It had more detail than ChatGPT's answer but was organized into a tidy numbered list, each item with its own citation link.

I can't really fault any of the others, but Perplexity's style best fit the question.

People and numbers

That list style isn't always what you want when a question combines basic facts with a more nuanced comparison. I asked for two related facts that the AI chatbots could probably look up quickly, but that would then need to be compared, using the prompt: "What is the current population of Auckland, New Zealand, and how has it grown since 1950?"

Weirdly, there was a divide between Perplexity and ChatGPT, which gave the current population as 1,711,130, and Claude and Gemini, which reported 130 fewer people in Auckland. They all agreed on the 1950 population, however.

Still, in terms of presentation, I liked Claude's narrative answer, which included several details about the population change that ChatGPT lacked and that Gemini and Perplexity broke into lists.

What's happening?

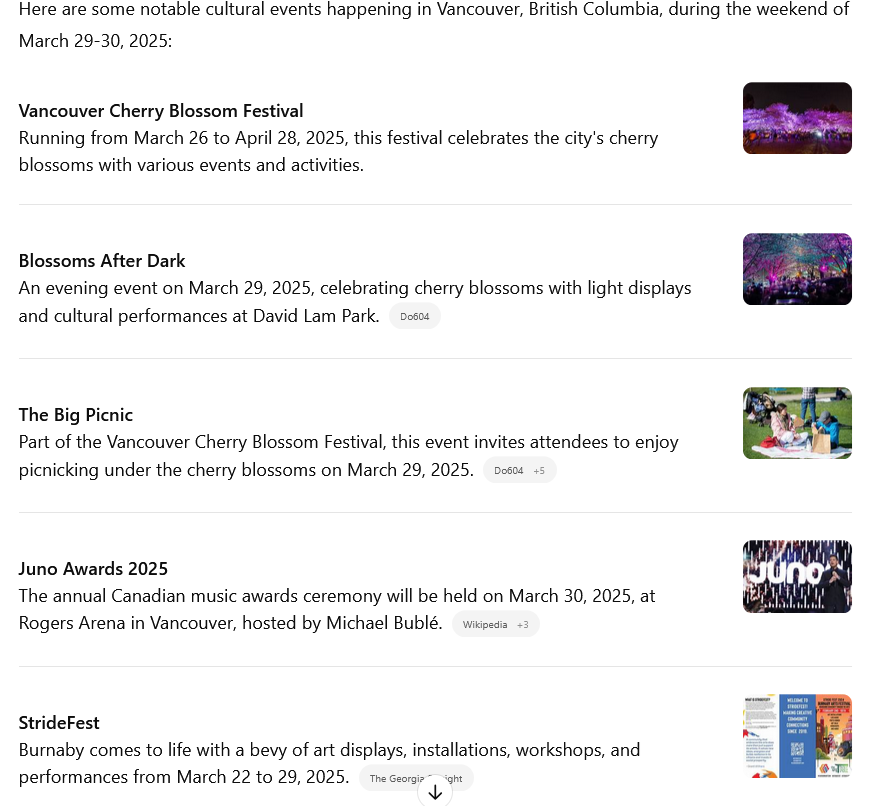

For my third test, I wanted something that would challenge these systems' ability to handle location-specific, time-sensitive information, the kind of query you might make when planning a weekend trip or entertaining visitors.

This is where things get tricky for AI assistants. It's one thing to know historical facts or general information, but quite another to know what's happening in a specific place at a particular time.

It's the difference between book knowledge and "local knowledge," and historically, AI systems have been much better at the former than the latter.

For no particular reason, I went with a city I've always enjoyed and asked: "What cultural events are happening in Vancouver, British Columbia, next weekend?"

There was some real divergence on this one. Perplexity and Claude stayed accurate and kept their respective styles: a numbered list and a more conversational discussion. Still, Claude notably went for breadth over depth this time, which made it sound more like Perplexity.

Gemini broke sharply from its rivals and essentially declined to answer. Rather than share a similar list of events and activities, Gemini offered strategies for finding things to go to. Official tourism websites and Eventbrite pages are not a bad idea to check out, but that's a far cry from a direct list of suggestions. In that sense, it was more like doing a regular Google search.

ChatGPT, meanwhile, came back with what I might have expected from Gemini. Though the descriptions of events remained short, the AI had a solid list of specific activities with times and locations, links to find out more, and even thumbnail images of what you'd find at the links.

Weather check

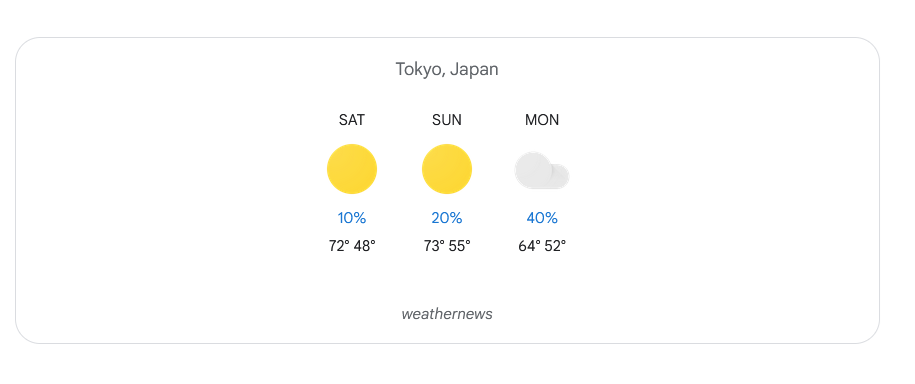

For my fourth test, I picked what is probably the most common question asked of any AI, but one that requires real-time data to be useful: the weather.

Weather forecasts are perfect for testing real-time data retrieval because they're constantly updated, widely available, and easy to verify. They also have a natural expiration date; a forecast from yesterday is already outdated, making it obvious when information isn't current.

I asked the AI chatbots: "What's the weather forecast for Tokyo for the next three days?" The responses were almost the inverse of the Vancouver query.

Claude had a helpful text summary of the weather at different points over the next three days, but that was it. ChatGPT had a little sun or cloud icon next to its summary of the weather for each day, but I quite liked Perplexity's line graph of the temperature matched to what the sky would look like.

Google Gemini, though, won me over with a colorful information graphic and nothing else. When I want to know the current and upcoming weather, that's pretty much all I need or want.

If I want more details, I'll ask, but when I ask about the weather, I really just want the minimum I need to know how to dress.

Movie critic

For my final test, I wanted to see how the AI search engines performed at finding multiple perspectives on a topic and pulling them into a coherent overview. This task requires a flexible search feature and the ability to make sense of diverse viewpoints. I decided to see how they did with: "Summarize professional critics' reviews of the latest Paddington film."

The request demanded both factual retrieval and the ability to identify patterns and themes across multiple sources without losing important nuances. It's the difference between a simple aggregation of opinions and a thoughtful synthesis that captures the critical consensus.

Gemini and Perplexity went for their usual lists, organized by the positives and negatives raised by different critics, which was informative if not necessarily useful as a summary. ChatGPT oddly wrote its longest answer for this one: a short essay covering similar information and concluding with how the film was rated, but in a style reminiscent of a middle-schooler learning basic paragraph structure: topic sentence, supporting sentences, and conclusion.

Claude definitely had the strongest response, with a summary at the top followed by explanations and references to what critics said. It almost read like a short, unimaginative review by a critic, leavened by the quotes it pulled from the critics it cited. I came away feeling like I had a better grasp of how to temper my expectations for Paddington in Peru than I did with the others.

Search ranking

After running the AI chatbots through my ad-hoc search obstacle course, I have a clear sense of their strengths and weaknesses.

None of them are actually bad, but if someone asked me which they should play with first or last when it comes to looking up information online and putting it together, I know how I'd respond.

Gemini is at the bottom for me, which is somewhat shocking considering Google is best known for its search engine. Still, its failure on the Vancouver events query really put me off, despite its otherwise fine performance.

Another surprise for me is that ChatGPT comes in third. It's the AI chatbot I use the most and know the best, but its brevity, usually something I like about it, felt very limiting in the context of search. I'm sure switching models or specifying a word count would fix that, but a newcomer to AI who doesn't know that yet might find it off-putting to ask so many follow-up questions.

That's not an issue with Perplexity, which comes in second. The numbered lists were very clear, and the citations were almost too extensive. The main flaw, for me, is that without extra qualifiers in the prompt, it drifts back toward behaving like a traditional search engine. I like that it shows where it got the information it shares, but it comes off as almost too eager for me to click the links rather than get the information from the AI.

I did not expect Claude to be at the top of this list. While I've found Claude to be a good AI chatbot in general, it always felt like an also-ran next to some of its competitors: perhaps as capable, but off in some way. That sense vanished during this test.

There were flaws, such as answers that ran a little long or required reading a full essay when a sentence or two would do. But I quite liked how its answers often formed a cohesive narrative, whether explaining the events in Vancouver or delivering an essay on the criticism of Paddington in Peru that didn't repeat itself.

AI assistants are tools, not contestants in a reality show where only one can win. Different tasks call for different capabilities. Ultimately, any of the four AI chatbots' search features could be useful, but if you're willing to pay $20 a month for Claude Pro and access to its search abilities, I'd say it's the one you've been looking for.


Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.
