‘Well the end of aging and death wouldn’t be bad’: Professor who coined the term AGI thinks we’ll get human-level AI in ‘three to five years’

Exclusive: the Singularity is coming, and it's got three legs and cat ears

You might not have heard of Dr Ben Goertzel, CEO of the Artificial Superintelligence (ASI) Alliance and founder of SingularityNET, the world’s first decentralized AI platform. But he’s on a mission to accelerate our progress towards the point in history popularly known as the singularity, when AI surpasses human intelligence and enters a recursive sequence of self-improvement cycles, leading to the emergence of a limitlessly powerful superintelligence.

The term AGI (Artificial General Intelligence), meaning AI with broad, human-like capabilities rather than narrow AI built for specific tasks, was coined by a group including Goertzel, Shane Legg and Peter Voss while they were looking for a title for a book Goertzel was editing, to which Legg and Voss had contributed chapters. The book came out in 2005 under the title Artificial General Intelligence, and Goertzel went on to launch the AGI conference series in 2006. (He later discovered that the physicist Mark Gubrud had used the term in an article in 1997, so while it was Goertzel who launched the term into the world and got it adopted, it had popped up online before.)

Sam Altman of OpenAI, the maker of the popular AI chatbot ChatGPT, has predicted that we could have superintelligence within "a few thousand days", and Goertzel is equally ambitious.

“I personally think that we’re going to get to human-level AGI within, let’s say, three to five years, which is around the same as Ray Kurzweil (author of the 2005 book The Singularity Is Near), who put it at 2029," Goertzel told me on a video call from his home in Seattle. "I wouldn’t say it's impossible to make the breakthrough in one or two years, it's just that's not my own personal feel of it, but things are going fast, right?”

'I can’t say there’s a zero percent chance that leads to something that’s bad'

Indeed they are. Once you get to a human-level AGI, things can advance quite rapidly, in good ways or bad. We’ve all seen movies like The Terminator. What if an AGI decides it doesn’t want humans around getting in its way, and tries to get rid of us?

Goertzel continues: “Ray Kurzweil thought that we’d get to human equivalent AGI by 2029 and then massively superhuman AGI by 2045, which he then equated with the Singularity, meaning a point where technological advance occurs so fast that it appears infinite relative to humans, like your phone is making a new Nobel prize-level discovery every second.

"I can’t say there’s a zero percent chance that leads to something that’s bad. I don’t see why a super AI would enslave people or use them for batteries or even hate us and try to slaughter us; but I mean, if I’m going to build a house I mow down a bunch of ants and their ant hills, I’ll put a bunch of squirrels out of their home; I can see an AI having that same sort of indifference to us as we have towards non-human animals and plants much of the time.”

Before you get too worried, Goertzel does see some room for optimism when it comes to the implications of AGI: “On the other hand I don’t see why that’s overwhelmingly likely, because we are building these systems, and we’re building them with the goal of making them help us and like us mostly, so there doesn’t seem a reason why as soon as they get twice as smart as us they’re suddenly going to turn and stop trying to help us and want to destroy us.

"The best we can do, even if we’re worried about dangers, is direct AGIs towards being compassionate, helpful beings, right? And that leads into what I’ve been doing with SingularityNET.”

Dr Ben Goertzel is on a mission to accelerate the emergence of human-level artificial general intelligence (AGI) and superintelligence for the benefit of all mankind.

Goertzel has two big AGI projects that are coupled together. One is OpenCog Hyperon, an attempt to build AGI according to a reasonable cognitive architecture: an embodied agent that knows who it is and who we are, and tries to achieve goals in the world in a holistic way.

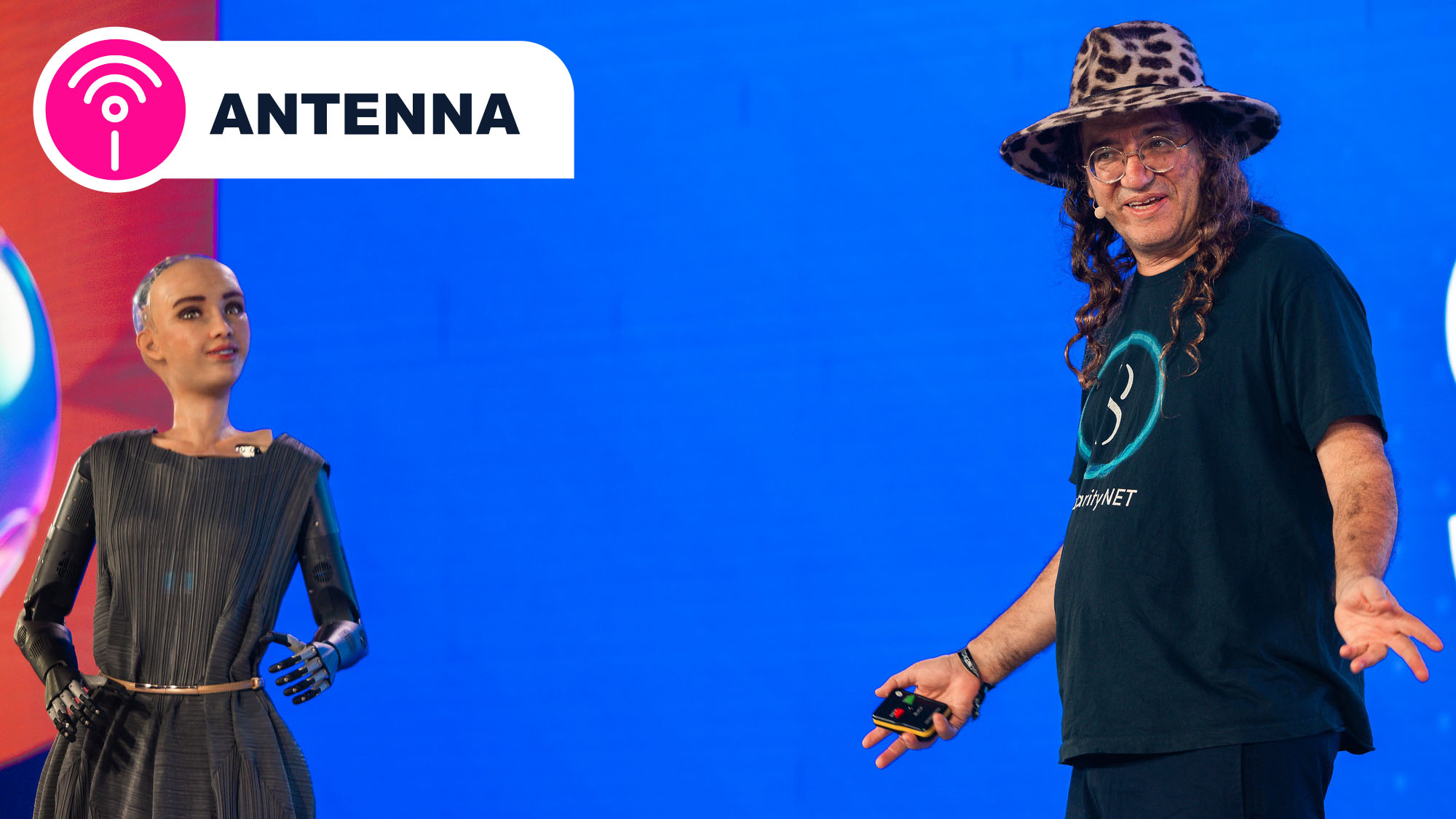

Some of the photos of Goertzel in this article show him sharing the stage with two robots created by Hanson Robotics, Sophia and Desdemona. Sophia, a 'social robot' that can mimic social behavior, is the one with the bald head, while Desdemona, also known as 'Desi', is the lead vocalist in a band that includes Goertzel on keyboards; Desi is the one wearing the cat ears, and she has three legs. The photos are from TOKEN2049, a 2024 cryptocurrency conference in Singapore, but these robots date back much earlier and represent some of the early advances in AI and robotics made before the emergence of today’s large language models (LLMs). In fact, Goertzel still sees value in their evolution, because they were created with something different in mind than today’s chatbots.

“While ChatGPT (and other chatbots) have advanced a lot, they’re not embodied agents," he says. "ChatGPT, when you talk to it, doesn’t know who it is or know who you are, or try to create a bond with you. It totally objectifies the interaction. The goal with the AI behind these robots was starting from a different point. It was starting from having a sort of rich emotional or social relation with the person being interacted with, and that still seems like an important thing to be doing, even though some other aspects of AI have advanced so far with large language learning models and such.

'Don't worry, nothing is under control'

"Large language models like deep neural nets can be part of an AGI, but I think you also want other parts so we have logical reasoning systems, we have systems that do creativity by evolutionary learning like a genetic algorithm. It’s a fairly different approach to AGI than the ChatGPTs of the world.”

Goertzel's SingularityNET is a platform designed to run AI systems that are decentralized across many different computers around the world, so that they have no central owner or controller, much like a blockchain. Depending on your perspective, this is either very important for making AGI ethical, or very dangerous and terrifying.

Goertzel is in the former camp. “Ram Dass, the guru from my childhood had this beautiful saying, ‘Don’t worry, nothing is under control’, so either you think that’s great and the way it has to be, or you think 'What’s going to happen? We need some fearless leader to direct things!' I’m obviously among those who think that if AI is controlled by one party as we get the breakthrough to AGI, it's bad because that party has some narrow collection of interests that will then channel the AGI’s mind in a very special way which isn’t for the good of all humanity. I would much rather see AGI developed in the modality of the internet, or the Linux operating system – sort of by and for everyone and no one, and that’s what platforms like SingularityNET and the ASI alliance platform allows.”

Goertzel is putting his money where his mouth is. SingularityNET is offering over $1 million in grants to developers of beneficial AGI, but they need to apply before December 1, 2024.

'Well the end of aging and death wouldn’t be bad'

I’m left feeling slightly apprehensive about the future of, well, everything. Is there anything Goertzel can do to reassure me that there’s some benefit to AGI for humanity? Can he give me some examples of good things that could happen?

“Well the end of aging and death wouldn’t be bad," he suggests. "If you could cure all diseases and end involuntary death and allow everyone to grow back their body from age 20 and keep it there for as long as they wanted… I mean that would mean getting rid of cancer and dementia and mental illness. That would be a significant plus. Or let’s say having a little box air-dropped by a drone in everyone’s backyard, that would take a verbal instruction and 3D-print you any form of matter that you wanted, like a molecular nano assembler… that would be highly advantageous.”

I realize at this point that he’s talking about the replicators from Star Trek.

“You probably want some guard rails on there”, he adds, almost casually. “If you look at the environment and global warming and such, more efficient ways of generating power from solar, geothermal and water. No doubt AGI would be able to solve those faster than us. I mean, the upside is pretty significant.”

Well, that’s one way of putting it. At this point my head is spinning with possibilities.

“Even setting aside the crazier stuff like brain-computer interfacing, I mean, you could upgrade your own brain," Goertzel continues. "You could fuse your mind with the AGI to whatever level you felt was appropriate. If you did too much you could sort of lose yourself, which some people might not mind, but if you did just a little, you could, say, learn to play a musical instrument in half an hour instead of 10 years, right?”

'Just put them in space, to cool things down'

It seems that the possible upsides of AGI are obvious to anybody who has read a lot of science fiction. But I wonder whether we have enough resources to make all this happen on Earth, since even our current AI chatbots consume vast amounts of resources to perform relatively simple work.

I should probably have anticipated Goertzel's answer: “Well, there’s a lot we don’t know how to mine on the planet, but AGI would.”

True, but what about the amount of water AI requires for cooling?

“Just put them in space, to cool things down," he says. "Put the AGI on satellites in space, using solar energy and resources from mining the moon and asteroids. Once you have something that’s multiple times smarter than humans, it doesn’t have to be bound by the constraints that bind us in practice. There’s so much we don’t understand that a system even twice as smart as us would understand. We don’t know what the constraint would be.”

I leave my talk with Goertzel trying to imagine what constraints could possibly apply to minds beyond the human level. All I can think of are William Blake’s lines on the tiger, the natural world's most fearsome predator: “What immortal hand or eye, / Could frame thy fearful symmetry?”

I’m still unsure if AGI will be a force for good or ill, or if those are just concepts that apply to lesser mortals, and not to the superintelligence gods to come; but right now we still have a degree of control over the direction AGI takes, and it’s reassuring to know that there are people like Ben Goertzel in the world, pushing for an ethical and compassionate AGI in the new world to come.

Graham is the Senior Editor for AI at TechRadar. With over 25 years of experience in both online and print journalism, Graham has worked for various market-leading tech brands including Computeractive, PC Pro, iMore, MacFormat, Mac|Life, Maximum PC, and more. He specializes in reporting on everything to do with AI and has appeared on BBC TV shows like BBC One Breakfast and on Radio 4 commenting on the latest trends in tech. Graham has an honors degree in Computer Science and spends his spare time podcasting and blogging.