Hallucinations are dropping in ChatGPT, but that's not the end of our AI problems

Does AI even know you?


ChatGPT, Gemini, and other LLMs are getting better at scrubbing out hallucinations, but we're not clear of errors and long-term concerns.

I was talking to an old friend about AI – as one often does whenever engaging in casual conversation with anyone these days – and he was describing how he'd been using AI to help him analyze insurance documents. Basically, he was feeding almost a dozen documents into the system to summarize, or maybe a pair of lengthy policies to compare for changes. This was work that could take him hours but, in the hands of AI (perhaps ChatGPT or Gemini, though he didn't specify), took just minutes.

What fascinated me is that my friend has no illusions about generative AI's accuracy. He fully expected one out of 10 facts to be inaccurate or perhaps hallucinated and made it clear that his very human hands are still part of the quality-control process. For now.

The next thing he said surprised me – not because it isn't true, but because he acknowledged it. Eventually, AI won't hallucinate, it won't make a mistake. That's the trajectory and we should prepare for it.

The future is perfect

I agreed with him because this has long been my thinking. The speed of development essentially guarantees it.

While I grew up with Moore's Law, which posits a doubling of the number of transistors on a microchip roughly every two years, AI's Law is, putting it roughly, a doubling of intelligence every three to six months. That pace is why everyone is so convinced we'll achieve Artificial General Intelligence (AGI, or human-like intelligence) sooner than originally thought.
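To make that comparison concrete, here is a minimal sketch of the compounding involved. It takes the doubling periods above at face value; the function name `growth_factor` and the 24-month horizon are my own illustrative choices, and "intelligence" is not something anyone can measure this cleanly.

```python
def growth_factor(months_elapsed: float, doubling_period_months: float) -> float:
    """Multiplier after months_elapsed for a quantity that doubles
    every doubling_period_months (simple exponential growth)."""
    if doubling_period_months <= 0:
        raise ValueError("doubling_period_months must be positive")
    return 2 ** (months_elapsed / doubling_period_months)


HORIZON_MONTHS = 24  # assumed horizon: one Moore's Law doubling period

# Moore's Law: one doubling every 24 months.
print(growth_factor(HORIZON_MONTHS, 24))  # 2.0
# The rough "AI's Law" range: one doubling every 6 months, or every 3.
print(growth_factor(HORIZON_MONTHS, 6))   # 16.0
print(growth_factor(HORIZON_MONTHS, 3))   # 256.0
```

Over the same two years in which Moore's Law delivers a 2x gain, a six-month doubling compounds to 16x and a three-month doubling to 256x.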

I believe that, too, but I want to circle back to hallucinations because even as consumers and non-techies like my friend embrace AI for everyday work, hallucinations remain a very real part of the AI and large language model (LLM) experience.


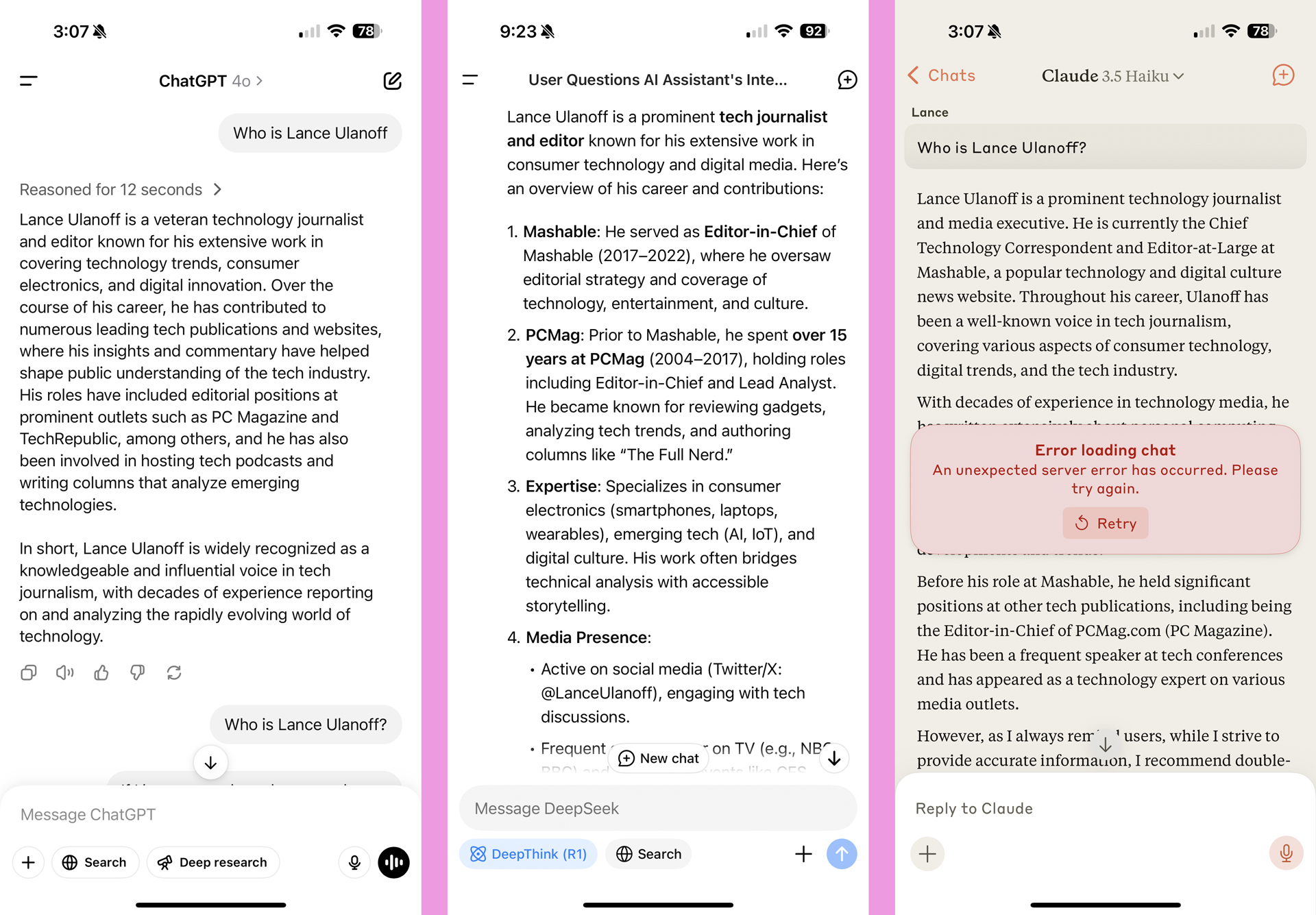

In a recent anecdotal test of multiple AI chatbots, I was chagrined to find that most of them could not accurately recount my work history, even though it is spelled out in exquisite detail on LinkedIn and Wikipedia.

These were minor errors and not of any real importance because who cares about my background except me? Still, ChatGPT's o3-mini model, which uses deeper reasoning and can therefore take longer to formulate an answer, said I worked at TechRepublic. That's close to "TechRadar," but no cigar.

DeepSeek, the Chinese AI chatbot wunderkind, had me working at Mashable years after I left. It also confused my PCMag history.

Google Gemini smartly kept the details scant, but it got all of them right. ChatGPT's 4o model took a similar pared-down approach and achieved 100% accuracy.

Claude AI lost the thread of my timeline and still had me working at Mashable. It warns that its data is out of date, but I did not think it was 8 years out of date.

What percentage of AI answers do you think are hallucinations? March 24, 2025

I ran some polls on social media about the level of hallucination most people expect to see on today's AI platforms. On Threads, 25% of respondents think AI hallucinates 25% of the time. On X, 40% think it's 30% of the time.

However, I also received comments reminding me that accuracy depends on the quality of the prompt and topic areas. Information that doesn't have much of an online footprint is sure to lead to hallucinations, one person warned me.

Research, though, is showing that models are not only getting larger, they're getting smarter, too. A year ago, one study found ChatGPT hallucinating 40% of the time in some tests.

According to the Hughes Hallucination Evaluation Model (HHEM) leaderboard, hallucination rates for some of the leading models are down to under 2%. You only head back into double-digit hallucination rates with older models like Meta Llama 3.2.

Cleaning up the mess

What this shows us, though, is that these models are quickly heading in the direction my friend predicts and that, at some point in the not-too-distant future, we will have large enough models, with real-time training data, to put the hallucination rate well below 1%.

My concern is that in the meantime, people without technical expertise or even an understanding of how to compose a useful prompt are relying on large language models for real work.

Hallucination-driven errors are likely creeping into all sectors of home life and industry and infecting our systems with misinformation. They may not be big errors, but they will accumulate. I don't have a solution for this, but it's worth thinking about and maybe even worrying about a little bit.
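A rough way to see how small errors accumulate is the back-of-the-envelope sketch below. It rests on loud assumptions: every fact in a document is wrong independently and with the same probability, and the function name `chance_of_any_error` and the sample numbers are hypothetical. Real hallucinations are not independent, and leaderboard rates are not per-fact rates, so treat this as arithmetic, not measurement.

```python
def chance_of_any_error(per_fact_error_rate: float, fact_count: int) -> float:
    """Probability that at least one of fact_count facts is wrong,
    ASSUMING each fact fails independently at per_fact_error_rate."""
    if not 0.0 <= per_fact_error_rate <= 1.0:
        raise ValueError("per_fact_error_rate must be between 0 and 1")
    if fact_count < 0:
        raise ValueError("fact_count must not be negative")
    # P(at least one error) = 1 - P(every fact is right)
    return 1.0 - (1.0 - per_fact_error_rate) ** fact_count


# Hypothetical: one bad fact in 10, across a 10-fact summary.
print(f"{chance_of_any_error(0.10, 10):.2f}")  # 0.65
# Hypothetical: a 2% per-fact rate, across 50 facts.
print(f"{chance_of_any_error(0.02, 50):.2f}")  # 0.64
```

Under these assumptions, even a 2% error rate leaves better-than-even odds that a 50-fact document contains at least one mistake, which is why a low headline rate does not make human review optional.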

Perhaps future LLMs will also include error sweeping, where you send them out into the web and through your files and have them cull all the AI-hallucination-generated mistakes.

After all, why should we have to clean up AI's messes?


A 38-year industry veteran and award-winning journalist, Lance has covered technology since PCs were the size of suitcases and “on line” meant “waiting.” He’s a former Lifewire Editor-in-Chief, Mashable Editor-in-Chief, and, before that, Editor-in-Chief of PCMag.com and Senior Vice President of Content for Ziff Davis, Inc. He also wrote a popular, weekly tech column for Medium called The Upgrade.

Lance Ulanoff makes frequent appearances on national, international, and local news programs including Live with Kelly and Mark, the Today Show, Good Morning America, CNBC, CNN, and the BBC.
