What is AI Distillation?

Distillation helps make AI more accessible to the world by reducing model size

Distillation, also known as model or knowledge distillation, is a process where knowledge is transferred from a large, complex AI ‘teacher’ model to a smaller and more efficient ‘student’ model.

The result is a much smaller model that keeps much of the teacher's quality while significantly reducing computing requirements.

Distillation is very popular in the open-source community because it allows compact AI models to be deployed on personal computers.

A popular example is the wide range of smaller distilled models that appeared around the world soon after the launch of the open source DeepSeek R1 model.

History of Distillation

The concept of distillation was popularized by Geoffrey Hinton (aka the ‘godfather of AI’) and his colleagues in 2015, building on earlier work on model compression. The technique quickly gained traction as one of the best ways to make advanced AI workable on modest computing platforms.

Distillation allowed, and continues to allow, the widespread use of day-to-day AI applications that would otherwise need to be processed by huge cloud-based computers.

Most distilled models can be run on home computers, and as a result there are hundreds of thousands of AI applications in use around the world, doing tasks such as music and image generation or hobbyist science.

Distillation works by using the larger teacher model to generate outputs that the student model then learns to mimic.
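To make the idea concrete, here is a minimal sketch of one common way to measure how well a student mimics its teacher. It assumes both models output raw scores (logits) for the same set of possible answers, softens them with a 'temperature' setting, and compares the two resulting probability distributions using KL divergence. The function names, the example scores and the temperature value are illustrative assumptions, not taken from any particular library or product.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature gives a softer spread."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence between the teacher's and student's softened distributions."""
    p = softmax(teacher_logits, temperature)  # what the teacher believes
    q = softmax(student_logits, temperature)  # what the student believes
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical scores for three possible answers.
teacher = [4.0, 1.5, 0.2]
close_student = [3.8, 1.6, 0.3]  # nearly agrees with the teacher
far_student = [0.2, 1.5, 4.0]    # ranks the answers the other way round

print(distillation_loss(teacher, close_student))  # small value
print(distillation_loss(teacher, far_student))    # much larger value
```

During training, the student's weights would be adjusted to push this number toward zero across many examples. Real training setups usually combine a term like this with a standard loss on the correct answers.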

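Rather than merely copying the teacher's final answers, the student is typically trained on the teacher's full output, including how confident the teacher is in each possible answer. It uses what it learns to approximate the teacher's behavior in a much smaller model.

It is not just the open-source sector that uses distillation.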

Foundation models from companies such as OpenAI and Google are typically distilled down into more manageable versions for distribution to businesses and individuals.

These companies also often provide distillation tools to their top clients to help them create smaller model versions.

Distillation vs Fine-Tuning

It should be noted that distillation is completely different from fine-tuning.

Distillation creates a new, smaller model that emulates the behavior of the larger one, while fine-tuning adapts an existing model to a specific task by training it on task-specific data.

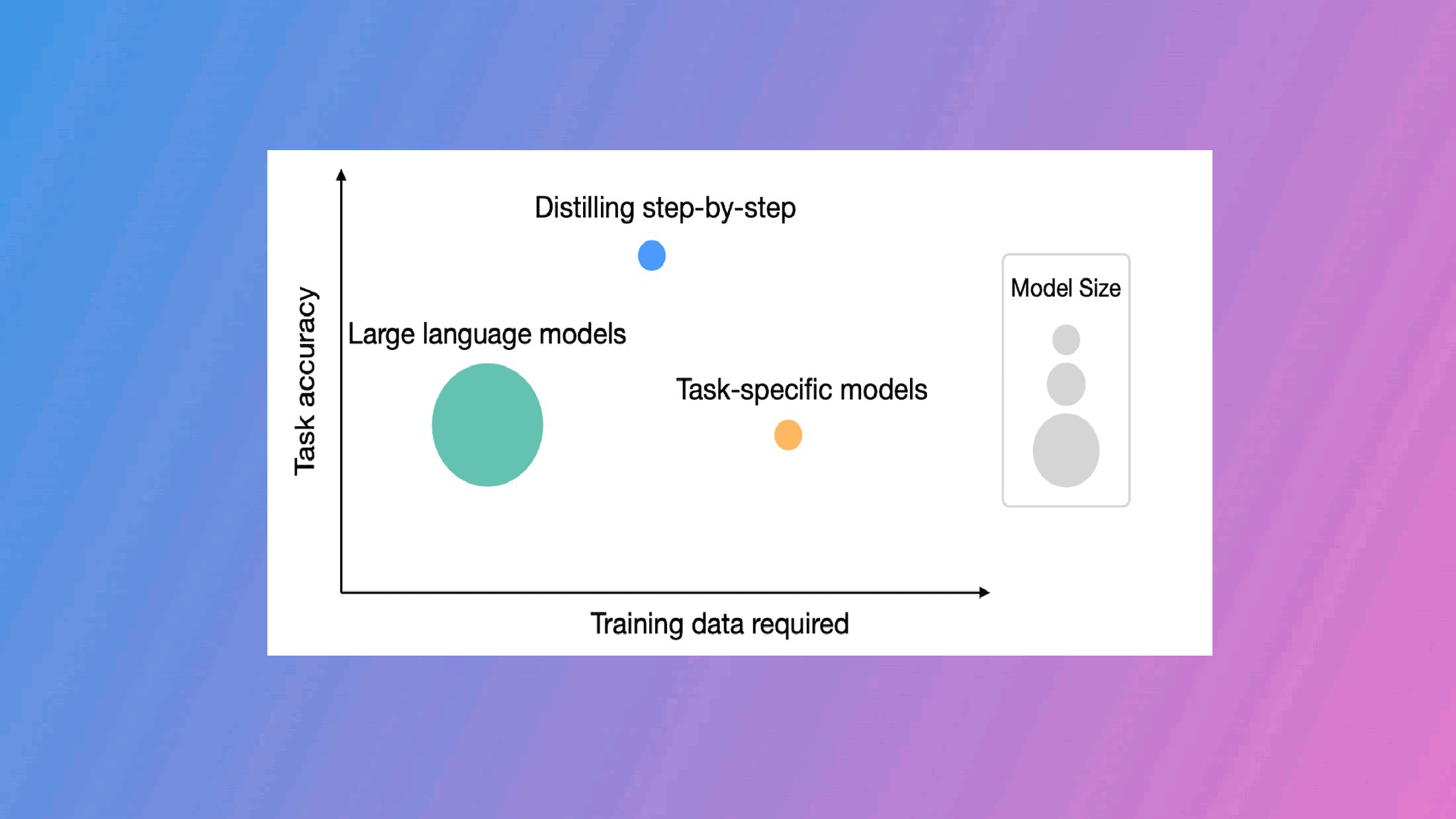

Interestingly enough, both distilled and fine-tuned models can sometimes outperform their larger brethren on specific tasks or roles.

However, in the case of distillation, the resulting model will lose some of the wide-ranging knowledge that the main model originally contained. That is not necessarily the case with fine-tuned models.

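There are three main methods of distillation: response-based, feature-based and relation-based techniques.

Without going into too much boring technical detail, each method focuses on a different way of emulating the qualities of the original model. Response-based methods match the teacher's final outputs, feature-based methods match its internal representations, and relation-based methods match the relationships it learns between different pieces of data.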

And each approach offers benefits and disadvantages in terms of the quality of the resulting student model.

For this reason, different methods are adopted by the various foundation model companies in order to try to obtain performance advantages in the market.
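For readers who do want a little of the technical detail, the three families of methods can be sketched as three different ways of scoring a student against its teacher. This is a simplified illustration rather than how any particular company implements them. All function names and numbers are hypothetical, and the feature-based example assumes the teacher and student features have the same size (in practice an extra projection step is often needed).

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def response_loss(teacher_logits, student_logits):
    """Response-based: compare the final output distributions (KL divergence)."""
    p, q = softmax(teacher_logits), softmax(student_logits)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def feature_loss(teacher_features, student_features):
    """Feature-based: compare intermediate activations (mean squared error)."""
    diffs = [(t - s) ** 2 for t, s in zip(teacher_features, student_features)]
    return sum(diffs) / len(diffs)

def pairwise_distances(batch):
    """Euclidean distance between every pair of examples in a batch."""
    return [[math.dist(a, b) for b in batch] for a in batch]

def relation_loss(teacher_batch, student_batch):
    """Relation-based: compare how examples relate to each other, not raw values."""
    dt = pairwise_distances(teacher_batch)
    ds = pairwise_distances(student_batch)
    n = len(dt)
    return sum((dt[i][j] - ds[i][j]) ** 2 for i in range(n) for j in range(n)) / (n * n)

# Hypothetical internal representations for a batch of two examples.
teacher_batch = [[0.0, 0.0], [3.0, 4.0]]
student_batch = [[1.0, 1.0], [4.0, 5.0]]  # same layout, shifted by a constant

print(feature_loss(teacher_batch[1], student_batch[1]))  # non-zero: raw values differ
print(relation_loss(teacher_batch, student_batch))       # zero: distances are preserved
```

The example shows why the choice matters: the student's values differ from the teacher's, so a feature-based score penalizes it, yet the distance between the two examples is identical, so a relation-based score does not.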

Importance of Distillation

Distillation is now a critical part of the enterprise AI world, as over time flagship foundation models have grown to need massive resources to operate.

Instead of relying on models with trillions of parameters, which need city-sized data centers and power supplies, large corporations and government organizations can run smaller distilled models on their own premises.

The widespread availability of these kinds of options makes AI a more democratic technology, and opens up the benefits to a much wider audience all-round. It also opens the door to more private and secure AI applications.

There are other advantages that come from distillation. The smaller models run faster and use significantly less energy.

They also have a much smaller memory footprint and can be trained for specialized tasks.
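A rough back-of-the-envelope calculation shows how large the memory difference can be. The sketch below assumes each parameter is stored as a 16-bit number (2 bytes) and counts only the model weights, ignoring the extra working memory needed while the model runs. The 70-billion and 7-billion parameter sizes are hypothetical figures chosen for illustration, not the sizes of any specific model.

```python
def model_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate memory for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

teacher_gb = model_memory_gb(70e9)  # hypothetical 70-billion-parameter teacher
student_gb = model_memory_gb(7e9)   # hypothetical 7-billion-parameter student

print(f"Teacher: about {teacher_gb:.0f} GB, student: about {student_gb:.0f} GB")
```

Under these assumptions the teacher needs around 140 GB just to hold its weights, which is server territory, while the student needs around 14 GB, which is within reach of a well-equipped home computer.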

These benefits have turned distillation into an essential feature of the modern AI landscape, bridging the gap between hugely expensive foundation models and practical applications that everyday users can benefit from.

Nigel Powell is an author, columnist, and consultant with over 30 years of experience in the tech industry. He produced the weekly Don't Panic technology column in the Sunday Times newspaper for 16 years and is the author of the Sunday Times book of Computer Answers, published by Harper Collins. He has been a technology pundit on Sky Television's Global Village program and a regular contributor to BBC Radio Five's Men's Hour. He's an expert in all things software, security, privacy, mobile, AI, and tech innovation.
