If you want to turn yourself into an AI doll, at least do it safely

Experts share some tips on how to minimize the privacy and security risks of viral AI trends

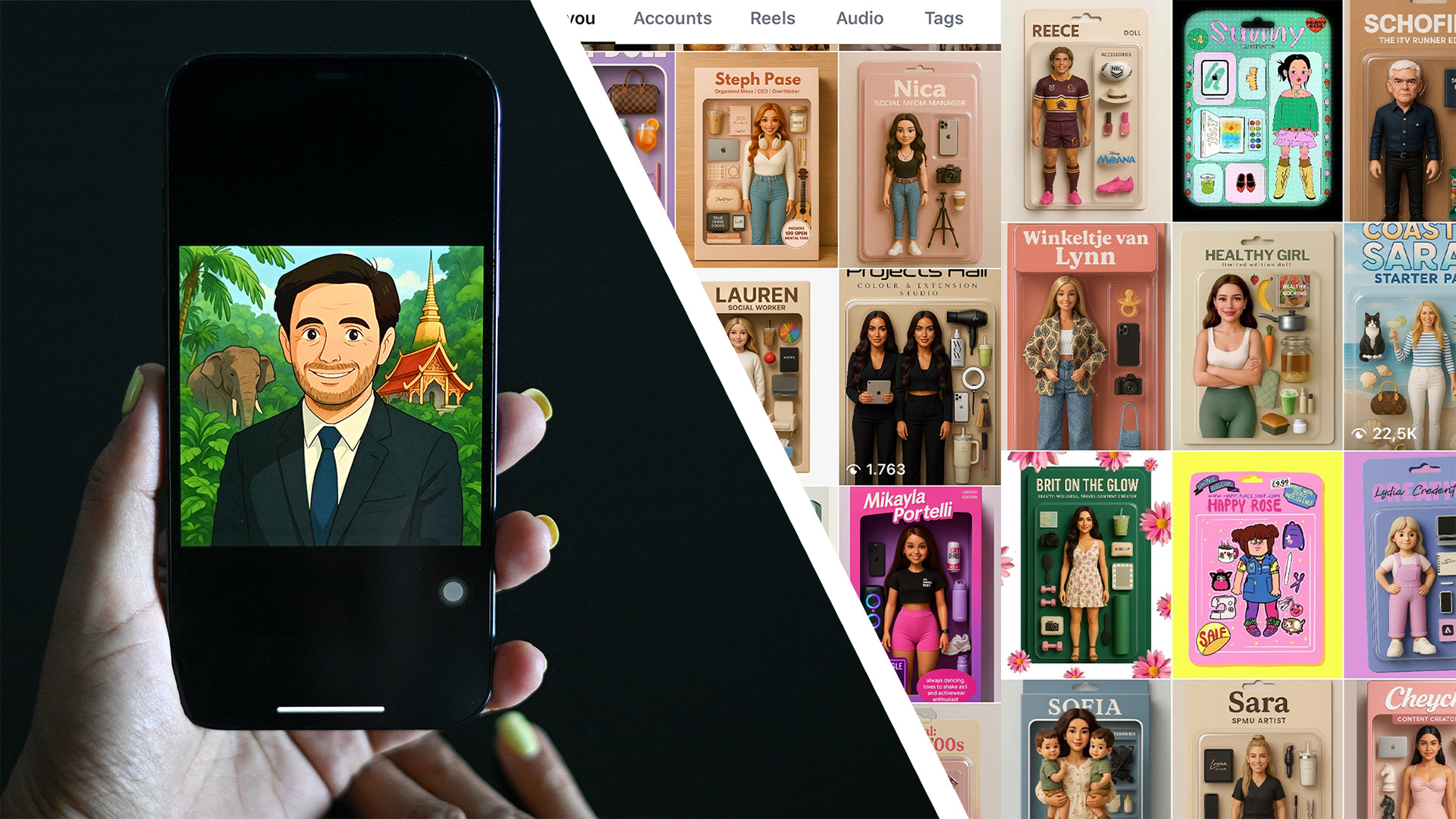

It's official: viral AI-generated images are the internet trend people can't resist right now. Everyone wants a Ghibli-style or AI doll version of themselves, their friends, or their family.

We don't know what the next trendy style will be, but we can safely assume more are on the way: a nightmare for our privacy and security, and a gift to AI companies and hackers.

I understand that most people aren't willing to stop sharing their faces and miss out on the fun of these viral AI trends. Still, it's crucial to at least do it safely. If you don't believe me, here's what experts advise to minimize the risks.

The internet never forgets

The first step to safely interacting with ChatGPT and similar tools is to understand the privacy and security implications that come with them.

Perhaps because of the conversational tone of AI chatbots, it's easy to forget you're talking to a machine that records what you tell it. As a result, you might end up oversharing sensitive details about your life.

"The internet never forgets," Miguel Fornés, a cybersecurity expert at Surfshark, one of the best VPN services on the market, told me. "This information could be used against us in the case of a data leak, like impersonating us, answering our 'security questions,' or preparing a targeted phishing attack or scam."

Iskander Sanchez-Rola, Director of AI & Innovation at Norton, also warned that behind these apparently harmless, Insta-worthy viral AI trends, "there are many very real, hidden risks that many people don’t realize."

For starters, facial recognition systems use biometrics, typically processed by AI, to identify human faces. So, "When you voluntarily upload a photo, which is high-quality facial data, to create an AI-generated action figure or doll, this could be used to train facial recognition systems," Sanchez-Rola explains.

Other risks involve data harvesting. Sensitive metadata, such as your location or the devices you use, can help AI companies build a more detailed digital profile of you to sell to advertisers. And if these high-resolution images are leaked, hackers could use them to create deepfakes or other unauthorized content.

This is why experts recommend not sharing highly personal information that can be used to identify you, such as your full legal name and job, as well as other confidential information like your kids' names, financial details, or medical data.

Similarly, you should refrain from uploading high-resolution pictures or videos of yourself, as these could give away important biometrics that you may use for authentication. Be wary, too, of the surroundings captured in your picture, so you don't reveal sensitive details like where you live or work.

All in all, Eamonn Maguire, Head of Account Security at Proton, told me: "The safest way of engaging with these types of trends would be to use photos that are already in the public domain, with unrealistic information, but that still is beside the point of why these trends are 'interesting' or 'cool'."

Assume your data could be leaked

As we have seen, one of the major risks of engaging in viral AI trends is that your conversation history could be exposed to malicious or unintended parties in a breach.

To stay safe, Fornés at Surfshark says you should always assume your data could be leaked, and prepare accordingly. He advises taking the following steps:

- Protect your account. This includes enabling two-factor authentication (2FA), using a strong and unique password for each service, and avoiding logging in on shared computers.

- Minimize the real data you provide to the AI model. Fornés recommends using nicknames or made-up details instead. You should also consider using a separate identity just for dealing with AI models.

- Use the tool skeptically and responsibly. Whenever possible, use the AI model with chat history and conversational memory features turned off, and in incognito mode.

Know what you're signing up for

The power of generative AI models is that they can learn from both the internet and users' conversations. While web scraping (the practice of collecting information from the web) is subject to strict limitations under GDPR and similar laws, AI companies are free to use all the information you willingly share with their services.

That's why it is crucial to understand how the provider will use your data before you start using its AI chatbot. How? By reading the service's privacy policy before you upload your face or any other personal data.

I know this can be a long and daunting task. Experts suggest starting with what data is collected, to determine whether it includes unnecessarily sensitive information. The next step is to understand how this data will be used and shared, and how long it will be retained.

Fornés from Surfshark recommends being wary of vague phrases like "for business purposes" or "to improve services" that aren't backed up by further detail.

Sanchez-Rola at Norton also suggests checking whether the service permanently deletes or anonymizes your photos and data after you create your AI doll, or stores them for other purposes.

He also recommends reviewing the service's settings and opting out of having your data used for training whenever possible. As with any other application, you should also grant the app only the permissions it strictly needs.

Lastly, "To stay safer while following these trends, try not to jump into new apps without thinking it through. First, check if a service you already trust offers something similar. Trends can be fun, but they might come with long-term privacy risks," said Sanchez-Rola.

Maguire from Proton also suggests using local AI models, which run on your own device, whenever possible. These models are considered more private because your data should never leave your device.

He said: "Ultimately, the only way to keep your data safe is not to share it with AI tools, and to keep it encrypted and out of the hands of big tech."

Chiara is a multimedia journalist committed to covering stories to help promote the rights and denounce the abuses of the digital side of life – wherever cybersecurity, markets, and politics tangle up. She believes an open, uncensored, and private internet is a basic human need and wants to use her knowledge of VPNs to help readers take back control. She writes news, interviews, and analysis on data privacy, online censorship, digital rights, tech policies, and security software, with a special focus on VPNs, for TechRadar and TechRadar Pro. Got a story, tip-off, or something tech-interesting to say? Reach out to chiara.castro@futurenet.com