Nvidia's AI dominance will be the death knell for its GeForce graphics cards

The incentives to make AI Nvidia's primary focus are obvious

If there's one thing that we can say about this generation of Nvidia graphics cards, it's that they haven't all been well-received.

And while several easily slot into our best graphics card list, the numbers don't lie: the GPU market has been rather cold this generation, and sales of both AMD's and Nvidia's latest GPUs haven't been stellar.

This is all the more surprising given how few gamers were able to buy last-gen Nvidia Ampere cards, thanks to the cryptobubble and the cryptominers who bought up all the GPUs to crunch useless math all day long in the hope of earning a token before its value plunged.

Whatever the cause of Nvidia's GPU slump, the company shocked many by coming out of nowhere to briefly join the Trillion Dollar Club, its market value soaring over the last few months even as its consumer GPUs have at times struggled to sell.

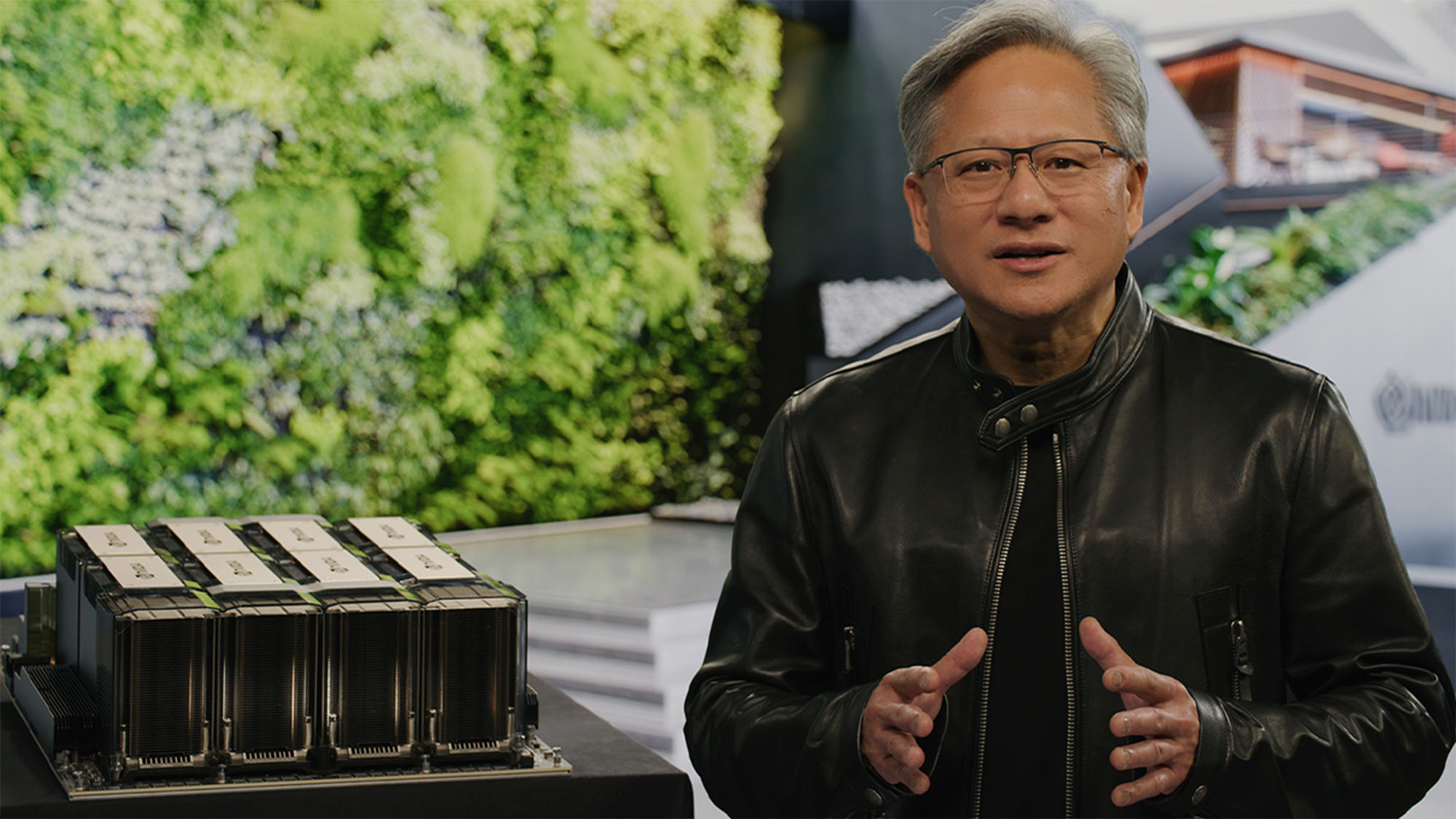

How is this possible? Two letters: AI. See, Nvidia's GPU hardware is especially well-suited to machine learning tasks that power generative AI models like ChatGPT and Midjourney. So as the AI boom has accelerated, demand for Nvidia silicon has likewise shot into the stratosphere, returning record-breaking profits to the company even as its consumer GPU sales have lagged.

AMD and Intel are so far behind Nvidia that it effectively has a monopoly on AI chips, at least until all the stock of Nvidia silicon is bought up and industry giants like Microsoft, Amazon, and Google have to turn to less efficient alternatives for compute power.

These two diverging trends clearly point to a new future for Nvidia, one that relies much less on consumer GPUs, and I wouldn't be surprised if Nvidia exits the consumer graphics card market entirely in the next decade.

Nvidia slows production of its consumer Lovelace GPUs to focus on Hopper

Last week, news broke that Nvidia had essentially put its Lovelace GPUs on ice. That shouldn't surprise anyone, considering how much Nvidia graphics card stock is still out there; many cards are even getting some pretty great deals from retailers, something that was unheard of last generation.

With sales of its GPUs falling way below expectations and retailers apparently ticked off that they now have plenty of GPU stock taking up warehouse space, slowing production of new consumer GPUs makes a lot of sense.

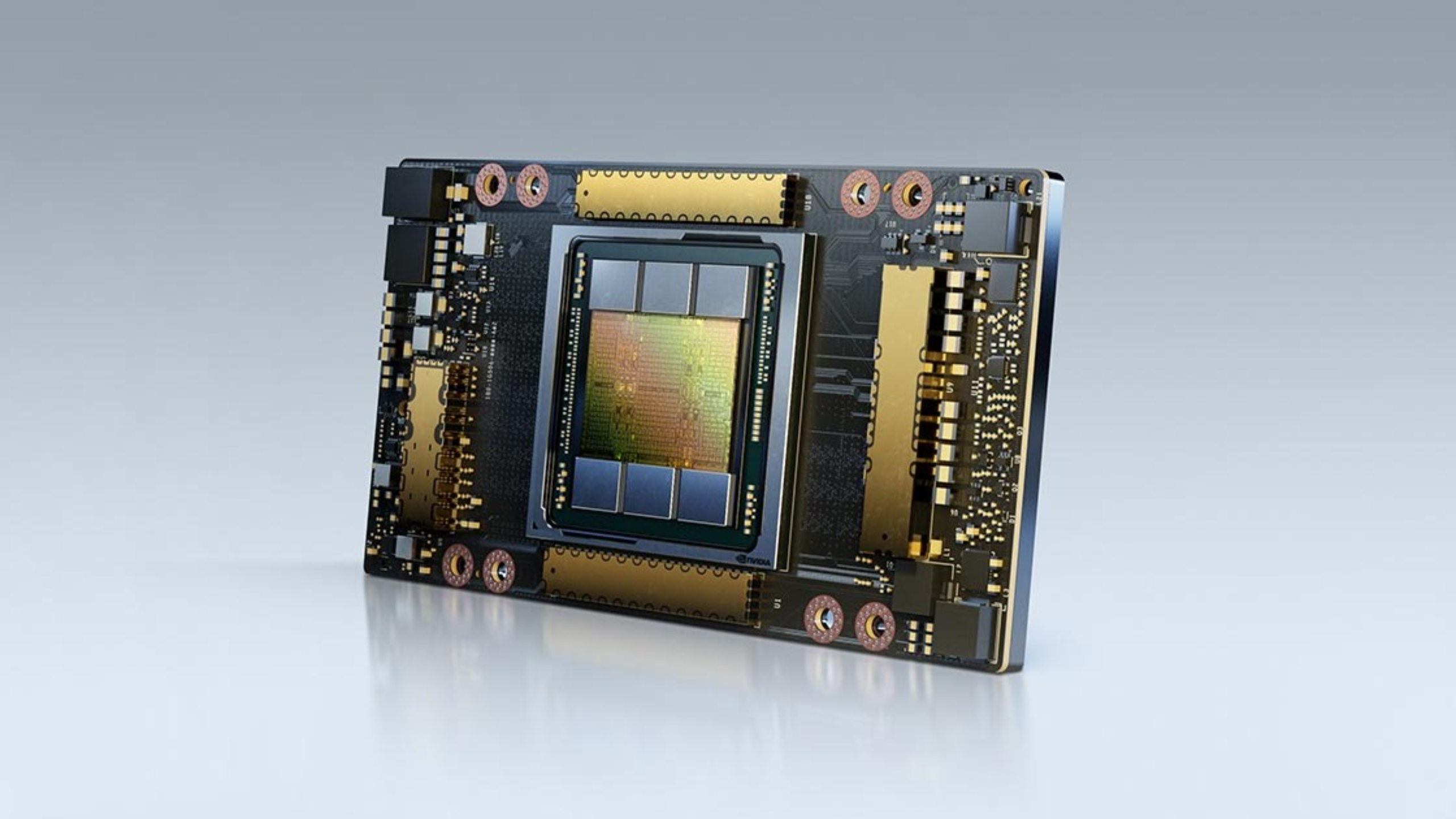

But according to the news reports, there's something else at play here. Specifically, Nvidia was pivoting toward producing more Hopper chips instead. These are the chips that power datacenters and the generative AI models behind ChatGPT, Midjourney, and others.

These are the chips that are in demand right now, and with companies like Google and Amazon coming in with deep pockets and an essentially insatiable need for more advanced GPUs, Nvidia can effectively name its price.

Unfortunately for gamers, unlike the cryptobubble (which produced nothing of real value), generative AI actually makes stuff. Useful stuff. The kind of stuff that can save businesses a lot of time and expense, so the generative AI boom we're seeing now is much more likely to endure, with demand for these models widely expected to keep growing from here.

Will Nvidia eventually pivot to AI hardware entirely?

I've said a number of times now that with the rise of generative AI, the Nvidia GeForce GPU is likely in its final few generations. I usually get bewildered looks when I do, and I totally understand that. Graphics card sales make up more than 80% of Nvidia's revenue, with most of those being consumer graphics cards. How do you pivot away from not just your core business model, but your core product?

Well, it's not hard to fathom if you have shareholders, and Nvidia has millions of them to whom its board is answerable. In a world of scarce silicon and essentially insatiable demand for dedicated AI hardware over the next decade (with Nvidia able to charge significantly higher premiums per chip), it would be corporate malpractice for Nvidia to keep consumer graphics cards as its core product.

The market pressure on Nvidia to shift its focus away from gamers and dedicate its limited volume of silicon to feeding the AI datacenter demand from Google, Microsoft, Amazon, and a few other trillion-dollar companies will become irresistible.

That is where the maximum profit is, and the market will demand that Nvidia leverage its industrial advantage to bring in the greatest possible return to investors. And that's not in making gamer gear, I hate to say.

Whether Nvidia likes it or not, and whether we like it or not, Nvidia will soon become an AI-first company, with a vestigial gaming division dominated, perhaps overwhelmingly, by its cloud gaming service GeForce Now (which is also a faster-growing profit segment than producing gaming GPUs for desktops and laptops).

How many generations of GeForce graphics cards do we have left? If we get an RTX 7000 series, I'd be shocked. Orders for 5000-series silicon are most likely already being negotiated, and chip design work for 6000-series GPUs is probably well underway at this point, so inertia might win the day there, but that's no guarantee.

But beyond that, it's hard to argue in favor of producing a gaming graphics card with a profit margin of 10% to 20% when a Hopper chip could possibly net you a margin of 40% to 50%, and you can sell those chips in bulk to datacenter customers.

There are only so many silicon wafers that TSMC, Samsung, or Intel can produce, and Nvidia isn't going to be their only customer. So if Nvidia can only buy a set amount of silicon, it will need to maximize the profit it makes on each wafer, and gaming graphics cards sold individually aren't where you'll find it.

If we do ever see a 7000-series GPU, it will be as a cloud service option, not something you can buy off a store shelf. If we get RTX 5000 and RTX 6000 series cards, they probably won't get the love and attention from Team Green that past generations have, so how good will they really be in the end? Who knows.

It's not all doom and gloom for gamers though.

Gamers will still have options

Nvidia GeForce cards might be an endangered species in the future, but if you're worried that AI will spell the death knell for PC gaming, fear not.

AMD only just introduced AI accelerators with its RDNA 3 graphics cards, and it is so far behind Nvidia in AI hardware that it's unlikely to catch up any time soon. That means its graphics hardware won't be in demand for the AI boom in the same way (though AI will still take a bite out of supply).

Intel, meanwhile, has its own equivalent of tensor cores in its Arc GPUs, known as XMX (Xe Matrix Extensions) engines, so you might think Intel would be competitive in the AI hardware space. But Intel's Arc GPUs are still on their first generation, and while Intel XeSS really does show how powerful its XMX engines can be, Intel's hardware still isn't as good as Nvidia's, so if anything it will be a distant second.

Plus, Intel has an absolutely massive datacenter division, so it doesn't need Arc GPUs to compete with Nvidia; further development of Intel Xeon can do that. Intel also has its own foundries, so it isn't limited by how many silicon wafers TSMC can produce. If it wants to make both graphics cards and datacenter chips, it absolutely can and will.

In the end, it strikes me as inevitable that by the end of the decade, Nvidia will have transitioned to making AI chips full-time, with its GeForce brand side-stepping into cloud gaming where GeForce graphics tiers will continue, but with far fewer consumer graphics cards (if any). That's just where the money is going to be, and if Nvidia doesn't make that pivot, a shareholder revolt might force the issue.

In its place, the current AMD vs Nvidia rivalry in GPUs will become an AMD vs Intel one, much like the rivalry in processors, with both trying to get their own piece of the AI pie through their datacenter divisions and largely failing to dislodge Nvidia.

I could say that all of this should be taken with a grain of salt, that it's speculation about technology seven years from now (which might as well be a geological epoch given how fast things advance), and all of that is true.

But in the end, 2030 will look radically different from today, thanks to AI. That much I can guarantee, and Nvidia will be a radically different company when all is said and done.

John (He/Him) is the Components Editor here at TechRadar and he is also a programmer, gamer, activist, and Brooklyn College alum currently living in Brooklyn, NY.

Named by the CTA as a CES 2020 Media Trailblazer for his science and technology reporting, John specializes in all areas of computer science, including industry news, hardware reviews, PC gaming, as well as general science writing and the social impact of the tech industry.

You can find him online on Bluesky @johnloeffler.bsky.social