What I want to see from next-gen graphics cards in 2025

More performance is great, but I want to see more in 2025

This generation of GPUs saw some serious changes in the AMD-Nvidia arms race, along with the entrance of a third major player. Despite high prices, Nvidia remained the top GPU manufacturer with its GeForce RTX 40 series, thanks to fantastic performance across all price tiers, class-leading ray tracing, and outstanding resolution upscaling technology.

Meanwhile, rival AMD continued to offer the best bang-for-buck graphics cards with its Radeon RX 7000 series, while spreading its tech across video game consoles and handhelds.

After decades of dominating the CPU market, Intel also made its grand entrance into the discrete GPU market with its Arc series. Although its compatibility with older games and its general performance were initially nowhere near Nvidia's and AMD's, the Arc A750 and A770 served as a respectable starting point for Intel.

As 2024 draws to a close, there has been much speculation about new cards from the new big three. According to rumors, Nvidia will release its RTX 50 series GPUs a few weeks after a potential CES 2025 reveal. AMD senior vice president Jack Huynh, on the other hand, said in a recent interview that the chip maker's current GPU focus is on the mid-range and more affordable segments of the market. Most recently, Intel announced and launched its new Intel Arc B580 to rave reviews (including TechRadar's own Intel Arc B580 review, which awarded it a coveted five stars). Even better, the Intel Arc B570 is slated for release early in 2025.

We're expecting plenty from the new round of GPUs due next year, from VRAM increases to better enthusiast-level performance. Beyond those upgrades, here are some things I want to see from the next-generation cards coming in 2025.

Better ray tracing

Real-time ray-traced lighting, shadows, ambient occlusion, reflections, and global illumination transformed gaming once Nvidia released the first consumer graphics cards with hardware ray tracing capabilities.

Introduced in 2018 with the Nvidia GeForce RTX 2080 and the rest of the RTX 20 series, the technology has gone on to become one of the most important visual features in modern gaming. Since its debut in Battlefield V, ray tracing has become an option in many of the best PC games, such as Cyberpunk 2077 and Alan Wake II. AMD added the tech to its Radeon RX 6000 series in 2020.


Intel's Arc cards debuted in 2022, and although their ray tracing capabilities fell short of Nvidia's and AMD's, the feature was at least present.

One of the technology's biggest issues, though, is how resource-intensive it is: turning it on can absolutely tank frame rates. Current-generation cards handle it better, but there's still much to be done in that area.

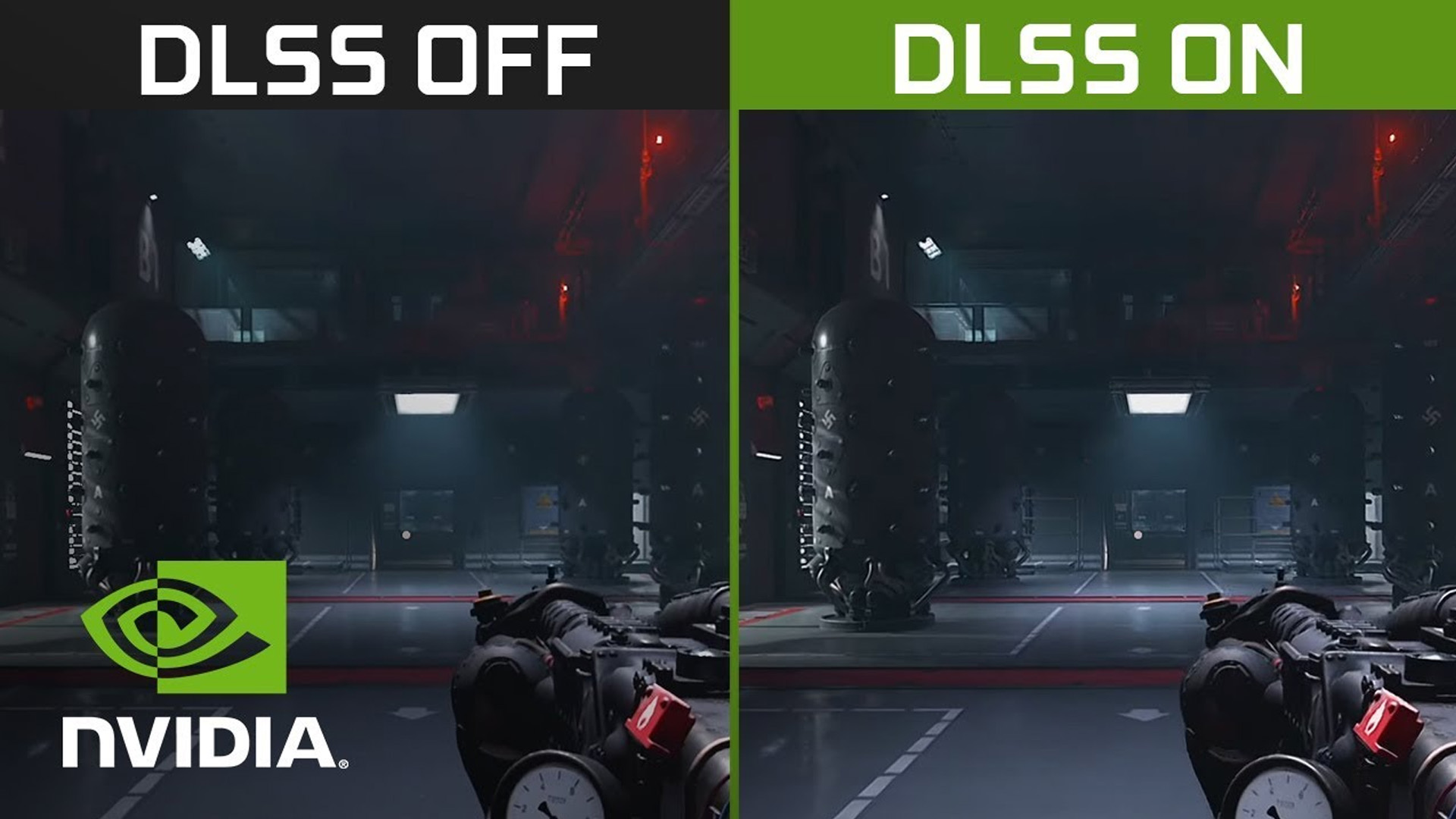

Better AI upscaling

Nvidia's dominance in AI upscaling began with its Deep Learning Super Sampling (DLSS) technology, which was introduced in 2018 with its RTX 20 series GPUs.

DLSS lets games render frames at a lower resolution and then upscale them to a higher one, vastly improving frame rates. Upscaling in general has also become a less resource-intensive way for modern game consoles to reach 4K resolution.

AMD later introduced its own competing upscaling technology, FidelityFX Super Resolution (FSR), and Intel debuted XeSS, which runs on its own graphics cards as well as on competing cards that support DP4a instructions (as many, but not all, modern GPUs do).

Though all three upscaling technologies look fine, they still produce artifacts, blurriness, and ghosting, among other issues that can break immersion. For now, the performance gain makes them worth using, but here's hoping the tech continues to improve with next-gen GPUs so we don't have to sacrifice as much visual quality for those extra frames.

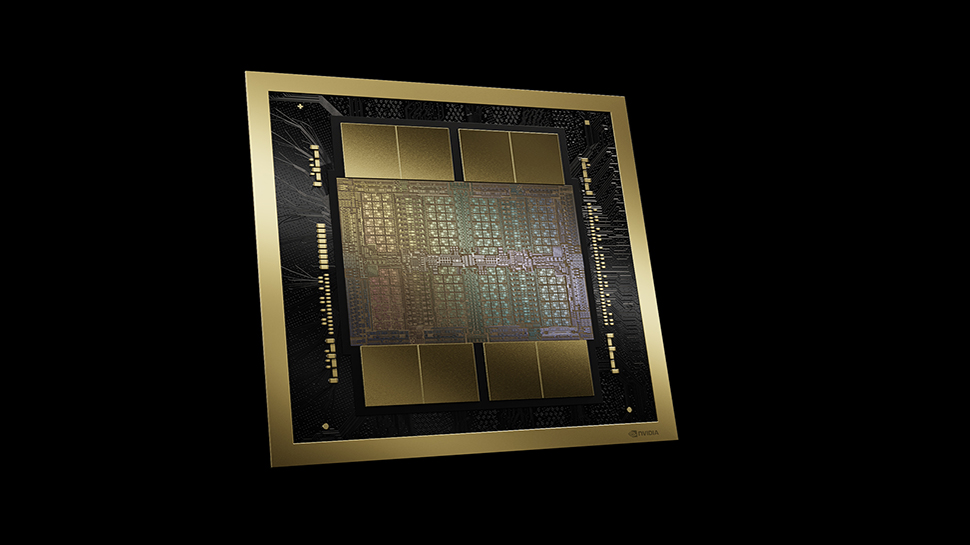

The arrival of disaggregated GPU architectures

Intel's future 'Celestial' GPUs might use what's known as a 'disaggregated GPU architecture,' according to an Intel patent filing. And while it's unlikely to make it into Intel's Battlemage GPUs, this modular design could offer flexibility and improved power efficiency, which would be especially valuable for high-performance graphics cards.

Similar chiplet-based designs have been rumored for AMD's RDNA 4 series and Nvidia's RTX 50 series, though these rumors have largely quieted in recent months.

There are potential challenges, however, including building interconnects between chiplets that are fast enough to maintain performance. If done right, disaggregated GPUs could prove just as revolutionary as multi-core CPUs were in the mid-2000s, and we might get to see some in 2025.

Reduced power consumption

As GPUs get more powerful with each generation, power consumption has crept higher as well (at least at the enthusiast end). Nvidia's upcoming RTX 5090 is rumored to have a thermal design power (TDP) of nearly 600W, up from the RTX 4090's 450W.

Corsair has indicated that the 12V-2x6 connector will likely remain the standard for next-generation GPUs, while AMD and Intel appear likely to stick with conventional 8-pin connectors rather than adopt a similar high-power standard. Hopefully, next-gen GPUs will deliver more performance without the huge power cost.

Ural Garrett is an Inglewood, CA-based journalist and content curator. His byline has been featured in outlets including CNN, MTVNews, Complex, TechRadar, BET, The Hollywood Reporter and more.