Apple's next accessibility features let you control your iPhone and iPad with just your eyes

Eye tracking, Music Haptics, and Live Captions for VisionOS are just a few of the features.

Ahead of Global Accessibility Awareness Day on May 16, 2024, Apple unveiled a number of new accessibility features for the iPhone, iPad, Mac, and Vision Pro. Leading the long list is Eye Tracking, which will let you control your iPhone and iPad by moving your eyes.

Eye Tracking, Music Haptics, Vocal Shortcuts, and Vehicle Motion Cues will arrive on eligible Apple gadgets later this year. These new accessibility features will most likely be released with iOS 18, iPadOS 18, VisionOS 2, and the next version of macOS.

These new accessibility features have become a yearly drop for Apple. The curtain is normally lifted a few weeks before WWDC, aka the Worldwide Developers Conference, which kicks off on June 10, 2024. That should be the event where we see Apple show off its next generation of main operating systems and AI chops.

Eye-Tracking looks seriously impressive

Eye Tracking looks seriously impressive and is a key way to make the iPhone and iPad even more accessible. As noted in the release and captured in a video, you can navigate iPadOS and iOS, open apps, and even control elements with just your eyes. The feature uses the front-facing camera, artificial intelligence, and on-device machine learning throughout the experience.

You can look around the interface and use “Dwell Control” to engage with a button or element. Gestures will also be handled through just eye movement. For example, you can look at Safari, Phone, or another app, hold your gaze on it, and it will open.
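
To make the dwell idea concrete, here is a minimal Python sketch of how dwell-based selection could work in principle. It is not Apple's implementation: the `Target` class, the `detect_dwell` function, the one-second threshold, and the sample coordinates are all hypothetical and chosen only for illustration. The sketch assumes gaze arrives as timestamped screen coordinates and activates a target once the gaze has rested on it long enough.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Target:
    """A hypothetical on-screen element described by its bounding box."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


def hit_test(targets: List[Target], px: float, py: float) -> Optional[str]:
    """Return the name of the first target under the gaze point, if any."""
    for target in targets:
        if target.contains(px, py):
            return target.name
    return None


def detect_dwell(
    samples: List[Tuple[float, float, float]],
    targets: List[Target],
    dwell_seconds: float = 1.0,  # assumed threshold, not Apple's value
) -> List[Tuple[float, str]]:
    """Return (time, target name) for each dwell activation.

    `samples` is a time-ordered list of (timestamp, x, y) gaze points.
    A target activates once per continuous look, after the gaze has
    stayed on it for at least `dwell_seconds`.
    """
    activations = []
    current = None   # target currently under the gaze
    started = 0.0    # when the gaze first landed on `current`
    fired = False    # whether `current` already activated
    for t, px, py in samples:
        name = hit_test(targets, px, py)
        if name != current:
            # Gaze moved to a different target (or off all targets): restart.
            current, started, fired = name, t, False
        elif name is not None and not fired and t - started >= dwell_seconds:
            activations.append((t, name))
            fired = True
    return activations


targets = [Target("Safari", 0, 0, 100, 100), Target("Phone", 100, 0, 100, 100)]
samples = [(0.0, 10, 10), (0.5, 20, 20), (1.0, 30, 30), (1.5, 40, 40),
           (1.6, 150, 50), (2.0, 150, 50), (2.2, 300, 300)]
print(detect_dwell(samples, targets))
```

In this made-up trace, the gaze rests on the Safari box for a full second, so it activates, while the brief glance at Phone ends before the threshold and is ignored.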

Most critically, all setup and usage data is kept on the device, and you won’t need an accessory to use Eye Tracking, so you’ll be set with just your iPhone or iPad. It’s designed for people with physical disabilities and builds upon other accessible ways to control an iPhone or iPad.

Vocal Shortcuts, Music Haptics, and Live Captions on Vision Pro

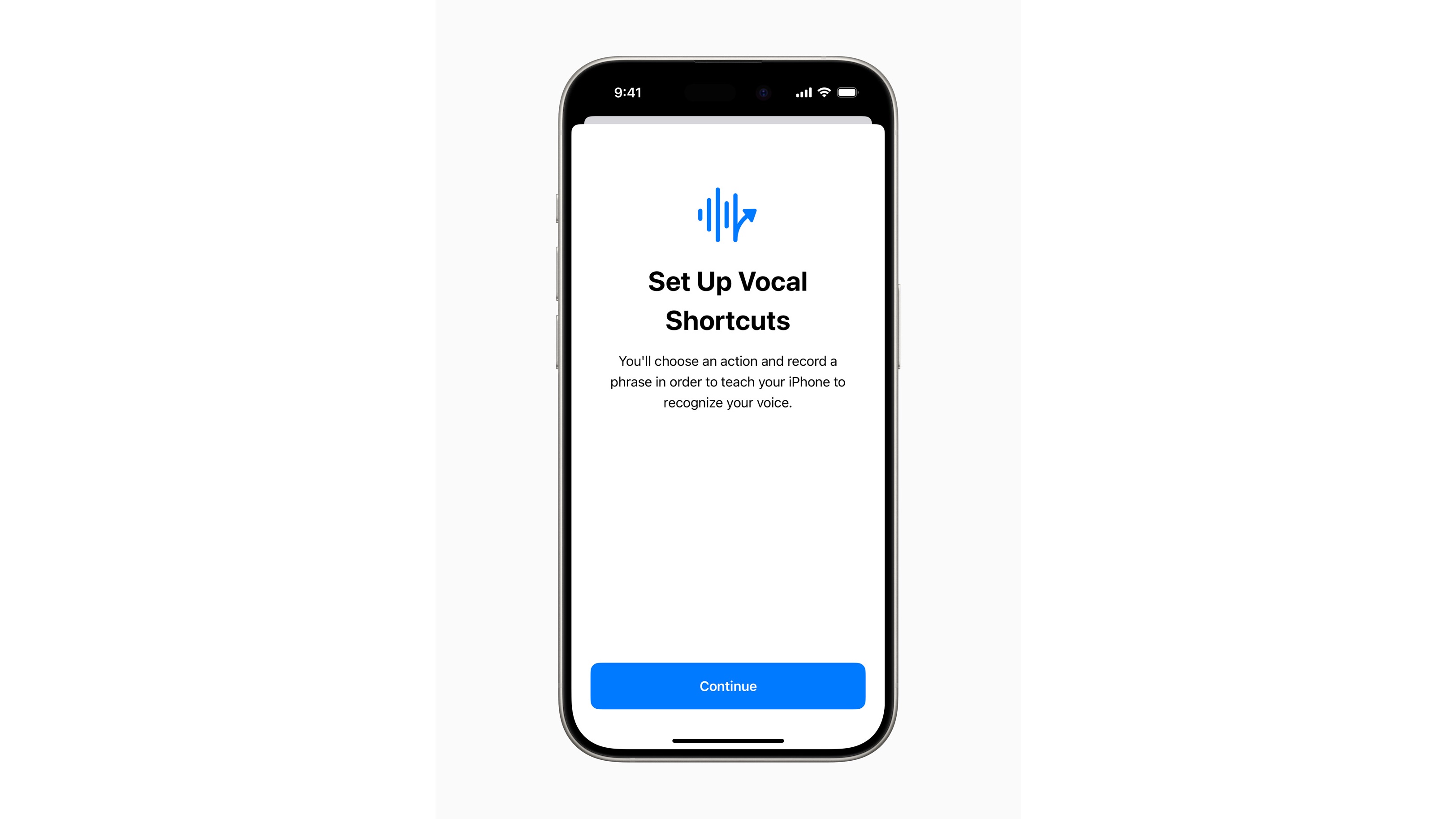

Another new accessibility feature is Vocal Shortcuts, designed for iPad and iPhone users with ALS (amyotrophic lateral sclerosis), cerebral palsy, stroke, or “acquired or progressive conditions that affect speech.” This will let you set up a custom sound that Siri can learn and identify to launch a specific shortcut or run through a task. It lives alongside Listen for Atypical Speech, which is designed for the same users and opens up speech recognition to a wider range of speech.

These two features build on ones introduced in iOS 17, so it’s great to see Apple continue to innovate. With Listen for Atypical Speech, specifically, Apple is using artificial intelligence to learn and recognize different types of speech.

Music Haptics on the iPhone is designed to let users who are deaf or hard of hearing experience music. The built-in Taptic Engine, which powers the iPhone’s haptics, will play different vibrations, like taps and textures, that follow a song's audio. At launch, it will work across “millions of songs” within Apple Music, and there will be an API that developers can use to make music from other sources accessible.

Additionally, Apple previewed a few other features and updates. Vehicle Motion Cues will be available on iPhone and iPad and uses animated dots on the screen that change as vehicle motion is detected. It's designed to help reduce motion sickness without blocking whatever you view on the screen.

One major addition arriving for VisionOS – aka the software that powers Apple Vision Pro – will be Live Captions across the entire system. This will put captions for spoken dialogue in FaceTime conversations and for audio from apps right in front of you. Apple’s release notes that it was designed for users who are deaf or hard of hearing, but like all accessibility features, it can be found in Settings.

Since this is Live Captions on an Apple Vision Pro, you can move the window containing the captions around and adjust its size like any other window. Vision accessibility within VisionOS will also gain Reduce Transparency, Smart Invert, and Dim Flashing Lights.

Regarding when these will ship, Apple notes in the release that the “new accessibility features [are] coming later this year.” We’ll keep a close eye on this and imagine that these will ship with the next generation of operating systems, like iOS 18 and iPadOS 18, meaning folks with a developer account may be able to test these features in forthcoming beta releases.

A few of these features are powered by on-device machine learning and artificial intelligence, and accessibility is just one area where Apple believes AI has the potential to make an impact. We’ll likely hear the technology giant share more of its thoughts around AI and consumer-ready features at WWDC 2024.

Jacob Krol is the US Managing Editor, News for TechRadar. He’s been writing about technology since he was 14 when he started his own tech blog. Since then Jacob has worked for a plethora of publications including CNN Underscored, TheStreet, Parade, Men’s Journal, Mashable, CNET, and CNBC among others.

He specializes in covering companies like Apple, Samsung, and Google and going hands-on with mobile devices, smart home gadgets, TVs, and wearables. In his spare time, you can find Jacob listening to Bruce Springsteen, building a Lego set, or binge-watching the latest from Disney, Marvel, or Star Wars.