This is how Apple is telling developers to build apps for Vision Pro and spatial computing

Less is more

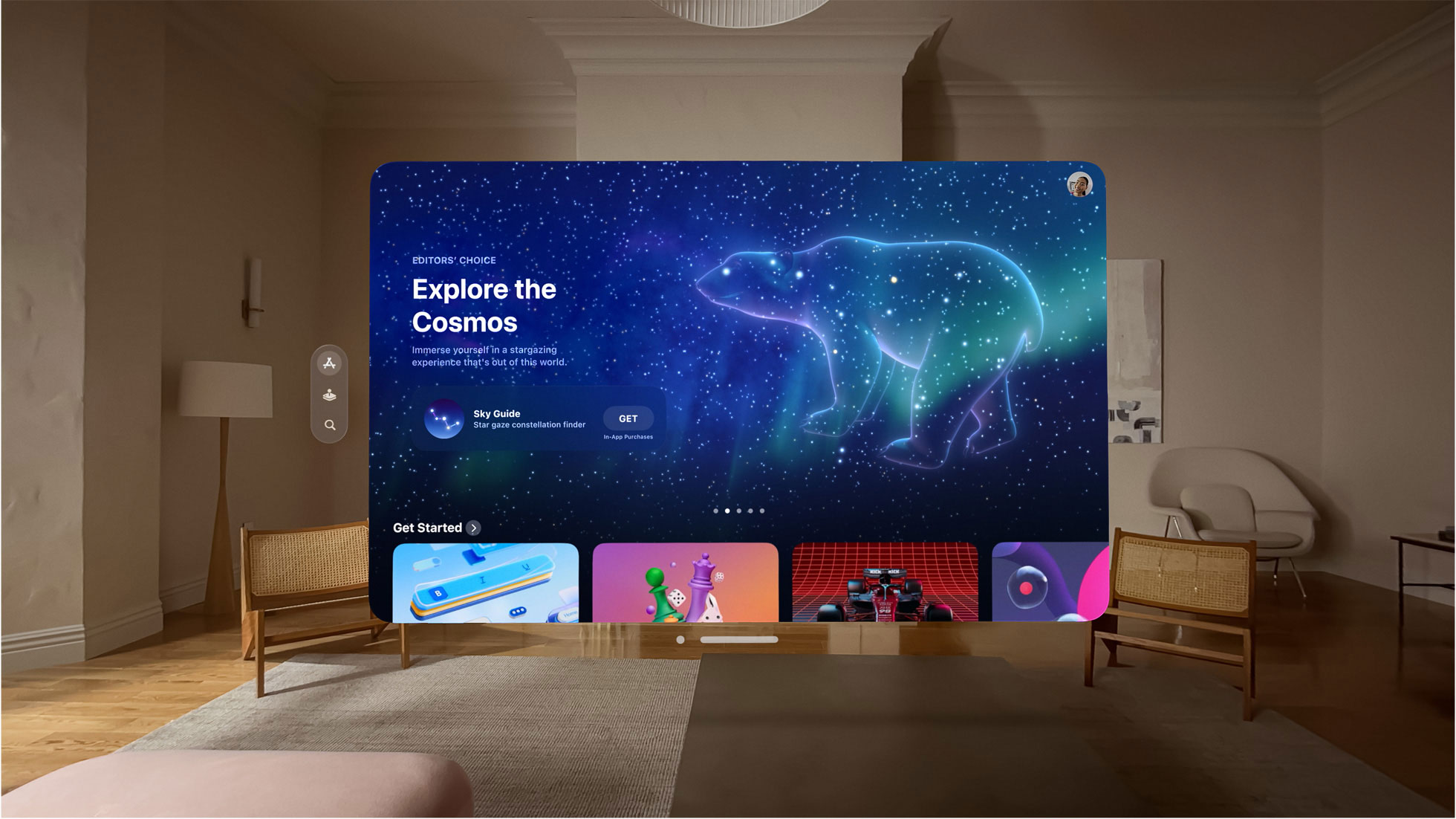

If you want to understand what your Apple Vision Pro spatial computing experience will be like, you need to look at the upcoming apps. Oh, right, there are none yet. That leaves us looking to developers and, no surprise, even they have questions about what it means to develop for Apple's newest computing and digital experience platform.

A few weeks ago, I asked about a dozen developers if they were excited about building apps for the Vision Pro. Most said yes and some were even willing to imagine what those app experiences might be like.

We're still months from the consumer release of Apple's mixed-reality headset, and while I've tried it a couple of times, a true understanding of what it will be like to play, communicate, explore, and even work in the headset remains elusive. My conversation with those developers reminded me of that. None had a concrete idea of exactly how to compute inside a Vision Pro or how they might build applications for the new visionOS platform.

Developers, it seems, share several common questions about building apps for Vision Pro, and Apple answers all of them in a recent Apple Developer article titled "Q&A: Spatial design for visionOS." The post is based on a Q&A session that Apple's design team held with developers in June at WWDC23, where Apple first unveiled Vision Pro and visionOS.

Even though I'm not a developer, I could relate to all of the developers' burning visionOS questions, and I found Apple's answers illuminating and, in some cases, surprising.

Start slow

Vision Pro is a mixed-reality headset that can deliver anything from a nearly complete passthrough view, which places AR elements in your real world, to complete immersion. The latter, which I experienced, is impressive, especially in the way it can put a virtual version of your hands (but not your knees) inside the experience.

For developers, though, knowing where in the spectrum of immersion to place users can be daunting. Apple does not recommend that these apps fully immerse users, at least at the start.

"We generally recommend not placing people into a fully immersive experience right away – it’s better to make sure they’re oriented in your app before transporting them somewhere else," says Apple in the post.

Apple also recommends that visionOS developers create a ground plane to connect their apps with the real world. Indeed, most of the time you'll probably be using Vision Pro in passthrough or mixed reality mode, which means that having a connection to the real floor under your feet will help, well, ground the app and keep it from feeling disorienting.

The moment

There was a moment in my first Vision Pro experience when a virtual butterfly (that looked real) fluttered from a windowed forest space onto my finger. It was stunning and unforgettable.

It appears Apple wants developers to think about such moments in their apps. No, Apple's not recommending that everyone add a butterfly (although that would be wonderful). Instead, Apple tells developers to think about how their apps can shine in spatial computing, which is, as Apple puts it, "an experience that isn’t bound by a screen."

In one demo I saw, the key moment was clearly when a dinosaur emerged from the wall in front of me, but Apple notes in the post that key moments can be something as simple as adding a focus mode that includes spatial audio in a writing app.

3D challenges

In Vision Pro, you're no longer computing on a flat plane. Everything is in 3D and that requires a new way of working with display elements. Apple solves this in visionOS by applying gaze and gesture control. In the post, Apple reminds developers to think about that 3D space when developing apps.

"Things can get more complex when you’re designing elements in 3D, like having nearby controls for a faraway element," explained Apple's designers.

In general, developing apps for visionOS will be more complex than building for a flat screen. Instead of just thinking about what happens when someone mouses over an on-screen element, developers must think about what happens when someone wearing Vision Pro looks at it. I remember being impressed by how every Vision Pro experience always knew exactly where I was looking and how buttons and windows changed based on my gaze.

Comfort is the thing

Spatial computing involves more of your body than traditional computing. First of all, you're wearing a headset. Second, you're looking around to see wraparound environments and apps. Finally, you're using your hands and fingers to control and engage with the interface.

Apple, though, recommends that developers not spread their main content all over what could be a 360-degree window.

"Comfort should guide experiences. We recommend keeping your main content in the field of view, so people don't need to move their neck and body too much. The more centered the content is in the field of view, the more comfortable it is for the eyes," wrote Apple designers in the post.

I do recall that while some of my Vision Pro experiences felt immersive, I wasn't whipping my head back and forth to find controls and track action.

A sound decision

Key to my Vision Pro experience was the sound, which uses spatial audio to create a 360-degree soundstage. But it's not just about the immersive experience. Apparently, sound can be used to help connect Vision Pro wearers to their spatial computing experiences.

In the post, Apple reminds developers who may not have thought much about sound before to use audio cues in their apps, noting that "an audio cue helps [users] recognize and confirm their actions."

I don't know exactly what it will be like to live and work in the world of spatial computing, but Apple's guidance is quickly bringing things into focus.

A 38-year industry veteran and award-winning journalist, Lance has covered technology since PCs were the size of suitcases and “on line” meant “waiting.” He’s a former Lifewire Editor-in-Chief, Mashable Editor-in-Chief, and, before that, Editor-in-Chief of PCMag.com and Senior Vice President of Content for Ziff Davis, Inc. He also wrote a popular weekly tech column for Medium called The Upgrade.

Lance Ulanoff makes frequent appearances on national, international, and local news programs including Live with Kelly and Mark, the Today Show, Good Morning America, CNBC, CNN, and the BBC.