This cool secret iPhone feature lets you video chat in the dark

Memoji has a secret weapon

The front camera on your iPhone packs a secret weapon. The same tool that all of the best iPhones use to create Memoji can also be used as a night vision camera for video chats. Even when your camera preview looks completely dark, you can activate the Memoji and your caller will see you perfectly … albeit with the filter applied. If you know how to set up Memoji, you now have night vision.

The front-facing camera on the iPhone, ever since the iPhone X with its forehead notch, contains what Apple calls a TrueDepth sensor. The TrueDepth sensor is very similar to the technology that Microsoft used on the Xbox Kinect. It involves an infrared projector and a corresponding sensor camera.

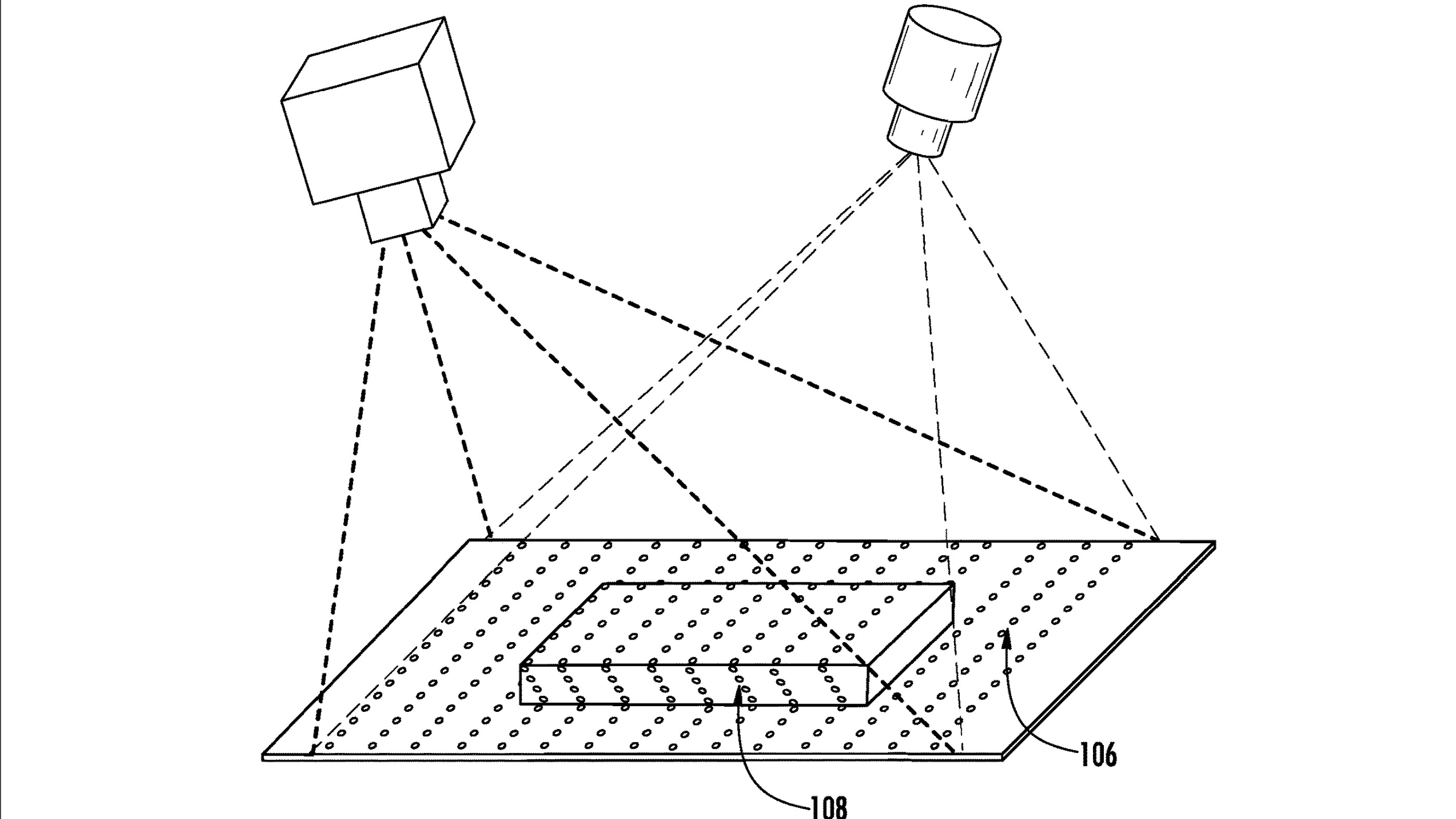

The iPhone TrueDepth module sprays an array of invisible dots all over your face. If you wear glasses capable of seeing into the infrared spectrum, you can actually see the dots and the pattern that they make. The camera on the iPhone can detect and read the dots, and it analyzes the pattern to create a 3D model of your face and head.
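For the curious, here is a rough Python sketch of how a dot pattern can become depth. Apple hasn't published how TrueDepth does this, so the sketch assumes the textbook structured-light approach: the farther a dot lands from where the camera expects it, the closer the surface is. The function name and every number below are made up for illustration.

```python
def depth_from_dot_shift(focal_length_px, baseline_mm, shift_px):
    """Estimate distance (in mm) to a surface from how far a projected dot shifted.

    Textbook triangulation: depth = focal length * baseline / shift.
    This is an illustrative assumption, not Apple's published method.
    """
    if shift_px <= 0:
        raise ValueError("shift_px must be positive")
    return focal_length_px * baseline_mm / shift_px


# Hypothetical values: a 600-pixel focal length and a 25 mm gap
# between the dot projector and the infrared camera.
nose_mm = depth_from_dot_shift(600, 25, 50)  # larger shift, closer surface
ear_mm = depth_from_dot_shift(600, 25, 40)   # smaller shift, farther surface

print(nose_mm, ear_mm)
```

Repeat that for thousands of dots and you get a cloud of distances, which is the raw material for a 3D model of a face.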

Our friends at PatentlyApple have images from Apple’s patent on the TrueDepth technology, and you can see in the diagram how the IR dot projection looks.

This is how face unlock works on the iPhone. It uses the infrared camera to map your face, and it matches this map with the original face unlock map it created. This is why a photo of your face shouldn’t fool the iPhone. It can recognize depth, so a 2D image shouldn’t work.
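To see why depth defeats a photograph, consider this toy Python check. It is not Apple's algorithm, which is far more sophisticated and not public. The function name, the 10 mm threshold, and the sample readings are all hypothetical.

```python
def looks_flat(depths_mm, min_relief_mm=10.0):
    """Return True if the depth samples show too little relief to be a real face.

    Toy heuristic only: a real face has a nose that sits well in front of
    the ears, while a printed photo held square to the camera is nearly flat.
    """
    return max(depths_mm) - min(depths_mm) < min_relief_mm


# Hypothetical depth readings in millimeters.
real_face = [300.0, 312.0, 325.0, 340.0, 375.0]
printed_photo = [350.0, 350.5, 351.0, 350.2, 350.8]

print(looks_flat(real_face))      # False
print(looks_flat(printed_photo))  # True
```

A real system would compare the full 3D shape against the enrolled map rather than a single spread of values, but the principle is the same: a flat image has no depth to match.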

Because the camera is infrared, it works in total darkness. In a black room, the iPhone front camera won’t be able to take a good picture, but it will be able to turn your head into a unicorn or a koala bear or a talking pile of … well, you can play with the Animoji and the Memoji to find out yourself.

How to get night vision in FaceTime using TrueDepth

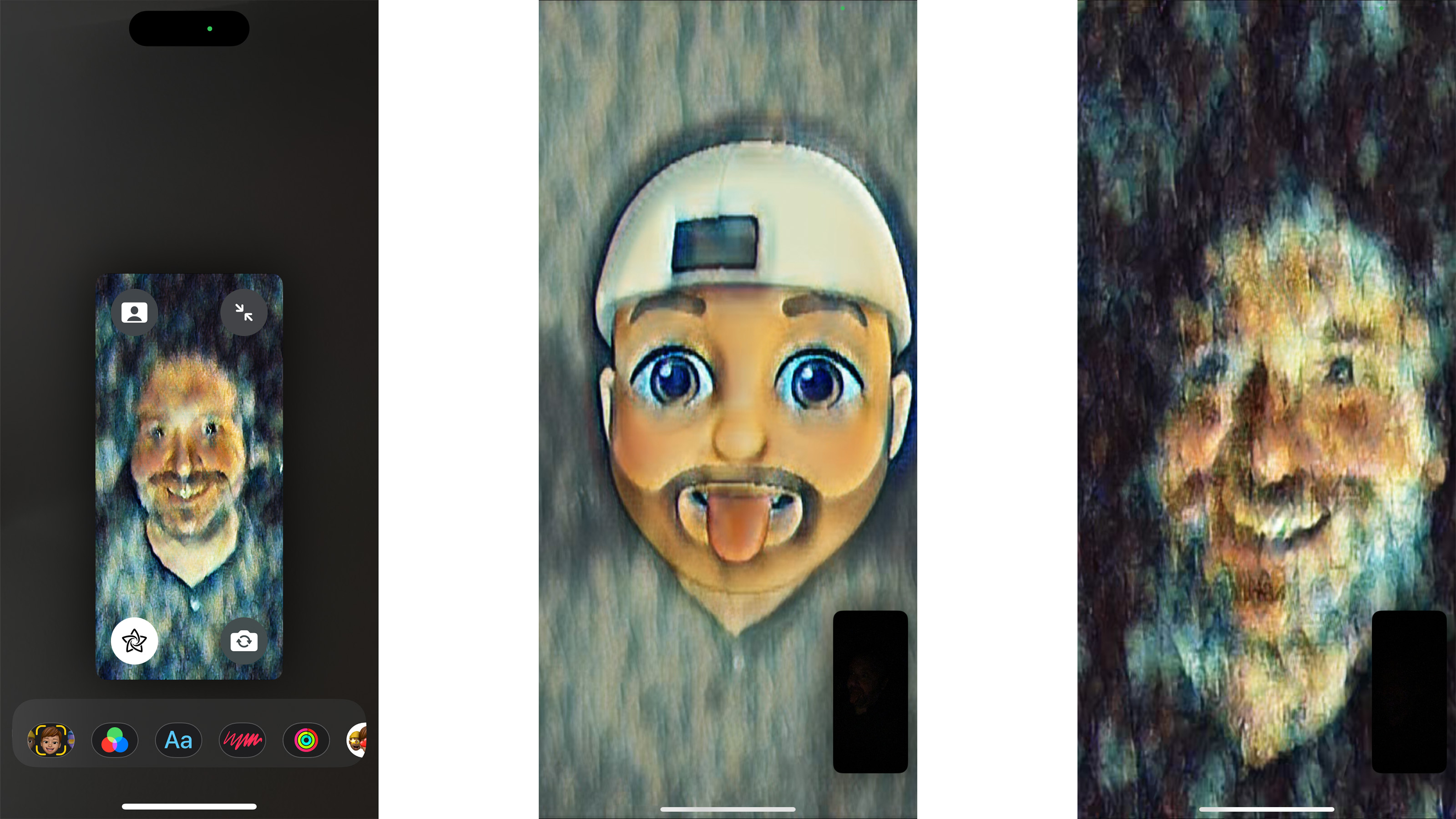

If you want to use TrueDepth for night vision in a FaceTime call, first start your call. Your caller will be fullscreen, and your camera view will appear in a smaller window. Tap on that smaller window.

Tap the star icon in the bottom left corner of the smaller window. This opens the filter menu. You can choose to become a Memoji by tapping the first icon. If you haven’t created your own Memoji, now is a good time.

If you’d like a more natural look, tap the icon with green, red, and blue circles to open the photo filters. The “watercolor” filter uses the TrueDepth camera. Even in a completely dark room, you’ll be able to share a watercolor version of yourself, in color or black and white.

Will this work on my phone?

The TrueDepth camera is unique to Apple. If you have an iPhone with a notch, meaning the iPhone X or newer, or an iPhone 14 Pro or 14 Pro Max with the Dynamic Island, you have the TrueDepth sensor. Your results will vary depending on how new your iPhone model is.

We tried this night vision hack with an iPhone 14 Pro and an older iPhone 11. The iPhone 14 Pro worked splendidly and provided the screenshots you see in this story. The iPhone 11 could barely make out our face, but even just a slight bit of illumination helped and resulted in a successful filter.

Most other phone companies that offer a face unlock feature use simpler photo recognition. The fancier versions of this feature will look to see if your face is moving, or if your eyes blink, to make sure the camera sees a real human and not a photograph.

Among the major phone makers, Apple stands nearly alone in using infrared. Huawei and Xiaomi have used similar technology on phones, but Samsung and Google use a more basic camera setup for face unlock on phones like the Galaxy S22 and the Pixel 7.

More secrets in the iPhone camera

It would be cool to see Apple embrace this technology further and offer filters that give a more accurate picture of us in the dark, especially for video chat. There is also another secret weapon hiding on the back of the phone that may have similar implications.

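The rear camera on iPhone Pro models, starting with the iPhone 12 Pro, includes a LiDAR scanner, which works like radar but with pulses of laser light instead of radio waves. The scanner times how long each pulse takes to bounce back. Using this so-called “time of flight” data, the iPhone can create a virtual map of your surroundings.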
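The math behind “time of flight” is simple enough to sketch in a few lines of Python. Light travels out, hits something, and comes back, so the distance is the speed of light multiplied by half the round-trip time. The function name and the 20-nanosecond example are our own illustration, not anything from Apple.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # meters per second, in a vacuum


def distance_from_round_trip(round_trip_seconds):
    """Convert a light pulse's round-trip time into a one-way distance in meters."""
    # Halve the trip because the pulse covers the distance twice: out and back.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


# Hypothetical reading: a pulse that returns after 20 nanoseconds
# bounced off something roughly 3 meters away.
wall_m = distance_from_round_trip(20e-9)
print(round(wall_m, 2))
```

The timing involved is tiny, which is why this kind of sensor needs dedicated hardware rather than an ordinary camera.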

So far, Apple has used LiDAR almost entirely for augmented reality (AR) in games and apps, and to assist the cameras with autofocus. The TrueDepth sensor is showing us that there is much more potential for the IR tech on board, and we imagine that Apple has only begun to unlock the LiDAR capabilities on the iPhone.

Future Apple AR products, especially an Apple glasses wearable device, would likely rely heavily on both of these technologies. It will be interesting to see, literally, how they fare in the dark.

Phil Berne is a preeminent voice in consumer electronics reviews, starting more than 20 years ago at eTown.com. Phil has written for Engadget, The Verge, PC Mag, Digital Trends, Slashgear, TechRadar, AndroidCentral, and was Editor-in-Chief of the sadly-defunct infoSync. Phil holds an entirely useful M.A. in Cultural Theory from Carnegie Mellon University. He sang in numerous college a cappella groups.

Phil did a stint at Samsung Mobile, leading reviews for the PR team and writing crisis communications until he left in 2017. He worked at an Apple Store near Boston, MA, at the height of iPod popularity. Phil is certified in Google AI Essentials. He has a High School English teaching license (and years of teaching experience) and is a Red Cross certified Lifeguard. His passion is the democratizing power of mobile technology. Before AI came along he was totally sure the next big thing would be something we wear on our faces.