What is the Dynamic Island on the iPhone 14 Pro and what can it do?

More than meets the island

We’d heard that the Apple iPhone 14 Pro might lose the notch at the top of the screen in favor of a hole-punch arrangement, similar to what we see on most Android phones. The standard iPhone 14 still uses the notch design. The iPhone 14 Pro, however, not only replaces it with a cutout that Apple calls the Dynamic Island, but also fully embraces the void created by the holes in the screen, making them an integral part of the new iOS 16 interface.

The notch first appeared on the iPhone X, as Apple and other manufacturers tried to shrink the bezels on their flagship smartphones while still finding room for the cameras and sensors that used to clutter the front. Apple isn’t just squeezing a selfie camera up there. There is also a proximity sensor, which phones use to detect when they are close to your face or in your pocket so they can wake or sleep the touchscreen accordingly.

Apple also has a special depth detector, the TrueDepth camera, that can determine how far away something is from the front of the phone. This is especially useful in AR applications like Snapchat filters, because TrueDepth helps the iPhone build an accurate 3D model of your face. Apple also relies on TrueDepth for its Face ID unlocking feature.

That’s a lot of technology to hide. Some manufacturers have managed to stuff almost everything behind the display, even a selfie camera and a fingerprint reader. On its flagship iPhones, Apple has skipped the fingerprint reader ever since it ditched the home button that housed it. The notch always seemed like an iconic, if controversial, design choice.

What lives on the Dynamic Island

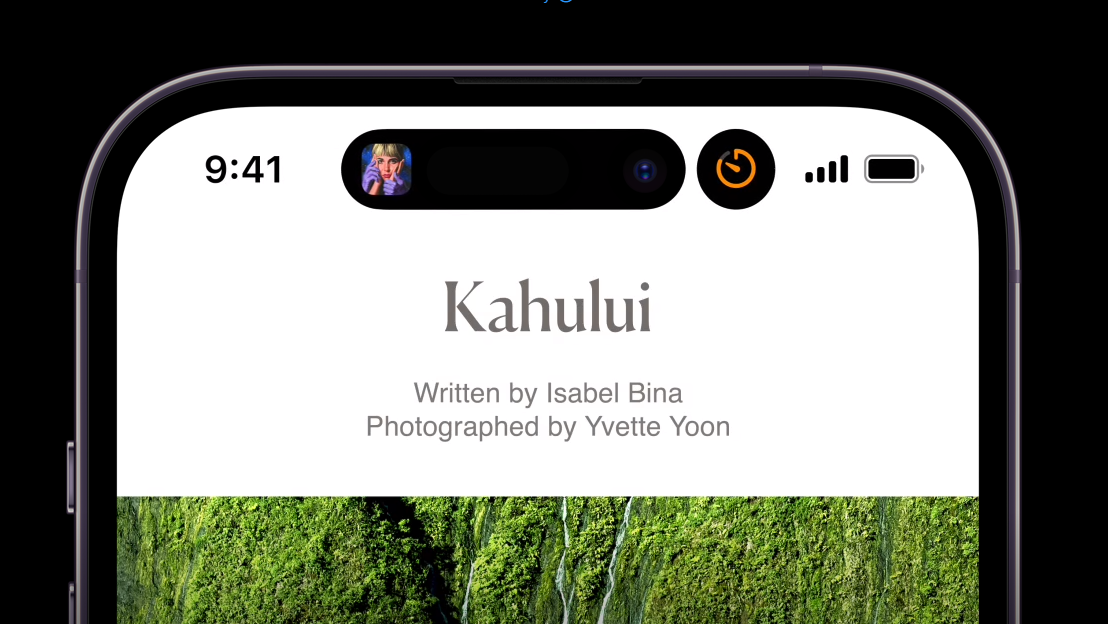

When we heard that Apple would be using a more standard hole-in-the-screen design, we believed it would make the iPhone easy to confuse with Android phones. Apple must have felt the same way, because it has done everything possible to make sure its own Dynamic Island hole punch stands out.

The Dynamic Island area has three hardware components: the 12MP front camera, the infrared TrueDepth system, and the proximity sensor, which now sits behind the display. Across the entire iPhone 14 line, that front camera is the first iPhone selfie camera with autofocus. The TrueDepth system’s infrared projector throws invisible dots all over a subject, and an infrared camera reads those dots and uses them to form a 3D image with depth.

Making the Dynamic Island a destination

Most other manufacturers ignore their cutouts, treating them like unwanted growths or deformities. Apple has made the ingenious play of embracing the cutout and turning it into a vibrant part of the phone’s operating system. The Dynamic Island will deliver alerts and notifications, and it will shake, rattle, and roll while the phone is in the midst of activities. When an alarm goes off, an alarm bell will shake on the island. When music is playing, you’ll see musical notes. You get the idea.


What’s more, you’ll be able to interact with the Dynamic Island, which expands when you need it. Apple says the Dynamic Island will respond no matter where you touch it, so don’t worry about tapping the camera instead of the screen. When you see a flight alert, for instance, you can tap the Dynamic Island and it will expand into a much more detailed flight path display.

Apple has opened up the Dynamic Island to third-party apps, so it won’t just be Apple’s own apps taking advantage. For now, you’ll be able to read messages, respond to AirDrop requests, and even follow turn-by-turn navigation just by glancing at or tapping the Dynamic Island.

You can catch up on everything Apple introduced at its Far Out event.

Phil Berne is a preeminent voice in consumer electronics reviews, starting more than 20 years ago at eTown.com. Phil has written for Engadget, The Verge, PCMag, Digital Trends, SlashGear, TechRadar, and Android Central, and was Editor-in-Chief of the sadly defunct infoSync. Phil holds an entirely useful M.A. in Cultural Theory from Carnegie Mellon University. He sang in numerous college a cappella groups.

Phil did a stint at Samsung Mobile, leading reviews for the PR team and writing crisis communications until he left in 2017. He worked at an Apple Store near Boston, MA, at the height of iPod popularity. Phil is certified in Google AI Essentials. He has a high school English teaching license (and years of teaching experience) and is a Red Cross certified lifeguard. His passion is the democratizing power of mobile technology. Before AI came along, he was totally sure the next big thing would be something we wear on our faces.