Fighting fake news: how Google, Facebook and others are trying to stop it

The fight against fake news is on, but is it working?

Tech giants like Google, Facebook and Wikipedia are undertaking a concerted effort to crack down on the dissemination of fake news. While some cry “fake news” at any story they disagree with, Facebook defines fake news as “hoaxes shared by spammers” for personal and monetary reasons.

The term “fake news” also encompasses falsehoods disguised to look like legitimate news and overtly biased reporting meant to sway voters during elections.

Although fake news has always existed, Google’s preeminence in search and Facebook’s position as a dominant content distribution platform have changed the way we consume news.

In the past, most people got their news from trusted newspapers with clear ethical guidelines that set them apart from dubious sites on the web. Today, users more often consume news shared through social networks as part of a curated feed, often with little vetting, forming an echo chamber effect of confirmation bias.

We’ve already seen some of the results of proliferating fake news in the 2016 US presidential election and Britain’s Brexit vote. Experts found that in both cases, state-sponsored fake news campaigns attempted to sway voters toward more populist candidates. It’s unclear, however, whether these efforts had any material effect on the results.

The issue of fake news is far from over, but with increased awareness of what constitutes fake news and its potential impacts in the real world, tech companies, journalists and citizens are feeling the pressure to combat its spread.

How tech companies are fighting fake news

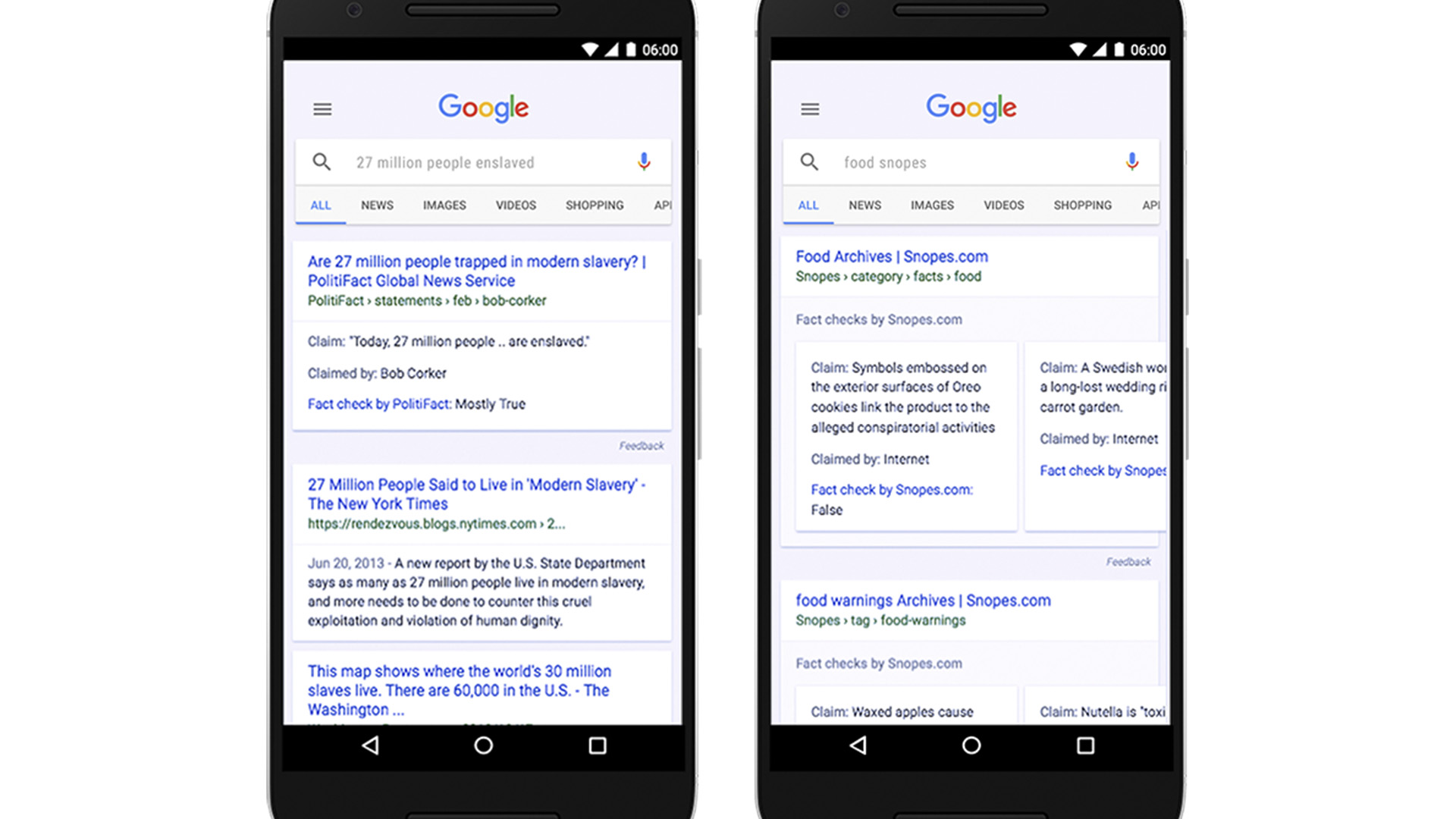

To address fake news, Google announced plans last month to improve the quality and reliability of its search results. This came after the search giant was criticized for showing results for sites denying the Holocaust.

One of the methods Google is employing is using human editors to evaluate the quality of search results. The ultimate goal of this effort is to train the company’s search algorithms to spot low quality and false content.

Another tool Google has adopted is user reporting for its Autocomplete feature, adding another human element into the mix. Users can now report when Autocomplete results are offensive, misleading or false. Google is also working to add reporting features to its Featured Snippets, which are the small blurbs found at the top of search results.

Facebook CEO Mark Zuckerberg initially scoffed at the idea the social network was responsible for the dissemination of fake news that ultimately swayed the US election.

But in recent months, Facebook has stepped up its efforts to fight fake news, and Zuckerberg admitted in a post the company has a “greater responsibility than just building technology that information flows through.”

In December 2016, Facebook launched new tools for users to report low quality or offensive content as well as fake news.

Facebook didn't stop there; it partnered with third-party fact checking organizations like Snopes, Politifact and the Associated Press to flag stories as disputed. What’s more, when users go to share disputed content, a warning pops up with a link explaining why the content was flagged.

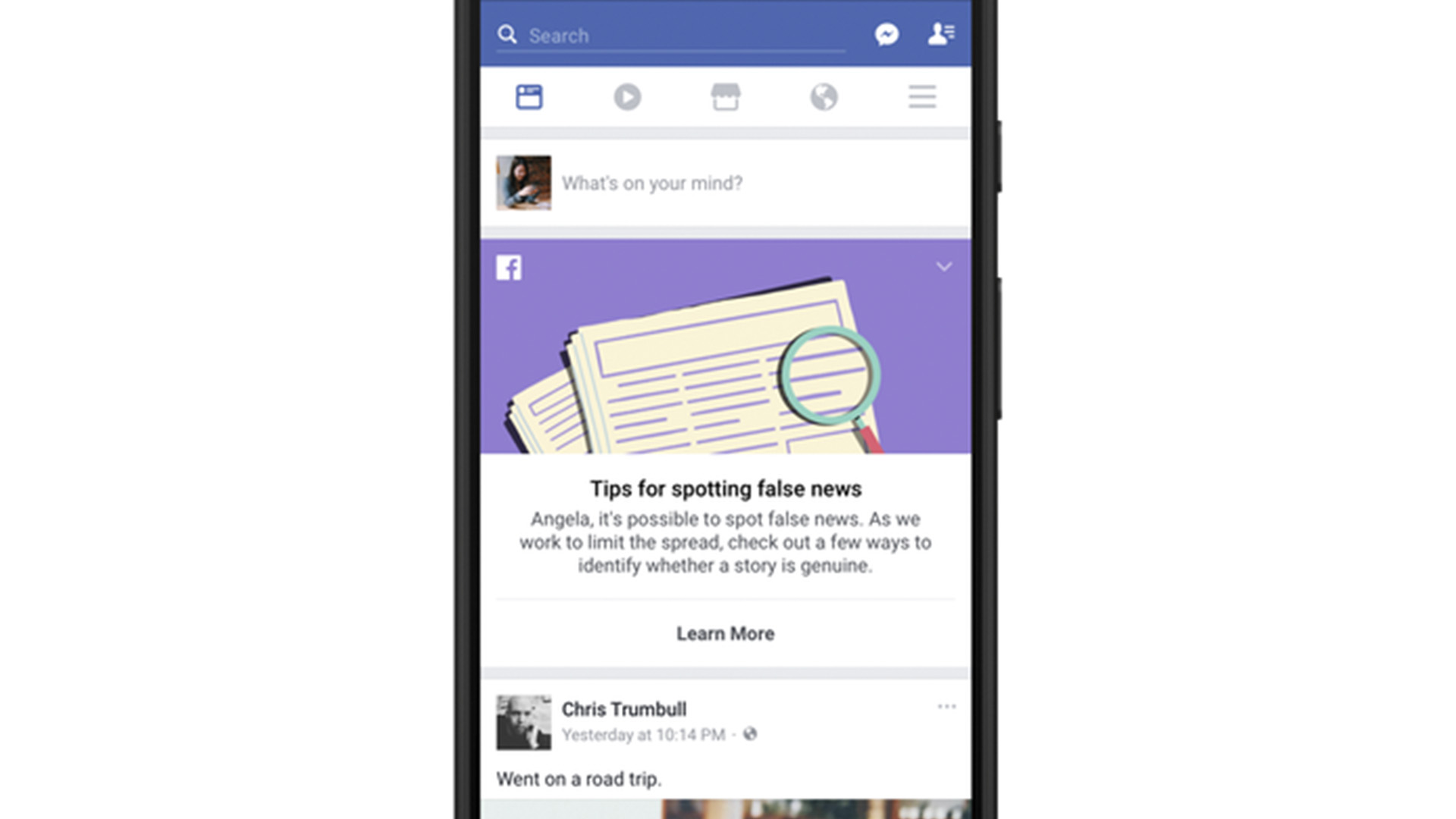

Facebook also launched a guide for spotting fake news that appeared at the top of users’ News Feeds. The guide included basic steps and tips on how to vet sources and recognize fake news, but only appeared in 14 countries for “a few days” in April.

“When people click on this educational tool at the top of their News Feed, they will see more information and resources in the Facebook Help Center, including tips on how to spot false news, such as checking the URL of the site, investigating the source and looking for other reports on the topic,” said Adam Mosseri, Facebook’s VP of News Feed, in a blog post at the time.

While Facebook and Google have launched tools and other initiatives to combat the spread of misleading or false information, Twitter has been relatively silent.

The company has a spam policy that bans users for “repeatedly [creating] false or misleading content,” but not much else in the way of stemming the spread of fake news articles and other misleading content.

Twitter declined to comment for our story, and Google did not respond to interview requests. Facebook declined an interview but pointed us to its blog posts on its fake news initiatives.

Jimmy Wales, the co-founder of Wikipedia, has also taken up the torch against fake news by launching a new online publication called Wikitribune.

Wikitribune aims to pair journalists with volunteer community contributors to cover political topics, science and technology. The site will be funded primarily by donations, like Wikipedia, through crowdfunding campaigns.

The hope is Wikitribune will differentiate itself from traditional news organizations by allowing the online community to work with professional reporters to represent facts and offer greater transparency into what goes on in a newsroom. The site's tagline is: 'Evidence-based journalism'.

Is any of this working?

With the steps Google and Facebook have taken in recent months, one might wonder whether any of them are actually stopping fake news.

Unfortunately, it appears too early to tell, but the recent election in France gives some hope the measures aren’t for naught. Leading up to May 7’s French presidential election, Facebook suspended 30,000 fake accounts that were spreading fake news, spam and misinformation, according to Reuters.

Facebook also went old school by taking out full-page ads in French and German newspapers, including Le Monde, L’Express, Süddeutsche Zeitung, Der Spiegel and Bild, according to the Washington Post.

The ads outlined basic steps to spotting fake news, similar to the guide that briefly appeared at the top of users’ News Feeds.

While Facebook declined to provide numbers for our story, NewsWhip, a company that tracks the spread of stories across social media, found that just 10% of the 200 most shared stories surrounding the French election were fake news. By comparison, nearly 40% were deemed fake news in the lead-up to the US election.

“There is a fake news problem without any doubt, but what we like to do is try to quantify how bad that problem is,” said NewsWhip CEO Paul Quigley in a Bloomberg report earlier this month.

While the data from the French election suggests fake news fighting efforts were to some degree effective, misinformation was still widely shared.

NewsWhip saw fake news stories claiming Emmanuel Macron, who went on to win the election, was aligned with Islamic terrorist group Al-Qaeda garner over 100,000 engagements across social media. Macron also filed a legal complaint over false claims, repeated by his opponent Marine Le Pen, that he had an offshore bank account.

“It’s hard because people have predispositions to liking certain kinds of stories,” Quigley told Bloomberg, “and if you have a certain opinion of certain politicians and you’re being fed a diet from particular websites that confirms your worldview every day, you’re going to want more of that.

“So people are kind of opting into fake news a little bit — it’s hard for the platforms, because they are developing algorithms that serve you the stuff you want.”

Gatekeeper or content distributor?

One argument heard often in the discussion around fake news is that policing it erodes the protections of the US Constitution’s First Amendment.

The amendment states, in part: “Congress shall make no law ... abridging the freedom of speech, or of the press.”

Other countries like the UK have similar protections, but what the First Amendment means in practice in the US is that citizens are free to express themselves in nearly any way they choose through any medium. Anything that hinders that expression is considered a violation of their rights.

However, Sally Lehrman, Director of the Journalism Ethics Program and the Trust Project at Santa Clara University, doesn’t think Facebook is violating the First Amendment by policing fake news.

“It’s not a question of free speech. [Facebook] already makes decisions about what they do and don’t show. [Facebook] is not the government or an open public square,” Lehrman tells TechRadar, referring to Facebook’s previous removals of the historic ‘Napalm Girl’ photo and violent videos.

Lehrman is correct in that Facebook already polices the content on its platform. For example, Facebook prohibits nudity and violent imagery, but the social network is constantly reevaluating what content to allow or forbid.

In a Facebook document leaked to The Guardian, an example was given of a user’s statement describing a grossly violent act against a woman. Although violent and upsetting, Facebook determines something like this is self expression and therefore permissible.

Last year, Facebook got into hot water after Gizmodo reported that the social network’s human editors routinely suppressed conservative news in the Trending Topics section.

This led Facebook to fire its human curators and turn instead to algorithms to simply show what stories were generating buzz.

Months later, however, Facebook was forced to redesign the Trending Topics section to combat the proliferation of fake news. And just this week, Facebook redesigned its Trending Topics section once again to show more sources around a single news story.

Changes like these have pushed Facebook into the content distribution business, beyond simply operating a social network.

“Facebook needs to think about its responsibilities [as a platform],” Paul Niwa, Associate Professor and Chair of the Department of Journalism at Emerson College, tells us.

“Are they a content producer or are they a gatekeeper?” he poses. “That is an ethical, not a legal conversation. If they don’t adopt these ethical standards, it’s going to threaten their business because either the government or the public will take action against Facebook.”

Google is a different beast than Facebook, according to Lehrman. “Google is different because it’s the open web,” she says. “The public isn’t asking Google to censor [its results] but asking it to be more thoughtful about it.”

That’s not to say Google couldn’t do more to combat fake news. “I don’t see any training in Google News about how to compare information,” says Niwa, referencing Facebook’s tips for spotting fake news. “Tech companies should be investing in news literacy,” he continues.

While linking to fact checking sites is a good start, Google could be more transparent about how it prioritizes results. That explanation appears in a company blog post but not in the results themselves.

Working together

After months of launching new tools and initiatives, one thing is clear: tech companies can’t fight fake news on their own. The strategies Google and Facebook are employing rely heavily on third-party fact checking organizations, media outlets and the public.

“I think we’re all responsible for fake news,” says Lehrman. “Journalism and news organizations hold themselves accountable, and tech companies are now in process of holding themselves accountable.”

Niwa notes the public needs to be just as vigilant. “Citizens have to take a stronger responsibility over what we read and to take an active role about credibility of sources,” he says. “This is not something that is too much to ask as Americans used to do this during the penny press era of the 19th century.”

One of the ways Niwa sees journalists, tech companies and citizens working together is in educating the public about vetting their news sources.

“Because of the consolidation of media, Americans have lost a lot of those skills,” says Niwa. “Tech companies should be investing in [teaching] news literacy.”

Niwa cites the transition from print to broadcast media as another time when fake news ran rampant. “It took decades for Americans to sort all of that out, and I think we’re in the midst of that process.”

While it’s easy to point fingers at Facebook and Google as the conduits through which fake news flows, journalists and citizens bear some responsibility as well.

The experts we spoke with say journalists must work with tech companies to combat the spread of fake news via fact checking services and by helping to train the algorithms companies' engines rely on.

Citizens, meanwhile, need to take responsibility for vetting sources and thinking twice before sharing news they find.

It may be too early to tell if fake news is on the decline and if Google, Facebook, and others’ efforts are successful in stemming its spread. However, what is clear is that fake news isn’t going away unless all parties step up to fight it.

“I’m optimistic about the future,” says Niwa. “I believe that the more experience users have with the internet, the more likely they are to discuss ideas other than their own.”

Lehrman is equally optimistic about the future of news consumption. “There’s a thirst for news and we see that with [news] subscription rates going up,” she says. “There’s a lot of work to be done, but the bright spot is that we’re seeing a lot more news consumption and active engagement.”

Update: The story has been updated to clarify Lehrman's statements on the First Amendment.