The future of photography: when your phone will be smarter than you

AI will democratize photography

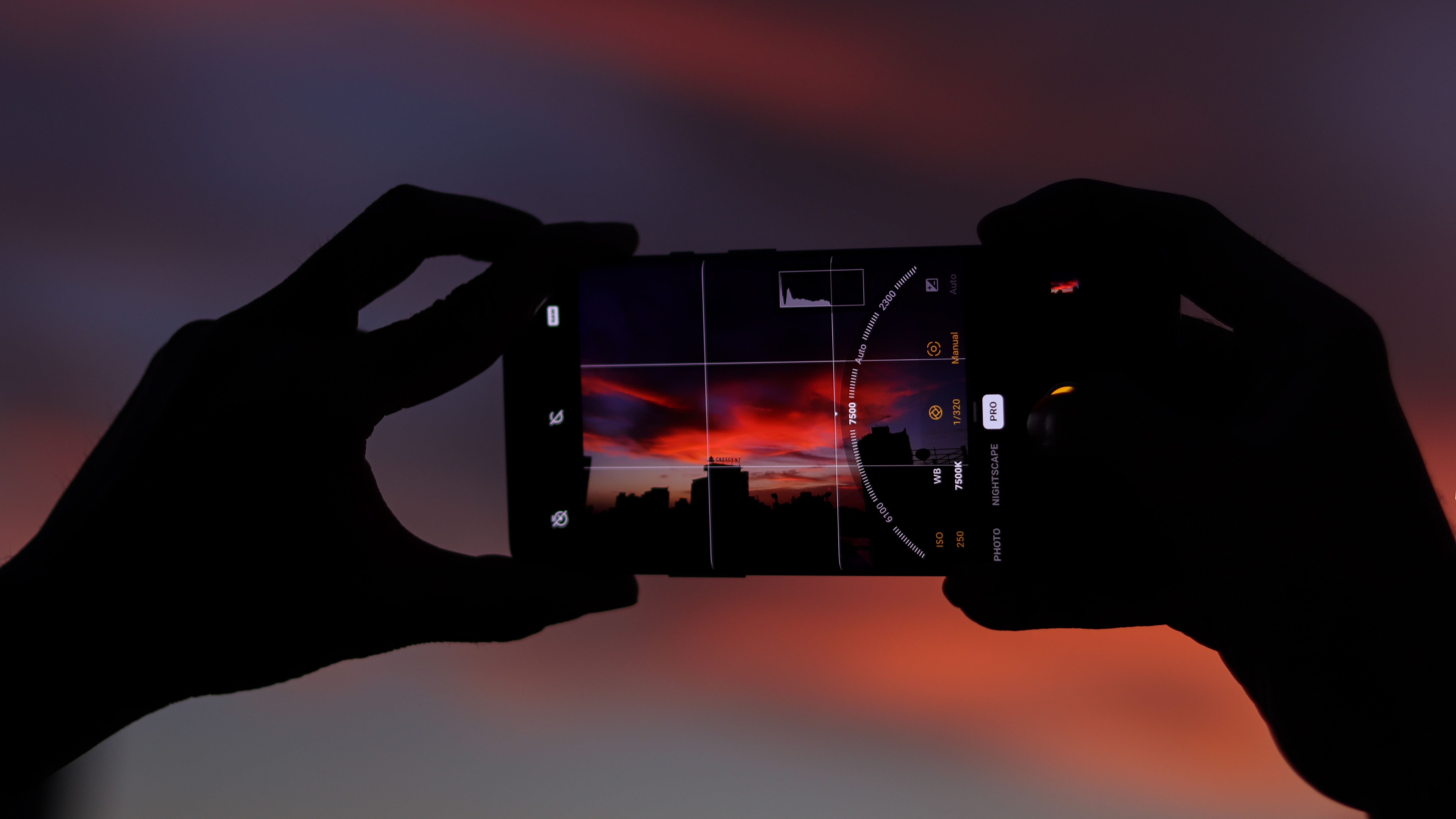

How often does it happen that you’re scrolling through Instagram, come across something really cool that was apparently shot on a phone, but when you try it yourself, the result just doesn’t match? Even though your phone is probably capable of it?

Some of us might hate to admit this, but it is probably because the photographer was better with the camera they were using, or knew how to edit the shot the right way. Basically, the person knew how to make the most of whatever resources were at their disposal, which are very likely the same as yours. In even simpler words, the same hardware produced better results because of better knowledge of the software and manual settings.

What if our phones just knew how to get the shot based on what we asked them to do?

Cameras on smartphones have come a long way in the last few years. Along with an increase in quality and quantity, they’ve become easier to use. Getting the shot you wanted is usually not that difficult, especially with high-end phones. They work well for most scenarios, but can be confusing when trying to shoot something out of the ordinary.

To understand what a phone camera could one day do, we need to understand what it can’t do today. All modern digital cameras work in the same way in principle: light reflects off objects, enters the camera through a series of lenses and hits the sensor, which converts it into electrical signals that are processed into an image. The differences between any two images basically come down to variations in these parameters. Some of them can be controlled, while others you simply have to make do with.

For instance, the biggest reason why images from a DSLR look so much nicer than smartphone images is the significantly bigger sensor. The sensor has the biggest impact on the look of an image, and bigger is almost always better. In technical terms, a bigger sensor allows for finer detail, a shallower depth of field and potentially brighter images. While the sensors in smartphone cameras have definitely gotten bigger, they are also nearing the upper limit of how much space they can occupy within a phone.

AI to the rescue!

The solution? Bridging the hardware gap with smart software (software being a very broad term here). Adjacent pixels are combined, a technique known as pixel binning, to create significantly larger effective pixels and improve light sensitivity. A shallower depth of field is simulated via features such as portrait mode, which identifies the subject and blurs everything else. And since the aperture can’t be changed and slowing down the shutter speed brings shake into the equation, phones use superior processing to make images brighter even when there isn’t enough light.
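To make pixel binning concrete, here is a minimal Python sketch. It treats the sensor as a single-colour grid of brightness values and sums each 2x2 block into one output pixel. Real phone sensors typically bin same-colour photosites (for example on Quad Bayer layouts) and do it in hardware, so the function name `bin_pixels` and the toy numbers are purely illustrative.

```python
def bin_pixels(raw, factor=2):
    """Hypothetical helper: sum each factor x factor block into one output pixel.

    `raw` is a list of equal-length rows of brightness values. This simplified
    sketch ignores colour filters; its height and width must be multiples of
    `factor`.
    """
    height, width = len(raw), len(raw[0])
    if height % factor or width % factor:
        raise ValueError("image dimensions must be multiples of the binning factor")
    binned = []
    for y in range(0, height, factor):
        row = []
        for x in range(0, width, factor):
            # Add up every photosite in the block, as if it were one bigger pixel.
            row.append(sum(raw[y + dy][x + dx]
                           for dy in range(factor)
                           for dx in range(factor)))
        binned.append(row)
    return binned

# A dim 4x4 capture: each photosite caught only a few units of light.
dim = [
    [3, 4, 2, 5],
    [4, 3, 6, 1],
    [2, 2, 3, 3],
    [5, 1, 4, 2],
]
print(bin_pixels(dim))  # [[14, 14], [10, 12]]
```

Each output pixel gathers roughly four times the signal of a single photosite, which is where the gain in light sensitivity comes from; the trade-off is a quarter of the resolution.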

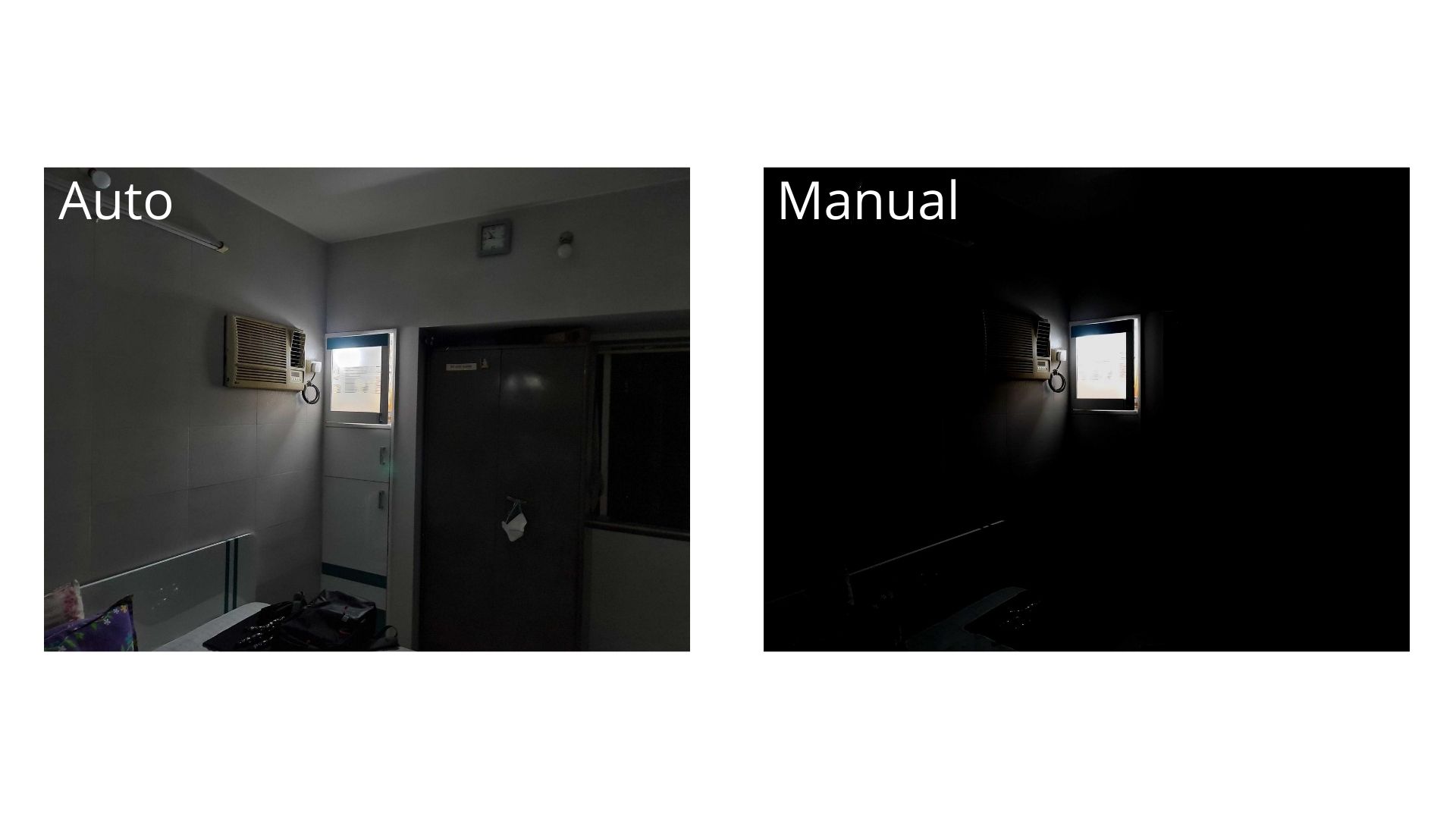

The last bit is going to become increasingly important as more phones adopt varying degrees of computational photography. Here’s a very basic test you can do to understand how effective it can be. Try clicking a picture of something in less than ideal lighting, such as a dimly lit room, in auto mode. Now check its EXIF data (usually found under the ‘details’ section of an image) and note down the settings. Switch to Pro mode, dial in the same settings and click another image. The result will invariably be worse.

The main reason for this is that when you shoot with manual controls, the phone holds back on how much it processes the image. A dedicated (non-smartphone) camera does not natively support such optimizations and depends entirely on the exposure triangle of aperture, shutter speed and ISO. On modern phones, the exposure triangle is becoming less relevant each day, and features such as Night mode widen the gap further.
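The exposure triangle can be boiled down to a single number. The sketch below uses the standard exposure value relation, EV = log2(N²/t), adjusted for ISO, where N is the f-number and t the shutter time in seconds; the helper name `exposure_value` and the example settings are hypothetical. Two settings that return the same value produce equally bright images, which is exactly the trade-off a dedicated camera is stuck with: a slower shutter (shake) or a higher ISO (noise).

```python
import math

def exposure_value(f_number, shutter_s, iso=100):
    """Hypothetical helper: exposure value of a setting, normalised to ISO 100.

    Settings with equal values give the same image brightness for the same
    scene; each +1 step makes the image half as bright.
    """
    return math.log2(f_number ** 2 / shutter_s) - math.log2(iso / 100)

handheld = exposure_value(1.8, 1 / 15, iso=800)    # slow shutter, risk of shake
frozen = exposure_value(1.8, 1 / 120, iso=6400)    # fast shutter, much more noise
print(round(handheld, 2), round(frozen, 2))  # 2.6 2.6
```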

The ability of a smartphone to change how it behaves when given a specific set of instructions that override the usual shooting settings is what will put it ahead of dedicated cameras.

We’re not saying that DSLRs are endangered, but there are definitely a lot of things a smartphone can do better than a ‘dumb’ camera. Take Night mode: the phone understands that the shutter speed needs to be slowed, but not too much. It then captures multiple images and stacks them to increase illumination throughout the frame, suppresses highlights and raises shadows, compensates for shake and reduces noise, along with a bunch of smaller tweaks, to yield a brighter image. But this is just the beginning…
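Why does stacking help? Averaging several aligned frames keeps the signal the same while random noise partly cancels out, dropping by roughly the square root of the number of frames. The toy simulation below assumes perfectly aligned frames and simple Gaussian noise, neither of which holds exactly on a real phone, and all names in it are hypothetical. It sketches the principle, not any manufacturer’s Night mode pipeline.

```python
import random
import statistics

def capture_frame(scene, noise_sigma, rng):
    """Simulate one short, noisy exposure: true brightness plus Gaussian noise (an assumption)."""
    return [value + rng.gauss(0, noise_sigma) for value in scene]

def stack_frames(frames):
    """Average frames pixel by pixel; assumes they are already aligned."""
    return [sum(pixels) / len(pixels) for pixels in zip(*frames)]

def noise_level(image, scene):
    """Standard deviation of the error against the true scene."""
    return statistics.pstdev([a - b for a, b in zip(image, scene)])

rng = random.Random(42)
scene = [20.0] * 10_000          # a flat, dim patch of the scene
frames = [capture_frame(scene, noise_sigma=8.0, rng=rng) for _ in range(16)]

single = noise_level(frames[0], scene)
stacked = noise_level(stack_frames(frames), scene)
print(f"single frame noise: {single:.2f}, 16-frame stack: {stacked:.2f}")
```

With 16 frames the measured noise should come out at roughly a quarter of a single frame’s. The cleaner average can then be brightened much further before noise becomes objectionable, which is how stacking indirectly raises illumination.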

Overtaking a 'real' camera

Astrophotography, a style once reserved for extremely high-end shooters, is now possible on affordable smartphones that take these night-shooting optimizations to the next level. Being able to shoot constellations with just a phone and a tripod, without any knowledge of how to focus or expose the image, or a mount that tracks and compensates for the Earth’s rotation, is beyond exciting.

Along the same lines, some phones offer a long exposure or light painting mode. Not only is this technically difficult, it can also be tricky even if you know your way around a camera. If that wasn’t enough, the phone also gives you an edited image that is ready for sharing. Low-light photography is just one of the areas where phones are better. They also win in areas such as dynamic range, focusing, stabilization, audio capture, weather-proofing, burst photography and more.

It’s no secret that we’re almost at the point where smartphone camera hardware can no longer improve much with the current state of technology. Sensors will probably get a little bigger, manufacturers will toy with the idea of faster apertures, and OIS could get more robust, but we’re looking at marginal improvements now. Moreover, these upgrades can also have detrimental effects. A big sensor combined with a wide aperture is the recipe for creamy bokeh, but take it too far and you have a camera that struggles to keep a macro subject in focus. This is because the plane of focus becomes so thin that anything a few millimetres in front of or behind it starts going out of focus.
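That thin plane of focus can be quantified with the standard thin-lens depth-of-field approximation based on the hyperfocal distance. The sketch assumes a 50 mm lens, a subject 30 cm away and a 0.03 mm circle of confusion (a common full-frame value); these numbers and the helper name `depth_of_field` are illustrative, not taken from any particular camera or phone.

```python
def depth_of_field(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Hypothetical helper: near and far limits of acceptable sharpness (thin-lens approximation).

    coc_mm is the circle of confusion; 0.03 mm is a common full-frame value.
    Returns (near_mm, far_mm); far is infinite at or beyond the hyperfocal distance.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        far = float("inf")
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far

for aperture in (1.4, 8):
    near, far = depth_of_field(focal_mm=50, f_number=aperture, subject_mm=300)
    print(f"f/{aperture}: {far - near:.1f} mm of the scene is acceptably sharp")
```

For these inputs, f/1.4 leaves only about 2.5 mm of the scene acceptably sharp, against roughly 14 mm at f/8, which is why wide-aperture close-ups lose focus so quickly.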

Once again, the solution can come from smart software that makes the camera function in a particular way: focus stacking. As the name suggests, it involves taking multiple pictures at the same settings but with slight changes in where the camera focuses. Once the stack is ready, the images are combined at their sharpest points to yield an image in which the subject is entirely in focus.
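Focus stacking can be sketched in a few lines: for every pixel, measure local sharpness in each frame and keep the value from the frame that is sharpest there. This sketch assumes the frames are already aligned, uses the absolute Laplacian as the sharpness measure (one common choice among several) and models blur crudely as a flat grey patch; the function names and toy frames are hypothetical. Practical tools typically also smooth the sharpness map and blend across boundaries to avoid artefacts.

```python
def sharpness(image, y, x):
    """Local contrast at (y, x): absolute 4-neighbour Laplacian, with edges clamped."""
    h, w = len(image), len(image[0])

    def px(yy, xx):
        return image[min(max(yy, 0), h - 1)][min(max(xx, 0), w - 1)]

    return abs(4 * px(y, x) - px(y - 1, x) - px(y + 1, x) - px(y, x - 1) - px(y, x + 1))

def focus_stack(frames):
    """Hypothetical helper: take each pixel from whichever frame is sharpest there.

    Assumes the frames are already aligned and share the same exposure.
    """
    h, w = len(frames[0]), len(frames[0][0])
    result = []
    for y in range(h):
        row = []
        for x in range(w):
            best = max(frames, key=lambda frame: sharpness(frame, y, x))
            row.append(best[y][x])
        result.append(row)
    return result

def checker(y, x):
    """Fine texture that is only visible when in focus."""
    return 10 if (y + x) % 2 == 0 else 0

HEIGHT, WIDTH = 3, 6
# Near focus: left half sharp, right half blurred to flat grey (and vice versa).
near = [[checker(y, x) if x < 3 else 5 for x in range(WIDTH)] for y in range(HEIGHT)]
far = [[5 if x < 3 else checker(y, x) for x in range(WIDTH)] for y in range(HEIGHT)]
merged = focus_stack([near, far])
print(merged == [[checker(y, x) for x in range(WIDTH)] for y in range(HEIGHT)])  # True
```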

Even better than offering manual controls?

Manufacturers often face the challenge of whether to prioritize giving users more features or an easier user experience. Companies such as LG, Samsung and Huawei lean towards the former, while Apple and Google lean towards the latter. Neither approach is particularly wrong: it is a conscious choice not to offer an option that users may not know how to use and that could end up giving them bad results. This is where AI and computational photography can step in and bring features that were hitherto inaccessible.

People like to click cool pictures and smartphone makers want to assist in their aspirations when possible.

Once you understand how every image is practically just a function and combination of the settings, the opportunities to break these rules increase exponentially. It might even compel smartphone makers to move to ideas that don’t imitate real cameras but find smarter workarounds for problems.

The move to multiple lenses on smartphones is a classic example of how you can achieve versatility when a single camera’s capabilities are limited: by letting another camera take care of it. Multiple focal lengths are the most popular example of this, but there are some unusual creations too. Monochrome sensors can help improve tonality and dynamic range, since without a colour filter over their pixels they capture more light. Some phones use the exact same camera twice just to gather more information within an image and enable more editing legroom. In simpler terms, smartphones continue to adapt and overcome conventional camera challenges via sophisticated hardware and software combinations. We’re just at the beginning of this revolution.

By taking the road less travelled, some companies have also experimented with ideas such as built-in ND filters made of electrochromic glass, which controls the amount of light entering the camera by changing its opacity. As gimmicky as it may seem, it opens up opportunities such as truly cinematic video where the shutter speed doesn’t keep jumping around as the ambient light changes, or something way trickier such as daytime long exposures of silky water.
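The arithmetic behind an ND filter is simple: each stop of neutral density halves the incoming light, so the shutter must stay open twice as long for the same exposure. The sketch below applies this to the two use cases above. The helper names are hypothetical, and the 1/48 s target follows the common ‘180-degree shutter’ convention for 24 fps video (a shutter time of 1/(2 x frame rate)).

```python
import math

def shutter_with_nd(base_shutter_s, nd_stops):
    """Hypothetical helper: each ND stop halves the light, so the shutter time doubles per stop."""
    return base_shutter_s * 2 ** nd_stops

def nd_stops_needed(metered_shutter_s, target_shutter_s):
    """Hypothetical helper: stops of ND needed to slow the metered shutter to the target."""
    return math.log2(target_shutter_s / metered_shutter_s)

# Daytime long exposure: the meter wants 1/1000 s and we add 10 stops of ND.
print(shutter_with_nd(1 / 1000, 10))  # 1.024 (seconds)

# Cinematic 24 fps video under the 180-degree convention: shutter = 1/(2 x fps).
target = 1 / (2 * 24)                 # 1/48 s
print(round(nd_stops_needed(1 / 2000, target), 1))
```

A 10-stop filter turns a 1/1000 s daylight exposure into about one second, and bringing a metered 1/2000 s down to 1/48 s takes roughly 5.4 stops of ND.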

- Traditional cameras too slow to focus? Add a laser AF module

- Not enough space for more glass to enable higher zoom? Fold the lenses inward

- Images often shaky? Add a gimbal system

- Not enough background blur? Add a portrait mode

- Too much blur? Add focus stacking capabilities

- Dynamic range too low? Shoot multiple pictures at different exposure levels and combine them

- Images turning out too bright at your desired settings? Make the glass act as an ND filter
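The dynamic-range trick in the list above, shooting several exposures and combining them, can be sketched as a weighted merge. Each frame gives its own estimate of scene brightness (pixel value divided by exposure time), and mid-tones are trusted more than near-black or clipped pixels via a simple ‘hat’ weighting. This assumes a linear sensor response and aligned frames, and the names are hypothetical; phone HDR pipelines are considerably more involved.

```python
def hat_weight(value):
    """Trust mid-tones most; near-black (noisy) and near-white (clipped) values least."""
    return max(0.0, 1.0 - abs(2.0 * value - 1.0))

def merge_brackets(brackets):
    """Hypothetical helper: merge bracketed exposures into relative scene brightness.

    `brackets` is a list of (exposure_time_s, pixel_values) pairs, with pixel
    values normalised to 0..1 and assumed to respond linearly to light.
    Pixels that are unusable in every frame come back as NaN.
    """
    n_pixels = len(brackets[0][1])
    merged = []
    for i in range(n_pixels):
        weighted_sum = 0.0
        total_weight = 0.0
        for exposure_s, pixels in brackets:
            w = hat_weight(pixels[i])
            weighted_sum += w * pixels[i] / exposure_s   # brightness estimate from this frame
            total_weight += w
        merged.append(weighted_sum / total_weight if total_weight else float("nan"))
    return merged

scene = [0.5, 5.0, 50.0]   # true relative brightness: shadow, mid-tone, bright sky
# Simulated brackets: longer exposures clip at 1.0 (pure white).
brackets = [(t, [min(1.0, r * t) for r in scene]) for t in (1.0, 0.1, 0.01)]
print([round(v, 3) for v in merge_brackets(brackets)])  # [0.5, 5.0, 50.0]
```

The bright sky value of 50 is recovered only from the shortest exposure, because it clips in the other two, while the shadow value comes mostly from the longest.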

Perhaps we could finally see iPhones and Pixels offer added versatility in this manner instead of manual controls.

At a time when the physical attributes of a camera are about to hit a wall, hardware will be an ever smaller part of the equation. And the best part? Since these will be software-driven features, your phone’s skills could theoretically keep on growing as more models get trained and shared.

The point of this piece is to show consumers what could be cooking a few years from now as manufacturers find ways to add these features so that more people can use them. In an ideal world, you just tell your phone what you’re looking for and it takes care of the rest with minimal assistance. A future where smartphones truly democratize all forms of photography might not be that far away.

Aakash is the engine that keeps TechRadar India running, using his experience and ideas to help consumers get to the right products via reviews, buying guides and explainers. Apart from phones, computers and cameras, he is obsessed with electric vehicles.