A comprehensive history of the PC

A detailed look at the emergence and evolution of the personal computer

The PC; the personal computer; the IBM-compatible. Whatever you want to call it, somehow it has maintained a dominant presence for nearly four decades.

If you try to launch any program written from the ’80s onwards, you have a good chance of getting it to run: your PC has backward compatibility going right back to the ’70s, enabling you to run pieces of history as though they were from yesterday.

In fact, your computer is brimming with heritage, from the way your motherboard is laid out to the size of your drive bays to the layout of your keyboard.

Flip through any PC magazine and you’ll see everything from bulky desktop computers to sleek business laptops; from expensive file servers to single-board devices only a few inches across.

This article by John Knight was originally published as a two-part series in Linux Format issues 268 and 269.

Somehow, all these machines are part of the same PC family, and somehow they can all talk to each other.

But where did all of this start? That’s what we’ll be examining: from the development of the PC to its launch in the early ’80s, as it fought off giants such as Apple, as it was cloned by countless manufacturers, and as it eventually went 32-bit.

We’ll look at the ’90s and the start of the multimedia age, the war between the chip makers, and the establishment of Windows as the world’s leading, but not best, operating system.

But before we go anywhere, to understand the revolutionary nature of the PC you first need to grasp what IBM was at the time, and the culture that surrounded it.

Emergence of IBM

IBM was formed in the early 20th century by people who invented the kind of punch-card machines and tabulators that revolutionised the previous century. IBM introduced Big Data to the US government, with its equipment keeping track of millions of employment records in the 1930s.

It gave us magnetic swipe cards, the hard disk, the floppy disk and more. It would develop the first demonstration of AI, and be integral to NASA’s space programmes.

IBM has employed five Nobel Prize winners and six Turing Award recipients, and is one of the world’s largest employers.

IBM’s mainframe computers dominated the ’60s and ’70s, and that grip on the industry gave IBM an almost instant association with computers in the minds of American consumers.

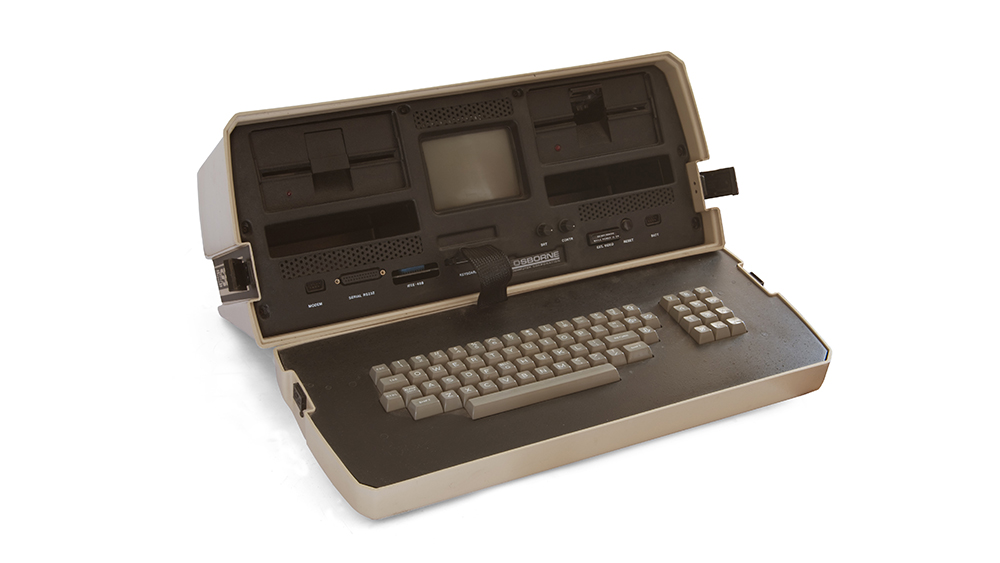

But trouble was on the horizon. The late ’70s were saturated with ‘microcomputers’ from the likes of Apple, Commodore, Atari and Tandy. IBM was losing customers as giant mainframes made way for these smaller machines.

IBM took years to develop anything: endless layers of bureaucracy tested every detail before a product was released to market.

It was a long way from offering simple and (relatively) affordable desktop computers, and didn’t even have experience with retail stores.

Meanwhile, microcomputer manufacturers were developing new models in months, and there was no way IBM could keep up using its traditional methods.