AMD unleashes 128-core Epyc ‘Bergamo’, trounces Intel’s most expensive CPU

Ampere and others are firmly in AMD’s crosshairs with a new cloud native model.

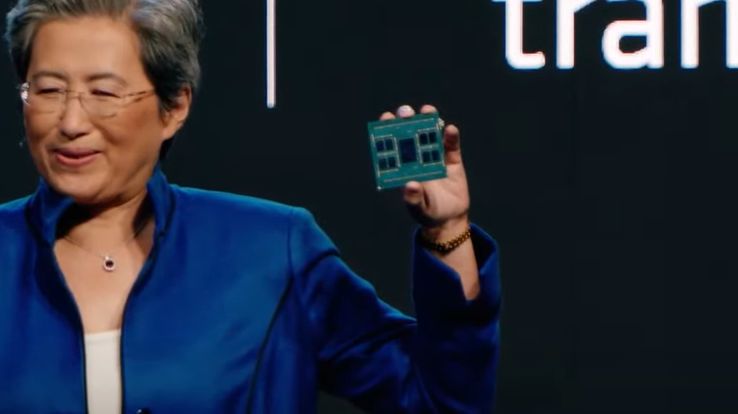

AMD has unveiled its first cloud-optimized processor, the EPYC “Bergamo”, with up to 128 Zen 4c cores and 82 billion transistors in a single socket, at its Data Center & AI Technology Premiere event in San Francisco on Tuesday.

The top-of-the-range processor, the Epyc 9754, is aimed at cloud native workloads, which are popular with Arm vendors such as Ampere Computing. The new core is 35% smaller (and therefore cheaper to produce) than the original Zen 4 core while using the same 5nm process, and AMD claims that it offers the highest vCPU density available. That is achieved by using fewer CCDs with more cores on each (16 vs 8).

Bergamo is already shipping to selected partners (including Meta) and promises performance gains of up to 160% compared to its closest competitor, Intel’s Xeon Platinum 8490H, a $17,000 Sapphire Rapids part with “only” 60 cores. AMD’s CEO, Lisa Su, also said that the chip would deliver 80x extra performance per watt compared to its Intel rival.

More cores to come?

Zen 4c cores are fully compatible (and socket-compatible) with Zen 4 cores, which means that they support all the goodies that come with the SP5 socket: 12-channel DDR5 memory and PCIe Gen 5.0. Unlike its Arm rivals, Bergamo also supports multi-threading (so 256 threads in all).

AMD hasn’t disclosed Bergamo’s TDP, but it is likely to hover around 400W, the same as the top-end Zen 4 part with 96 cores, the Epyc 9654. What we do know is that it has 256MB of L3 cache and will likely sell for less because of its much smaller die size.
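The core, CCD and thread figures quoted above can be cross-checked with a little arithmetic. The short Python sketch below does just that; the function and constant names are purely illustrative, and it assumes two threads per core (implied by 256 threads on 128 cores) and that the 96-core Epyc 9654 is built from the 8-core CCDs mentioned earlier.

```python
# Figures quoted in the article.
BERGAMO_CORES = 128        # Epyc 9754 (Zen 4c)
GENOA_CORES = 96           # Epyc 9654 (Zen 4)
ZEN4C_CORES_PER_CCD = 16
ZEN4_CORES_PER_CCD = 8     # assumption: applies to the 96-core Epyc 9654

# Assumption: two-way multi-threading, implied by 256 threads on 128 cores.
THREADS_PER_CORE = 2


def ccd_count(cores: int, cores_per_ccd: int) -> int:
    """Return how many CCDs are needed for the given core count."""
    if cores % cores_per_ccd != 0:
        raise ValueError("core count is not a multiple of cores per CCD")
    return cores // cores_per_ccd


def thread_count(cores: int, threads_per_core: int = THREADS_PER_CORE) -> int:
    """Return the number of hardware threads exposed by the socket."""
    return cores * threads_per_core


bergamo_ccds = ccd_count(BERGAMO_CORES, ZEN4C_CORES_PER_CCD)
genoa_ccds = ccd_count(GENOA_CORES, ZEN4_CORES_PER_CCD)
bergamo_threads = thread_count(BERGAMO_CORES)

print(f"Bergamo: {bergamo_ccds} CCDs, {bergamo_threads} threads")
print(f"96-core Zen 4 part: {genoa_ccds} CCDs")
```

Under those assumptions, the 128-core part needs fewer CCDs than the 96-core one despite having a third more cores, which is consistent with the "fewer CCDs with more cores on each" description.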

What to expect next? The 9754 is the first of many SKUs, with higher-frequency models likely to emerge depending on how the competitive landscape evolves. Will there be even more cores? Perhaps on a smaller node, but that will likely come in 2025. The launch of Bergamo comes a day after Intel issued a press release claiming superiority across a range of AI-heavy benchmarks, with, it says, 80% greater inference throughput in AI use cases.


Désiré has been musing and writing about technology during a career spanning four decades. He dabbled in website builders and web hosting when DHTML and frames were in vogue and started narrating about the impact of technology on society just before the start of the Y2K hysteria at the turn of the last millennium.