Apple devices to detect and report child sexual abuse images: Here's how it works

Across iMessage, Siri, iCloud Photos

Apple has unveiled new features on its platforms that seek to protect children online, and this includes scanning iPhone and iPad users' photos to detect and report large collections of child sexual abuse images stored on its cloud servers.

"We want to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material (CSAM)," Apple said on its site.

Apple has introduced the new child safety features after collaborating with child safety experts.

"This program is ambitious, and protecting children is an important responsibility. These efforts will evolve and expand over time," it added.

Apple to focus on three areas in child protection

The child safety features fall broadly into three areas.

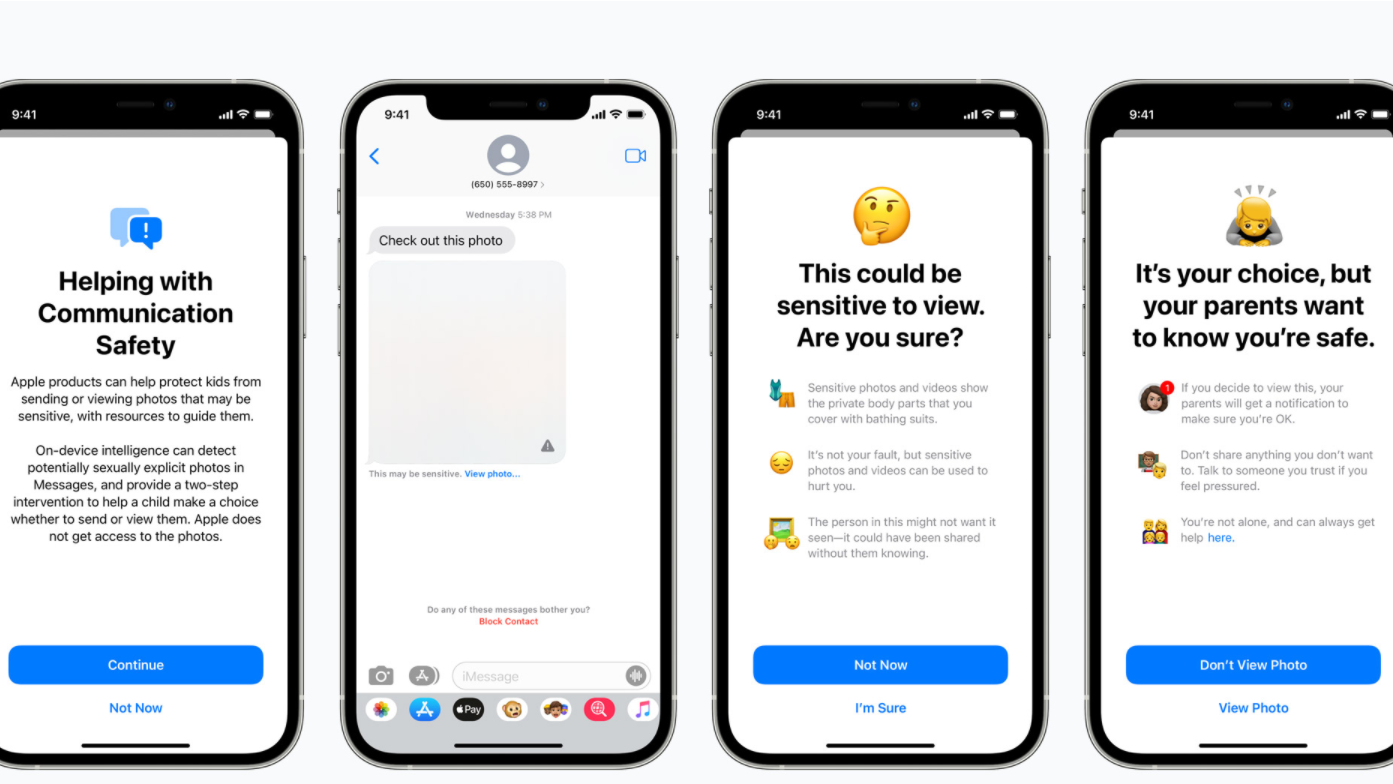

"First, new communication tools will enable parents to play a more informed role in helping their children navigate communication online," the company said. The Messages app will use on-device machine learning to warn about sensitive content, while keeping private communications unreadable by Apple.

Secondly, Apple said, iOS and iPadOS will use new applications of cryptography to help limit the spread of CSAM online. "CSAM detection will help Apple provide valuable information to law enforcement on collections of CSAM in iCloud Photos."

The third and final update pertains to Siri and Search, which will provide parents and children with expanded information and help if they encounter unsafe situations. Siri and Search will also intervene when users try to search for CSAM-related topics.

These features are coming later this year in updates to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey, Apple said. To start with, the features will be available in the US.

This is how it will work

Apple also explained in detail how the three features will work.

The Messages app will add new tools to warn children and their parents when receiving or sending sexually explicit photos. Apple said it uses on-device machine learning to analyze image attachments and determine if a photo is sexually explicit.

When a child receives harmful content, the photo will be blurred, and the child will be warned and presented with helpful resources to deal with the situation. Further, if the child does view the material, their parents will get a message. If a child attempts to send sexually explicit photos, they will be warned before the photo is sent, and their parents will be notified if the child goes ahead and sends it.

Apple clarified that the feature is designed in such a way that the company does not get access to the messages.

Apple says privacy will not be breached

With regard to CSAM images, Apple said a new technology in iOS and iPadOS will allow it to detect such content stored in iCloud Photos.

Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the known CSAM hashes. This matching process is powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result, Apple said.
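To give a rough sense of what private set intersection means, here is a minimal sketch of one classic textbook variant based on commutative blinding. It is not Apple's protocol, which the company describes only at a high level here. The modulus `P`, the function names, and the use of SHA-256 as the item hash are all assumptions made for illustration. In this toy version the client ends up learning the overlap, whereas Apple says its design does not reveal the match result on the device.

```python
import hashlib
import math
import secrets

# Toy modulus (assumption): the prime 2**255 - 19. A real system would use
# a vetted elliptic-curve group and a reviewed protocol, not this sketch.
P = 2**255 - 19


def hash_to_group(item: bytes) -> int:
    """Map an item to an integer in [1, P - 1]."""
    digest = int.from_bytes(hashlib.sha256(item).digest(), "big")
    return digest % (P - 1) + 1


def new_secret() -> int:
    """Pick a random exponent coprime to P - 1 so blinding is reversible."""
    while True:
        k = secrets.randbelow(P - 3) + 2
        if math.gcd(k, P - 1) == 1:
            return k


def blind(value: int, secret: int) -> int:
    """Raise value to a secret power modulo P. Blinding twice commutes."""
    return pow(value, secret, P)


def private_intersection(client_items, server_items):
    """Simulate both parties in one process (hypothetical helper)."""
    a = new_secret()  # known only to the client
    b = new_secret()  # known only to the server

    # Client -> server: client items blinded with a.
    client_blinded = [blind(hash_to_group(x), a) for x in client_items]

    # Server -> client: the client's values blinded again with b (same order),
    # plus the server's own items blinded with b.
    double_blinded = [blind(v, b) for v in client_blinded]
    server_blinded = [blind(hash_to_group(y), b) for y in server_items]

    # Client: finish blinding the server's values with a, then compare.
    server_double = {blind(v, a) for v in server_blinded}
    return [x for x, v in zip(client_items, double_blinded) if v in server_double]
```

Neither side ever sees the other's raw items, only blinded values, yet equal items still line up because blinding with `a` then `b` gives the same result as `b` then `a`.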

The new technology will allow Apple to provide valuable and actionable information to law enforcement regarding the proliferation of known CSAM. Apple said it does this while providing significant privacy benefits over existing techniques "since Apple only learns about users’ photos if they have a collection of known CSAM in their iCloud Photos account. Even in these cases, Apple only learns about images that match known CSAM."
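The idea that nothing is learned unless an account holds a collection of matches can be pictured as a simple threshold check. The sketch below is only an illustration under stated assumptions: the article gives no threshold number, so `REPORT_THRESHOLD` is hypothetical, and plain SHA-256 with an ordinary set lookup stands in for whatever image hashing and cryptographic matching Apple actually uses.

```python
import hashlib

REPORT_THRESHOLD = 3  # hypothetical value; the article does not state one


def fingerprint(image_bytes: bytes) -> str:
    """Stand-in image hash (assumption): SHA-256 of the raw bytes."""
    return hashlib.sha256(image_bytes).hexdigest()


def review_account(photos, known_hashes, threshold=REPORT_THRESHOLD):
    """Return matching photos only if there are at least `threshold` of them.

    Below the threshold nothing is returned, mirroring the claim that
    isolated matches reveal nothing about the account.
    """
    matches = [p for p in photos if fingerprint(p) in known_hashes]
    if len(matches) < threshold:
        return []
    return matches
```

Even above the threshold, the function returns only the matching items, never the rest of the library, which mirrors the second half of Apple's statement.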

Apple said Siri and Search are also being updated to intervene when users perform searches for queries related to CSAM. These interventions will explain to users that interest in this topic is harmful and problematic, and provide resources from partners to get help with this issue.

Over three decades as a journalist covering current affairs, politics, sports and now technology. Former Editor of News Today, writer of humour columns across publications and a hardcore cricket and cinema enthusiast. He writes about technology trends and suggests movies and shows to watch on OTT platforms.