Brain gain: how AI is helping us work and live better

Because sometimes our grey matter isn’t quite enough

Artificial intelligence is big news at the moment, with pretty much every company claiming that its latest product is powered by AI.

Some of these products are genuinely useful, while others are fairly ludicrous (we’re looking at you, AI toaster), but we’re undoubtedly heading into a world where our lives are increasingly augmented by some kind of digital assistance.

And while that prospect might be an unsettling one, especially for those worried that their job might be at risk from the rise of automation, there are some major benefits to augmenting our own brain power by embracing a little artificial intelligence.

Here we’re going to look at the artificially intelligent technologies we’re already using to make our lives easier, and look ahead to where we might end up as machines keep getting smarter.

Digital assistants

For most people, the easiest example of AI to understand is the digital assistant: Alexa, Siri, Google Assistant, Cortana, or any of the ever-growing number of voice assistants out there.

While their soothing, slightly robotic voices may sound similar to those of KITT from Knight Rider or HAL from 2001: A Space Odyssey, we're still a long way from a genuinely intelligent (and most importantly sentient) robot pal.

But that’s not to say there’s no intelligence in the smart speakers that house our digital assistants. An impressive branch of AI known as Natural Language Processing (NLP) is at work behind every request.

Imagine a standard request to an Amazon device, which might sound something like this:

“Alexa! Is it on? Yes it’s on. Shush Barbara. Alexa, can you play that song that goes ‘do be doo be don’t believe me, just watch’ please.”

A human would be able to tell that two people are talking, and that one of them wants to listen to the wonderful summer anthem Uptown Funk by Mark Ronson featuring Bruno Mars. For a computer it’s more complicated. It has to work out which words carry useful information and, more importantly, which words it should ignore.

Let’s head back to Barbara and her friend:

“Alexa! [the ‘wake word’, so the assistant is now listening for a request] Is it on? [a question that could potentially be answered, but isn’t because the speech continues] Yes it’s on. Shush Barbara. [all of which needs to be ignored] Alexa, [the wake word again] can you [superfluous but pleasant] play [action] that song [media type] that goes ‘do be doo be [unnecessary information] don’t believe me, just watch [song identifier]’ please. [more superfluous information]”

Using NLP, the digital assistant draws out the information it judges to be most important, loads up a media player and starts playing your tune. All so that you don’t have to rack your grey matter for the name of the song and spend time searching for it.
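To make the bracketed breakdown of Barbara’s request a little more concrete, here is a deliberately simple Python sketch of the same idea. It is not how Alexa actually works: real assistants rely on trained speech and language models, whereas this toy just matches keywords. The wake word handling, the `ACTIONS` and `MEDIA_TYPES` lists and the `SCAT` set of hummed syllables are all hypothetical assumptions made up for this example.

```python
import re

# Hypothetical vocabularies for this toy example; a real assistant learns these from data
WAKE_WORD = "alexa"
ACTIONS = ("play", "stop", "pause")
MEDIA_TYPES = ("song", "album", "playlist")
SCAT = {"do", "be", "doo"}  # hummed filler syllables that precede the real lyric


def parse_request(utterance: str) -> dict:
    """Toy slot extraction by keyword matching (not a real NLP model)."""
    text = utterance.lower()
    if WAKE_WORD not in text:
        return {}  # no wake word, so the assistant ignores everything
    # Keep only what follows the last wake word; earlier chatter is discarded
    request = text.split(WAKE_WORD)[-1]
    words = re.findall(r"[a-z]+", request)
    action = next((w for w in words if w in ACTIONS), None)
    media = next((w for w in words if w in MEDIA_TYPES), None)
    # Greedy match from the first to the last straight quote, so apostrophes inside survive
    quoted = re.search(r"'(.+)'", request)
    identifier = None
    if quoted:
        tokens = quoted.group(1).split()
        # Drop leading hummed syllables such as "do be doo be"
        while tokens and tokens[0] in SCAT:
            tokens.pop(0)
        identifier = " ".join(tokens)
    return {"action": action, "media_type": media, "identifier": identifier}


if __name__ == "__main__":
    request = ("Alexa! Is it on? Yes it's on. Shush Barbara. "
               "Alexa, can you play that song that goes "
               "'do be doo be don't believe me, just watch' please.")
    print(parse_request(request))
    # {'action': 'play', 'media_type': 'song', 'identifier': "don't believe me, just watch"}
```

Everything before the second “Alexa” is thrown away, the polite padding never matches a keyword, and what survives is an action, a media type and a lyric fragment that a music service could search for. Hard-coded rules like these break as soon as someone phrases a request differently, which is exactly why real assistants use statistical NLP instead.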

At the moment, digital assistants are most commonly used to set timers, but it’s only a matter of time before we start handing them more of the responsibilities we used to handle ourselves.

The memory game

Nowhere is our delegation and augmentation of brain function more obvious than in how we deal with memory. Gone are the days when you had to actually remember a person’s telephone number. Our calendars remind us of our appointments, our heating systems automatically adjust to our routines, and our map apps remember the places we visit often and suggest where we might be heading based on the time of day we open the app.

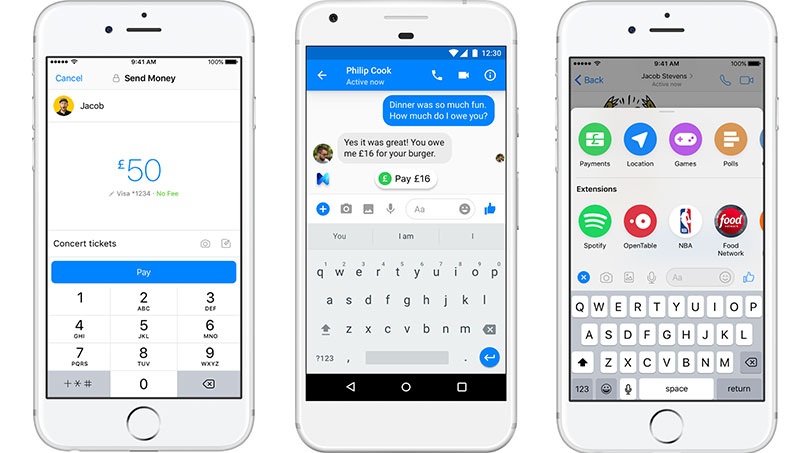

And these intelligent suggestions are only becoming more common. Netflix uses algorithms that identify the kinds of films and television you watch to suggest movies you might like, social media providers are all adopting suggestions based on your profile, and Facebook even has an AI assistant called M that lives in its Messenger platform and makes helpful suggestions based on the conversation you’re having.

Say you’re in the middle of a chat with a friend and you politely remind them that they owe you five dollars: M pops up with a suggestion that your friend send you a peer-to-peer payment in the app.

While this AI serves the purpose of keeping you in the app, it is also trying to make your life easier. And the more these AIs smooth off the sharper edges of life, the more of them we’re likely to encounter.

Daddy, what’s that?

One seriously exciting field of AI is software that can identify the subjects of photographs. Face recognition has been around for years now, as anyone who’s ever gone on a ‘tagging’ spree on Facebook will attest, but there are now some amazing steps being made in identifying all manner of objects.

Google Lens is one of the forerunners in the ‘point your smartphone camera at something and it’ll tell you what it is’ game (and that's not all it does), and there is something that feels magical about it.

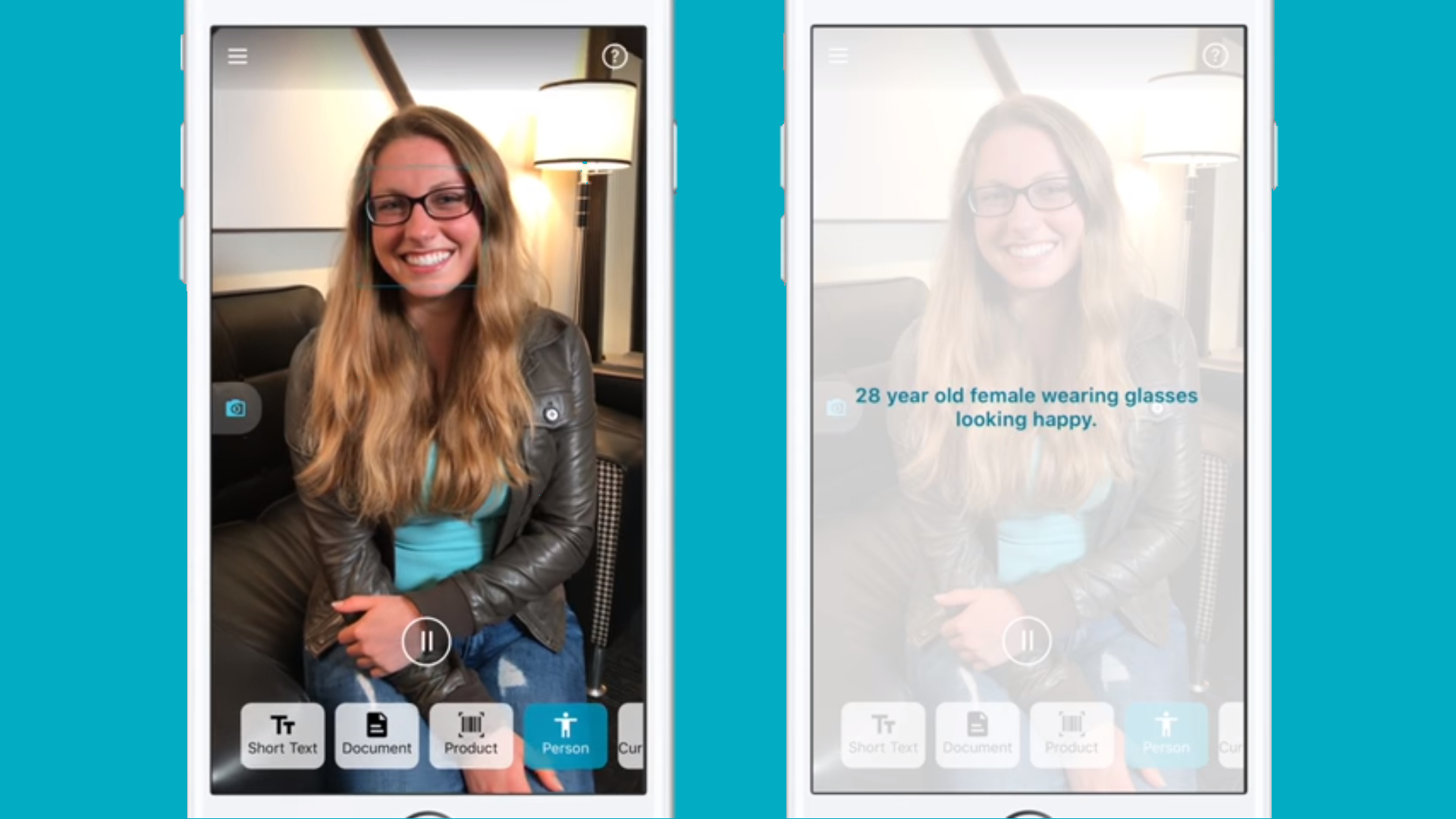

Not only does it mean you’ll soon be able to effectively Google anything you can point your phone at, the technology also opens up some really exciting possibilities for people with visual impairments.

Microsoft has created an app called Seeing AI that can identify the subject of an image and read out a description of what it sees, effectively giving a user with impaired vision a new way to see the world around them.

The technology is still at an early stage of development, but it can already identify people, emotions, light, color and a number of different global currencies, and it can read printed and handwritten text.

The rise of the machines

While all of this progress in AIs that augment our brains is great for making our lives easier, it does raise the question of what happens when the machines become more intelligent than us.

Well, there’s a possibility that may never happen, if the augmentation becomes part of our brains. That is, if Elon Musk has anything to do with it.

The man behind Tesla, SpaceX and Hyperloop has a company called Neuralink, which is working on brain-computer interfaces. The idea is to solve the problem of the singularity by implanting an interface in our brains that creates a symbiotic relationship with our AI.

Imagine being able to search the internet with your mind, recall all your appointments, even communicate with people using a brain-computer interface. While it may sound like pure science fiction, the team working on Neuralink is making some serious progress.

According to Musk: “The thing that people, I think, don’t appreciate right now is that they are already a cyborg. You’re already a different creature than you would have been 20 years ago, or even 10 years ago. You can see this when they do surveys of like, 'how long do you want to be away from your phone?' and – particularly if you’re a teenager or in your 20s – even a day hurts. If you leave your phone behind, it’s like missing limb syndrome. I think people – they’re already kind of merged with their phone and their laptop and their applications and everything.”

Which raises the question: with AI increasingly augmenting our own brain power, are we ushering in the age of the cyborg?

- TechRadar's AI Week is brought to you in association with Honor.

Andrew London is a writer at Velocity Partners. Prior to Velocity Partners, he was a staff writer at Future plc.