Google's latest AI tests show computers are just as selfish as humans

DeepMind applies game theory to artificial intelligence

Famous for mastering the ancient game of Go - a feat considered impossible for computers - the Google-owned DeepMind program is playing something a little different these days: social dilemmas.

The artificial intelligence (AI) arm tested game theory scenarios to see whether AIs can learn to work together for a mutual benefit.

As described in DeepMind's blog post, one of the more famous "games" used in these kinds of social experiments is the Prisoner's Dilemma, in which two criminals have to either rat out a partner for a chance at walking free, or risk staying silent for a reduced sentence.
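To make the dilemma concrete, here is a minimal Python sketch. The sentence lengths are illustrative assumptions, not figures from DeepMind, and `SENTENCES` and `best_response` are hypothetical names chosen for this example. It shows why betrayal is tempting: whatever the partner does, betraying yields the shorter sentence for the individual, even though both would be better off staying silent.

```python
# Years in prison as (mine, partner's) for each pair of choices.
# These numbers are illustrative assumptions only.
SENTENCES = {
    ("silent", "silent"): (1, 1),
    ("silent", "betray"): (3, 0),
    ("betray", "silent"): (0, 3),
    ("betray", "betray"): (2, 2),
}

def best_response(partner_choice):
    """Return the choice that minimizes my own sentence, given the partner's choice."""
    return min(
        ("silent", "betray"),
        key=lambda mine: SENTENCES[(mine, partner_choice)][0],
    )

for partner in ("silent", "betray"):
    print(f"partner stays {partner!r}: my best response is {best_response(partner)!r}")
```

Under these assumed numbers, mutual betrayal costs each prisoner two years while mutual silence costs only one, which is the tension the "compete vs. cooperate" games are built around.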

Rather than toss a computer in jail, however, scientists at DeepMind came up with two games that closely emulate these types of "compete vs. cooperate" conundrums.

Wolfpack: The Gathering

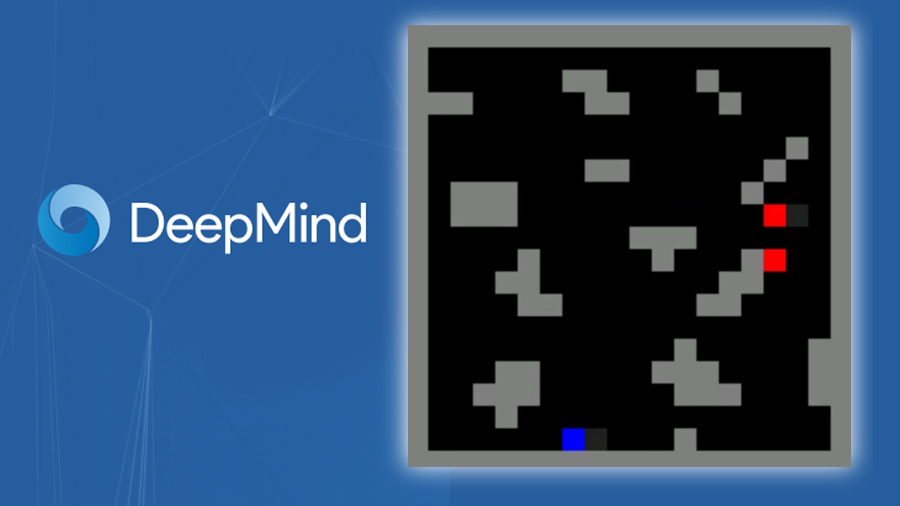

The first game DeepMind tested these concepts with is called "Gathering". Here, the AI agents - represented as red and blue pixels - independently gather green pixels representing apples.

As the apples start to dwindle, an agent can also "tag" another agent to disable it temporarily, earning extra time to pick up apples unobstructed as supplies diminish.

The second game, "Wolfpack," puts together a team of AI agents into a 2v1 game of predator (red pixels) versus prey (a lone blue pixel).

The catch here is that a predator scores each time it catches the prey, but stands to gain a bigger reward if its teammate is in proximity.
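As a rough sketch of that reward rule, the Python function below pays the capturing predator more when its teammate is close to the prey. The function name `capture_reward`, the reward values, the radius, and the grid distance measure are all assumptions made for illustration; the article does not give DeepMind's actual parameters.

```python
def capture_reward(teammate_pos, prey_pos, radius=2, lone_reward=1.0, team_reward=5.0):
    """Reward for the capturing predator; larger when the teammate is near the prey.

    Positions are (x, y) grid cells. All default values are hypothetical.
    """
    dx = abs(teammate_pos[0] - prey_pos[0])
    dy = abs(teammate_pos[1] - prey_pos[1])
    # Chebyshev distance: number of king-style moves between two grid cells.
    teammate_close = max(dx, dy) <= radius
    return team_reward if teammate_close else lone_reward

print(capture_reward(teammate_pos=(0, 0), prey_pos=(1, 1)))  # teammate nearby
print(capture_reward(teammate_pos=(0, 0), prey_pos=(5, 5)))  # lone-wolf capture
```

Raising `team_reward` relative to `lone_reward` is the knob that, in this sketch, would make waiting for a teammate more attractive than a solo capture.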

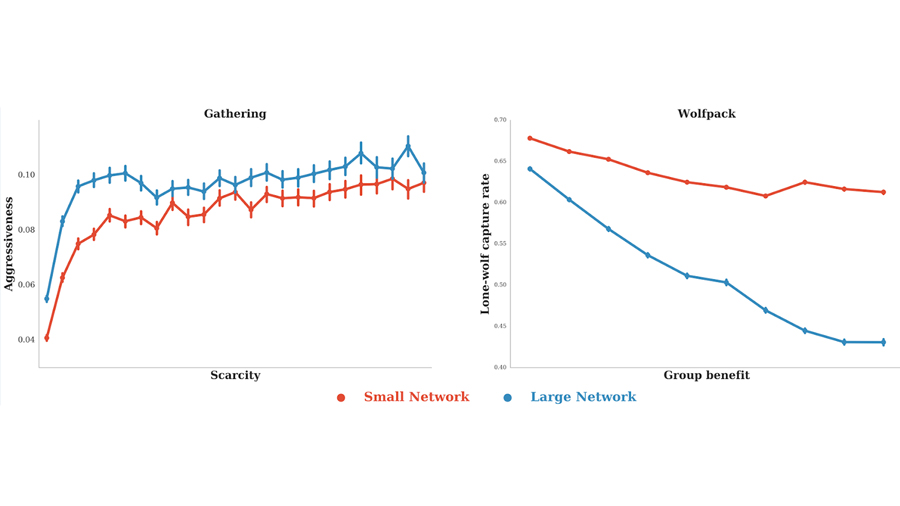

In Gathering, aggressiveness (use of the immobilizing tag) was measured as apples became scarcer, while in Wolfpack, the rate of captures made by a lone wolf (where the teammate isn't close by) was measured against the group bonus granted for cooperating.

After playing multiple games of differing network sizes, DeepMind's researchers analyzed the variables informing each AI's judgement calls, which you can see below:

What does all this mean?

As you can see in the results, agents opted to immobilize opponents more as apples grew scarce in Gathering, and lone-wolf captures went down in proportion to the reward gained from working together in Wolfpack.

These behaviors closely mirror rational human behavior: competition for resources is minimal when they are plentiful, and individualistic behavior is less likely when teamwork offers a far greater reward.

As for what DeepMind can do with this data, researchers posit that their findings closely resemble the theory of Homo economicus - the idea that human nature is, generally speaking, rational yet ultimately self-interested.

Using this mindset, DeepMind says its data could one day help researchers better understand complex, multi-agent systems like the economy, traffic patterns, or the environment. That, or make the ultimate competitor for conspire-to-win games like The Settlers of Catan, but hey, that's just our take.
