Are we addicted to graphics power? Are Nvidia and AMD, in reality, the pushers of the most addictive shader skag?

In any other walk of life you'd be dragged off to rehab for this intensely self-destructive cycle of substance abuse. But just like that heroin addict, who's currently trying to break into your home and steal your pride and joy gaming system, the PC industry is addicted to graphics.

It's the driving force behind the games we love, the reason we love the PC so much in the first place and why consoles will always be toys in comparison. Of course, we could turn around, throw our hands heavenwards and shout 'Enough is enough, this madness must end! Stop the development! My graphics card is good enough.' But where would that get us? We'd still be playing Tomb Raider on an original 3dfx Voodoo card.

The desperate truth is we need, we long for that hit of hardcore 3D acceleration. We need help, we need treatment, what we need is the latest graphics card. Sliding that long card into a tight PCI Express slot always feels so good.

For many years now we've been happy in this abusive relationship. Clinging to our ageing card, trying to scrape the last remnants of a decent frame rate together by installing hacked drivers and dropping the resolution, until we end up crawling back to our favourite green or red dealer for a fresh hit of delicious 3D.

But today that magic hit isn't just about graphics: from HD decoding and physics acceleration to GP-GPU features, that graphics card is offering a lot of technology. The next generation of cards is set to take this technology to a new level and with the advent of a new pusher on the scene – in the form of chip-giant Intel – the entire graphics market is set for an enormous shake-up.

It'll be driven by a combination of new competition, changing demands and evolving technology that is bringing general processing and graphics processing closer and closer together. But what will happen when these two worlds collide? Let's find out…

Back in time

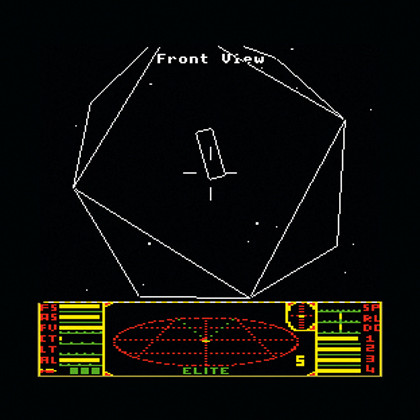

Not that we want to dwell on the past, but how did this addictive relationship start? The answer lies with what PCs were back in the early nineties and how 3D images are generated in the first place. So let's take you back, back, back in time to when the original Doom, Duke Nukem 3D and Wing Commander adorned our screens.

3D gaming was a simplistic affair – sometimes referred to as 'vector games'. 3D line objects were made of vectors: a vector is a mathematical construct, nothing more than a line in space defined by two points. Put three vectors together and you get a triangle, put enough triangles together and you can form anything.

Luckily for your average 7MHz, 16-bit processor, vectors can be manipulated using simple matrix operations, so they can be scaled and rotated in our imaginary space before being drawn to the screen. But lines aren't very exciting, unless they're white and Bolivian in origin.
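
To make that concrete, here's a minimal Python sketch of the idea: a line stored as two end points, rotated and then scaled by multiplying each point by a small matrix. The function names (`mat_mul_vec`, `rotate_z_matrix`, `scale_matrix`) are our own inventions for illustration and aren't lifted from any real engine of the era.

```python
import math

def mat_mul_vec(m, v):
    """Multiply a 3x3 matrix (a list of rows) by a 3-component vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]

def scale_matrix(s):
    """Uniform scale by factor s (hypothetical helper)."""
    return [[s, 0, 0], [0, s, 0], [0, 0, s]]

def rotate_z_matrix(angle_deg):
    """Rotation about the z axis by angle_deg degrees (hypothetical helper)."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]

# A line is nothing more than two points in space.
line = [[1.0, 0.0, 0.0], [2.0, 0.0, 0.0]]

# Rotate the line a quarter turn, then double its size.
rotated = [mat_mul_vec(rotate_z_matrix(90), p) for p in line]
scaled = [mat_mul_vec(scale_matrix(2), p) for p in rotated]

# The end points land at roughly (0, 2, 0) and (0, 4, 0),
# give or take floating-point rounding.
print(scaled)
```

Each point costs just nine multiplies and six adds per matrix, which is the sort of workload even a modest CPU can get through.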

As a stepping stone to true 3D, Doom and its clones were based on 2D maps that had simple height information and the actual 3D effect was a textured wall projection. Similarly, the monsters were flat bitmaps positioned on that same 2D map, scaled according to their distance from the player.

NOT SO ELITE: Graphics have come a long way since the dark days of 1982

This, combined with pseudo-lighting effects, enabled id Software to generate a basic, fully textured 3D world on a lowly 386 PC. Faster processors then enabled devs to combine the texture handling used in Doom with a true, full 3D vector engine to create the likes of Descent in 1995 and, in 1996, the seminal Quake.

But despite all the cleverness of these engines, incredibly basic abilities, such as texture filtering, were and remain simply too processor-intensive for a standard CPU to even consider attempting in real time.
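
To get a feel for the cost, here's a rough Python sketch of bilinear filtering, one common form of texture filtering (the article doesn't say which kind it has in mind, so treat that choice as our assumption). The `bilinear_sample` function and the tiny greyscale texture are hypothetical. Every filtered pixel needs four texel reads and three blends, and that's before you multiply it by every pixel on screen, every frame.

```python
def bilinear_sample(texture, x, y):
    """Sample a greyscale texture (list of rows) at fractional texel
    coordinates, assuming 0 <= x <= width - 1 and 0 <= y <= height - 1."""
    height = len(texture)
    width = len(texture[0])

    # The four texels surrounding the sample point (clamped at the edges).
    x0, y0 = int(x), int(y)
    x1 = min(x0 + 1, width - 1)
    y1 = min(y0 + 1, height - 1)

    # How far the sample sits between the neighbouring texels.
    fx, fy = x - x0, y - y0

    # Blend along x on the top and bottom rows, then blend those along y.
    top = texture[y0][x0] * (1 - fx) + texture[y0][x1] * fx
    bottom = texture[y1][x0] * (1 - fx) + texture[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

# A made-up 2x2 texture: dark in one corner, bright in the opposite one.
texture = [[0, 100],
           [100, 200]]

print(bilinear_sample(texture, 0.5, 0.5))  # dead centre: 100.0
```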

Acceleration heaven

The first time our gelatinous eyeballs gazed upon the smooth textures and lighting effects in Quake or the explosive effects of Incoming, we were hooked. It was these types of effects and abilities that enabled a mid-nineties PC to pull off arcade-level graphics.

While not wanting to delve into degree-level subjects, to really understand why graphics cards exist as they do today, it's helpful to know what's required to create that eye-pleasing 3D display we so enjoy. As you'll see, graphics cards started out handling only a fraction of the total process, whereas today they take on almost the entire task.

We've already mentioned vectors and how they can be used to build up models from triangular meshes. You start here with your models, which need to be transformed and scaled to fit into a virtual 'world view'. The application then applies a 'view space', which is how the player will view this world. It's a pyramid-shaped volume cut out of the world space that bounds the only area of interest to the renderer.

From this pyramid we get the clipping space, which is the visible square of our virtual viewport, and finally this is translated into screen space, where the 2D x/y coordinates are calculated ready for pixel rendering. These steps are important because originally all of this was done on the CPU, with stages slowly shifting over to the GPU. So are you still with us?
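
As a rough illustration of that last hop, here's a Python sketch that takes a point already in view space and turns it into 2D screen coordinates using a simple perspective divide. The camera conventions (looking down +z, a 90-degree field of view, a 640x480 screen) and the name `project_to_screen` are assumptions picked for the example rather than anything a particular API mandates.

```python
import math

def project_to_screen(point, fov_deg=90.0, width=640, height=480):
    """Project a view-space point (x, y, z) to screen-space pixels.

    Assumes the camera sits at the origin looking down +z.
    Returns None for points at or behind the camera.
    """
    x, y, z = point
    if z <= 0:
        return None

    # Focal scale derived from the vertical field of view.
    f = 1.0 / math.tan(math.radians(fov_deg) / 2.0)
    aspect = width / height

    # Perspective divide: distant points shrink towards the centre.
    ndc_x = (x * f / aspect) / z
    ndc_y = (y * f) / z

    # Map the -1..1 range onto pixels, flipping y so that up is up.
    screen_x = (ndc_x + 1.0) * 0.5 * width
    screen_y = (1.0 - ndc_y) * 0.5 * height
    return (screen_x, screen_y)

# A point straight ahead lands in the middle of the screen.
print(project_to_screen((0.0, 0.0, 5.0)))  # (320.0, 240.0)
```

Points that come back outside the 0 to 640 and 0 to 480 ranges are off screen, which is where the culling and clipping stages earn their keep.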

Because that was the simple part. Each of those 'views' is required for a different stage in rendering. For instance, to help optimise the rendering it makes sense to discard all the triangles that won't be drawn. Occlusion culling removes obscured objects, trivial clipping removes objects outside the 'view space' and finally back-face culling determines which triangles are facing away from the viewer and so can be ignored.
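
The facing-away test at the end of that list, usually called back-face culling, is easy to sketch in Python. We're assuming each triangle's normal, computed as (b - a) × (c - a), points out of its front face; real engines pick their own winding convention, and the helper names here are hypothetical.

```python
def sub(a, b):
    """Component-wise a - b for 3D vectors."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def is_back_facing(triangle, camera=(0.0, 0.0, 0.0)):
    """True if the triangle's front face points away from the camera."""
    a, b, c = triangle
    normal = cross(sub(b, a), sub(c, a))
    camera_to_triangle = sub(a, camera)
    # If the normal points the same way we're looking, we see the back.
    return dot(normal, camera_to_triangle) >= 0

# Two triangles in the same spot, with opposite vertex order.
away = ((0, 0, 5), (1, 0, 5), (0, 1, 5))
towards = ((0, 0, 5), (0, 1, 5), (1, 0, 5))

# Keep only the triangles worth drawing.
visible = [t for t in (away, towards) if not is_back_facing(t)]
print(len(visible))  # 1
```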

The clipping space view is created from what remains of the world space, and any models that straddle the viewing boundary need to be clipped off and retessellated, leaving only the visible triangles in the final scene.
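
Here's a minimal Python sketch of that clip-and-retessellate step, using just one boundary, a near plane at z = `near`, rather than the full viewing volume. It walks the triangle's edges in the style of the Sutherland-Hodgman algorithm (our choice for the example; the article doesn't name a method), then splits the resulting polygon back into triangles with a simple fan. `clip_triangle_near` is a hypothetical name.

```python
def clip_triangle_near(triangle, near=1.0):
    """Clip a triangle against the plane z = near, keeping the z >= near side.

    Returns a list of zero, one or two triangles.
    """
    polygon = []
    for i in range(3):
        current = triangle[i]
        following = triangle[(i + 1) % 3]
        current_inside = current[2] >= near
        following_inside = following[2] >= near

        if current_inside:
            polygon.append(current)

        # The edge crosses the plane, so add the intersection point.
        if current_inside != following_inside:
            t = (near - current[2]) / (following[2] - current[2])
            polygon.append(tuple(current[k] + t * (following[k] - current[k])
                                 for k in range(3)))

    # Retessellate: fan the clipped polygon back out into triangles.
    return [(polygon[0], polygon[i], polygon[i + 1])
            for i in range(1, len(polygon) - 1)]

# One corner pokes through the near plane, so the triangle becomes
# a four-sided polygon and is split into two triangles.
straddling = ((0.0, 0.0, 0.0), (1.0, 0.0, 2.0), (0.0, 1.0, 2.0))
print(len(clip_triangle_near(straddling)))  # 2
```

A triangle entirely in front of the plane comes back untouched, and one entirely behind it comes back as an empty list.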
