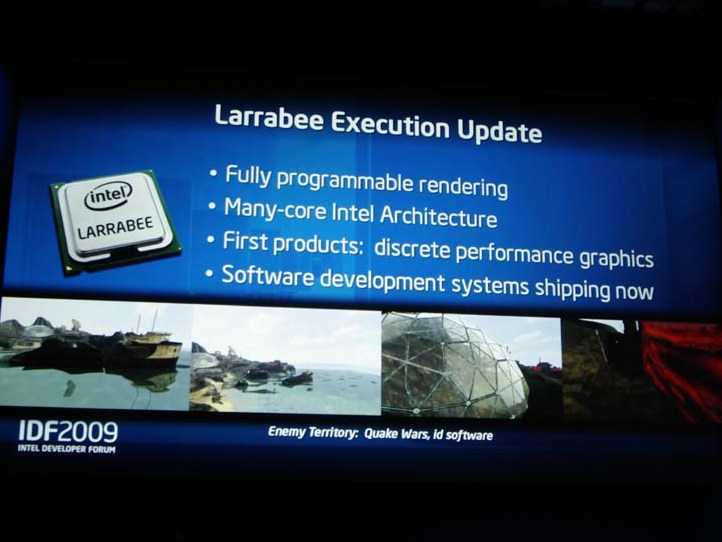

Intel demos Larrabee chip

Nice ray-tracing, but what about real games?

Intel treated the world to the first-ever public demonstration today of the mythical Larrabee co-processor. Sadly, however, Intel failed to disclose any details whatsoever about the much-anticipated graphics-optimised multi-core chip.

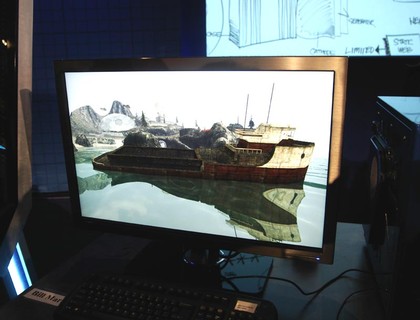

The demo itself was distinctly underwhelming, comprising an unimpressive exposition of Quake Wars: Enemy Territory with added real-time ray-tracing. While Larrabee is expected to be a […], the more pressing question is whether it will be any good for conventional raster-based graphics.

Raster graphics, of course, is the method used by existing GPUs from AMD and Nvidia, and that's not expected to change for several years to come.

Trouble ahead?

Moreover, in the weeks leading up to this year's Intel Developer Forum, the online rumour mill has been awash with tales of trouble regarding Larrabee.

For starters, it's said to be running at least six months late thanks to bugs afflicting early spins of the chip. Making matters worse, it's thought Intel is having problems getting conventional DirectX graphics running quickly through Larrabee's revolutionary programmable graphics pipelines.

The best way to scupper such negative rumblings would have been a solid demo of Larrabee running a current game. No such demo was forthcoming. Following the presentation, Intel bigwig Sean Maloney was distinctly prickly when pressed for further details. In the end, he disclosed nothing new at all.

But never mind, because we can tell you what Larrabee will look like. Namely, it will be a two-billion-transistor 45nm chip with 32 in-order cores. About the same size as AMD's new high-end GPU, the Radeon HD 5870, then. Intriguing stuff, but neither what we saw today nor the raw specs of the chip gets us any closer to knowing just how competitive it will be compared to incumbent GPUs from Nvidia and AMD.

Technology and cars. Increasingly the twain shall meet. Which is handy, because Jeremy (Twitter) is addicted to both. Long-time tech journalist, former editor of iCar magazine and incumbent car guru for T3 magazine, Jeremy reckons in-car technology is about to go thermonuclear. No, not exploding cars. That would be silly. And dangerous. But rather an explosive period of unprecedented innovation. Enjoy the ride.