MediaTek: meet the chip maker that has powered your favorite gadgets for years

And, how you're going to see a lot more of it soon

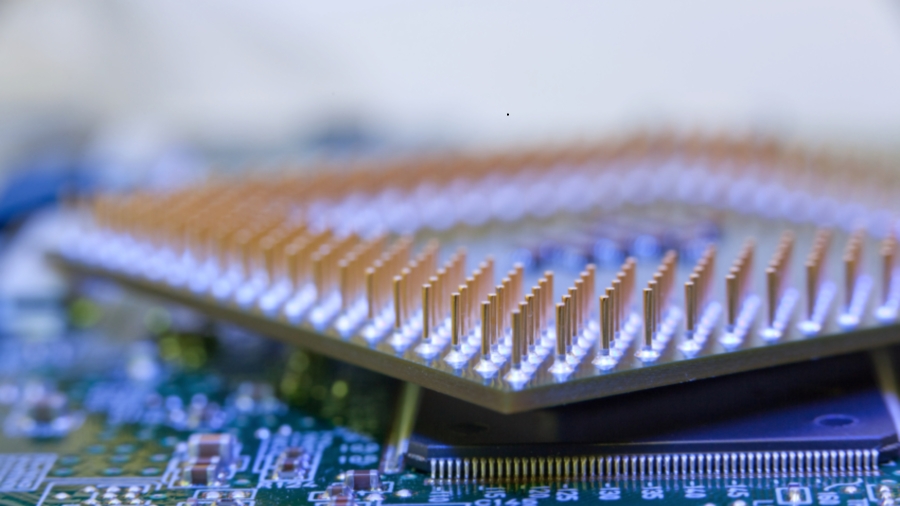

So, let's talk about chips specifically. Everyone generally just sees "processor" and has an idea of what that is from their phone to their laptop, but they might not even think about what's going on in there. Could you help illustrate some of the specific differences between, say, the chip inside my phone – an SoC, or system-on-a-chip – and the chip inside the computer I just built?

Probably a few things, but I can give some interesting examples. I think it all starts from the design constraints. The phone, no matter what we do, is always going to be power constrained [by the battery].

I can put more stuff on the silicon than I can turn on – that's physics today. I can cost-effectively give you more CPUs, more GPUs, more features than you want turned on at all times. The constraint is always power. Battery life is what drives everything. Those of us in the mobile space, this is what we've lived and breathed from day one, because that has never been solved and it never will.

It's always a battle to balance the performance [or speed] and the power [or longevity]. So, we do all of the usual things, like migrating the process node technology and Moore's Law, but you just push more performance and you're [then] back to balancing.

The first major difference is that most of the mobile stuff is built on ARM, and obviously the classic PC stuff is x86 [Intel's long-standing standard for PC chip architecture]. The ARM roadmap has moved up in performance; it's moved to 64-bit and high-end cores.

For our latest Helio X product, Helio X20, we've built a deca-core, so it's 10 cores. Straight off the bat, why the hell would you put 10 cores on a phone? Of course, we were the first to launch octa-core processors a couple of years ago.

But, it's really not about the number 10. What was much more fundamental and important was that we built the 10 cores in what we call tri-cluster.

If you're familiar with ARM's big.LITTLE, we basically extended that from two to three. So, instead of big.LITTLE, we have big, medium, little.

The logic is quite simple. In a phone, you always want to run on the lowest-power system that you can. So, in this architecture, we have two big cores – the biggest thing that ARM makes – and we build and design those things to be as fast as they can be.

We also have four little cores, and we optimize those for power, i.e. I don't care what the frequency is. I take the reasonable frequency of operation, but they're going to be built with the lowest possible power consumption.

The problem we saw with big.LITTLE is, if you pushed one [core] to huge performance and the other to lowest power consumption, the gap started to get big. Then, you kind of get this gear shift thing going from a Mini [Cooper] to a Ferrari.

So, we put another cluster in the middle, four cores – same architecture as this one but optimized more for frequency. [That makes for] a middle set of gears, right?

The whole idea is that, on top of the ARM architecture and the flexibility of this structure, we can overlay what we call Core Pilot – our name for what manages what's happening with the cores. The clever thing is then that you only run on the lowest possible power consumption configuration that you want.

For most of the time, the phone is probably quite happy running down here in the low cluster. And, these [other cores] are off, basically sitting there – dark silicon, if you will – not used. But, that's going to give you the longest possible battery life.

Then, if the user decides they want to run a heavy graphics game or launch a complex website, we may turn on some of the bigger cores. So, you'll get the performance boost when you need it.
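The selection logic described above, running on the lowest-power configuration that still meets demand, can be sketched in a few lines of Python. This is only an illustration and not MediaTek's Core Pilot: the core counts (four little, four medium, two big) come from the interview, while the `Cluster` type, the `pick_configuration` function and every capacity and power figure are hypothetical. It also simplifies by using one cluster at a time and ignoring single-thread speed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    name: str
    cores: int
    capacity_per_core: int  # hypothetical relative performance units
    power_per_core: int     # hypothetical relative power units


# Core counts follow the interview; capacity and power values are invented.
CLUSTERS = [
    Cluster("little", 4, 10, 1),
    Cluster("medium", 4, 20, 3),
    Cluster("big", 2, 50, 10),
]


def pick_configuration(demand: int) -> tuple[str, int, int]:
    """Return (cluster, cores switched on, power) for the cheapest
    single-cluster configuration whose capacity covers the demand."""
    best = None
    for cluster in CLUSTERS:
        for n in range(1, cluster.cores + 1):
            if n * cluster.capacity_per_core >= demand:
                candidate = (n * cluster.power_per_core, cluster.name, n)
                if best is None or candidate < best:
                    best = candidate
                break  # extra cores in this cluster would only add power
    if best is None:
        raise ValueError("demand exceeds what any single cluster can supply")
    power, name, n = best
    return name, n, power


print(pick_configuration(5))   # light load stays on one little core
print(pick_configuration(60))  # heavier load moves to the medium cluster
print(pick_configuration(90))  # peak load wakes the big cores
```

With these made-up numbers, everything not selected stays switched off, which is the "dark silicon" trade-off the answer describes.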

The innovation has come with – thinking about 10 cores is an awful lot, right? – but it's really more about the architecture of how they're configured and how they're used that allows for performance for those moments when you need it. In most people's phone usage, [those] are limited bursts of [speed].

Sure, there's probably the guy walking around playing video games all of the time.

Pokémon Go, man. That's my life in New York City.

But, I think that, in the high end, there's been a lot more innovation around computing architectures, with things like big.LITTLE and us extending it to three configurations with the Core Pilot, to try to find this balance of power and performance.

Perhaps the legacy computing platforms have never had to do as much with that.

There's also heterogeneous computing, and that's recognizing that a CPU is not always the right thing to run everything on. And now you have CPUs, GPUs – we may even put other clusters of things on there to run other stuff.

A good example in mobile phones again is that you may just want a standby mode, right? The phone is in standby, nothing else is powered on, but you might want to keep just a very small, embedded core even, alive. Maybe it's doing nothing more than looking at sensors.

Like Apple's co-processor.

Right, exactly. That's the other extreme of this, right? Wherein you build – and, we've done this on our high-end processors – you build a dedicated, very small, isolated power domain just to do this kind of stuff. Then, something will wake up the phone and you start up all of the other stuff.

Again, I come back to the constraints. Nobody wants a big, fat phone, and everybody wants a very slim, thin design, but they also want one or two days of battery life. So, you've got to balance those things.

That has generated a lot more innovation in the computing architecture and also how we use CPUs or GPUs or image pipeline processors, or other dedicated audio processors, for different functions and managing how you run these different systems.

As we see this move into AR [augmented reality] and VR [virtual reality], it's going to drive more of that. This is a lot of data moving around. To make this [VR] a good experience, you need high-resolution displays. You need fast frame rates. Maybe it's 4K/120 [frames per second] that we end up with, but that's a lot of data.
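To put a rough number on "a lot of data", here is a back-of-the-envelope calculation. It assumes a 3840×2160 panel, 24 bits per pixel and no compression; none of those figures are from the interview, and the `raw_video_rate` helper is purely illustrative.

```python
def raw_video_rate(width: int, height: int, fps: int, bits_per_pixel: int = 24) -> int:
    """Uncompressed pixel data rate in bits per second."""
    return width * height * fps * bits_per_pixel


# Assumed: 4K UHD (3840x2160) at 120 frames per second, 24-bit color.
bits_per_second = raw_video_rate(3840, 2160, 120)
gigabytes_per_second = bits_per_second / 8 / 1e9

print(f"{bits_per_second / 1e9:.1f} Gbit/s, about {gigabytes_per_second:.2f} GB/s")
```

Under those assumptions the display alone needs roughly 24 Gbit/s, or about 3 GB/s, of pixel data before any rendering work is counted.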

Doing that power-efficiently I think is going to be quite interesting.

The tri-cluster system puts you in a good position to attack that.

How far we extend that, who knows? And, when we talk about Core Pilot, it also manages our GPUs, not just our CPUs. Again, sometimes it's better to run something on the GPU. Very specific imaging might be more effective if you run on that GPU.

Again, having all of that on a single SoC rather than on different chipsets [like with x86 systems] makes that easier.

It seems then, that software or firmware is going to become even more important than it already is going forward to manage all of these things as smartly as possible.

And, for Core Pilot, for example, that's obviously something that's building on top of Linux kernels. It's obviously building on top of what ARM has already done with the big.LITTLE stuff.

It's what's taking the task requests from the Android operating system. So, it essentially sits between the lower-layer software and the application layer stuff coming from Android. And, it's making decisions on which task gets passed to which cores, which cores we need to turn on to satisfy those tasks.

And, [it's] doing the diagnostics, learning. "OK, so that application in the past used this kind of thing, therefore that's what I should turn on." All of that is built-in.
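The "learning" idea, remembering what an application needed before and turning that on next time, can be illustrated with a small frequency table. This is a hypothetical sketch, not how Core Pilot is implemented: the `UsageHistory` class, its methods and the app names are all invented for the example.

```python
from collections import Counter, defaultdict


class UsageHistory:
    """Remembers which cluster each app ended up needing (illustrative only)."""

    def __init__(self) -> None:
        self._seen: defaultdict[str, Counter] = defaultdict(Counter)

    def record(self, app: str, cluster: str) -> None:
        """Note that `app` ran on `cluster` during one session."""
        self._seen[app][cluster] += 1

    def predict(self, app: str, default: str = "little") -> str:
        """Guess the cluster to wake for `app`: the most frequently
        recorded one, or the low-power default for unknown apps."""
        counts = self._seen.get(app)
        if not counts:
            return default
        return counts.most_common(1)[0][0]


history = UsageHistory()
history.record("game", "big")
history.record("game", "big")
history.record("game", "medium")

print(history.predict("game"))   # most frequent past choice
print(history.predict("notes"))  # never seen, so start on the little cluster
```

Defaulting unknown apps to the little cluster mirrors the stated goal of always starting from the lowest-power option.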

What's interesting is that the desktop folks barely have to worry about that. They all have core controllers to varying degrees of success. Surely, they do have to worry when it comes to laptops, etc. But, there you're working with a much bigger supply of power, so maybe it's less of a consideration. You can probably see where we're going with this. Now, we won't name names, but being a specialist in SoC, what do you make of the more traditional chip makers having so much trouble making successful SoCs?

It is a little puzzling sometimes. For me, it comes back to a few things. One, the focus on mobile and the pace – frankly, it's brutal. It's much more dynamic.

Well, you're on a nine-month cadence or something like that, right?

Yeah, and you see how fast stuff is changing, right? Now, it's a 1.5 billion unit market versus a couple-hundred-million unit market. But, I think the PC space became a bit monopolized, is probably the right word, very early on. So, the cadence was controlled by one or two companies.

No one company in this mobile industry – like, we don't control it, Qualcomm doesn't control it. It's quite dynamic. I think that feeds a certain innovation and differentiation and competitive ecosystem. We have to be better, right?

On top of that, I think there are quite a lot of technologies that have to come together. Frankly, to be good at this stuff, a lot of it is also the kind of analog stuff – the radio piece, the GPS, the Wi-Fi, the power management. We've obviously put quite a lot of R&D effort into making sure we have all of those technologies driving toward that SoC mindset.

[That's] because it's competitive. The only way to make money is to make sure you can integrate as much as you can into the silicon. Those are the kind of things that have always driven us.

But, I'll name names, it has astounded me so far that Intel hasn't been able to deliver integrated SoCs. It's surprising.

Well, maybe there are some folks that didn't quite see the onset of every device on the planet being connected. It seems like you guys saw from an early point that TVs are going to have chips, these goofy little media players will have them, etc., whereas before it was some little 8-bit controller. Now, we need a full system on a chip to run a damn Blu-ray player.

Yeah, because it's running a full operating system, it's connected, it has to have the stacks, the networking. And, that opens up applications, different user configuration – all that kind of stuff.

So, I wonder how much complacency was there in terms of, "Oh, those devices aren't going to need that kind of stuff, so don't worry about it."

Yeah, I don't know. You'd have to go and ask them.


Joe Osborne is the Senior Technology Editor at Insider Inc. His role is to lead the technology coverage team for the Business Insider Shopping team, facilitating expert reviews, comprehensive buying guides, snap deals news and more. Previously, Joe was TechRadar's US computing editor, leading reviews of everything from gaming PCs to internal components and accessories. In his spare time, Joe is a renowned Dungeons and Dragons dungeon master – and arguably the nicest man in tech.