Critical components

Deca-core processors and video cards with 12GB of video memory are arguably some of the hottest components of our generation. But what do you know about the chips that made today's veritable supercomputers possible? Anything? Well then, pull up a chair and listen up, whippersnappers.

Without these chips, you wouldn't have Doom, Spotify, Facebook, Instagram or even the ability to open up a simple document. Not only did these processors make computing possible, they also shaped history, from the rise of 3D gaming to establishing a standard codebase for PCs everywhere.

This article is part of TechRadar's Silicon Week. The world inside of our machines is changing more rapidly than ever, so we're looking to explore CPUs, GPUs and all other forms of the most precious metal in computing.

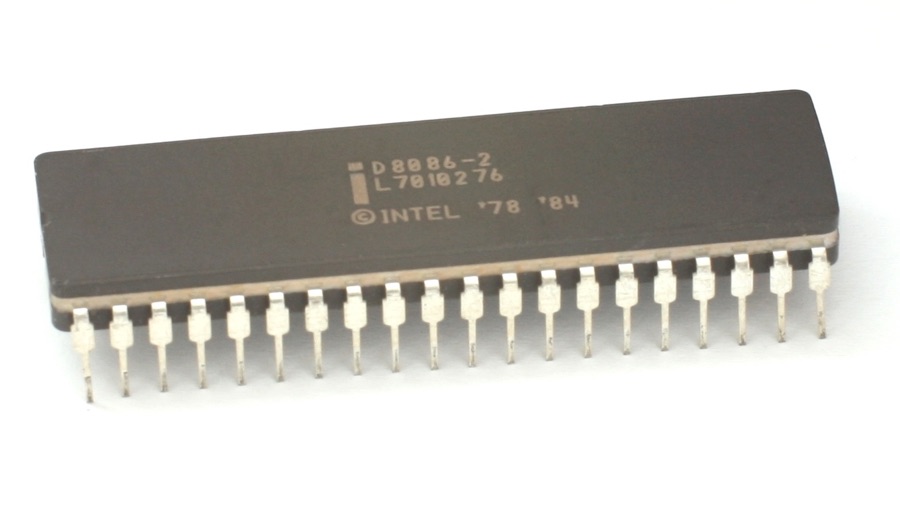

Introducing the x86 standard

By the late 1970s, the microprocessor was less than a decade old and Intel had just launched the 8086, its first 16-bit central processing unit (CPU), in 1978. Bigwigs including NASA and IBM adopted the chip, helping Intel gain dominance in the computing world. However, the real lasting legacy of this processor is that it established the x86 instruction set as the standard the computer industry needed in its early days. Without this universal platform, the exponential improvement in computer speed, capacity and price-to-performance might never have taken hold.

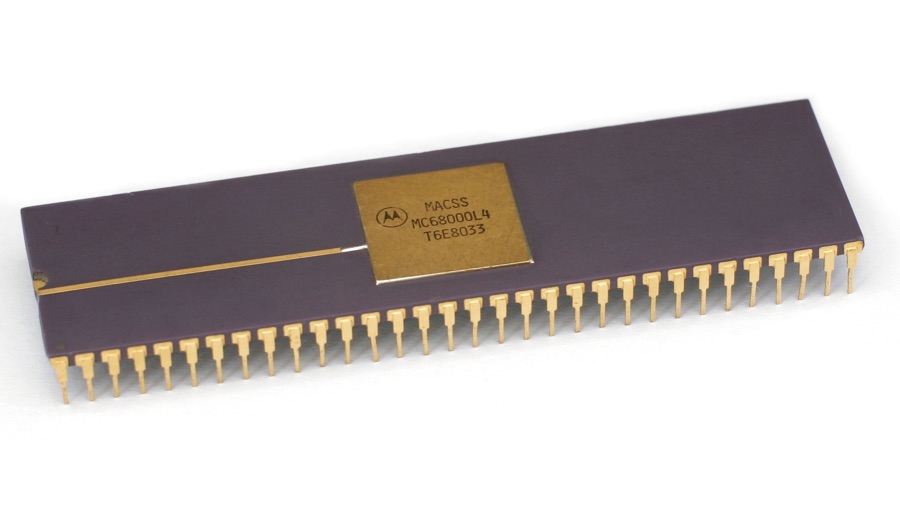

Hello world

Launched in 1979, hot on the heels of Intel's breakout 8086 chip, the Motorola 68000 paired 32-bit internal registers with a 16-bit data bus and leapfrogged everything else in development at the time. The chip was powerful and flexible, allowing it to be used in a wide variety of machines, including the Apple Lisa and Macintosh, Commodore Amiga, Atari ST and arcade cabinets throughout the world.

3D gaming rises

Before gamers had to worry about ambient occlusion, tessellation or a thousand other graphical settings, how games looked came down to a choice between 2D and 3D graphics. Up until 1996, graphics cards were built to power both flat and three-dimensional games, but the 3dfx Voodoo 1 was the first to focus solely on 3D, just as games like Doom and Quake were making the format popular.

To play 2D games, users had to pair the Voodoo with a second card through a VGA pass-through cable, but ultimately gamers were too busy tearing through first-person shooters to care. The Voodoo 1 arguably put 3D games on the map, and it was powerful enough to keep up with Duke Nukem 3D and even Unreal Tournament, which arrived three years after the card's introduction.

The GPU is born

In 1999, the Nvidia GeForce 256 became the first graphics card to be officially called a GPU. Technically, there were plenty of 'GPUs' before it, but Nvidia's new card was able to take on a laundry list of tasks, including transforming, lighting and rendering a minimum of 10 million polygons per second with just a single-chip processor. Beforehand, the CPU was responsible for the lion's share of the same tasks, and thus the ongoing relationship between GPUs and CPUs was born.

Dual GPUs rise

The ATI Rage Fury MAXX was the company's first ever dual-chip graphics card, announced at the tail end of 1999. Although 3dfx claimed the honor of creating the first dual- and quad-chip graphics cards with the Voodoo 4 and Voodoo 5, ATI (later acquired by AMD) created a powerhouse in its own right by pairing two Rage 128 PRO graphics cores with a "massive" 64MB of memory.

Beyond the sheer raw power of the card, the MAXX introduced a new method of rendering: alternate frames. Whereas 3dfx's cards tasked each graphics core with drawing alternating scan lines of the same image, ATI's dual-chip card had one chip draw an entire frame while its partner took the next one. Eventually, this same alternate frame rendering methodology was passed on to all multi-GPU systems.
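For the curious, the difference between the two approaches boils down to how work is dealt out to the chips. The toy Python sketch below is only an illustration of that idea, not how either company's drivers actually worked; the function names and the two-chip default are made up for this example.

```python
# Toy model of two ways to split rendering work across graphics chips.
# All names here are hypothetical and exist only for illustration.

def alternate_frame_schedule(num_frames, num_chips=2):
    """Alternate frame rendering: each chip draws whole frames in turn."""
    schedule = {chip: [] for chip in range(num_chips)}
    for frame in range(num_frames):
        # Frame 0 goes to chip 0, frame 1 to chip 1, frame 2 back to chip 0...
        schedule[frame % num_chips].append(frame)
    return schedule


def scanline_schedule(frame_height, num_chips=2):
    """Scan-line interleaving: chips share one frame, alternating lines."""
    schedule = {chip: [] for chip in range(num_chips)}
    for line in range(frame_height):
        # Line 0 goes to chip 0, line 1 to chip 1, line 2 back to chip 0...
        schedule[line % num_chips].append(line)
    return schedule


if __name__ == "__main__":
    print("Whole frames per chip:", alternate_frame_schedule(6))
    print("Scan lines per chip:  ", scanline_schedule(8))
```

In the first function each chip owns complete frames, while in the second both chips contribute to every single frame.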

Olympus falls

With 2,688 CUDA cores, 6GB of GDDR5 RAM and 7.1 billion transistors all packed into a 10.5-inch frame, the original Nvidia GTX Titan was an Olympian among graphics cards. When it was announced back in 2013, there wasn't any other card that delivered 4,500 gigaflops of raw power.

Of course, priced at $1,000, it came at an extreme premium, and that's no real surprise considering it was designed to power gaming supercomputers. Regardless of whether gamers had the money to afford it, the Titan demanded everyone's attention and, more importantly, set a new benchmark in graphical power for everyone to chase. And that race doesn't show any sign of slowing down.
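If you're wondering where a number like 4,500 gigaflops comes from, a common back-of-the-envelope estimate multiplies the core count by the clock speed and by the number of floating-point operations each core can finish per cycle. The Python sketch below runs that sum for the Titan's 2,688 CUDA cores. Note that the 837MHz base clock and the two-operations-per-cycle figure (one fused multiply-add) are assumptions brought in for this example and are not stated in this article, and the function name is hypothetical.

```python
# Rough theoretical peak throughput for a GPU (single precision).
# The clock speed and ops-per-cycle values are assumptions, not article data.

def peak_gflops(cores, clock_mhz, flops_per_core_per_cycle=2):
    """Estimate peak gigaflops as cores x clock x operations per cycle."""
    return cores * flops_per_core_per_cycle * clock_mhz / 1000.0


TITAN_CUDA_CORES = 2688        # from the article
ASSUMED_BASE_CLOCK_MHZ = 837   # assumption: base clock, not stated in the article

if __name__ == "__main__":
    estimate = peak_gflops(TITAN_CUDA_CORES, ASSUMED_BASE_CLOCK_MHZ)
    print(f"Estimated peak: {estimate:.0f} gigaflops")
```

Under those assumptions the estimate lands within a whisker of the 4,500 gigaflops quoted above. Real-world performance depends on far more than this theoretical ceiling.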


Kevin Lee is a former computing reporter at TechRadar. Kevin is now the SEO Updates Editor at IGN, based in New York. He handles all of the best-of tech buying guides while also dipping his hand into entertainment and games evergreen content. Kevin has over eight years of experience in tech and games publications, with previous bylines at Polygon, PC World and more. Outside of work, Kevin is a major movie buff who loves cult and bad films. He also regularly plays flight and space sims and racing games. IRL he's a fan of archery, axe throwing and board games.