10 biggest moments in computing history

The last 70 years of technological advancement

Then and now

There have been huge advances in the computer world since the early 1900s. It all started with calculators and typewriters being considered 'new-fangled' contraptions, and in the 70-odd years since the true birth of the computing era, there's been an exponential explosion in technology and capabilities.

No longer are we in the age of room-sized computers. No longer are we in the age of analog. Yet, sometimes it's good to look back and see where we've come from, to help us imagine where we're going. With that in mind, here are our top 10 biggest moments in computing history.

This article is part of TechRadar's Silicon Week. The world inside of our machines is changing more rapidly than ever, so we're looking to explore CPUs, GPUs and all other forms of the most precious metal in computing.

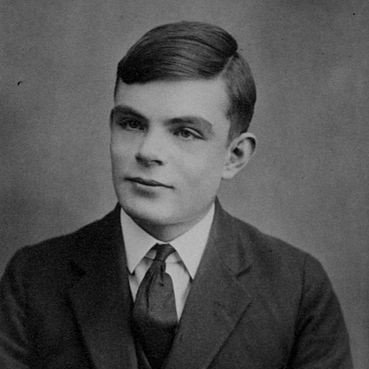

1939: Alan Turing's mad genius

Born in 1912 in Paddington, London, Alan Turing left a remarkable legacy in the computing industry. By 1936, he was writing mathematical papers on what algorithms can and cannot compute, and conceptualizing the Turing machine as the earliest model of a computer. He led a group of cryptologists and scientists in an attempt to decipher German messages – although, unlike in the movie retelling, it was actually a team of Polish scientists that first cracked the Enigma code.

In 1939, Turing, fellow codebreaker Gordon Welchman and their team created a giant machine that could decrypt daily messages. Named the Bombe (or 'Christopher' in the movie), it was a complicated electrically powered machine built to work out the message key of the double-encrypted German messages.

1941: First mouse

The concept for the 'mouse' was invented in 1941, though the first publicly announced mouse didn't actually come into being until around 1968.

In 2003, a former Bristol professor named Ralph Benjamin claimed he was the original creator of a mouse-like device. Secretly patented in 1946 by the British Royal Navy, the 'ball tracker' was a metallic ball that rolled between wheels to send coordinates to a radar system. However, it wasn't public knowledge until decades later.

Separately, Doug Engelbart and his team at the Stanford Research Institute built a wooden shell on two wheels that sent movement information back to the computer. They went on to demonstrate their wooden mouse publicly in 1968, and by 1973 point-and-click computers were being developed.

1971: Microprocessor computing

In the early days, computers were huge. They were impressive to be sure, but having entire buildings devoted to a single machine wasn't really efficient. This led to the invention of smaller and smaller computing components, the most important being the microprocessor.

Intel developed the first micro-sized central processing unit (CPU), the 4004, in 1971. It could process a whole four bits at any given time. Ten years later, in 1981, the Intel 8088 made its first appearance in a consumer computer, the IBM PC. The Intel 8088 ended up kicking off the Pentium series five model revisions later, and by the end of the run the chips had 20,000 times more transistors and a nearly 3,000 times quicker clock speed.

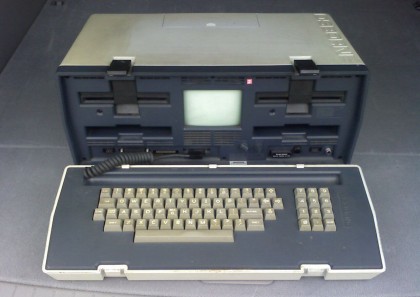

1981: Portable computers

The lightweight laptops and tablets out today have nothing on the original 'portable computer'. The first portable computer – the Osborne 1 – boasted a 4MHz CPU, 64 kilobytes of memory, a detachable (but wired) keyboard that doubled as part of the protective casing for transport, and even a built-in 5-inch black-and-white screen. Back in 1981, it was the ultimate portable computing system.

The Osborne spawned a mobile-computing revolution that eventually led to the first laptop. Even 35 years ago, the first portable computer came with the first computer games, a text-based game and later Pong, installed via 5.25-inch floppy disks. Taking inflation into account, these portable computers would be priced at $4,500 today, compared to the $1,800 they cost originally.

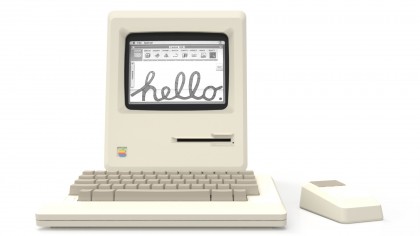

1984: Birth of the Macintosh

The rise of personal (desktop) computing saw the emergence of the Commodore 64, the IBM Personal Computer, and the Macintosh 128K. But before any of these machines even hit the market, most computers were much the same, featuring black-and-white screens, Motorola processors and mostly DOS-based user interfaces. In the early days, Apple and Microsoft were even friendly (with some Apple PCs running early Microsoft OS software).

However, after Apple and Microsoft split on not-so-friendly terms, the Macintosh OS debuted in 1984 with a Super Bowl ad made by Ridley Scott. Thus began the Apple Macintosh versus Microsoft war that rages on today. Steve Jobs accused Microsoft of stealing Apple's graphical user interface, though arguably both took inspiration from the user interface created in the Xerox PARC labs.

2005: Apple and Intel

Since 1991, Apple had been using IBM PowerPC processors in its PowerBook laptop computers (the MacBook's precursor), but it eventually dropped IBM's chipset because gains in computing power had stagnated. In 2005, Apple announced it would use Intel x86 processors for its popular line of computers after nearly 15 years of partnership with IBM. Only three years after transitioning to Intel CPUs, Apple released the Intel-only OS 'Snow Leopard', making the PowerPC machines obsolete.

Switching to an Intel chipset enabled Apple to expand its mobile computer lineup with the MacBook Air and eventually the revived MacBook. Apple used both the PowerPC processors and those from Intel for quite a while, until finally doing away with PowerPC support in late 2013.

2002: The dawn of hyperthreading

In 2002, Intel revolutionized another aspect of computer processing with hyperthreading. Each processor contains one or more cores, and hyperthreading lets a single core work on multiple tasks in parallel. Essentially, it enables simultaneous processing by presenting each physical core to the operating system as two virtual (logical) processors, the second of which handles a second set of tasks.

Intel gave the example of turning a one-lane highway into a two-lane highway, speeding up traffic for all lanes (or processes in hyperthreading). In multi-core processors, multiple tasks are being run through each core at the same time, ensuring the fastest processing possible.
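To make the idea of 'virtual processors' concrete, here is a minimal Python sketch. It asks the operating system how many logical processors it sees, then estimates the number of physical cores. The helper names and the default of two threads per core are our own assumptions for illustration, not anything Intel publishes, and the estimate will be wrong on chips with hyperthreading disabled or absent.

```python
import os


def logical_processors() -> int:
    """Return the number of logical processors the OS reports (at least 1)."""
    # os.cpu_count() can return None when the count is undetermined.
    return os.cpu_count() or 1


def physical_core_estimate(logical: int, threads_per_core: int = 2) -> int:
    """Estimate physical cores, ASSUMING every core exposes
    `threads_per_core` logical processors (hypothetical default: 2)."""
    return max(1, logical // threads_per_core)


if __name__ == "__main__":
    logical = logical_processors()
    print(f"Logical processors seen by the OS: {logical}")
    print(f"Estimated physical cores (assuming 2 threads per core): "
          f"{physical_core_estimate(logical)}")
```

On a hyperthreaded quad-core chip, the operating system would typically report eight logical processors, which is the 'two-lane highway' from Intel's analogy.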

While it was left on the back burner for a little more than a decade, hyperthreading came back in full swing with Intel's 6th-generation Skylake processors. Most computers running Intel Core i-series chips use hyperthreading, including Macs, Windows PCs, tablets and more.

2006: AMD Buys ATI

AMD (Advanced Micro Devices) was founded in 1969, and created microchips and circuits for early computer components. After finding its footing, the company would later expand into manufacturing other computer components and even building parts for Intel chips. Eventually, AMD would create its own spin on the Intel lineup and offer competing processors to challenge the computing behemoth.

ATI started making integrated graphics processors for computers in 1985 and created standalone graphics processors by the early 90s.

But it was a big shake-up in the computing world when these two merged in 2006. Both companies were already huge names in the business when AMD bought ATI for $4.3 billion and 58 million shares of stock (around $5.4 billion total).

The big name in chipsets purchasing the big name in graphics processing to create one mega computer dynamo was a huge thing. While the ATI name only lasted a few more years before it was retired, the Radeon graphics line it created has endured to this day.

2013: Console crazy

While the first console was arguably the Atari 2600, with the Nintendo Entertainment System soon to follow, it was the introduction of the Sega Genesis that truly sparked the first great console war.

However, over time even Nintendo's popularity waned, leaving only Sony and Microsoft to duke it out for the top spot in console computing since 2001. The heated competition ushered in a new age of entertainment and versatile computing.

The Xbox had better specs, but the PlayStation 2 had the longevity and the following, as Sony had released the first PlayStation in 1994. In an upset, the Xbox 360 dethroned the PS3 as the top console of the previous generation. Now the tides have shifted once again in the PS4's favor over the Xbox One, although the recent introduction of the Xbox One S is turning the tables for Microsoft.

Even 15 years later, the console wars haven't changed. What has changed, though, is that the two systems are closer to each other, with both essentially running PC-style x86 processors. Both consoles have essentially become little PCs – and Microsoft only means to merge the two platforms more closely together with Xbox Play Anywhere and Windows 10.

20xx: Artificial intelligence

We end back where we began, with Alan Turing.

The main identifier of artificial intelligence is that the machine can think freely and critically rather than just delivering programmed responses to standardized inputs. Turing developed three ways to test for actual artificial intelligence in a computer 50 years before we saw a machine that possessed even the most rudimentary AI capabilities.

In the test Turing described as "The Imitation Game" (yes, like the movie), the computer has to successfully imitate a human. A computer (A) and a male human (B) try to convince an interrogator (C) that the computer is human.

Nothing has managed to pass Turing's test yet. Even one of the most advanced robots in the world, Honda's running and dancing bipedal ASIMO (Advanced Step in Innovative Mobility) robot, doesn't have the intelligence to be considered artificial intelligence. It may have a humanoid look and speech pattern, but it wouldn't be able to live up to Turing's artificial intelligence test.

In the video game Halo, Cortana is an artificial intelligence, a glimpse of what may be ahead for us. Yet Microsoft's current version of Cortana and Apple's Siri aren't nearly there, as they both still rely on static responses to the problems put to them. While true artificial intelligence hasn't yet come to fruition, that hasn't stopped us from trying.
