10 Britons who shaped the history of computing

From Babbage and Wilson to World War Two's unsung heroes

While Americans may have stolen the computing limelight since the end of the Second World War, Brits have been responsible for many of its founding principles, some going back to the 19th century, and for several of the big steps that have made it such a powerful force. There is a long history of tech-savvy pioneers who were born and worked under the Union Jack.

We've put together a list of 10 Britons who contributed to the rise of computing for Brit Week, presented in no particular order.

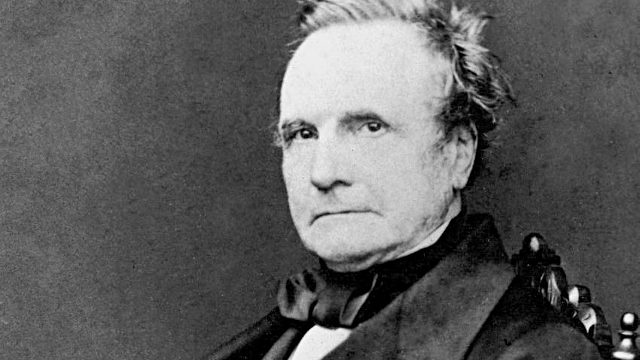

1. Charles Babbage

English mathematician Charles Babbage is often dubbed the 'father of computing' for his conceptual work on early mechanical calculators in the 19th century.

In 1822, Babbage invented a steam-powered machine he named the difference engine, which could perform and print complex calculations using wheels and gears arranged in columns. Despite the machine's great potential, Babbage managed to complete it only partially over the next decade.

Assisted by Ada Byron, daughter of the poet Lord Byron, he partly built the difference engine's successor, the analytical engine, in 1835. It could be programmed using punch cards to perform huge calculations up to 31 digits long, and could be described as the first programmable computer.

Babbage is remembered as a surly and difficult character who never quite managed to complete his creations due to insufficient funding (although he did manage to secure government grants). Despite this, his brilliance is evident in the fact that it wasn't until the next century that another serious attempt was launched at building anything that resembled a computer.

2. Ada Byron (Countess of Lovelace)

Being Lord Byron's daughter brought Ada Byron certain privileges, one of which was meeting important people of the time, such as Charles Dickens. Another of those people was Charles Babbage, who was introduced to her at a party when she was 17. By that time Byron had already developed a gift for mathematics and language, and she was said to be fascinated by Babbage's ideas, many of which were influenced by Joseph Jacquard's mechanical loom.

There is evidence in written letters that Babbage, who went on to collaborate with Byron on his analytical engine, referred to her as 'the Enchantress of Numbers'. Byron designed the 'punch card' program that gave instructions to the machine and allowed it to be 'programmed', in addition to translating a description of the engine from French into English while adding her own notes and further developing Babbage's ideas.

As such, she is often dubbed the first female computer programmer, and in 1980 the US Department of Defense named a newly developed computer language "Ada" after her.

3. Sophie Wilson

Born in Leeds in 1957, Sophie Wilson is noted for multiple contributions to computing. Her career dates back to 1979, when she joined Acorn Computers to co-write the operating system for the BBC Micro, which is based on the programming language BBC Basic (Basic being short for beginner's all-purpose symbolic instruction code).

The BBC Micro was hugely successful, going on to sell 1.5 million units after its release in 1981, more than a decade before Windows 3.1 launched. It was one of the first PCs for a generation of people, including future UK technology figures David Darling (co-founder of Codemasters) and Mike Lynch (co-founder of Autonomy).

Perhaps Wilson's greatest achievement, however, lies in designing the instruction set for one of the first RISC processors, the Acorn RISC Machine (ARM), alongside its principal designer Steve Furber. The microprocessor transformed handheld computing by boosting processing speed and simplifying task management while allowing low power consumption.

Subsequent versions of the microprocessor went on to find a home in the vast majority of mobile computers and smartphones in the world today.

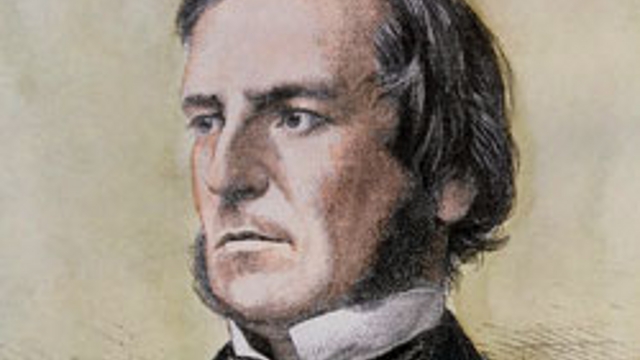

4. George Boole

English mathematician George Boole was a logical man in more ways than one. He developed an algebra for logic in the mid-1800s, which went on to form the basis for modern digital computer logic.