Google plans to make the internet safer for kids – but it’s not enough

Analysis: Google has a greater duty of care

Updated: We've clarified some points to give a better idea of what the goals of the announced features are. As we pointed out in the original story, Google's moves are welcome, but there is still work to do.

Google should heed the wise words of Spider-Man’s Uncle Ben: with great power comes great responsibility. As arguably the most influential company on the internet, thanks to its search engine, Chrome browser and YouTube video sharing site, Google has a duty of care towards the kids and teens who use its services.

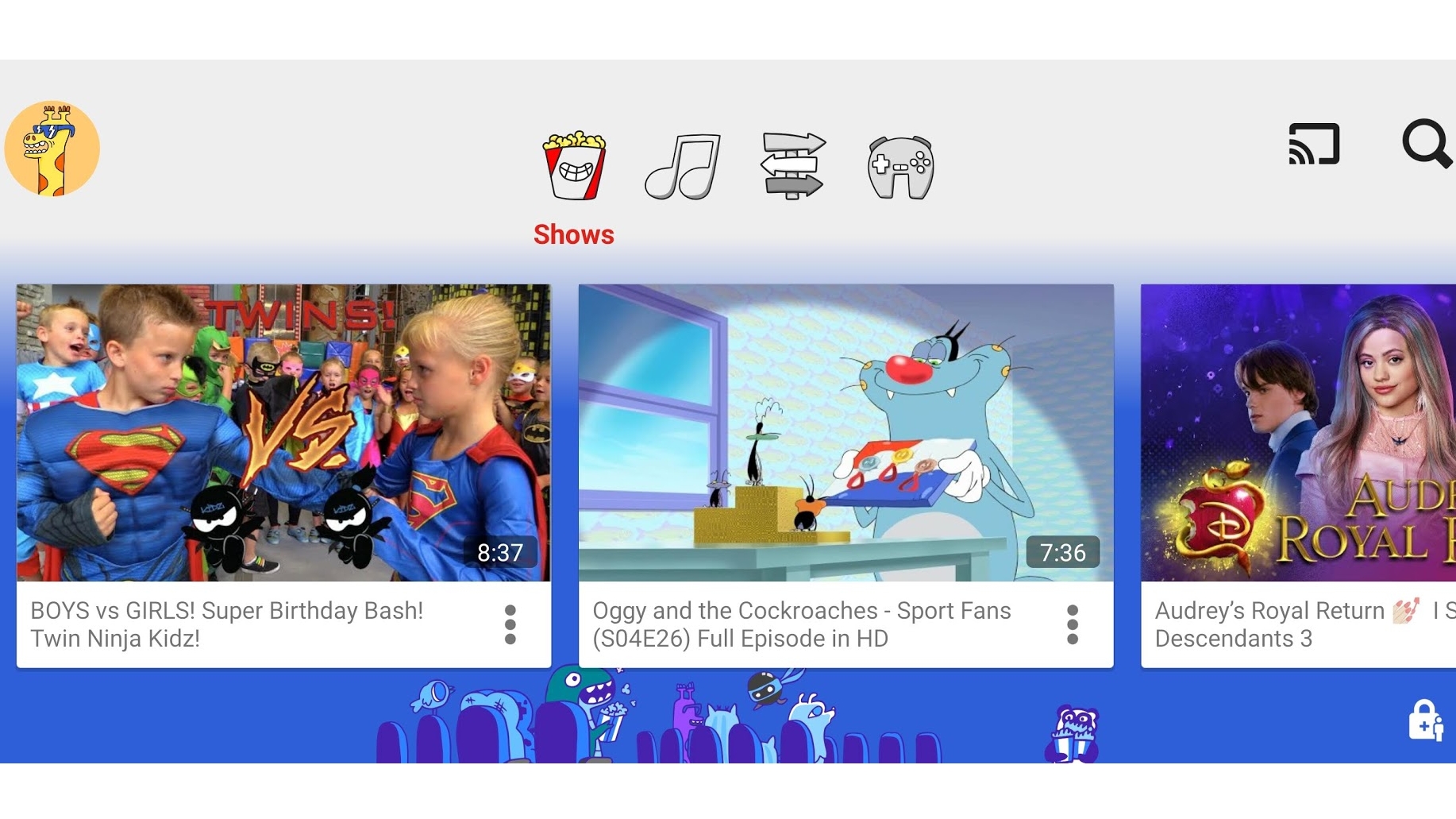

The company has been rolling out parental control features, such as Family Link, along with dedicated apps such as YouTube Kids, but the harsh truth is that children are still accessing inappropriate content, either deliberately or by accident, and they are doing this through Google’s products.


So, it’s welcome that Google is outlining its plans to improve this with a blog post titled “Giving kids and teens a safer experience online.”

This includes new tools that allow people under 18, or their parent or guardian, to request that their images be removed from Google Image results. Having your image posted online without your consent can be extremely distressing, so this move will at least make it harder for people to view and share those images.

Meanwhile, YouTube, possibly Google’s most popular service when it comes to kids and teens, is changing the default upload setting for teens aged 13-17 to the “most private option available”. Google also promises that it will “more prominently surface digital wellbeing features, and provide safeguards and education about commercial content.”

SafeSearch will now be enabled by default on Google for people under 18 and is coming to smart displays that use Google. The Google Assistant will also be tweaked to help prevent inappropriate content from being shared – though Google is rather vague on the specifics.


Google is also working on adverts across the internet, and on apps in the Play Store, to ensure they are appropriate for the user's age.

Good, but not enough

These changes are a good start, but in our view they still don’t go far enough, and in some cases still don’t address many of our concerns.

For example, while under-18s can now request the removal of their images from Google Image results, this won’t remove the images from the internet – it just means they shouldn’t appear in Image search results. Presumably, any sites hosting the images will remain up, and could even be found via a standard Google search.

Putting the onus on children or guardians to find and flag their images may be an upsetting experience.

To be fair to Google, it can't dictate what websites host – and nor should it. This new feature could be used for any kind of image of someone under 18, such as school photos hosted on a website, or family photos shared on social media, where parents or guardians don't want images that include their children to be easily searchable. It wouldn't be fair to expect Google to penalise these institutions, and there would likely be a backlash if it did.

If a child, parent or guardian requests that an image be removed from Google Image search, no justification or policy violation is needed, which should hopefully make the process as quick as possible.

However, for more worrying images, such as doctored photos or images shared without consent and used for cyber bullying, there's still a concern that Google could do more.

We spoke to security specialists and industry experts about Google’s latest announcements, and they appear to be unimpressed.

“Charities and Administrations have been advocating and providing most of these ‘new’ measures for quite some time,” Brian Higgins, security specialist at Comparitech, told us.

“There is now clearly enough of a business case for Google to accept that they should have been doing all of this and much more. None of the ideas here are new, they just cost money and impact on Google’s ability to gather data and target advertising which is why it’s taken them so long to implement any of them.”

Baroness Kidron's child safety organisation, 5Rights Foundation, welcomed the move, saying that "these steps are only part of what is expected and is necessary, but they establish beyond doubt that it is possible to build the digital world that young people deserve, & that when govt takes action, the tech sector can and will change."

Walking the tightrope

As for YouTube, making default upload settings more private for teens aged 13-17 doesn’t address many parents’ concerns about the video sharing site. For a start, allowing 13-year-olds to upload videos at all is a bit worrying, and any tech-savvy teen (which is pretty much all of them) can easily change the default sharing settings.

But what about unsuitable content that still crops up in videos that appear to be aimed at children? Seemingly kid-friendly videos that contain violence or inappropriate language (as reported by the New York Times) can still all too easily be found by children.

Google’s announcement doesn't fully address that, though it has now stopped autoplay for users under 18, something YouTube Kids previously had issues with. And while Google is constantly removing disturbing videos that feature child-specific themes, the onus still remains on parents to monitor everything their children watch.

As for turning SafeSearch on by default for under-18s, that’s a bold move, but how much difference will it make? Originally we read Google's announcement as stating that you would need to be logged in for SafeSearch to apply, which would not help people who use Google without signing in to an account (such as on shared school computers).

In the statement, Google says "SafeSearch... is already on by default for all signed-in users under 13 who have accounts managed by Family Link. In the coming months, we’ll turn SafeSearch on for existing users under 18 and make this the default setting for teens setting up new accounts."

However, Google has been in touch to explain that you will not need to be signed in for SafeSearch to be enabled if Google thinks the user is under 18. You need to provide your date of birth when setting up a Google account, which gives Google good evidence of your age. But even if you don't use a Google account, Google can still estimate a user's age, and if it believes they are under 18, it can enable SafeSearch.

Google hasn't said exactly how this works, but the estimate is based on data and signals from search history, YouTube use and activity on other Google services.

This is promising, but it won't help people using shared computers. Still, this may be the best Google can do. It has to walk a careful line between limiting what children see online and censoring websites. Many people worry about Google’s power over the internet as it is, so the company won’t want to be seen to be overreaching.

However, children and teens accessing inappropriate content remains a real problem, and as the world's biggest search provider, Google will always be in the firing line over its plans to tackle it.

We’ve contacted Google about the points raised here, and will update this article when we hear back.


Matt is TechRadar's Managing Editor for Core Tech, looking after computing and mobile technology. Having written for a number of publications such as PC Plus, PC Format, T3 and Linux Format, there's no aspect of technology that Matt isn't passionate about, especially computing and PC gaming. He’s personally reviewed and used most of the laptops in our best laptops guide, and since joining TechRadar in 2014 he's reviewed over 250 laptops and computing accessories.