Here's the secret behind 8K AI upscaling technology

The history of upscaling, and why AI is an actual game-changer

Samsung’s 2020 business strategy for TV sales is simple: 8K or bust. With its QLED 4K TV sales being undercut by budget 4K TVs, Samsung plans to shift the market again, to a format that has (so far) very few competitors, but also very little native content.

Yet as we saw in our 4K vs 8K comparison test earlier this year, you don’t actually need video shot in 7680 × 4320 (8K) resolution to take full advantage of those millions of pixels—Samsung’s 8K TVs use AI upscaling to convert any video type (SD to 4K and everything in-between) into 8K resolution.

Upscaling, of course, isn’t anything new. For years, 4K and even HD sets found ways to stretch lower-resolution content to fit the greater pixel-per-inch ratio of modern TVs. But with 8K TVs needing to fill four 4K TVs’ worth of pixels, conventional upscaling methods just don’t work, for reasons we’ll get into below.

Now, after visiting the Samsung QA Labs in New Jersey and speaking with its engineers, we have a better idea of how Samsung uses artificial intelligence and machine learning to make 8K upscaling possible—and how its AI techniques compare to other TV manufacturers’ early AI efforts.

Why conventional upscaling looked so terrible

Before 1998, television broadcast in 720x480 resolution, and films shot in higher quality were compressed to fit that format. That’s 345,600 pixels of content, which would only take up a tiny window on modern TVs with higher pixels-per-inch (PPI) ratios. That SD content? It must be stretched to fit over 2 million pixels for high definition, over 8 million for 4K or over 33 million for 8K.
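The arithmetic above is easy to check. This short Python snippet (the labels are our own) multiplies out each resolution and shows how many screen pixels every SD source pixel has to cover on average:

```python
# Common broadcast/display resolutions as (width, height)
RESOLUTIONS = {
    "SD (480p)": (720, 480),
    "Full HD": (1920, 1080),
    "4K UHD": (3840, 2160),
    "8K UHD": (7680, 4320),
}

def pixel_count(name: str) -> int:
    width, height = RESOLUTIONS[name]
    return width * height

sd = pixel_count("SD (480p)")
for name in RESOLUTIONS:
    n = pixel_count(name)
    # Ratio = how many screen pixels each SD source pixel must fill, on average
    print(f"{name:10} {n:>12,} pixels  ({n / sd:5.1f}x SD)")
```

The last row is the striking one: going from SD to 8K, each original pixel has to stand in for 96 screen pixels.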

The baseline for upscaling is maintaining the proper pixel ratio by simple multiplication. To convert HD to 4K, the TV’s processor must blow up one HD pixel to take up four pixels of space (2 × 2) on the higher-res screen, or 16 pixels (4 × 4) during an HD-to-8K conversion.
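Here is that multiplication as a minimal Python sketch, assuming a grayscale image stored as a list of rows of brightness values; `replicate` is a hypothetical helper name, and real TV processors obviously don't work on Python lists:

```python
def replicate(image, factor):
    """Upscale by pure pixel replication: each source pixel becomes a factor x factor block."""
    out = []
    for row in image:
        # Repeat each pixel horizontally...
        wide_row = [px for px in row for _ in range(factor)]
        # ...then repeat the widened row vertically (copies, so rows aren't shared).
        out.extend([list(wide_row) for _ in range(factor)])
    return out

hd_patch = [[10, 200],
            [90, 40]]              # a tiny 2x2 "HD" patch of grey levels
to_4k = replicate(hd_patch, 2)     # HD -> 4K: 2x per axis, 4 pixels per source pixel
to_8k = replicate(hd_patch, 4)     # HD -> 8K: 4x per axis, 16 pixels per source pixel
for row in to_4k:
    print(row)
```

Every value in `to_4k` is just a copy of an original value; no new detail is created, which is exactly why the unprocessed result looks blocky.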

Without any image processing, the picture ends up, to quote Tolkien, “sort of stretched … like butter that has been scraped over too much bread.” Each bit of data becomes an unnaturally square block, without a natural gradient between details and colors. The result is a lot of blocking: visible, jagged squares around objects on screen.


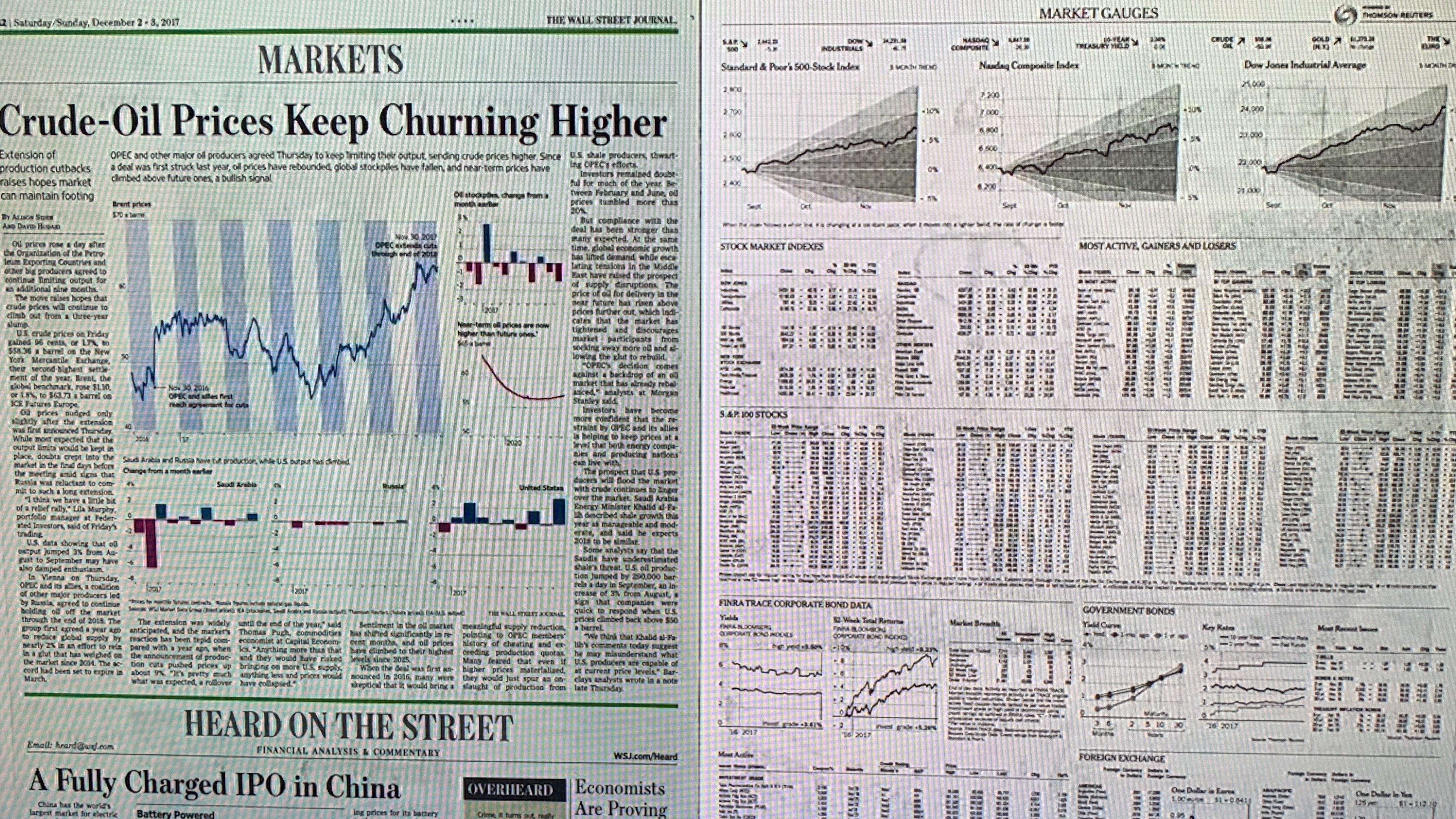

You’ll also likely see something called “mosquito noise”. To squeeze a video through your limited internet bandwidth, broadcasters and websites use lossy compression, which throws away fine detail and leaves behind visible errors called “compression artifacts”. These flawed pixels swarm around parts of the screen with sharp contrasts, like the brown bridge against the blue sky in the image above.
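Mosquito noise is a side effect of how lossy codecs such as JPEG and MPEG-2 work: they convert 8 × 8 blocks of pixels into frequency coefficients with a discrete cosine transform (DCT), then store the high-frequency coefficients coarsely or not at all. The Python sketch below is a simplified 1D illustration of our own, not any codec's actual pipeline: it drops the highest-frequency coefficients of one 8-pixel row containing a sharp edge, as a crude stand-in for coarse quantization.

```python
import math

N = 8  # JPEG and MPEG-2 transform 8x8 blocks; here we use a single 8-sample row

def dct(x):
    """Orthonormal DCT-II of a length-N sequence."""
    out = []
    for k in range(N):
        c = math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)
        out.append(c * sum(x[n] * math.cos(math.pi / N * (n + 0.5) * k) for n in range(N)))
    return out

def idct(X):
    """Inverse of dct() (orthonormal DCT-III)."""
    out = []
    for n in range(N):
        s = 0.0
        for k in range(N):
            c = math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)
            s += c * X[k] * math.cos(math.pi / N * (n + 0.5) * k)
        out.append(s)
    return out

edge = [0, 0, 0, 0, 255, 255, 255, 255]   # dark object against a bright sky
coeffs = dct(edge)

# Crude stand-in for coarse quantization: discard the highest-frequency coefficients.
kept = [c if k < 4 else 0.0 for k, c in enumerate(coeffs)]
decoded = idct(kept)
print([round(v) for v in decoded])
```

Decoding the full set of coefficients gives back the original row exactly, but the truncated version dips below 0 and rises above 255 on either side of the edge. In a 2D picture, those ripples are the shimmer that clusters around high-contrast edges.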

The math behind upscaling

In the face of these issues, TV engineers taught their TVs to analyze and digitally process the images in real time to fill in or repair missing or damaged pixels. And they accomplished this using mathematical functions, which you can tell your loved ones the next time they say that too much TV rots your brain.

Specifically, engineers taught the TV processor to interpolate what each missing pixel’s color value should be, based on its surrounding pixels. To do so, it had to define a kernel: the function that decides how much weight each of a pixel’s neighbors gets, based on its distance.
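To make the idea of a kernel concrete, here is a Python sketch of three textbook kernels, each a function of the distance between the new pixel and a source pixel: a box kernel (nearest neighbor), a triangle kernel (linear interpolation) and the Keys cubic kernel with a = -0.5, a common choice for bicubic. We don't know which exact kernels any given TV uses; these are standard examples.

```python
def box(d):
    """Nearest-neighbour kernel: full weight to the pixel within half a pixel, zero elsewhere."""
    return 1.0 if -0.5 <= d < 0.5 else 0.0

def triangle(d):
    """Linear kernel: weight falls off linearly, reaching zero one pixel away."""
    return max(0.0, 1.0 - abs(d))

def keys_cubic(d, a=-0.5):
    """Keys cubic convolution kernel (a = -0.5 is a common 'bicubic' setting)."""
    d = abs(d)
    if d < 1:
        return (a + 2) * d**3 - (a + 3) * d**2 + 1
    if d < 2:
        return a * d**3 - 5 * a * d**2 + 8 * a * d - 4 * a
    return 0.0

# A new pixel lands 0.25 of the way from source pixel 0 toward source pixel 1.
x = 0.25
neighbours = [-1, 0, 1, 2]   # the four nearest source pixels along one axis
for kernel in (box, triangle, keys_cubic):
    weights = [kernel(x - p) for p in neighbours]
    print(kernel.__name__, [round(w, 4) for w in weights], "sum =", round(sum(weights), 4))
```

All three weight sets sum to 1, so a flat area stays flat. The cubic kernel also gives small negative weights to the outer pixels; those negative lobes are what let bicubic keep edges crisp, and also what can produce halos.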

The most basic kernel used in TVs is nearest-neighbor interpolation, which simply finds the original pixel closest to each vacant pixel and copies its color data into the empty pixel. This method gives the picture a blocky, zig-zag pattern, or aliasing, with poor edges. Picture a black letter “A” on a white screen: a missing pixel just outside the letter may be filled in as black, while a pixel on the edge of the letter could display as white. Because nearest-neighbor can only copy existing colors, never blend them, the letter’s diagonal strokes turn into a jagged staircase of black and white.
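Nearest-neighbor gets worse when the scale factor isn't a whole number. The Python sketch below (our own illustration) stretches one 720-pixel SD row to 1,920 pixels, a 2.67× stretch, and counts how many output pixels each source pixel gets copied into:

```python
def nearest_neighbour_row(row, out_len):
    """Resample one row by copying the nearest source pixel (centre-aligned sampling)."""
    scale = len(row) / out_len
    out = []
    for i in range(out_len):
        src = int((i + 0.5) * scale)   # source pixel under this output pixel's centre
        out.append(row[min(src, len(row) - 1)])
    return out

# SD rows are 720 pixels wide; Full HD rows are 1920, a non-integer 2.67x stretch.
sd_row = list(range(720))              # label each source pixel by its index
hd_row = nearest_neighbour_row(sd_row, 1920)

# Count how many output pixels each source pixel was copied into.
widths = [hd_row.count(i) for i in range(720)]
print(sorted(set(widths)))             # [2, 3]
```

Because 2.67 isn't a whole number, some source pixels end up 2 pixels wide and others 3, so evenly spaced detail becomes unevenly spaced, which shows up as irregular, jagged diagonals.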

Bilinear interpolation requires more processing power but is more effective. This method blends the four nearest pixels (a 2 × 2 neighborhood), weighting each by its distance from the blank pixel, so colors form a smooth linear gradient between them. That gets rid of most of the blockiness, but it also softens edges. So other TVs use bicubic interpolation, which pulls from the 16 nearest pixels (a 4 × 4 neighborhood) in all directions. This method generally gets closer to the correct color and keeps edges crisper, but it can overshoot around high-contrast edges, giving them a distracting halo effect.
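The trade-off between the two methods shows up clearly on a hard edge. This Python sketch is a 1D slice of each method (in 2D, both are typically applied along rows and then along columns); it upsamples a black-to-white edge 4× with linear interpolation and with Keys cubic convolution, using a = -0.5 as our assumed parameter:

```python
def keys_cubic(d, a=-0.5):
    """Keys cubic convolution kernel; a = -0.5 is a common 'bicubic' setting."""
    d = abs(d)
    if d < 1:
        return (a + 2) * d**3 - (a + 3) * d**2 + 1
    if d < 2:
        return a * d**3 - 5 * a * d**2 + 8 * a * d - 4 * a
    return 0.0

def linear_sample(row, x):
    """Linear interpolation between the 2 nearest source pixels (1D slice of bilinear)."""
    i = int(x)
    t = x - i
    right = row[min(i + 1, len(row) - 1)]
    return (1 - t) * row[i] + t * right

def cubic_sample(row, x):
    """Cubic convolution over the 4 nearest source pixels (1D slice of bicubic)."""
    i = int(x)
    total = 0.0
    for p in range(i - 1, i + 3):
        clamped = min(max(p, 0), len(row) - 1)   # repeat edge pixels past the borders
        total += row[clamped] * keys_cubic(x - p)
    return total

edge = [0, 0, 0, 255, 255, 255]                                # a hard black-to-white edge
positions = [j / 4 for j in range(4 * (len(edge) - 1) + 1)]    # 4x upsampling
linear = [linear_sample(edge, x) for x in positions]
cubic = [cubic_sample(edge, x) for x in positions]
print("linear range:", min(linear), "to", max(linear))
print("cubic range:", round(min(cubic), 1), "to", round(max(cubic), 1))
```

Linear interpolation never leaves the 0 to 255 range, so it can only soften the edge. The cubic version undershoots below 0 on the dark side and overshoots above 255 on the bright side; on screen, those dark and bright fringes are the halo.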

You can likely guess the problem already: these TVs fill in pixels using fixed mathematical formulas that produce acceptable results on average, but they have no way to interpret what the content on screen is actually supposed to look like.

So, after walking us through how these algorithms consistently come up short, Samsung’s team explained how its AI overcomes those shortcomings.

Samsung’s secret: Machine learning, object recognition and filters

Samsung’s secret weapon is a technique called machine learning super resolution (MLSR). This AI-driven system takes a lower-resolution video stream and upscales it to fit the resolution of a larger screen with a higher PPI. It’s the equivalent of the old, ridiculous TV trope of computer scientists zooming in and “enhancing” a blurry image with a single keystroke, except done automatically and nearly instantaneously.

Samsung’s reps explained how they analyzed a vast amount of video content from different sources (high- and low-quality YouTube streams, DVDs and Blu-rays, movies and sporting events) and created two image databases, one for low-quality screen captures and one for high-quality screen captures.

Then Samsung had to train its AI to perform what the AI industry calls “inverse degradation”. First, you take high-resolution images and downgrade them to lower resolutions, tracking what visual data is lost. Then you reverse the process, training your AI to fill in the missing data in the low-resolution images so that they match the high-resolution originals.
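A toy version of inverse degradation fits in a few dozen lines of Python. This is not Samsung's model, which the company hasn't detailed; it's a minimal sketch under our own assumptions: 1D random-walk "images", a degradation that averages each pair of pixels (2× downscaling), and a 3-tap linear filter learned by least squares in place of a neural network.

```python
import random

def degrade(high):
    """Assumed degradation: 2x downscale by averaging each pair of samples."""
    return [(high[i] + high[i + 1]) / 2 for i in range(0, len(high) - 1, 2)]

def make_pairs(signals):
    """Training pairs: 3 neighbouring low-res samples -> the high-res sample they hide."""
    X, y = [], []
    for high in signals:
        low = degrade(high)
        for m in range(1, len(low) - 1):
            X.append([low[m - 1], low[m], low[m + 1]])
            y.append(high[2 * m])            # left-hand sample of the averaged pair
    return X, y

def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    w = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        w[r] = (M[r][3] - sum(M[r][c] * w[c] for c in range(r + 1, 3))) / M[r][r]
    return w

def fit_filter(X, y):
    """Least-squares 3-tap filter via the normal equations (X^T X) w = X^T y."""
    xtx = [[sum(x[i] * x[j] for x in X) for j in range(3)] for i in range(3)]
    xty = [sum(x[i] * t for x, t in zip(X, y)) for i in range(3)]
    return solve3(xtx, xty)

def mse(w, X, y):
    """Mean squared error of filter w on the pairs (X, y)."""
    return sum((sum(wi * xi for wi, xi in zip(w, x)) - t) ** 2 for x, t in zip(X, y)) / len(y)

def random_walk(n, rng):
    """Stand-in for a row of a natural image: neighbouring values are correlated."""
    value, row = 128.0, []
    for _ in range(n):
        value += rng.gauss(0, 8)
        row.append(value)
    return row

rng = random.Random(0)
X, y = make_pairs([random_walk(64, rng) for _ in range(200)])
learned = fit_filter(X, y)
linear = [0.25, 0.75, 0.0]   # plain linear interpolation, for comparison
print("learned taps:", [round(w, 3) for w in learned])
print("learned MSE:", round(mse(learned, X, y), 2), " linear MSE:", round(mse(linear, X, y), 2))
```

Because least squares picks the best 3-tap filter for the training pairs, its error on them is never worse than plain linear interpolation's. Learning-based upscalers typically replace the tiny linear filter with a far larger model such as a neural network, but the recipe (degrade, then learn to undo the degradation) is the same.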

Samsung’s team calls the result of this training a “formula”. Its 8K processors contain a formula bank, a database of formulas for different objects, such as an apple or the letter “A”. When the processor recognizes a blurry apple in an actor’s hand, it restores the apple’s edges, repairs any compression artifacts, and ensures that blank pixels take on the right shade of red based on how apples actually look, not on generic interpolation formulas. And beyond restoring specific objects, the AI adjusts your stream based on whatever you’re watching.

According to Samsung, it has dozens of different “filters” that change how much detail creation, noise reduction and edge restoration is needed for a given stream, based on whether you’re watching a specific sport, genre of movie or type of cinematography.
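Samsung hasn't published how its formula bank and filters are organized, so the following is purely a hypothetical Python sketch of the idea: a lookup of per-content filter strengths, plus a formula lookup for recognized objects that falls back to generic processing when recognition is uncertain. Every name, label and number in it is invented for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FilterProfile:
    detail: float            # how much detail creation to apply (0 = none)
    noise_reduction: float   # how aggressively to smooth noise
    edge_restoration: float  # how strongly to rebuild edges

# Hypothetical presets keyed by content type; the values are illustrative only.
PROFILES = {
    "sports": FilterProfile(detail=0.6, noise_reduction=0.3, edge_restoration=0.8),
    "animation": FilterProfile(detail=0.2, noise_reduction=0.5, edge_restoration=1.0),
    "grainy_film": FilterProfile(detail=0.4, noise_reduction=0.1, edge_restoration=0.5),
}
DEFAULT = FilterProfile(detail=0.3, noise_reduction=0.3, edge_restoration=0.5)

# Hypothetical "formula bank": recognised object label -> restoration routine name.
FORMULA_BANK = {"apple": "restore_apple", "letter_A": "restore_glyph_A"}

def choose_processing(content_type, detections, min_confidence=0.8):
    """Pick a filter profile for the stream and a formula for each detected object."""
    profile = PROFILES.get(content_type, DEFAULT)
    formulas = []
    for label, confidence in detections:
        if confidence >= min_confidence and label in FORMULA_BANK:
            formulas.append(FORMULA_BANK[label])
        else:
            formulas.append("generic_upscale")   # unknown or uncertain: no object-specific changes
    return profile, formulas

profile, formulas = choose_processing("sports", [("apple", 0.95), ("tomato", 0.55)])
print(profile, formulas)
```

The conservative fallback is one plausible way a system could avoid miscategorizing objects, but it is our assumption, not something Samsung described.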

Upscaling in action

The TV on the left is a Samsung 4K TV with no AI upscaling; the TV on the right is an 8K model with AI upscaling. On the left, you can spot blocky greens and poor transitions from light to dark areas around the actor.

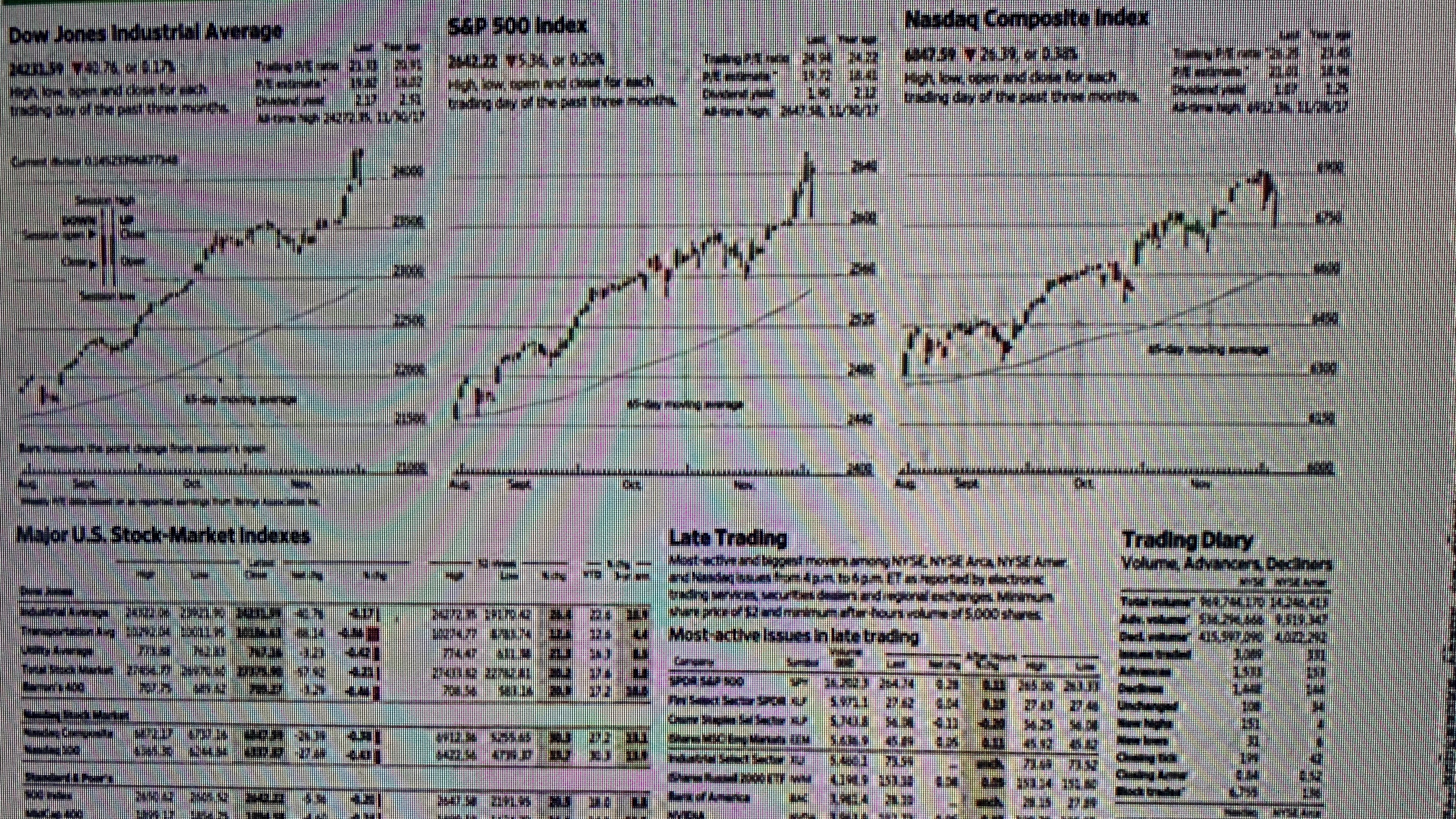

Stock info on a 4K screen.

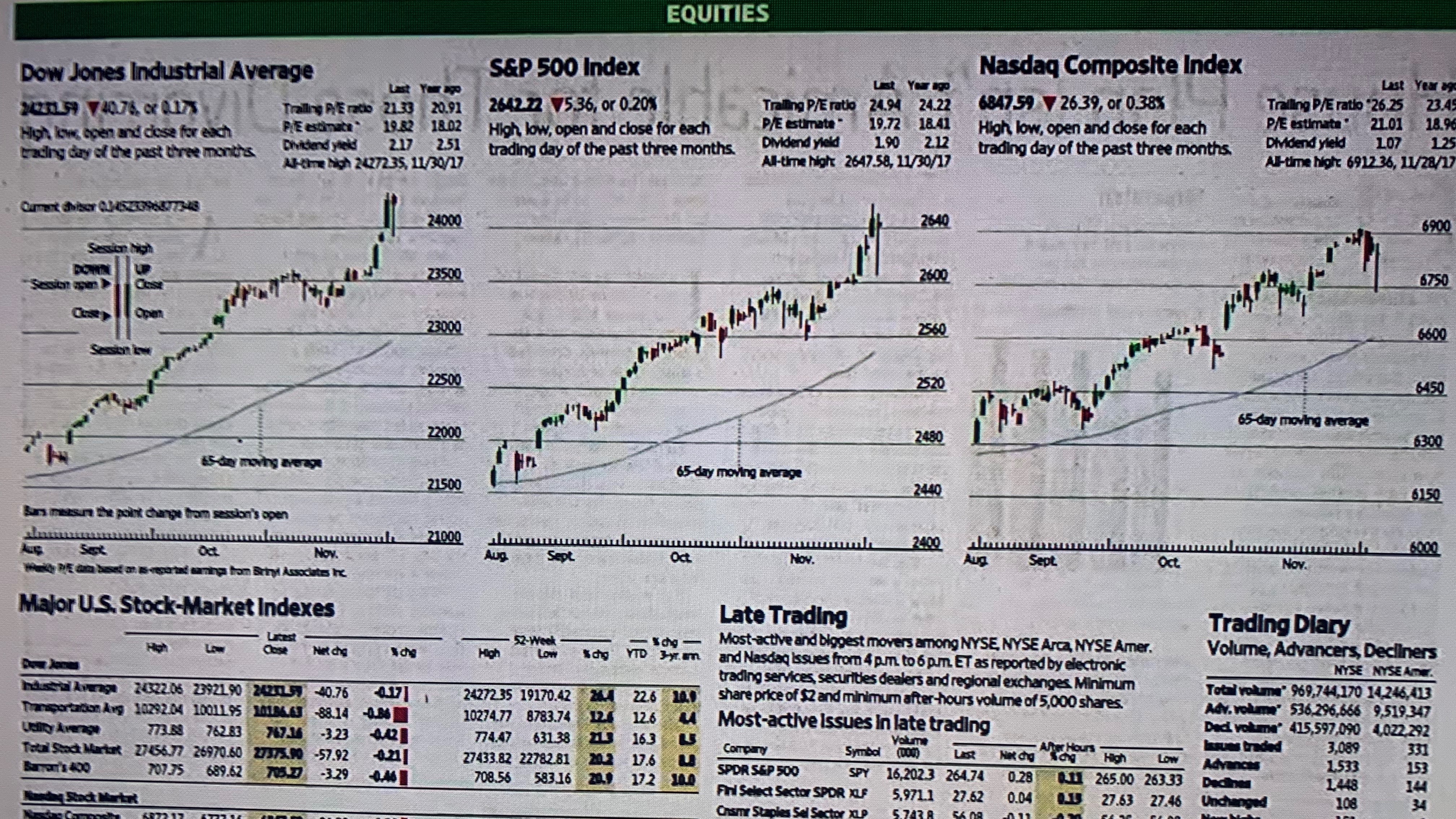

Compare this screen to the previous one. Larger headers are readable on both, but only this 8K screen makes the smaller text readable. That’s partly due to better brightness, but also due to better edge restoration.

Another 8K-restored screen of text

Compared to the previous screen, any non-headline text is much harder to read here.

The edge restoration shown in the slideshow above (an insane amount of text to restore in real time) isn’t even the most difficult task for the AI, according to Samsung's engineers. Instead, replicating the proper textures of objects in real time remains the bigger challenge: the processor must enhance objects without making them look artificial.

What the processor won’t do (according to Samsung) is miscategorize an object. “It won’t turn an apple into a tomato”, one engineer assured us, though without explaining how. Most likely, the processor is trained to avoid any drastic alterations when it doesn’t recognize an object.

You also won’t see the AI alter the “directorial intent” of a movie, as Samsung’s team put it. So if a director uses the bokeh effect, the blurred background will stay blurred, while the foreground gets dialed up to 8K crispness.
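Samsung didn't say how its processor preserves bokeh. One simple way such behavior could work, shown here as a hypothetical Python sketch rather than Samsung's method, is to gate sharpening on local contrast: an unsharp mask is applied only where neighboring pixels differ by at least a threshold, so smooth, out-of-focus gradients pass through untouched.

```python
def box_blur(row):
    """3-tap moving average, repeating the edge pixels at the borders."""
    n = len(row)
    return [(row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) / 3 for i in range(n)]

def gated_sharpen(row, amount=1.0, threshold=20):
    """Unsharp mask applied only where local contrast suggests in-focus detail."""
    blurred = box_blur(row)
    out = []
    for i, value in enumerate(row):
        window = row[max(i - 1, 0):i + 2]
        if max(window) - min(window) >= threshold:
            out.append(value + amount * (value - blurred[i]))   # boost the edge
        else:
            out.append(value)                                   # smooth area: leave the blur alone
    return out

bokeh = [100, 102, 104, 106, 108, 110]     # gentle, out-of-focus gradient
subject = [100, 100, 100, 180, 180, 180]   # crisp, in-focus edge
print(gated_sharpen(bokeh))
print([round(v, 1) for v in gated_sharpen(subject)])
```

A real system would need something smarter, since a flat, in-focus wall also has low local contrast; the threshold of 20 here is an arbitrary assumption.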

They also claimed they don’t specifically analyze more popular streams for their object categorization, aiming instead for general quantity and diversity of content. So there’s no word on whether they have a “dragon” or “direwolf” formula for your Game of Thrones binge-watches.

New Samsung 8K (and 4K) TVs ship with the most recent formula bank installed, and new object data is then added via firmware updates that you must approve. Samsung says it will continue to analyze new video streams to expand its object library, but that it does this on its own servers; it doesn’t analyze data from people’s TVs.

Just how many object formulas has Samsung accumulated from its endless stream analysis? One of its engineers gave an off-the-cuff figure that sounded impressively large, suggesting the processor can recognize a huge number of objects on screen. But a PR rep cut in and asked that we not print the number, saying they’d rather consumers focus on how well Samsung’s MLSR works than on arbitrary numbers.

AI upscaling: the new normal?

Samsung isn’t the only TV manufacturer that currently uses artificial intelligence and image restoration for its TVs.

Sony’s 4K ad page goes into obsessive detail about its AI image processing solutions. Its new 4K TVs contain processors with a “dual database” of “tens of thousands” of image references that “dynamically improv[e] pixels in real time”.

LG also announced ahead of CES 2019 that its new α9 Gen 2 TV chip would use image processing and machine learning to improve noise reduction and brightness, in part by analyzing the source and type of media and adjusting its algorithm accordingly.

Beyond the AI elements, however, it seems these TV processors still depend partly on conventional algorithms. When we previously interviewed Gavin McCarron, Technical Marketing & Product Planning Manager at Sony Europe, about the AI image processing in Sony TVs, he had this to say:

"When you're upscaling from Full HD to 4K there is a lot of guesswork, and what we're trying to do is to remove as much of the guesswork as possible. [Our processor] doesn't just look at the pixel in isolation, it looks at the pixels around it, and on each diagonal, and also it will look up the pixels across multiple frames, to give a consistency in the picture quality.”
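McCarron's point about looking across multiple frames can be illustrated with the simplest temporal technique: a recursive filter that blends each new frame with the previous result. This Python sketch is our own illustration, not Sony's processor; `alpha`, the noise level and the static three-pixel scene are all assumed values.

```python
import random

def temporal_filter(frames, alpha=0.25):
    """Recursive (exponential moving average) filter across frames, pixel by pixel.

    Each output frame blends the new frame with the previous output, so random
    frame-to-frame flicker is damped while a static picture stays put.
    """
    out, prev = [], None
    for frame in frames:
        if prev is None:
            prev = list(frame)
        else:
            prev = [alpha * new + (1 - alpha) * old for new, old in zip(frame, prev)]
        out.append(prev)
    return out

rng = random.Random(1)
true_pixels = [50.0, 120.0, 200.0]    # a static scene, three pixels
noisy = [[p + rng.gauss(0, 10) for p in true_pixels] for _ in range(300)]
smoothed = temporal_filter(noisy)

def mean_abs_error(frames):
    """Average distance from the true scene, skipping frames while the filter settles."""
    errs = [abs(v - t) for f in frames[50:] for v, t in zip(f, true_pixels)]
    return sum(errs) / len(errs)

print(round(mean_abs_error(noisy), 2), round(mean_abs_error(smoothed), 2))
```

On moving content, a filter this naive would smear objects into ghostly trails, which is why real video processors estimate motion before combining frames.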

Sony, LG and Samsung very likely all use some form of bilinear or bicubic algorithm as their baseline upscaling system. Their processors then analyze the upscaled image and determine which pixels should be enhanced with image processing and which should be removed as noise.

In that sense, most TV manufacturers are relatively close to one another in the AI upscaling race. The exception is Samsung, which uses similar techniques but must fill a screen with four times as many pixels as 4K. We’ll have to wait and see whether other manufacturers’ AI efforts will let them leap into the 8K market as well.


Michael Hicks began his freelance writing career with TechRadar in 2016, covering emerging tech like VR and self-driving cars. Nowadays, he works as a staff editor for Android Central, but still writes occasional TR reviews, how-tos and explainers on phones, tablets, smart home devices, and other tech.