This technology could make Alexa and Google Assistant better listeners

Sounds good to us

Speech recognition tech is playing an increasingly important role in our lives, whether we’re asking our Amazon Echo to play our favorite Spotify playlist or getting a run-down of the news from the Google Assistant built into our smartphones.

Although speech recognition technology has existed in some form since the 1950s, it’s only in the last few years that it has found a practical application in the form of voice assistants built into smartphones, speakers and more.

The latest wave of speech recognition innovation has come about thanks to the leaps and bounds made in artificial intelligence in recent years, with tech giants like Google, Amazon, and Apple touting their use of neural networks in the development of their voice assistants.

Machine learning

What sets voice assistants like Amazon Alexa, Apple’s Siri, and Google Assistant apart from early iterations of speech recognition technology is the fact that they are constantly learning, picking up your speech patterns, vocabulary, and syntax with every interaction.

Most voice assistants these days also have voice recognition, which makes it possible for them to distinguish between different users – and with the might of these huge tech companies and search engines behind them, voice assistants are getting better all the time.

However, while the technology has undeniably improved since it first made its way onto consumer devices, limitations in artificial intelligence and machine learning mean that voice assistants are still relatively crude in terms of the sounds they can interpret and respond to.

Sounds plausible

This could be partly due to the emphasis on speech recognition over sound recognition; after all, much of the sonic information our brains take in every day comes from nonverbal sounds, like the honking of car horns or a dog barking.


Although the origin of language is thought to predate modern humans, potentially stretching as far back as Homo ergaster (1.5–1.9 million years ago), our ancestors were able to identify and process sound long before that.

While verbal communication plays a large role in our rational understanding of the world around us, it’s nonverbal sound that often elicits an emotional, evolutionarily ingrained response – we know with very little context that a growling animal is warning us to back off, while a crying baby needs attention. A loud bang makes us feel frightened, and we flinch, bringing our hands up to protect our heads.

Not only that, but nonverbal sound plays a huge role in the way we communicate with each other; for example, a parent might respond to a crying baby with soothing cooing noises, just as we might shout at a growling animal to scare it away.

So, if nonverbal sound is so important to our understanding of the world and the way we communicate, why are voice-activated assistants so hung up on language?

Audio Analytic’s mission

One British business thinks the time has come for our connected devices to learn about the art of listening to pure sound – Audio Analytic is a Cambridge-based sound recognition company that is dedicated to improving smart technology in the home.

Led by CEO and founder Dr Chris Mitchell, Audio Analytic’s research into sound recognition and AI means that voice assistants like Amazon Alexa could soon be given an important extra layer of auditory information: context.

After completing a PhD focused on teaching computers to recognize musical genres, Mitchell realized that no companies were working primarily in sound recognition. So he started with a list of all the sounds he could think of and their characteristics, and with that, set up Audio Analytic.

Although Audio Analytic started out in corporate security, Mitchell told us that “the company found a market in the consumer electronic space” as connected devices became more commonplace in the average household.

With so many connected microphones entering our homes through smart speakers like the Amazon Echo, Google Home, and Apple HomePod, a “world of possibilities” suddenly opened up for the company – with a particular focus on smart home security.

How does it make my home safer?

So, how can sound recognition technology improve smart security devices? Well, say a burglar tries to break into your house, smashing a window in the process. If your smart speaker can interpret sound and correctly identify the distinctive acoustic signature of breaking glass (its characteristic amplitude and frequency profile), it can send you a notification and signal other connected devices in the home.

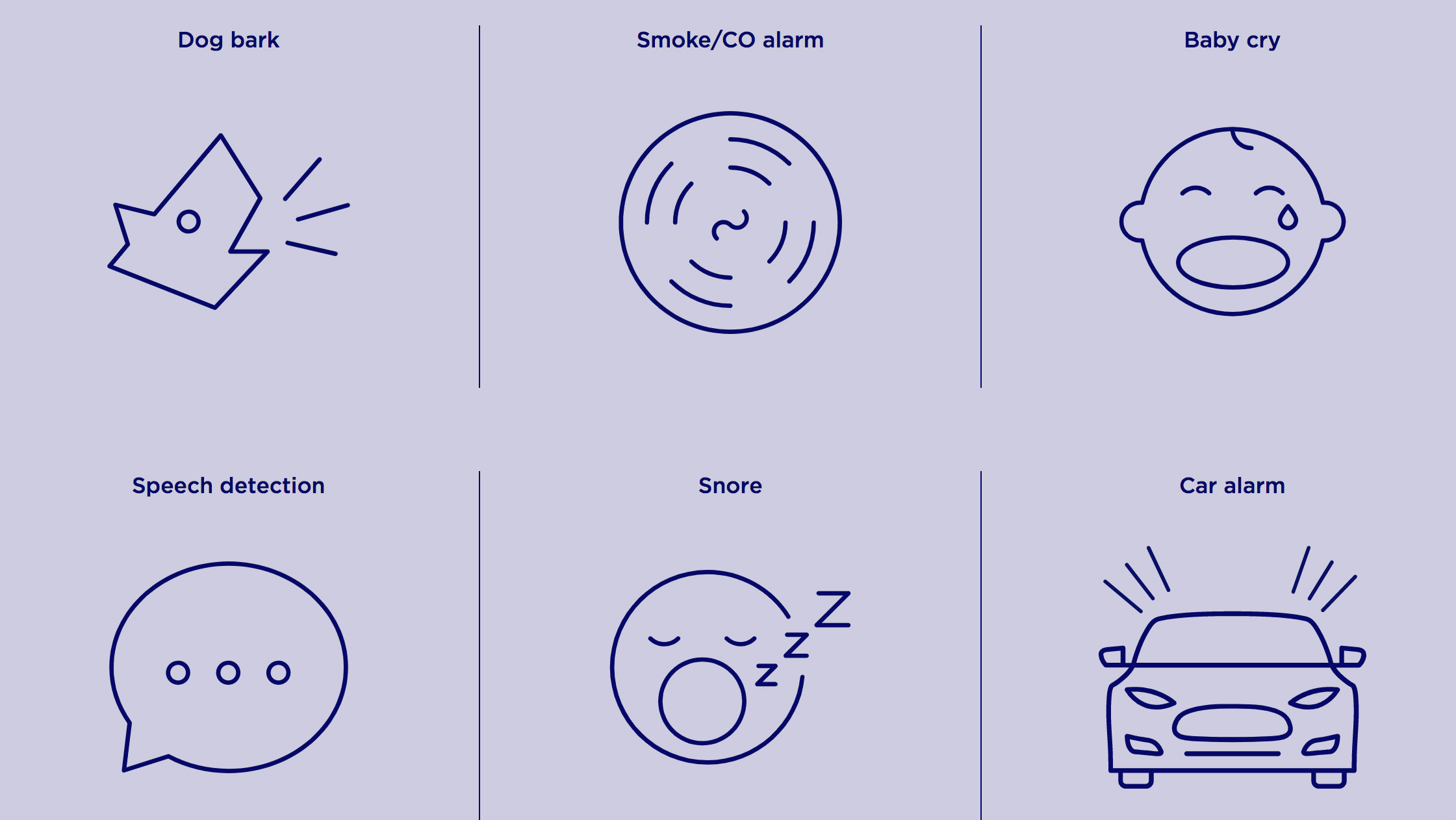

This works particularly well with smart security devices like the Hive Hub 360, which has Audio Analytic’s sound recognition technology built in. This means it can recognize sounds ranging from your dog barking to your windows breaking, and can then activate other Hive devices in response.

So, if a window breaks in your home, you can set your Hive Lights to switch on automatically and scare off a potential intruder. The really clever thing about this technology is that it doesn’t notify you about every sound in your home, only the ones it deems important, thanks to Audio Analytic sorting different sounds, or ‘ideophones’, into huge sonic libraries.
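To make the "only the sounds that matter" idea a little more concrete, here is a minimal, hypothetical sketch of how a smart home hub might route sound detections to actions. Everything in it (the sound labels, the `IMPORTANT_SOUNDS` and `ACTIONS` tables, the `Detection` type, the `route` function and its 0.8 confidence threshold) is an illustrative assumption, not Audio Analytic’s or Hive’s actual software. It simply assumes some recognizer has already labeled a sound and given it a confidence score.

```python
from dataclasses import dataclass

# Hypothetical sound classes the user has marked as worth acting on.
IMPORTANT_SOUNDS = {"glass_break", "smoke_alarm", "dog_bark"}

# Hypothetical mapping from a sound class to actions for other devices.
ACTIONS = {
    "glass_break": ["notify_phone", "lights_on"],
    "smoke_alarm": ["notify_phone"],
    "dog_bark": ["notify_phone"],
}


@dataclass
class Detection:
    label: str          # sound class reported by an (assumed) recognizer
    confidence: float   # recognizer's score between 0 and 1


def route(detections, threshold=0.8):
    """Return the actions to trigger, ignoring unimportant or low-confidence sounds."""
    actions = []
    for d in detections:
        if d.label in IMPORTANT_SOUNDS and d.confidence >= threshold:
            for action in ACTIONS[d.label]:
                if action not in actions:  # avoid firing the same action twice
                    actions.append(action)
    return actions


# A confident glass-break triggers the phone alert and the lights;
# the kettle is heard but ignored because it isn't on the important list.
print(route([Detection("glass_break", 0.93), Detection("kettle", 0.99)]))
```

The filtering step is what stops your phone buzzing every time the kettle boils: a sound has to be both on the list you care about and recognized with enough confidence before anything happens.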

What about AI assistants?

Aside from home security, the other result of improved sound recognition technology is smarter, more empathetic voice assistants, as Mitchell explains: “If I could give a voice assistant a sense of awareness, well-being, and all the other things I know come from sound, then their personalities can be extended, as well as their responsiveness and their usefulness.”

Think back to the crying baby, and imagine you have Audio Analytic’s sonic library built into the Amazon Echo Dot in your child’s room. It’s 1am and you’re tucked up in bed when you get a notification on your smartphone telling you the Echo Dot has detected the sound of a baby crying.

Alexa then turns on the lights in your hallway so you can find your way in the dark, while the Echo Dot plays soothing music in the baby’s room. Maybe Alexa even talks to the baby, reassuring her that you’re on your way, or perhaps it reads her a bedtime story, calming your child until you get there and rock her back to sleep.

Whether you find this sweet or dystopian in the extreme largely depends on your feelings towards AI technology, but sound recognition clearly has the potential to make voice assistants like Alexa more understanding, more human, and considerably more intelligent.

Looking to the future

You can take the crying child analogy even further when you consider the connection between different sounds. Although Audio Analytic’s focus so far has been on individual sounds, Mitchell believes the future of the company lies in identifying and contextualising multiple sounds together.

“Imagine the baby’s crying, and she’s been coughing a lot, and sneezing a lot… you suddenly start to build up a much richer picture… so, the combinations of all of these sound effects and the context [they] paint could enable some really helpful features,” he says.

If a voice assistant can identify the sounds of crying, coughing, and sneezing, it’s not a huge leap to suggest that it might one day be able to link those sounds together and deduce a possible cause – in this case, the voice assistant might suppose the baby is unwell with a cold, and could suggest remedies, bring up the number for the doctor, or order you some cough medicine.
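As a rough illustration of what "linking sounds together" could mean, the hypothetical sketch below looks at which sounds were heard within a recent time window and turns a particular combination into a guess about context. The 30-minute window, the `COLD_SYMPTOMS` set, the `infer_context` function and its return strings are all assumptions made up for this example; it is a simple hand-written rule, not how Audio Analytic or any voice assistant actually reasons.

```python
from datetime import datetime, timedelta

# Hypothetical rule: these sound classes, heard together, hint at a cold.
COLD_SYMPTOMS = {"crying", "coughing", "sneezing"}


def infer_context(events, now, window=timedelta(minutes=30)):
    """Guess a context from (timestamp, label) sound events heard recently."""
    # Keep only sounds heard within the window leading up to `now`.
    recent = {label for ts, label in events if timedelta(0) <= now - ts <= window}
    if COLD_SYMPTOMS <= recent:  # every symptom sound was heard recently
        return "possibly unwell"
    if "crying" in recent:
        return "needs attention"
    return None


now = datetime(2024, 1, 1, 1, 0)  # 1am, as in the example above
events = [
    (now - timedelta(minutes=5), "crying"),
    (now - timedelta(minutes=10), "coughing"),
    (now - timedelta(minutes=20), "sneezing"),
]
print(infer_context(events, now))
```

Crying on its own only says the baby needs attention; it’s the combination of sounds, heard close together, that paints the richer picture Mitchell describes.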

This kind of reasoning comes naturally to human beings, but it’s still early days for artificially intelligent entities. However, provide AI assistants with the right tools (i.e., expansive sonic libraries), and there’s no reason why this couldn’t be possible in the future.

The problem with AI

Of course, the idea of a voice assistant making diagnoses will trigger alarm bells for many people – after all, artificial intelligence is nowhere near a match for the human brain in terms of reasoning and emotional intelligence.

Artificial intelligence can’t rival millions of years of evolution and social conditioning, and implicit bias in training data and algorithmic models means that voice assistants can pick up racial, gender, and ideological prejudices, making it difficult for us to trust them completely.

Still, voice assistants powered by machine learning are improving every day, and it may not be long before we see Alexa become a little more human, particularly if its algorithms are better trained to interpret sonic as well as linguistic data.

And if that means no more tripping over toys in the dark, then count us in.

Olivia was previously TechRadar's Senior Editor - Home Entertainment, covering everything from headphones to TVs. Based in London, she's a popular music graduate who worked in the music industry before finding her calling in journalism. She's previously been interviewed on BBC Radio 5 Live on the subject of multi-room audio, chaired panel discussions on diversity in music festival lineups, and her bylines include T3, Stereoboard, What to Watch, Top Ten Reviews, Creative Bloq, and Croco Magazine. Olivia now has a career in PR.