How to test anti-ransomware: This is how we do it

Which anti-ransomware tech is best? Here's how we find out

Ransomware may not make the headlines quite as often as it did in the past, but it hasn’t gone away. In December 2018, for instance, a new threat apparently created by a single hacker managed to infect at least 100,000 computers in China, encrypting files, stealing passwords and generally trashing users’ systems.

Antivirus companies like to claim they'll keep you safe, with vague but impressive-sounding talk about 'multi-layered protection', 'sophisticated behavior monitoring' and the new big thing: 'machine learning'. But do they really deliver?

The easiest way to get an idea is to check the latest reports from the independent testing labs. AV-Comparatives' Real-World Protection Tests and AV-Test's reports, for instance, are an invaluable way to compare the accuracy and reliability of the top antivirus engines.

The problem is that the test reports only give you a very general indicator of performance with malware as a whole. They won't tell you how an engine performs specifically with ransomware, how quickly it can respond, how many files you might lose before a threat is stopped, and other nuances. That's exactly the sort of information we really want to know, and that's why we've devised our own anti-ransomware test.

Ransomware simulator

It's possible to test anti-ransomware software by pitting it against known real-world threats, but the results often aren't very useful. Typically, the antivirus will detect the threat by its file signature, so it never reaches any specialist anti-ransomware layer.

What we decided to do, instead, was write our own custom ransomware simulator. This would act very much like regular ransomware, spidering through a folder tree, detecting common user files and documents and encrypting them. But because we had developed it, we could be sure that any given antivirus package wouldn't be able to detect our simulator from the file alone. We would be testing its behavior monitoring only.

There are weaknesses with this concept. Most obviously, using our own simple, unsophisticated code would never provide as effective or reliable an indicator as using real undiscovered ransomware samples for each review.

But there are plus points, too. Using different real-world ransomware for one-off reviews means some anti-ransomware packages might face very simple and basic threats, while others get truly dangerous and stealthy examples, depending on what we could find at review time. Running our own simulator means every anti-ransomware engine is measured against the same code, giving every package a fair and equal chance of success.

What we look for

Our test procedure is simple. Once we've set up the test environment (copying the user documents to their various folders), we check the anti-ransomware package is working, minimize it, launch the simulator, and wait.
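As a rough illustration of the setup step, the sketch below copies a corpus of sample documents into a fresh test folder and records a SHA-256 hash for every file, so there's a baseline to compare against later. It isn't our actual tooling: the function names `sha256_of` and `stage_test_files`, and the idea of keeping a hash manifest, are assumptions made for the example.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def stage_test_files(corpus: Path, target: Path) -> dict[str, str]:
    """Copy the corpus to a new target folder and return {relative path: hash}.

    Hypothetical helper: copytree raises FileExistsError if the target
    already exists, so every run starts from a clean folder.
    """
    shutil.copytree(corpus, target)
    return {
        path.relative_to(target).as_posix(): sha256_of(path)
        for path in sorted(target.rglob("*"))
        if path.is_file()
    }
```

Calling `stage_test_files(Path("corpus"), Path("testbed"))` (both folder names are placeholders) would give a manifest that can be saved before the anti-ransomware package is put to work.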

That's where it begins to get interesting, because this isn't just a pass/fail situation. These are the issues we consider when weighing up how successful an anti-ransomware package has been.

The first and most fundamental step is that the ransomware simulator must have its process killed, limiting the number of files that will be damaged.

Detection must happen quickly, because the longer the delay, the more files will be lost. We count the number of encrypted files to assess effectiveness.

The best anti-ransomware packages will recover at least some, and usually all, of the damaged files, ensuring you don't lose any data at all. If this happens, we compare the recovered files with the originals to confirm they're fully restored.

The ransomware simulator should have its executable deleted, quarantined, or otherwise locked away from user access. (Sounds obvious, but not every package does this.)

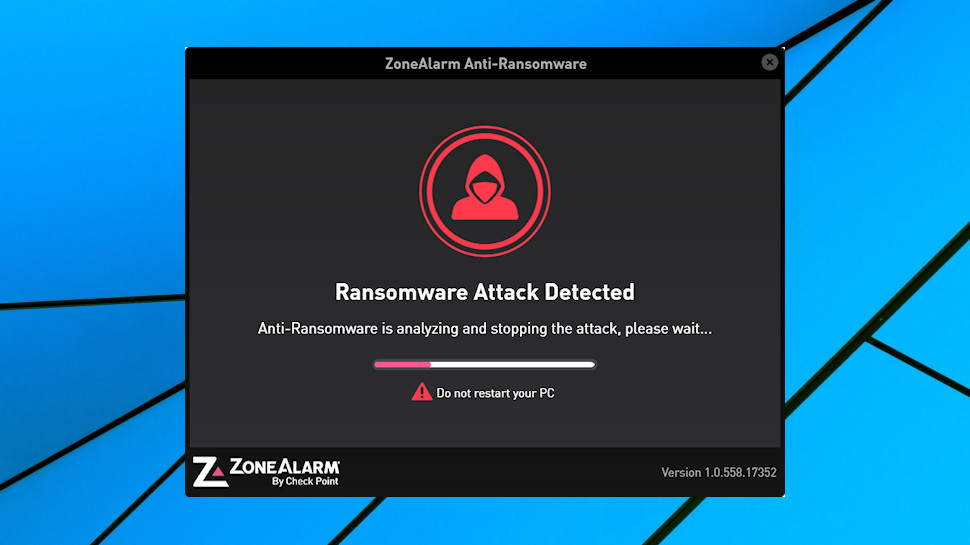

The user should ideally be informed that a threat has been detected and dealt with, allowing them to inspect the damage.

Finally, an anti-ransomware product can earn bonus points for any extra clean-up steps it takes (deleting ransom notes, say), and any further help it can give the user, such as offering to run a deep antivirus scan to find any associated dangers.

Our ransomware simulator may appear to be a simple test, then, but by revealing how individual packages react, it tells us a great deal about their effectiveness, and how useful they're likely to be.
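The file-counting and file-comparison checks described above could be sketched along these lines. This is a minimal example rather than our real test harness: it assumes a baseline of SHA-256 hashes was recorded before the test, and `assess_damage` and its three result buckets are hypothetical names chosen for the illustration.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def assess_damage(root: Path, baseline: dict[str, str]) -> dict[str, list[str]]:
    """Sort every baseline file into intact, damaged or missing.

    baseline maps relative paths to the hashes recorded before the test.
    'missing' also catches files that were renamed or given a new extension.
    """
    result: dict[str, list[str]] = {"intact": [], "damaged": [], "missing": []}
    for relative, expected in baseline.items():
        path = root / relative
        if not path.is_file():
            result["missing"].append(relative)
        elif sha256_of(path) == expected:
            result["intact"].append(relative)
        else:
            result["damaged"].append(relative)
    return result
```

Run once after the simulator has been stopped, the combined length of the `damaged` and `missing` lists gives the number of files lost. Run again after any recovery step, a result where every file is `intact` would confirm the originals were fully restored.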

Interpreting the test results

Although many anti-ransomware packages successfully block our simulator, many don't. A test fail can seem like a disaster, but it needs to be interpreted with care.

If a package can't detect our simulator, for instance, that doesn't necessarily mean it won't block undiscovered real-world ransomware. AV-Comparatives, AV-Test and other labs regularly show that most vendors can detect the huge majority of undiscovered threats from their behavior alone. The packages we are testing are proven to work very well, and our simple test doesn't change that.

It's worth keeping in mind that anti-ransomware (and all antivirus software) is forever walking a fine line between blocking all genuine threats and never touching legitimate software. There are archiving and security applications which might work their way through a folder tree, processing and apparently encrypting files, and it's possible a 'failed' anti-ransomware package has recognized our simulator, weighed up many factors and decided it isn't a threat.

For example, the anti-ransomware software might look for files which have been downloaded recently, have a recent date, are packed executables (compressed, making it harder to view the contents), aren't signed, have dubious URLs or Bitcoin references embedded, and that look for various antivirus packages, along with other suspect signs.

Perhaps the anti-ransomware is scoring our simulator so low on this threat index that it assumes it's legitimate and allows the test to run, even though its actions are very ransomware-like.
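To make the idea of a threat index more concrete, here's a toy scoring sketch. It doesn't describe how any real product works: the `FileTraits` fields simply mirror the signs listed above, and the weights and the blocking threshold are invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class FileTraits:
    """Suspect signs an engine might check (hypothetical model)."""
    recently_downloaded: bool = False
    recent_timestamp: bool = False
    packed: bool = False
    signed: bool = True
    suspicious_strings: bool = False  # dubious URLs or Bitcoin references
    probes_antivirus: bool = False


# Invented weights and threshold: real engines don't publish theirs.
WEIGHTS = {
    "recently_downloaded": 2,
    "recent_timestamp": 1,
    "packed": 2,
    "unsigned": 2,
    "suspicious_strings": 3,
    "probes_antivirus": 3,
}
BLOCK_THRESHOLD = 6


def threat_index(traits: FileTraits) -> int:
    """Add up the weight of every suspect sign that is present."""
    present = {
        "recently_downloaded": traits.recently_downloaded,
        "recent_timestamp": traits.recent_timestamp,
        "packed": traits.packed,
        "unsigned": not traits.signed,
        "suspicious_strings": traits.suspicious_strings,
        "probes_antivirus": traits.probes_antivirus,
    }
    return sum(WEIGHTS[name] for name, found in present.items() if found)


def should_block(traits: FileTraits) -> bool:
    """Block only when the index reaches the (invented) threshold."""
    return threat_index(traits) >= BLOCK_THRESHOLD
```

Under these made-up numbers, a freshly built, unsigned program with no other warning signs, `FileTraits(recent_timestamp=True, signed=False)`, scores 3 and would be allowed to run, which is the kind of outcome that could explain a 'failed' test.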

Without knowing the precise reason for an anti-ransomware's failure to detect our simulator, we can't condemn it outright. It's taking a risk by allowing the simulator to run, but this isn't strong evidence that the software can't detect real-world threats. We don't read anything major into it, and neither should you.

The real value of our simulator test comes almost entirely from looking at the passes. If an anti-ransomware package detects our test threat, that first tells us it's more cautious about what it allows to run. But what's most important is how well it handles that threat and protects your data.

If an anti-ransomware package misses our simulator, then, we could say that's a very small black mark (or maybe a light gray mark). But detecting and blocking the simulator is a big plus, and doing that in a way which prevents any data loss – recovering encrypted files, for instance – while keeping you up-to-date with informative alerts, indicates top-of-the-range technology which should also protect you well against real-world threats.

Mike is a lead security reviewer at Future, where he stress-tests VPNs, antivirus and more to find out which services are sure to keep you safe, and which are best avoided. Mike began his career as a lead software developer in the engineering world, where his creations were used by big-name companies from Rolls Royce to British Nuclear Fuels and British Aerospace. The early PC viruses caught Mike's attention, and he developed an interest in analyzing malware, and learning the low-level technical details of how Windows and network security work under the hood.