Searching for keywords

For a search engine, the original table is going to have billions of rows, since there are billions of pages on the web. The rows will be relatively short (maybe fewer than a thousand fields). The inverted table, by contrast, will have far fewer rows – let's say of the order of a million or so – but each row could be huge.

Note something interesting about the data stored for each word, though: it is highly compressible. We made the document names numbers, with a table that would give us the actual document for a given number. Now, there's no reason to store the numbers themselves. Instead, we could have a large bitmap – an array of bits – for each word. If document number x has the word, then bit x in the bitmap would be set, otherwise it would be clear.
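As a rough illustration of the idea, here is a minimal sketch that builds one bitmap per word for a tiny corpus. It assumes a Python integer is an acceptable stand-in for an array of bits; the names `build_bitmaps` and `doc_ids` and the three sample documents are made up for this example.

```python
def build_bitmaps(docs):
    """Map each word to an int whose bit x is set if document x contains it."""
    bitmaps = {}
    for doc_id, text in enumerate(docs):
        for word in set(text.lower().split()):
            bitmaps[word] = bitmaps.get(word, 0) | (1 << doc_id)
    return bitmaps


def doc_ids(bitmap):
    """List the document numbers whose bits are set in the bitmap."""
    ids = []
    position = 0
    while bitmap:
        if bitmap & 1:
            ids.append(position)
        bitmap >>= 1
        position += 1
    return ids


# Hypothetical corpus: the position in the list is the document number.
docs = [
    "the inverted file maps words to documents",
    "a file system stores each file on disk",
    "an inverted index is also called an inverted file",
]
bitmaps = build_bitmaps(docs)
print(bin(bitmaps["inverted"]))   # 0b101
print(doc_ids(bitmaps["file"]))   # [0, 1, 2]
```

The word 'inverted' appears in documents 0 and 2, so bits 0 and 2 are set in its bitmap and bit 1 is clear.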

If we posit our hypothetical search engine indexing a trillion pages from the web, each of these bit arrays would be about 125GB in size when uncompressed (a trillion bits at eight bits per byte). However, for words that are not commonly used, the vast majority of the bits in these bitmaps would be clear, or 0. Highly compressible, in other words.
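The size estimate and the compressibility claim can both be checked with a few lines of code. The sketch below first works out the raw size of a trillion-bit bitmap, then applies run-length encoding to a sparse bitmap. Run-length encoding is only one possible compression scheme, chosen here because it is easy to follow; the article does not say which scheme a real search engine uses, and the function name `run_lengths` is an assumption of this example.

```python
pages = 10**12                  # one bit per page in the hypothetical index
raw_bytes = pages // 8          # eight bits per byte
print(raw_bytes / 10**9)        # 125.0 (decimal gigabytes)


def run_lengths(bitmap, size):
    """Encode bits 0..size-1 as alternating run lengths, starting with a run of 0s."""
    runs = []
    current, length = 0, 0
    for position in range(size):
        bit = (bitmap >> position) & 1
        if bit == current:
            length += 1
        else:
            runs.append(length)
            current, length = bit, 1
    runs.append(length)
    return runs


# A rare word found only in documents 3 and 900 of a 1,000-document corpus.
rare_word = (1 << 3) | (1 << 900)
print(run_lengths(rare_word, 1000))   # [3, 1, 896, 1, 99]
```

Here a 1,000-bit bitmap collapses to five small numbers, because almost all of it is long runs of clear bits.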

There's another reason for storing the inverted file as a bitmap like this. Consider now the search string entered by the user and how that will be used. When we use a search engine, we don't usually search for individual words. More commonly we tend to search for phrases – 'inverted file', for example.

Consider what this search phrase means to the search engine: it means that each document presented to the user in the result set must contain both the word 'inverted' and the word 'file'. This is a logical 'AND' operation. The search engine is able to retrieve the data for each word, and in order to find the document numbers that have both words, all it needs to do is the same logical AND operation on the bitmaps.

This will produce a new bitmap with bits set where both bits were set in the original two bitmaps, and clear otherwise. Queries can therefore be mapped easily onto the inverted file data structure. So far, we've seen how to use an inverted file in order to find documents that contain particular words.
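The AND step is short enough to sketch directly. The example below assumes each word's bitmap is held as a Python integer in a dictionary; the bitmap values and the function name `search_all` are invented for illustration.

```python
def search_all(bitmaps, phrase):
    """Return the bitmap of documents containing every word in the phrase."""
    words = phrase.lower().split()
    if not words:
        return 0
    result = bitmaps.get(words[0], 0)
    for word in words[1:]:
        # A word missing from the index has an all-clear bitmap.
        result &= bitmaps.get(word, 0)
    return result


# Hypothetical per-word bitmaps for a corpus of four documents (bit x = document x).
bitmaps = {
    "inverted": 0b1011,   # documents 0, 1 and 3
    "file":     0b0110,   # documents 1 and 2
}
matches = search_all(bitmaps, "inverted file")
print(bin(matches))   # 0b10, i.e. only document 1 has both words
```

Note that this finds documents containing both words anywhere in the text, which is what the AND operation described above gives; it does not check that the words appear next to each other.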

It must be emphasised that for a commercial search engine like Google, these indices are gigantic. They're so big that they are spread out and duplicated over many servers. However, for smaller sets of documents (usually known as a corpus) – for example a large group of PDFs, Office files or emails that you have on disk – creating an inverted file to index them is very easy, and can reduce search times dramatically.

Indeed, the hardest part of completing such an exercise is understanding the document format. Another point we must make is that for the vast majority of searches done on the Internet, users only look at the first couple of pages of results.

Rarely do people venture beyond those first 20 matches, and indeed they mostly stay on the first page. If the result you want is not there, it's easier to type in a slightly different search phrase than to scan-read the next two or three pages; after all, that first page of results may have given you extra hints on how to search for what you want. So it is important that those first 10 to 20 results are as relevant as possible.

While they were at Stanford University, Sergey Brin and Larry Page published a paper called The Anatomy of a Large-Scale Hypertextual Web Search Engine in which they described implementing a new prototype of a search engine.

This search engine was called Google (a misspelling of googol, a word meaning 10 to the hundredth power). In the paper, Brin and Page talk about how to make search results more relevant and compelling by using an algorithm called PageRank (named after Larry Page).

Ranking the results

PageRank is a voting system for analysing links. Suppose we have a web page on the Internet, and there are 10 other pages linking to it. That page will then be deemed more important or relevant than a second page that has only five other pages linking to it.

The importance (or quality) of the first page will be higher than that of the second page, just because it has more incoming links. Brin and Page recognised, however, that not all incoming links are equally important. They modified this simple citation-counting algorithm by assigning a PageRank to each page and then weighting the importance of each incoming link by the PageRank of the source page.

The upshot of this change is that a link from a page with a high PageRank is more important than a link from a page that has a low PageRank. This concept makes sense; some sites are more trustworthy than others. Brin and Page extended this concept further by deciding that if the source page has a high number of outgoing links, it dilutes the importance of each link.

The PageRank of a page, then, is the sum, over all the pages linking to it, of each linking page's PageRank divided by the number of outgoing links that linking page has. Intuitively, this formula encapsulates the view that if a page is cited from other (well-cited) pages on the Internet, it's probably relevant and worth investigating.
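Because each page's PageRank depends on the PageRanks of the pages linking to it, the values are usually found by repeated approximation. The following is a minimal sketch of that iteration, not Google's implementation. It makes several assumptions not described in this article: it includes the damping factor from Brin and Page's paper (0.85 is the value the paper suggests), it scales the ranks so that they sum to 1, and it lets a page with no outgoing links share its rank among all pages. The four-page link graph is invented and is not the one in Figure 3.

```python
def pagerank(links, damping=0.85, iterations=50):
    """links maps each page to the list of pages it links to.

    Every linked-to page must also appear as a key in links.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, targets in links.items():
            if targets:
                # Each outgoing link carries an equal share of the page's rank.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Assumption: a page with no links spreads its rank over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical link graph: D links to everything, but nothing links to D.
links = {"A": ["B"], "B": ["A", "C"], "C": ["A"], "D": ["A", "B", "C"]}
ranks = pagerank(links)
for page in sorted(ranks, key=ranks.get, reverse=True):
    print(page, round(ranks[page], 3))
```

In this graph D ends up with the lowest rank because no page cites it, while C ranks well below A even though each of C's incoming links comes from the same pages that link to A: B splits its vote between two links, and D splits its vote three ways.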

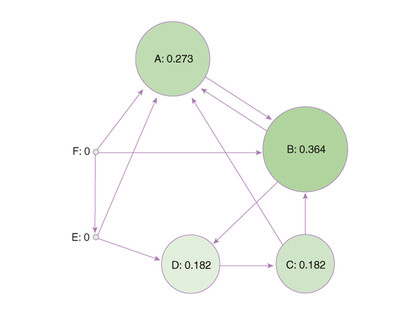

Figure 3: The PageRank of a website depends on how many other well-cited pages link to it

Figure 3 shows a calculation of PageRank for a small corpus of six documents, A through F. The links between the documents are shown by arrows, and the area of a document is proportional to its PageRank. Notice that although A has more links coming in than B does, B has the higher PageRank, because A has more links from pages that are irrelevant (E and F in particular).

Another way to view PageRank is to imagine a person who, once he's given a random page to start from, randomly clicks on links on the pages he visits without hitting the Back button. The PageRank of a page is essentially the statistical probability that he visits that page. The PageRank that Google Toolbar displays for pages is not this probability.
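The random-clicker picture can be simulated directly by counting how often each page is visited. This is a rough sketch under stated assumptions: the three-page link graph is invented, the surfer restarts on a random page if he reaches a page with no links, and the occasional jump to a random page that Brin and Page's paper adds to the model is left out to match the description above. The function name `random_surfer` is hypothetical.

```python
import random


def random_surfer(links, steps=100_000, seed=0):
    """Estimate visit probabilities by following random links for a number of steps."""
    rng = random.Random(seed)
    pages = list(links)
    visits = {page: 0 for page in pages}
    current = rng.choice(pages)
    for _ in range(steps):
        visits[current] += 1
        targets = links[current]
        # Follow a random link; restart on a random page at a dead end.
        current = rng.choice(targets) if targets else rng.choice(pages)
    return {page: count / steps for page, count in visits.items()}


# Hypothetical link graph: A links to B, B links to A and C, C links to A.
links = {"A": ["B"], "B": ["A", "C"], "C": ["A"]}
frequencies = random_surfer(links)
for page, share in frequencies.items():
    print(page, round(share, 3))
```

For this graph the long-run visit probabilities work out to 0.4 for A, 0.4 for B and 0.2 for C, and the simulated frequencies should settle close to those values as the number of steps grows.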

Instead, using a formula that remains a trade secret, Google converts a PageRank probability to a whole number between 0 and 10, with 0 meaning 'unranked' (the page is too new to have been cited often) and 10 assigned to the most important or highest-quality pages. There's only one 10 as far as we know: the Google search page.

Sites like Wikipedia, Twitter and Yahoo! manage a 9 for their homepages, whereas Facebook only manages an 8. Now that we have a method for determining a page's importance, we can fairly easily order the search results for a given query by their importance and quality.
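Putting the two halves together, ordering the results is a sort of the matching document numbers by their PageRank. The sketch below assumes the matches arrive as a bitmap held in a Python integer and that a PageRank score is already known for every document; the scores, the bitmap and the function name `ranked_results` are all made up for illustration.

```python
def ranked_results(match_bitmap, pagerank_by_doc):
    """Return matching document numbers, highest PageRank first."""
    matches = [doc_id for doc_id in range(len(pagerank_by_doc))
               if (match_bitmap >> doc_id) & 1]
    return sorted(matches, key=lambda doc_id: pagerank_by_doc[doc_id], reverse=True)


# Hypothetical PageRank scores, indexed by document number.
pagerank_by_doc = [0.10, 0.15, 0.05, 0.40, 0.30]
match_bitmap = 0b11010            # documents 1, 3 and 4 matched the query
print(ranked_results(match_bitmap, pagerank_by_doc))   # [3, 4, 1]
```

A real engine would only need the top 10 or 20 entries of this list, since those are the results most users ever look at.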

This article should have given you some insight into how a modern search engine works. There are more details to understand, though, and a good place to start is Brin and Page's paper.

-------------------------------------------------------------------------------------------------------

First published in PC Plus Issue 281
