Once the hacker has constructed a detailed footprint of the website's hardware and basic software, their attention turns to the web applications. It is widely accepted that beyond a certain level of complexity, no program can realistically be guaranteed free of bugs. Luckily for the hacker, less experienced programmers keep making the same basic assumptions and logic mistakes in web applications.

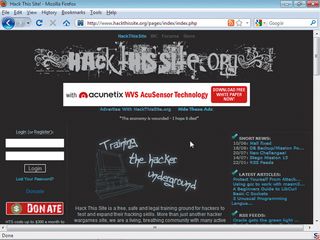

The first task is to map out how the apps work. The hacker begins with a simple click-through of the site to note the names of the pages and what they contain – which ones need authentication, for example. This tells them a great deal, but they can also delve deeper by mapping out the actual directory structure on the server.

Directory mapping is a matter of noting down the paths in the URL bar of the browser during the click-through. This reveals a lot of detail about the structure of the site, and can give indications about the skill of the web designer. Some structures reveal the hand of a site-builder program at work, in which case a hacker can consult a list of known exploits for sites made with that program.
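As a rough illustration of the note-taking described here, the sketch below reduces a list of visited URLs to the directory structure they imply. The function name `directory_map` and the sample URLs are made up for this example, and it uses only Python's standard library.

```python
from urllib.parse import urlparse
import posixpath

def directory_map(urls):
    """Return the sorted set of directory paths implied by a list of URLs."""
    dirs = set()
    for url in urls:
        path = urlparse(url).path or "/"
        # A path ending in "/" is already a directory; otherwise drop the file name.
        directory = path if path.endswith("/") else posixpath.dirname(path)
        # Record the directory and each of its parents up to the root.
        while True:
            directory = directory.rstrip("/") or "/"
            dirs.add(directory)
            if directory == "/":
                break
            directory = posixpath.dirname(directory)
    return sorted(dirs)

# Hypothetical URLs noted during a click-through.
visited = [
    "http://www.victimsite.com/index.php",
    "http://www.victimsite.com/shop/items/view.php?id=7",
    "http://www.victimsite.com/members/login.php",
]
print(directory_map(visited))
```

Running the same exercise against your own site shows how much of its layout a casual visitor can reconstruct.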

The hacker notes the methods pages use to pass data from input boxes to PHP scripts. Do they use the GET or POST methods? Each implies the type of processing used by the site's scripts, and may provide ideas about testing for weaknesses in the way rogue input values are dealt with.
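A page's HTML source states which method each form uses. The sketch below lists the method, target script and field names of every form in a page, using Python's standard `html.parser` module. The class name `FormLister` and the sample markup are invented for illustration, and the parser is deliberately simple: it attributes every input to the most recently opened form.

```python
from html.parser import HTMLParser

class FormLister(HTMLParser):
    """Collect the method, action and input names of each form on a page."""

    def __init__(self):
        super().__init__()
        self.forms = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "form":
            self.forms.append({
                # HTML defaults to GET when no method attribute is given.
                "method": (attrs.get("method") or "get").upper(),
                "action": attrs.get("action") or "",
                "inputs": [],
            })
        elif tag == "input" and self.forms:
            self.forms[-1]["inputs"].append(attrs.get("name"))

# Hypothetical page source.
page = """
<form action="/search.php"><input name="q"></form>
<form method="post" action="/login.php">
  <input name="user"><input name="pass" type="password">
</form>
"""

lister = FormLister()
lister.feed(page)
for form in lister.forms:
    print(form["method"], form["action"], form["inputs"])
```

With GET the field values appear in the URL itself, while with POST they travel in the request body, so the listing shows which inputs can be altered simply by editing the address bar.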

Web servers are usually configured to serve a default index file for any directory URL you enter, so the hacker will try adding likely directory names to the domain name to see what they can find. For example, for www.victimsite.com, they might try 'www.victimsite.com/admin' to see if they can access an administration page.
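A site owner can run the same kind of check against their own site to see which obvious names respond. The sketch below is a minimal outline under stated assumptions: `find_exposed` and `fake_fetch` are hypothetical names, and the HTTP request is replaced by a stand-in dictionary so the example runs offline. In practice `fetch_status` would be any function that requests a URL and returns its status code.

```python
def find_exposed(base_url, names, fetch_status):
    """Return the candidate URLs that answer with HTTP 200.

    fetch_status is any callable that takes a URL and returns a status code.
    """
    exposed = []
    for name in names:
        url = base_url.rstrip("/") + "/" + name
        if fetch_status(url) == 200:
            exposed.append(url)
    return exposed

# Stand-in for a real HTTP request: only /admin "exists" on this pretend site.
fake_site = {"http://www.victimsite.com/admin": 200}

def fake_fetch(url):
    return fake_site.get(url, 404)

print(find_exposed("http://www.victimsite.com", ["admin", "backup", "test"], fake_fetch))
```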

Another great source of hidden directories is the 'robots.txt' file. This is a plain text file that sits in the root directory of websites and contains directives to search engines about which directories are OK to index and which aren't. Anyone can read it, so a 'robots.txt' file that lists the directories the owner wants kept out of search results also tells the hacker exactly where to look. This highlights the need to avoid putting anything on a site that you wouldn't want to find on Google. Where you can't avoid this, don't give files obvious names.
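To see what your own 'robots.txt' gives away, it is enough to pull out its Disallow lines. The sketch below does that for a sample file; the function name `disallowed_paths` and the sample contents are invented for illustration.

```python
def disallowed_paths(robots_txt):
    """Return the paths named in Disallow directives of a robots.txt file."""
    paths = []
    for line in robots_txt.splitlines():
        # Drop comments, then split "Field: value" at the first colon.
        line = line.split("#", 1)[0].strip()
        field, sep, value = line.partition(":")
        # An empty Disallow value means "nothing is disallowed", so skip it.
        if sep and field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths

# Hypothetical robots.txt contents.
sample = """\
User-agent: *
Disallow: /admin/
Disallow: /backup/   # old site copy
Allow: /public/
Disallow:
"""
print(disallowed_paths(sample))
```

Every path this prints is one that a curious visitor can read just as easily as a search engine can.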

So-called helper files are also a useful source of information for the hacker. Cascading style sheets, Java classes and embedded JavaScript all give an indication of the skill level of the person who coded the site. This gives an idea of the kinds of errors to expect.

One of the biggest mistakes made in trying to secure a site is to authenticate users with JavaScript embedded in the login page. To the developer, the ease of use, compatibility across browsers and speed all seem like benefits, but the advantages are all on the side of the hacker: the code runs in the visitor's browser, where it can be read, altered or simply skipped.
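The alternative is to check credentials on the server, where the visitor cannot read or change the code. The sketch below is one minimal way to do that with Python's standard library, not a complete login system: the function names, the iteration count of 100,000 and the sample password are all assumptions chosen for the example.

```python
import hashlib
import hmac
import os

def hash_password(password, salt=None):
    """Derive a salted hash to store on the server instead of the password."""
    salt = salt or os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)
    return salt, digest

def verify_password(password, salt, expected_digest):
    """Re-derive the hash from the submitted password and compare the two."""
    _, digest = hash_password(password, salt)
    # compare_digest takes the same time whether or not the values match.
    return hmac.compare_digest(digest, expected_digest)

# Hypothetical account: only the salt and digest would be stored.
salt, stored = hash_password("correct horse")
print(verify_password("correct horse", salt, stored))
print(verify_password("guess", salt, stored))
```

Because the comparison happens on the server, viewing the page source reveals nothing about how a valid login is recognised.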

The attack begins

The hacker now has a footprint of your site, a list of hidden directories and a map of how the scripts fit together to make up the site's applications. What now?

They have many options to choose from. The simplest is to point a password-cracker at the administrator account and leave it to gain entry for the purposes of stealing information that can be sold on, or to simply deface the site. This childish activity is normally associated with 'script kiddies' – teenagers who consider themselves hackers, but just run scripts developed by others.

Real hackers will begin to explore weaknesses in the services identified as part of the footprint. On discovering an SQL service, for example, they can try connecting directly to its port, with a telnet client or a database client, in an attempt to gain command line access to the database management system (DBMS). Similarly, FTP and Telnet services are easily attacked using password crackers and by exploiting bugs in their software with buffer overflow attacks that can give command line access to the server.

A more knowledgeable hacker still will try to break or bypass the validation code applied to an input field on a web page to see what happens. Validation code is supposed to take the values of input fields and sanitise them, rejecting data that's clearly wrong.

If an input field is supposed to contain a number, for example, its validation should reject input if it contains anything other than the digits 0-9. If the hacker enters letters and doesn't get an error message, they can tell that the validation code is lacking. If the input field is for a part number, it may form the basis of a query to a back-end SQL database.
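A server-side check of this kind can be very short. The sketch below accepts a value only if it consists entirely of the digits 0-9; the function name `is_valid_part_number` and the `max_length` limit are assumptions made for the example.

```python
import re

def is_valid_part_number(value, max_length=10):
    """Accept only strings made up of 1 to max_length ASCII digits."""
    # fullmatch requires the whole string to match, so "12a" or "12\n" fail.
    return re.fullmatch(r"[0-9]{1,%d}" % max_length, value) is not None

for candidate in ["12345", "12a45", "", "1' OR '1'='1"]:
    print(repr(candidate), is_valid_part_number(candidate))
```

The pattern spells out `[0-9]` rather than relying on `str.isdigit()`, which also accepts digit characters from other scripts.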

That being the case, the hacker will ask what happens if they enter a single quote (the ' symbol, which ends a string value in SQL) followed by their own query. If this works, they could then ask the database for a list of tables. If they find ones that store customer details and credit card information, it's game over.
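The sketch below reproduces this attack on a throwaway in-memory SQLite database, then shows the same lookup written with a parameterised query. The table names, sample rows and function names are invented for the example, and listing tables through `sqlite_master` is specific to SQLite; other database systems expose their table lists differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE parts (part_no TEXT, name TEXT);
    CREATE TABLE customers (name TEXT, card TEXT);
    INSERT INTO parts VALUES ('1001', 'Widget');
    INSERT INTO customers VALUES ('Alice', '4111-XXXX');
""")

def lookup_unsafe(part_no):
    # Vulnerable: the input is pasted straight into the SQL text.
    sql = "SELECT name FROM parts WHERE part_no = '" + part_no + "'"
    return conn.execute(sql).fetchall()

def lookup_safe(part_no):
    # Parameterised: the input is passed as data and never parsed as SQL.
    sql = "SELECT name FROM parts WHERE part_no = ?"
    return conn.execute(sql, (part_no,)).fetchall()

# A quote closes the string, then the attacker's own query follows.
rogue = "x' UNION SELECT name FROM sqlite_master WHERE type = 'table' --"

print(lookup_unsafe(rogue))  # leaks the table names
print(lookup_safe(rogue))    # no part has that number, so nothing is returned
```

In the unsafe version the rogue input becomes part of the statement, so the database answers the attacker's question instead of the application's. In the safe version the whole string is compared against `part_no` as an ordinary value.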
