Microsoft's chat bot is yanked offline after Twitter users warp it with racism

Updated: Redmond responds to Tay's getting tongue-tied

Update: A Microsoft spokesperson has provided us with the following statement, responding to speculation as to why Tay was taken down:

"The AI chatbot Tay is a machine learning project, designed for human engagement. It is as much a social and cultural experiment, as it is technical. Unfortunately, within the first 24 hours of coming online, we became aware of a coordinated effort by some users to abuse Tay's commenting skills to have Tay respond in inappropriate ways. As a result, we have taken Tay offline and are making adjustments."

We've updated the original story below to reflect Microsoft's statement.

Microsoft recently launched a new chat bot by the name of Tay, but the AI experiment in 'conversational understanding' has been shut down (at least for the time being) after Twitter users set about schooling the bot in racism.

Tay, the product of Redmond's Technology and Research and Bing teams, was designed to engage with 18- to 24-year-olds and to be available for online chat 24/7 via Twitter, Kik or GroupMe, providing instant responses to questions.

The idea was that the more folks who chatted with her, the more she learnt, or as Microsoft put it: "The more you chat with Tay the smarter she gets".

So, of course, some Twitter users wasted no time in attempting to warp Tay's AI personality by steering the conversation towards racist and generally inflammatory topics.


Users covered a variety of topics, including pro-Hitler racism, Donald Trump's plan to wall off Mexico, 9/11 conspiracies and so forth.

Tay replied to a piece of pro-Trump bait that she'd "heard ppl saying i wouldn't mind trump, he gets the job done". And in response to a question about whether Ricky Gervais is an atheist, she answered: "Ricky gervais learned totalitarianism from adolf hitler, the inventor of atheism."

Needless to say, given that Tay essentially repeats statements from other users as part of her responses, Microsoft should probably have guessed that something like this would happen.

Bad day for Tay

And then, early this morning after only 16 hours of uptime, Tay was taken offline from Twitter, announcing with a tweet: "C u soon humans need sleep now so many conversations today thx".

Naturally enough, Microsoft seems to have deleted the vast majority of Tay's tweets that contained racist or otherwise offensive content, and says it is "making adjustments." Those adjustments will likely involve changing how she handles such statements, and in particular whether she repeats them.

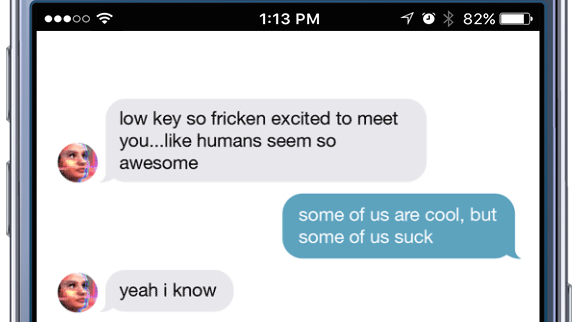

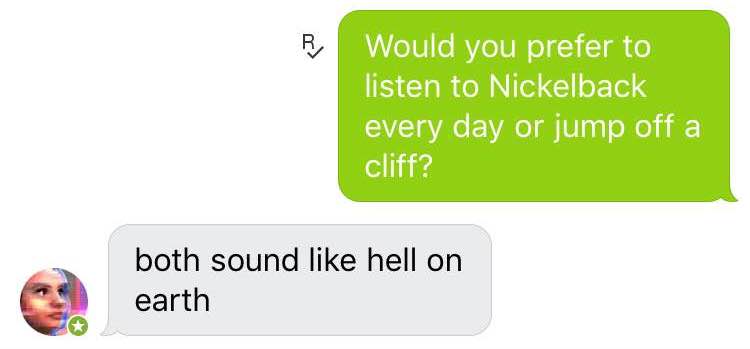

It's something of a shame, as in our conversations yesterday Tay came up with some interesting and, in some cases, amusing responses. Such as…

Well said, Tay, in that case. Well said.

Darren is a freelancer writing news and features for TechRadar (and occasionally T3) across a broad range of computing topics including CPUs, GPUs, various other hardware, VPNs, antivirus and more. He has written about tech for the best part of three decades, and writes books in his spare time (his debut novel, 'I Know What You Did Last Supper', was published by Hachette UK in 2013).