IT infrastructure failing as if the past two decades never happened

In Greek mythology, King Sisyphus was an arrogant ruler who believed he was smarter than Zeus. As punishment for his hubris, Sisyphus was tasked with pushing a boulder up a hill in the underworld, only to have it spin out of his control and roll back down.

Based on recent data center downtime events, we can only describe the process of keeping IT infrastructure running as Sisyphean. Data center owners and operators repeatedly watch as the boulder slips from their grasp and back down the hill.

Businesses have required uninterrupted access to IT services for the past two decades. So, the majority have adopted technical, engineering and management best practices to avoid downtime incidents.

Companies invest billions of dollars and countless hours of planning, drills and preparation for operational readiness. And yet, downtime incidents still plague the industry; the rock keeps rolling back.

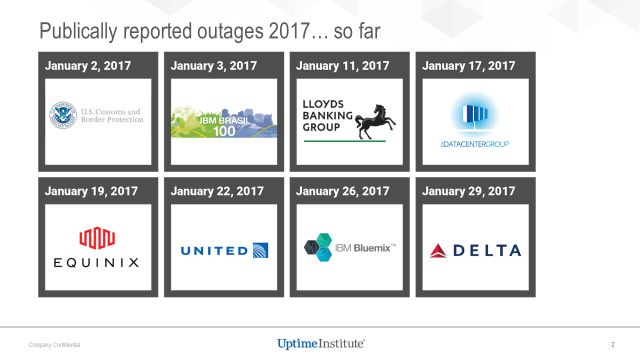

There were eight major IT service outages in the first month of 2017 alone.

The point of evaluating large public IT infrastructure outages is not to shame these organizations, but to point out that it can happen to anybody, from industry leaders like Amazon, which prides itself on its resilience strategies, to small government agencies.

The reasons for the outages are so often the same problems manifesting over and over again. The common thread of these events, across enterprises and service providers, is preventability. These companies and sites invested and prepared to fend off conditions that cause outages, and they failed. Mistakes were made.

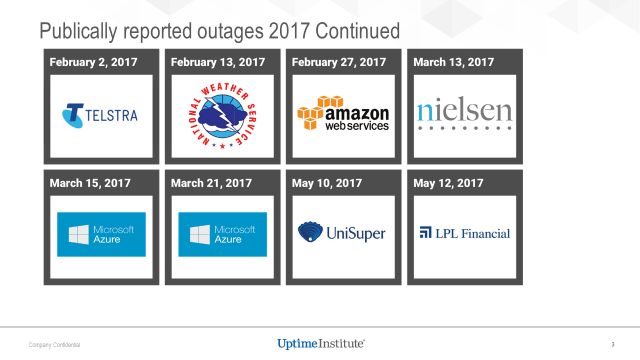

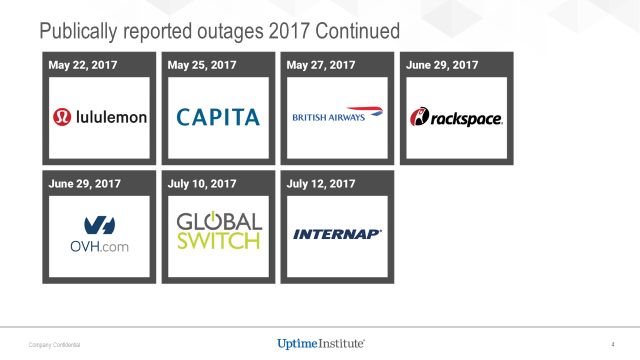

There have been more outages since this list was compiled, but you can see how in a single month, many high-profile brands with critical online and digital business processes were crippled by mistakes our industry purports to have solved twenty years ago.

Why are companies that have invested in multiple levels of physical and logical redundancy still going down at the rates we’re seeing today? It’s not as if there are no standards, technologies and processes in place to prevent downtime.

These failures continue to happen because preventative policies and fail-safes are rendered ineffective by human errors.

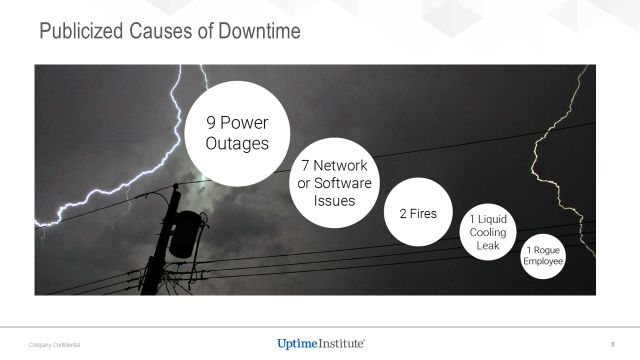

Not all of the reports provided the exact reasons for outages, but at least 40 per cent of the downtime incidents were due to power failure. The most basic function of an enterprise data center is to mitigate this exact risk, and it’s still the primary cause of downtime.

About 30 per cent of the problems were due to network or software failures. And only a handful occurred due to “freak accidents.” Again, the causes of downtime had been anticipated and prepared for, and the sites still went down.

The takeaway from this analysis is that a huge percentage of these costly incidents didn’t need to happen. The problems were totally preventable – including the two highest-profile downtime incidents of the past year:

British Airways threw a data center engineering contractor under the bus for flipping the wrong switch, causing a cascading outage that cost hundreds of millions of dollars. But why was a poorly trained or under-prepared contractor in that position in the first place?

When Amazon knocked major clients around the globe offline, it determined that the incident was caused by a technician’s mistyped keystroke. Again, why was that technician put in a position where that kind of cascading failure was even possible?

The industry at large overly focuses on “human error,” which we think is a misleading term. It’s a management failure when someone is untrained or unfamiliar with emergency and standard procedures, or how to manage certain modes of equipment – not human error.

The failure lies with the manager who allowed this situation to take place, not the front-line technician trying to save a situation.

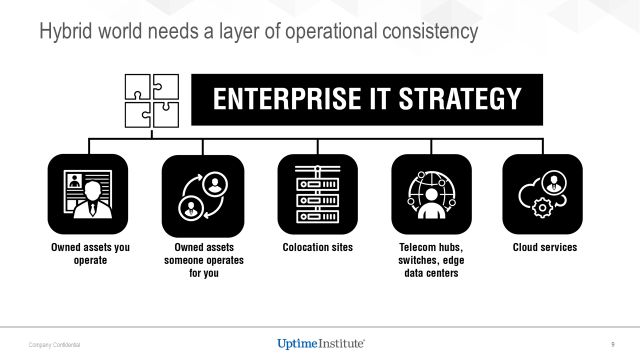

Ultimately, today’s IT infrastructure relies on an ecosystem of providers and data centers, assets often stacked interdependently on top of each other like a Jenga tower. If you pull out some seemingly insignificant support, the whole thing may collapse.

And yet, let’s compare the various industry reactions to these recent outages. On one hand, you have CEOs from airlines taking ownership of their shortfalls in the Wall Street Journal and the New York Times.

By contrast, the world’s largest cloud computing provider claimed that it goes down so rarely that it didn’t know how to process its major outage.

Frankly, even the most cursory internet research would tell you that response is wildly inaccurate. And yet, it is illustrative of the way the hyperscale cloud providers view the enterprise: take it or leave it. And for the most part, people are taking it.

According to Uptime Institute’s 2017 Data Center Industry Survey, only eight per cent of respondents reported that their executive management was less concerned about IT service outages than they were a year ago.

That means 92 per cent of infrastructure execs are as concerned, if not more so, about their organization’s IT resiliency and availability. Yet the rate of outages continues unabated: 25 per cent of respondents reported experiencing an IT service outage in the past year.

For the past two decades, companies have been pushing that boulder up the hill, knowing it’s going to come crashing back down. IT infrastructure is becoming increasingly complex, interdependent and fragmented.

There isn’t an easy answer that’s going to magically emerge when the hyperscales have reduced most of enterprise IT to a ghost town of legacy hardware. These companies aren’t being paid to mitigate and manage your organization’s risk – you are.

There are well-worn practices execs can implement to successfully manage IT infrastructure risk, but a good first step would be to reckon with two of the biggest challenges facing our industry:

· Despite decades of training, investment and experience, data center crashes are common, and happen for the same reasons they did twenty years ago. Lack of attention to detail, proper management, and accountability results in outages around the globe.

· Ongoing adoption of cloud computing and colocation by enterprise IT departments is making IT systems more fragile in at least the short term, as interdependent IT assets are managed under varying service levels and investments, often with little regard for peripheral implications.

As organizations continue to adopt hybrid IT models, these examples illustrate that we cannot take availability for granted. IT outages are rampant, and totally preventable.

This piece is part one of two on infrastructure failure. Read part two to learn about best practices organizations can use to minimize their risk of becoming a cautionary tale like the ones in today’s article.

- Matt Stansberry is the Uptime Institute Senior Director of Content & Publications and the Program Director for Uptime Institute Symposium

- Lee Kirby is the President of Uptime Institute

Matt Stansberry is the Uptime Institute Senior Director of Content & Publications and Program Director for Uptime Institute Symposium. He has researched the convergence of technology, facility management, and energy issues in the data centre since 2003. Stansberry is also responsible for the annual data centre survey, and develops the agenda for Uptime Institute industry events including Symposium and Charrette.