Nvidia Computex 2024 keynote liveblog: Nvidia's data center presentation as it happened

Nvidia's Jensen Huang lays out Team Green's AI plans for 2024

Nvidia is getting Computex 2024 started rather early with a press event the evening before the conference is slated to officially kick off with the opening keynote by AMD's Dr. Lisa Su.

AMD has traditionally opened up the Computex conference with the first keynote of the event, but AMD got bumped at the last minute in 2023 for Nvidia CEO Jensen Huang, and AMD pretty much unofficially boycotted the show last year as a result.

That's how it goes between these two archrival tech companies though, and so even though AMD's Su has regained the company's regular position as the conference opener, leave it to Nvidia to find a way to rain a little on AMD's return to prominence with a surprise press event the night before.

But I'm not here to pick sides. Rather, I'm here to bring you the latest from Team Green, whatever it may be, and help put it all into context for you. So stick with me as Nvidia reveals its plans for the rest of 2024, though given how the past two years have gone for Nvidia, I can already tell you that whatever Nvidia reveals, it's going to be all about AI, all the time.

John has been covering computer hardware for TechRadar for more than four years now, and has spent the past two years as TechRadar's components editor, where he's tested more AMD, Intel, and Nvidia hardware than is healthy for any one human being.

How to watch the Nvidia keynote

I've gone ahead and embedded the link for Nvidia's keynote below in case you want to follow along with me, with my hot takes and analysis below the stream.

Welcome to Computex 2024, folks! This is John, TechRadar's components editor, and I'm on the ground in Taipei for the biggest computing event of the year, Computex 2024.

Tonight's event will be held at the NTU Sports Center at National Taiwan University, which was already full to capacity before I even knew we could register for it, so I'll be watching it live alongside the rest of you. On the plus side, that also means I don't have to leave my very nice, air-conditioned hotel room, which is a blessing after the heat of the past two days.

You can follow along with the video above and keep it right here as I dig into any news Nvidia breaks during this event and give my gut-level analysis as it happens.

OK, we're about to get underway in a few moments, though it looks like the livestream is running a bit behind.

Still holding on the livestream, so in the meantime, let me tell you, it has been raining like crazy for the past couple days here in Taipei. Hopefully the weather clears up for the conference this week, though the weather forecast doesn't look so hot.

Also, shout out to Bogart's Smokehouse in Nangang, Taipei. Best BBQ I've had since I was in school in Texas. It's always nice to walk past all the noodle shops and dumpling spots in the city and then stumble upon a little taste of home.

OK, the livestream is starting up, so here we go.

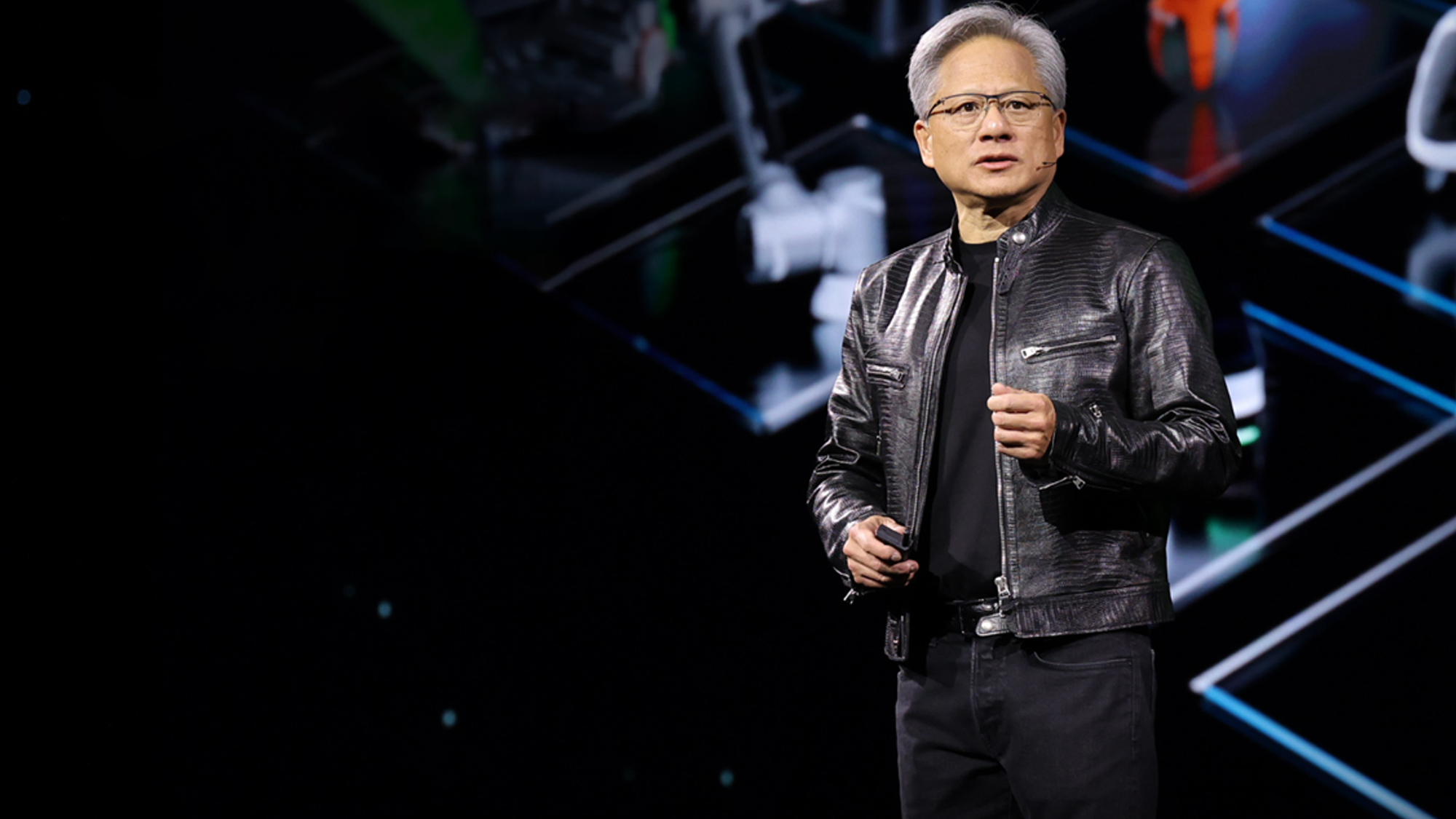

I think that's the first time I've seen Jensen not wearing his leather jacket.

Did y'all know that Nvidia is getting really into AI? Well, if you didn't, you're going to hear all about it. And I mean all about it. I guarantee that 95% of this keynote is going to be strictly AI stuff.

Omniverse is Nvidia's industrial rendering and simulation Big Thing, so if you've got to lay out a real factory, and not just play at it in Satisfactory, then Omniverse is for you. For the rest of us, not so much.

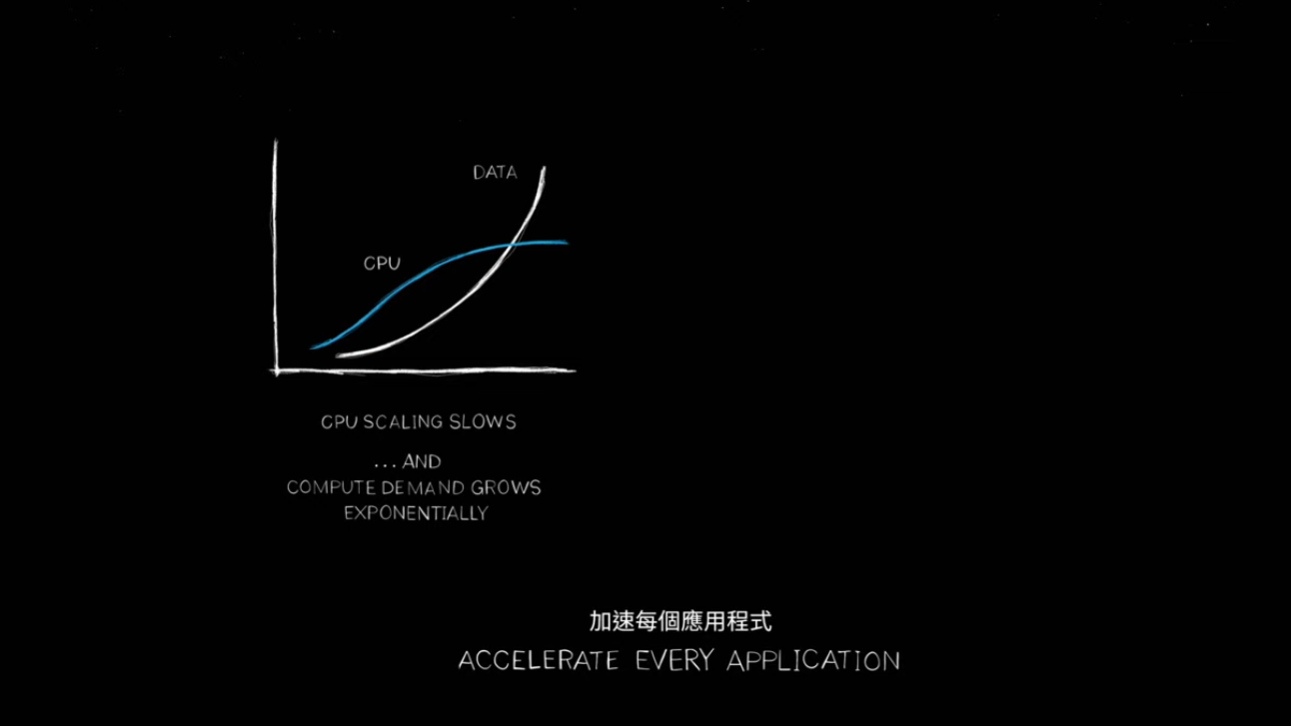

Computation inflation, huh? Well, it's as good an analogy for the end of Moore's Law as any.

So this kind of comp sci talk is fascinating to me, but I kind of get why this isn't an official Computex keynote. This is effectively a microarchitecture lecture, so if your eyes are starting to glaze over, I definitely get that. However, what Jensen is talking about is how Nvidia went from a $100 billion company to a $2 trillion company in like 18 months.

So one of the reasons a GPU is able to 'accelerate' computations that CPUs struggle with is that GPUs are built from the ground up for the massively parallel processing that graphics demands.
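To see why graphics maps so neatly onto parallel hardware, here's a toy Python sketch of my own (not anything Nvidia showed): every pixel's color comes from a function that depends only on that pixel's coordinates, so the pixels can be processed in any order, or all at once, and the image comes out identical. The `shade` function and its gradient are hypothetical stand-ins for a real shader.

```python
import random

WIDTH, HEIGHT = 8, 4

def shade(x: int, y: int) -> int:
    """Hypothetical per-pixel shader: a diagonal gradient from 0 to 255.

    The result depends only on this pixel's (x, y), never on any other pixel,
    which is what lets a GPU hand each pixel to its own thread.
    """
    return (x * 255 // (WIDTH - 1) + y * 255 // (HEIGHT - 1)) // 2

def render(order):
    """Fill a framebuffer by visiting pixels in whatever order we're given."""
    frame = [[0] * WIDTH for _ in range(HEIGHT)]
    for x, y in order:
        frame[y][x] = shade(x, y)
    return frame

pixels = [(x, y) for y in range(HEIGHT) for x in range(WIDTH)]
in_order = render(pixels)

shuffled = pixels[:]
random.shuffle(shuffled)
out_of_order = render(shuffled)

# Visiting order doesn't change the image, so the work can all run at once.
print(in_order == out_of_order)  # True
```

A CPU walks through that loop a handful of pixels at a time; a GPU throws thousands of threads at it simultaneously, which only works because no pixel has to wait on another.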

OK, the Traveling Salesman Problem is one of those problems in computer science that is so intractable that solving a large instance by brute force could take a classical computer something like 100,000 years. Nor is it true to say that a quantum computer can solve that problem; we do not know that a quantum computer can do this. And Nvidia certainly isn't about to solve the TSP using CUDA and accelerated computing.

The TSP goes like this: a salesman needs to visit X number of cities in a circuit, and the cities are all different distances from one another. How do you find the most efficient route for the salesman to visit every city on his list so that the total travel cost is minimized?

No efficient algorithm is known that solves this problem, and it's not known whether one exists. If the list is 10 cities long, this is not a hard problem to solve, but if that salesman has to visit 100 cities, brute forcing your way to the most efficient route would take far longer than a million years.
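A brute-force solver makes the scaling problem concrete. This is a minimal Python sketch of my own, using a made-up four-city distance matrix: it checks every possible route starting from city 0, and the helper that counts distinct round trips, (n - 1)!/2, shows why 10 cities is trivial while 100 cities is hopeless.

```python
import itertools
import math

def tour_length(tour, dist):
    """Total length of a closed tour that returns to its starting city."""
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]] for i in range(len(tour)))

def brute_force_tsp(dist):
    """Try every route that starts at city 0 and keep the shortest.

    Fixing the start city leaves (n - 1)! orderings to check, which is why
    this approach collapses as n grows.
    """
    n = len(dist)
    best_tour, best_len = None, math.inf
    for perm in itertools.permutations(range(1, n)):
        tour = (0,) + perm
        length = tour_length(tour, dist)
        if length < best_len:
            best_tour, best_len = tour, length
    return best_tour, best_len

def distinct_tours(n):
    """Distinct round trips for n cities: (n - 1)! / 2, since direction doesn't matter."""
    return math.factorial(n - 1) // 2

# Hypothetical symmetric distance matrix for four cities.
dist = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]
print(brute_force_tsp(dist))           # ((0, 1, 3, 2), 18)
print(distinct_tours(10))              # 181440
print(len(str(distinct_tours(100))))   # digits in the 100-city route count
```

For 10 cities that's 181,440 routes, which a laptop chews through in moments. For 100 cities the route count runs to 156 digits, and no amount of hardware is going to brute force that.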

The problem is scale. Every city you add at least doubles the time it takes to solve the problem exactly, and that exponential growth is devastating at scale. CUDA can speed up finding the route for something like 25 or 30 cities, but it is not a solution to these kinds of optimization problems.
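Exact algorithms do better than brute force, just not enough. The classic Held-Karp dynamic program (a standard textbook technique, not something from the keynote) remembers the shortest path through every subset of cities, cutting the work to roughly n² × 2ⁿ steps. That's vastly better than checking (n - 1)! routes, yet each added city still more than doubles the work. Here's a minimal Python sketch, reusing a made-up four-city distance matrix.

```python
import itertools

def held_karp(dist):
    """Exact TSP length via Held-Karp dynamic programming, O(n^2 * 2^n) time.

    best[(mask, j)] is the length of the shortest path that starts at city 0,
    visits exactly the cities in `mask` (a bitmask over cities 1..n-1), and
    ends at city j. Assumes at least two cities.
    """
    n = len(dist)
    best = {}
    # Base case: travel straight from city 0 to city j.
    for j in range(1, n):
        best[(1 << j, j)] = dist[0][j]
    # Grow the visited subsets one city at a time.
    for size in range(2, n):
        for subset in itertools.combinations(range(1, n), size):
            mask = 0
            for city in subset:
                mask |= 1 << city
            for j in subset:
                prev = mask & ~(1 << j)  # same subset without the final city j
                best[(mask, j)] = min(
                    best[(prev, k)] + dist[k][j] for k in subset if k != j
                )
    # Close the loop back to city 0 from whichever city ends the path.
    full = (1 << n) - 2  # bits for cities 1..n-1
    return min(best[(full, j)] + dist[j][0] for j in range(1, n))

# Hypothetical symmetric distance matrix for four cities.
dist = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]
print(held_karp(dist))  # 18
```

At 30 cities that's still on the order of a trillion steps, which is roughly where fast hardware stops helping and the exponential wall takes over.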

And trust me, if Nvidia had solved the traveling salesman problem, it would be many orders of magnitude more impactful on the world than generative AI. An efficient algorithm for the TSP would give you efficient algorithms for every NP-complete problem, hundreds of hard problems touching everything from shipping logistics to protein folding in medicine. It would be the most revolutionary advance since calculus, and it would be all over the news and in every computer science journal on Earth.

Earth-2 is just a very advanced simulation, no different in kind from any other simulation running on a cutting-edge supercomputer. Very powerful, but not truly revolutionary.

So let's also talk more about these terms. What Jensen is talking about with these AI supercomputers is the tensor core, a type of circuitry that performs matrix multiplication very efficiently. Matrix multiplication is a very computationally intensive process, and an application-specific integrated circuit (ASIC) is a piece of processing circuitry built to do only one thing and nothing else, but to do it extremely well. A tensor core is effectively a matrix-multiplication ASIC, and as it turns out, machine learning is very dependent on matrix multiplication.
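Here's a minimal pure-Python sketch of the operation a tensor core accelerates: a fused matrix multiply-accumulate, D = A × B + C, done one small tile at a time. I've assumed 4×4 tiles because that's the shape of the original Volta-generation tensor core operation; newer generations use other tile shapes and lower-precision number formats, and the hardware does each tile in a single step rather than in Python loops.

```python
TILE = 4  # assumed tile edge, matching the original Volta-era 4x4x4 tensor core op

def mma_tile(a, b, acc, i0, j0, k0):
    """Multiply one TILE x TILE block of A by one of B and add it into acc.

    This block-sized multiply-accumulate is the unit of work a tensor core
    performs as a single hardware operation; here it is spelled out in loops.
    """
    for i in range(i0, i0 + TILE):
        for j in range(j0, j0 + TILE):
            total = acc[i][j]
            for k in range(k0, k0 + TILE):
                total += a[i][k] * b[k][j]
            acc[i][j] = total

def tiled_matmul_add(a, b, c):
    """Return D = A x B + C for square n x n matrices, n a multiple of TILE."""
    n = len(a)
    assert n % TILE == 0, "this sketch skips padding for ragged edges"
    d = [row[:] for row in c]  # start from C, so the tiles accumulate on top of it
    for i0 in range(0, n, TILE):
        for j0 in range(0, n, TILE):
            for k0 in range(0, n, TILE):
                mma_tile(a, b, d, i0, j0, k0)
    return d

# Quick check: A x I + 0 should give back A.
n = 8
a = [[(i + j) % 5 for j in range(n)] for i in range(n)]
identity = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
zeros = [[0] * n for _ in range(n)]
print(tiled_matmul_add(a, identity, zeros) == a)  # True
```

The AI connection: in a neural network layer, A can hold a batch of inputs, B the layer's weights, and C the bias, so every layer's forward pass boils down to a big multiply-accumulate like this one, repeated billions of times.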

This is how Nvidia's tensor core hardware, which is in its best graphics cards as well as its data center processors, has empowered Nvidia to be the leader in AI that it is right now.

If you were hoping to hear about graphics cards at this keynote, I'm so sorry you're having to sit through this. This is incredibly dry for your everyday consumer.

If you're an electrical engineer or computer scientist, I know you must be very excited about this keynote. People like us never really get big keynotes catering to our nerdom.

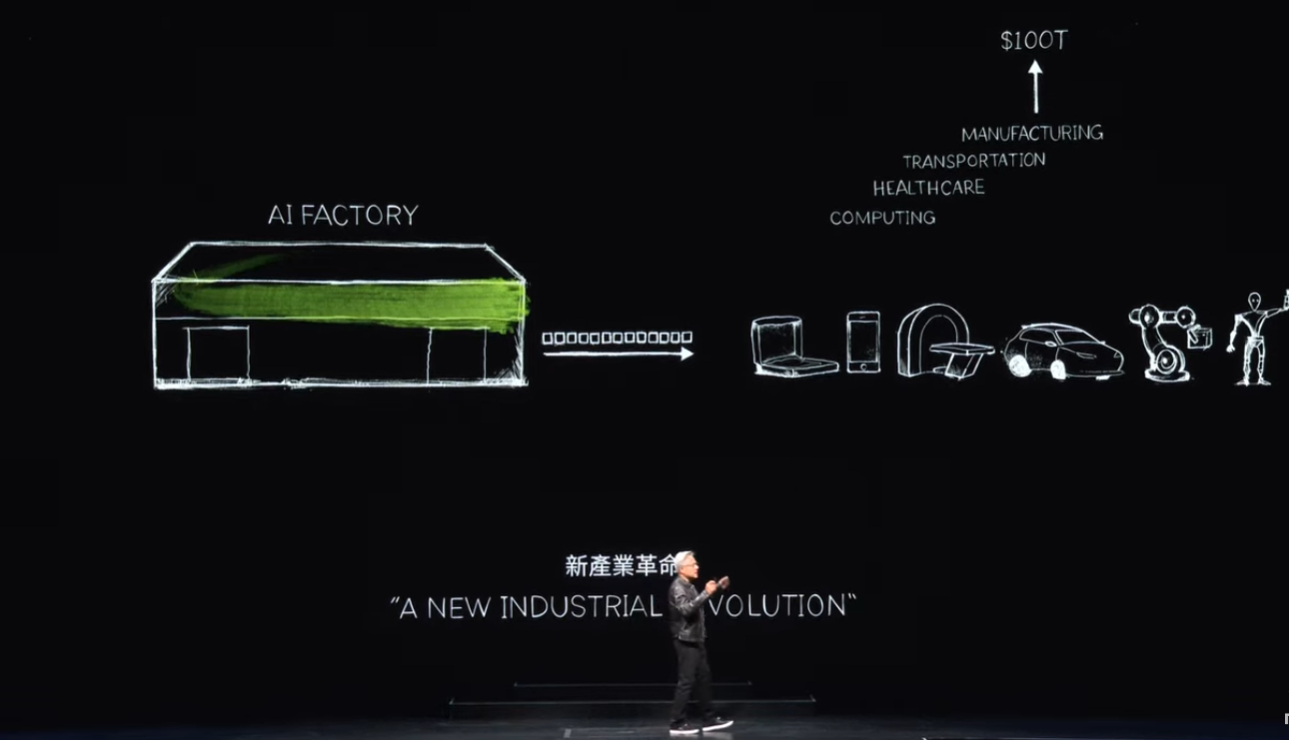

Oh, and like Nvidia's Computex 2023 keynote last year, this keynote is very much about selling Nvidia's AI hardware to data center buyers who are probably very frustrated with the price they're having to pay for Nvidia's hardware.

Nvidia effectively has a monopoly on this tech, so imagine the price that Microsoft and OpenAI have to pay Nvidia for the hardware needed to run ChatGPT and Copilot, then multiply that price in your head by five or even 10. This is Jensen's pitch to them to justify the price they are having to pay for these chips.

So what's the deal with NIMs?

In order to continue to lead on AI and sell its hardware to industries that haven't moved into AI yet, Nvidia is effectively handing them a free AOL CD so that they can log onto the internet for the first time and hopefully find it useful enough that they're willing to pay AOL $3 an hour for internet access at dial-up speeds.

Yes, I just dated myself with that reference, but NIMs (Nvidia Inference Microservices, prepackaged AI models that are ready to deploy) are Nvidia's way to demonstrate to various industries that there are effective use cases for Nvidia's AI hardware that they haven't considered.

Oh hell. Don't call them digital humans.

'Digital humans' reduces humanity to our particular usefulness.

Oh, I hate this. This is downright anti-human.

I get that Nvidia ACE has business applications, but God help me if I need to call my cable company to fix my internet and they give me an Nvidia ACE NIM with cat ears that still can't actually fix my problem. I'm excited to see what Nvidia ACE can do for gaming, but I am absolutely dreading companies deploying these damned things as just one more barrier between me and someone who can actually solve my problem.

The more you remove humans from the solution to a problem, the worse the solution is ultimately going to be. This is the brainchild of someone who only sees human workers as a cost to be reduced or ultimately eliminated, not as integral parts of the way the world and the economy should work.

I miss the Nvidia that just made graphics cards.

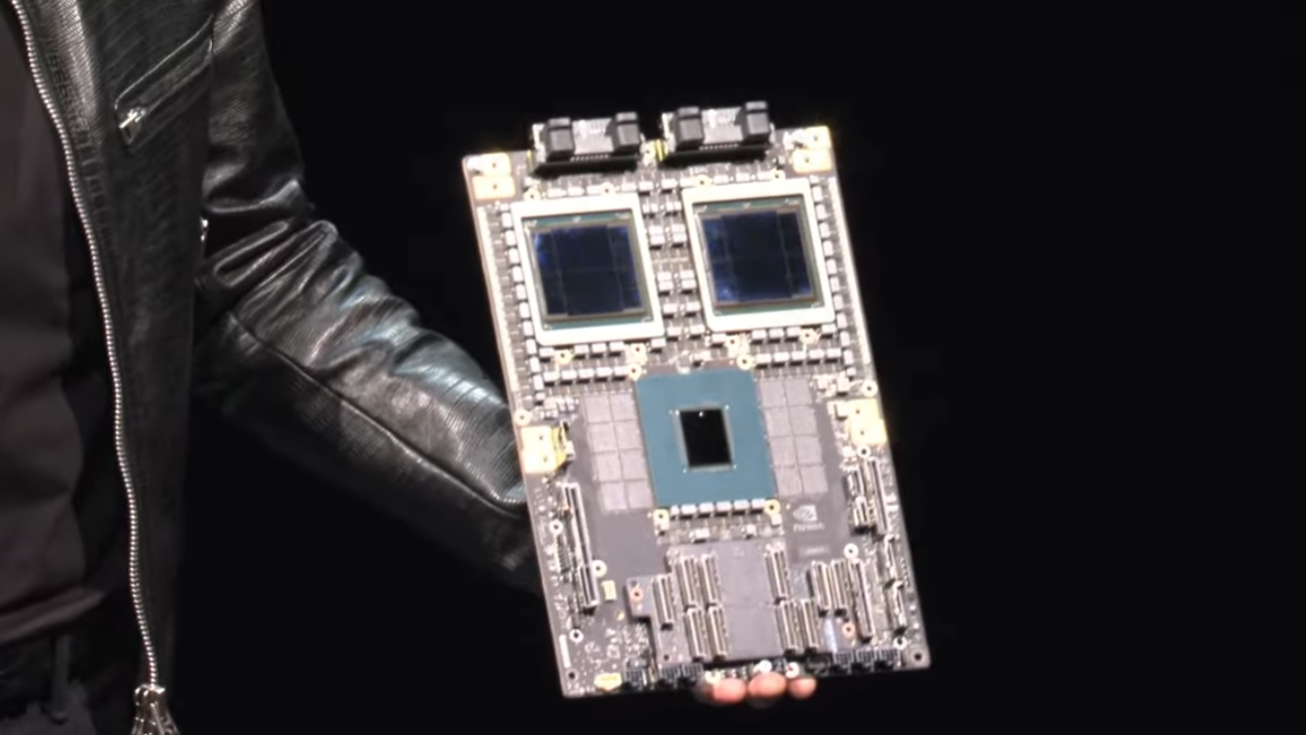

OK, Nvidia Blackwell. Two chips with a 10 terabit (terabyte? I missed that) interconnect. This is going to be an enormously powerful...chip? System? I'm not even sure what to call these things anymore. Yes, it is technically a processor, but it's also not a processor anymore.

OK, it was 10TB/s. That's a lot of data to move in a second.
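For a sense of scale, here's the back-of-the-envelope conversion in Python. The 10TB/s figure is from the keynote; the 1TB payload is an arbitrary example of my own, and I'm assuming decimal units (1TB = 10^12 bytes), which is how bandwidth is usually quoted.

```python
LINK_BYTES_PER_SEC = 10e12  # 10 TB/s chip-to-chip link, decimal terabytes
BITS_PER_BYTE = 8

# The same link expressed in terabits per second.
link_terabits_per_sec = LINK_BYTES_PER_SEC * BITS_PER_BYTE / 1e12
print(f"{link_terabits_per_sec:.0f} Tb/s")  # 80 Tb/s

payload_bytes = 1e12  # hypothetical 1TB of data to shuttle between the two dies
seconds = payload_bytes / LINK_BYTES_PER_SEC
print(f"{seconds * 1000:.0f} ms to move 1TB")  # 100 ms
```

Which is also why the terabit/terabyte mix-up matters: the two readings differ by a factor of eight.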

That's a big friggin' SoC.

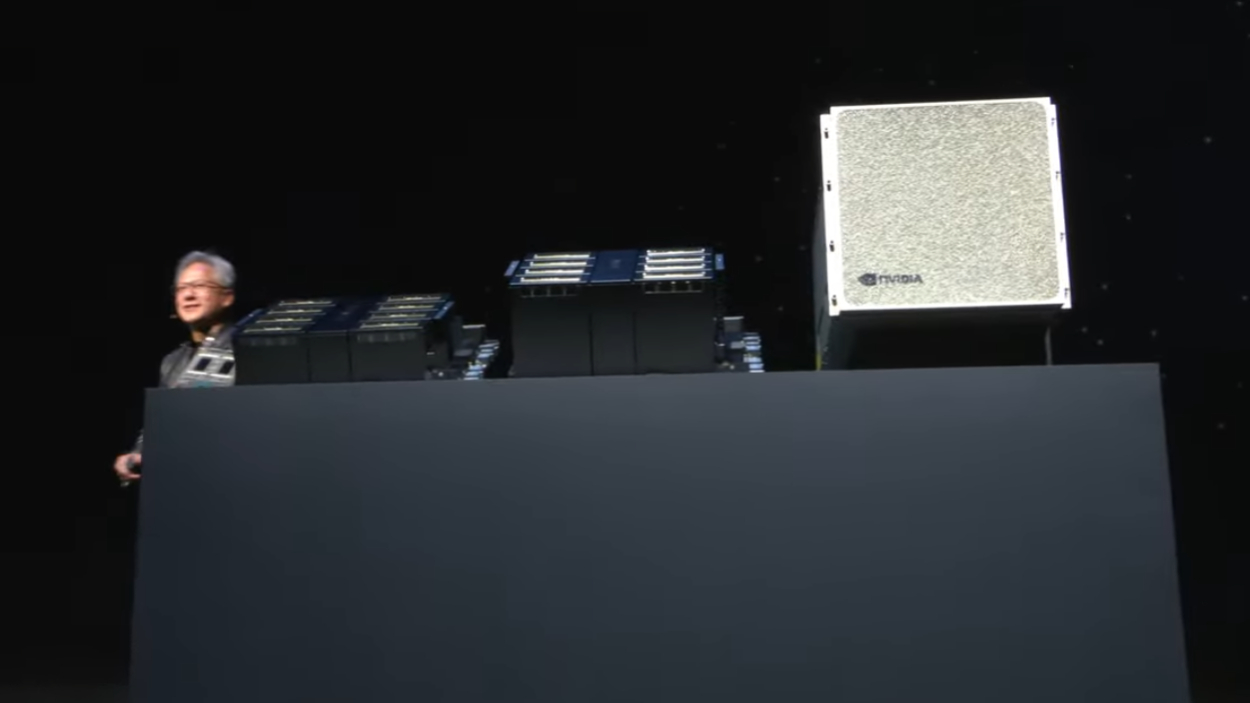

OK, so are we going to talk about the carbon emissions from all this? We're talking about 15,000 watts for that one little rack of eight Blackwell GPUs? We really are just going to bet the habitability of the only planet known in the universe to be conducive to human life on whether these AIs can, at some point in the future, solve the problem of climate change for us, aren't we?

Forget Artemis and going to the moon. Forget Mars. Forget space. We need to invest everything we have into developing nuclear fusion ASAP.

That DGX GPU behind Jensen is going to be discovered by survivors (not necessarily human, to be clear) at some point in Earth's future the way Aloy comes across Hades and those military war machines in Horizon Zero Dawn.

Unfortunately, my Master's degree was on the science-y side of Computer Science, not on the IT and IT management side, so I won't lie, I'm about as lost during this connectivity stuff as most of you are.

IT pros, however, this is your moment. Enjoy.

Millions of data center GPUs. OK, let's do some math.

That Blackwell GPU cluster had 8 GPUs in it, and required 15,000W. That's 1,875W per GPU.

1,000,000 x 1,875W = 1,875,000,000W.

That's 1.875 gigawatts of power. A typical nuclear reactor puts out about 1 gigawatt.
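Here's the same back-of-the-envelope math in Python, so you can plug in your own numbers. The 15,000W rack figure is the one quoted on stage; the one-million-GPU fleet, the 1GW reactor, and running flat out around the clock are my own simplifying assumptions, and the per-GPU figure includes each GPU's share of everything else in the rack.

```python
RACK_WATTS = 15_000      # figure quoted for one 8-GPU Blackwell rack
GPUS_PER_RACK = 8
FLEET_GPUS = 1_000_000   # "millions of GPUs", taking the low end
REACTOR_WATTS = 1e9      # rough output of a typical large nuclear reactor
HOURS_PER_YEAR = 24 * 365

watts_per_gpu = RACK_WATTS / GPUS_PER_RACK          # each GPU's share of the rack
fleet_watts = watts_per_gpu * FLEET_GPUS            # total sustained draw
reactors = fleet_watts / REACTOR_WATTS              # reactors needed to keep up
twh_per_year = fleet_watts * HOURS_PER_YEAR / 1e12  # energy if it never idles

print(f"{watts_per_gpu:,.0f} W per GPU")            # 1,875 W per GPU
print(f"{fleet_watts / 1e9:.3f} GW for the fleet")  # 1.875 GW for the fleet
print(f"{reactors:.1f} reactors' worth of output")  # 1.9 reactors' worth of output
print(f"{twh_per_year:.1f} TWh per year")           # 16.4 TWh per year
```

Everything scales linearly, so if 'millions' turns out to mean five million GPUs, multiply every line by five.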

If nothing else, we might be saved from climate catastrophe by the simple fact that our power grids can't handle this kind of sustained power demand. Well, maybe not saved from catastrophe, but at least it won't be anywhere near as bad as it could be if everything Jensen is saying comes to pass.

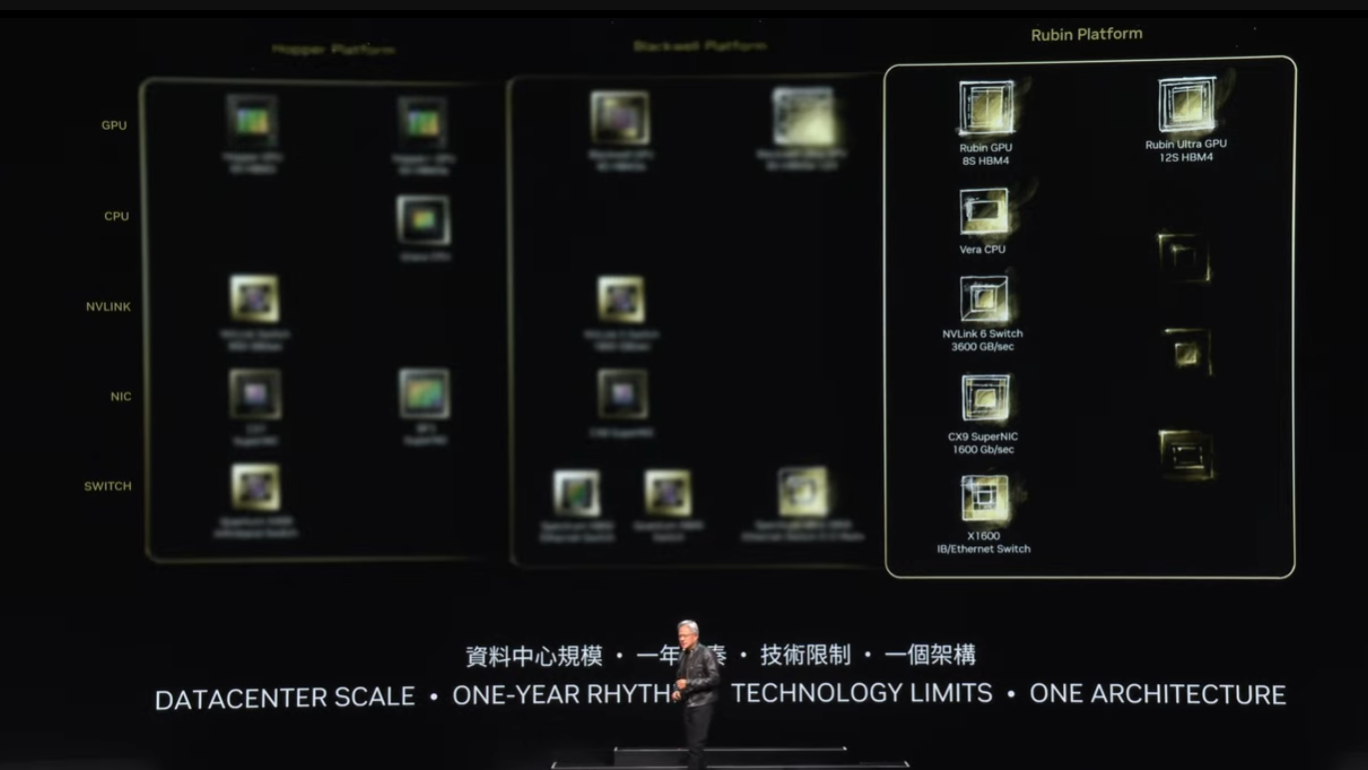

Nvidia Rubin is the next generation of GPU architecture after Blackwell. There's not much to go on here, so it's not clear if Rubin will also power the Nvidia 6000-series graphics cards, but honestly, at this point, I don't think Nvidia will be doing GeForce graphics cards in three or four years' time.

I'm an outlier on that, and plenty of people call me nuts when I say that, but I just can't see why Nvidia would spend any more effort on producing gaming GPU silicon when silicon is scarce and the profit to be made off AI data center silicon is so much higher.

Do y'all remember that time at Tesla's AI Day when Elon Musk introduced Tesla's humanoid 'robot' and it was just somebody in a robot suit dancing? That was absolutely hilarious. I don't remember the name of that robot, but it doesn't matter; it's never going to be built.

Nvidia's robots, though, are the ones that are going to run everything in the future. Nvidia is smart because it knows that a humanoid robot doesn't make any sense outside of science fiction or research. Boston Dynamics' Atlas and Atlas II are interesting exercises, but put a robot on wheels and give it a gimballed arm or two and a CPU for navigation and that's all you need to run an entire manufacturing industry.

For all the issues I'm having with this keynote, it's not because Jensen is full of it. Anything but. Everything we're seeing is the future, and Nvidia will be at the center of it, for better or worse.

Self-driving cars will never happen.

Humanoid robots, self-driving cars, and the traveling salesman problem all suffer from the same problem, namely scale. All those wheeled robots on the stage right now only have to factor in a small selection of variables in order to operate.

Humanoid robots have many thousands of variables they need to keep track of in real time just to maintain balance. Likewise, self-driving cars have to track thousands of data points, if not more, in real-time in order to make decisions, and these are very difficult problems for a classical computer system to manage.

A GPU with parallel processing can manage these problems better, but the problem with self-driving cars and the like is that, unlike the pixels in a rendered frame, many of these variables are interdependent. You aren't just keeping track of the values of thousands of variables; you have to calculate how changing one variable will affect the others, and that's the same intractability that makes the traveling salesman problem so hard to solve with a computer.
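A toy Python illustration of that distinction, with a made-up coupling rule of my own: independent variables, like pixels, each get evaluated once, so the work grows linearly. Once every choice changes the cost of the others, the naive search has to score every joint combination, and with just two options per variable that's 2ⁿ combinations. This isn't how real self-driving software works; it only shows how coupling changes the shape of the problem.

```python
import itertools

def penalty(i, a, j, b):
    """Hypothetical coupling: adjacent choices that pick the same value cost 1."""
    return 1 if abs(i - j) == 1 and a == b else 0

def coupled_best(n):
    """Score every joint setting of n interdependent binary choices.

    Because each choice changes the cost of its neighbours, no choice can be
    settled on its own; this brute-force search visits 2**n combinations.
    """
    best_cost, best_combo, evaluated = None, None, 0
    for combo in itertools.product((0, 1), repeat=n):
        evaluated += 1
        cost = sum(
            penalty(i, combo[i], j, combo[j])
            for i in range(n)
            for j in range(i + 1, n)
        )
        if best_cost is None or cost < best_cost:
            best_cost, best_combo = cost, combo
    return best_cost, best_combo, evaluated

for n in (4, 8, 12):
    cost, combo, evaluated = coupled_best(n)
    # Independent variables would need only n evaluations (one per pixel, say).
    print(f"n={n}: {evaluated} joint evaluations vs {n} independent ones")
# n=4: 16 joint evaluations vs 4 independent ones
# n=8: 256 joint evaluations vs 8 independent ones
# n=12: 4096 joint evaluations vs 12 independent ones
```

To be fair, this particular chain-shaped penalty could be solved far faster with dynamic programming; the brute force is there to show how quickly the naive search space grows once variables stop being independent.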

The best we'll ever get is if a global government imposes a prohibition on human drivers entirely, and every car on the road is a 'self-driving' car. In that world, you're only dealing with a localized networking problem with the cars on the road in your vicinity. That is doable.

OK, well, I can see why this wasn't an official Computex keynote. If you were hoping for consumer product news, you got a 10- to 15-second mention of a few RTX laptops that will have enhanced AI features, and how quickly Jensen moved past all that to get back to the data center stuff really tells you all you need to know about where Nvidia is right now. It is no longer a graphics company, as Jensen reportedly told employees several months back, and every Nvidia keynote and livestream I've watched in the last year and a half just reinforces that fact.

Nvidia is absolutely, 100% going to become an AI chip company, and whatever gaming appendage sticks around for a few years will become less and less of a focus for the company.

There's nothing particularly wrong with that, to be honest. Nvidia is printing money hand over fist selling AI hardware to OpenAI, Google, and all the rest, so from a business perspective, it makes perfect sense. It'd be the height of madness not to position yourself at the center of an industry that's giving you 10x better returns than what you were doing before.

Even if AMD and Intel eventually produce AI data center hardware that offers genuine competition to whatever Nvidia is making down the road, Nvidia's AI revenue is still going to dwarf whatever money its GeForce products bring in. GeForce might continue for some time, especially on mobile devices where RTX chips can be a way to interface with Nvidia's broader AI ecosystem, but I think in the end, the consumer graphics market is going to come down to AMD and Intel. I just don't see Nvidia's heart being in the consumer graphics game any longer.

There is still something sad about Jensen picking up a GeForce RTX Super graphics card — likely an RTX 4080 Super, given the size of it in his hand — and saying (and I'm paraphrasing here) that 'we think of this as a GPU, but we all know that this' — carrying the graphics card over to the DGX data center rack and pointing at the giant black obelisk on stage — 'is the real GPU.'

Except it's not. These data center products get called GPUs because of legacy terminology for the architecture Nvidia developed to produce computer-generated frames of 3D graphics for gaming, movies, and more. These data center chips can likely do that too, and the graphics card in his hand and the tower of processors encased in metal behind him might share a lot of underlying circuitry, but they are radically different things.

If it helps Nvidia sleep better at night to call what it's making now GPUs, they're the ones with the $2 trillion valuation, so they can do as they like. But there's a part of me, the life-long PC gamer part of me, that feels like Nvidia has decided that the PC gamers that initially propelled the company to success two decades ago don't really matter anymore.

Maybe we don't, but it still sucks nonetheless, and I know I'm not alone in feeling like I'm being left behind without so much as a 'thanks for everything,' or even just a goodbye.