Nvidia GTC 2024 — all the updates as it happened

Nvidia GTC 2024 is over - here's everything we saw and learned

Nvidia GTC 2024 is over, after a bumper few days and a whole heap of AI news.

The show's opening day saw Nvidia CEO Jensen Huang take to the stage for a two-hour keynote that covered everything from the future of AI to incredibly powerful new hardware, and even dancing robots.

Since then, we've toured Nvidia's space-age HQ and got a behind-the-scenes look at some of the newest innovations coming out soon - and if you missed any of the news from the show, you can read through our live blog below!

- "People think we make GPUs, but GPUs don't look the way they used to" — Nvidia CEO Jensen Huang sees Blackwell as the power behind the new AI age

- "The world's most powerful chip" — Nvidia says its new Blackwell is set to power the next generation of AI

- Nvidia has virtually recreated the entire planet — and now it wants to use its digital twin to crack weather forecasting for good

- Nvidia's Project GROOT brings the human-robot future a significant step closer

- Nvidia is taking the Apple Vision Pro to the Omniverse

Good morning from San Jose, where we're all set for day one of Nvidia GTC.

Today sees the conference kick off with a keynote from Nvidia CEO and leather jacket enthusiast Jensen Huang, and we'll be intrigued to see just what the company has on offer.

It's a fresh and sunny day here in San Jose - we've just been to the convention center to pick up our badge (and multiple drinks tokens for some reason) and it's already packed with people - should be a good day!

Ironically, it's quite a quiet start to the day, with Nvidia CEO Jensen Huang set to take to the stage for his keynote at 1pm local time.

If you'd like a video accompaniment to my live updates, you can sign up to watch here.

Well, we're in and seated at the keynote...45 minutes before the start, and it's completely heaving! This is the hottest ticket here in San Jose and the tech world as a whole...

This also means the Wi-Fi is very sketchy at best - so bear with us while we get settled.

We're nearly there! Half an hour to go...

The DJ is currently playing "Under Pressure" by David Bowie & Queen...hopefully not a taste of things to come.

In case you were wondering, the tagline for Nvidia GTC 2024 is "The Conference for the Era of AI"...

With just minutes to go, we're being treated to an incredible display from LLM-themed artist Refik Anadol, who has taken over the entire wall with an amazing visual piece.

The lights go down, and it's time for the keynote.

An introductory video runs us through some of the possibilities AI can bring, from weather forecasting to healthcare, and shows some of the amazing growth Nvidia has had in recent months.

And with that, it's time for the main man himself - Nvidia founder and CEO Jensen Huang.

Breathe a sigh of relief everyone - he is indeed wearing his now iconic leather jacket!

"At no conference in the world is there such a diverse collection of researchers," he notes - there's a healthy mix of life sciences, healthcare, retail and logistics companies, and so much more. Companies worth a combined $100 trillion are here at GTC, he says.

"There's definitely something going on," he says, "the computer is the most fundamental part of society today."

It's been a rollercoaster few years, Huang reminds us - and there's a long way to go.

"It is a brand new category," he notes, "it's unlike anything we've ever done before."

"A new industry has emerged."

We're now getting an insight into "the soul" of Nvidia - the Omniverse.

Everything we're going to see today is generated and simulated by Nvidia's own systems, he notes.

This kicks off with a video showing off designs from Adobe, coding from Isaac, PhysX, and animation from Warp - all running on Nvidia.

It ends with a shot of Earth-2, a new climate mapping and weather tracking model, before Huang re-enters.

"We've reached a tipping point," he says, "We need a new way of computing...accelerated computing is a massive speeding up."

Simulations can help drive up the scale of computing, he notes, with digital twins allowing for much more flexibility and efficiency.

"But to do that, we need to accelerate an entire industry," Huang notes.

Huang gives a shout-out to some partners and customers, including Ansys, Synopsys and Cadence.

All of these partners, and many others, are demanding much more power and efficiency, he notes - so what can Nvidia do?

Bigger GPUs! That's the answer, as Nvidia has been putting GPUs together for some time.

Following Selene and Eos, the company is now working on the next step - "we have a long way to go, we need larger models," Huang notes.

"I'd like to introduce you to a very big GPU," he teases...

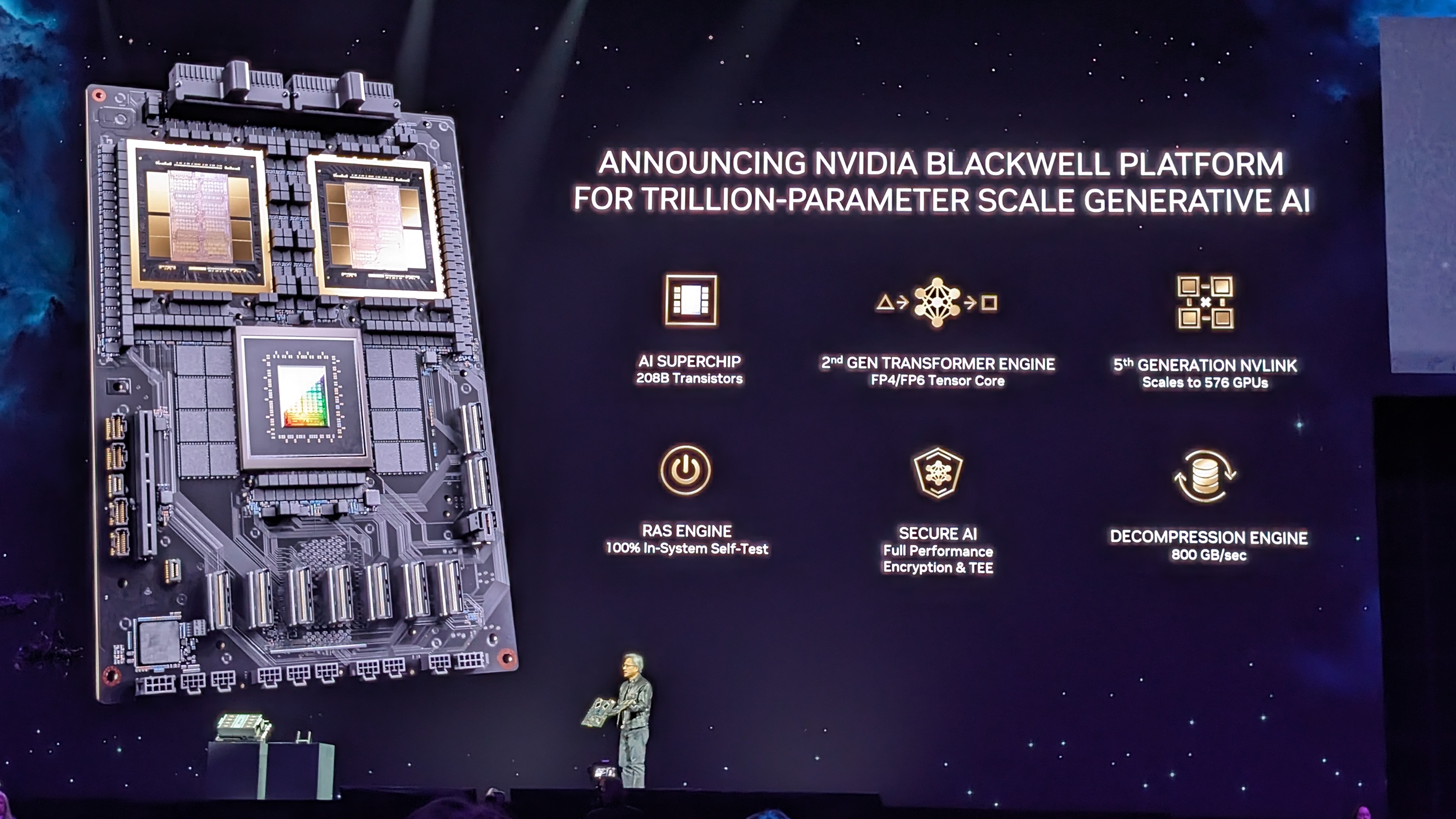

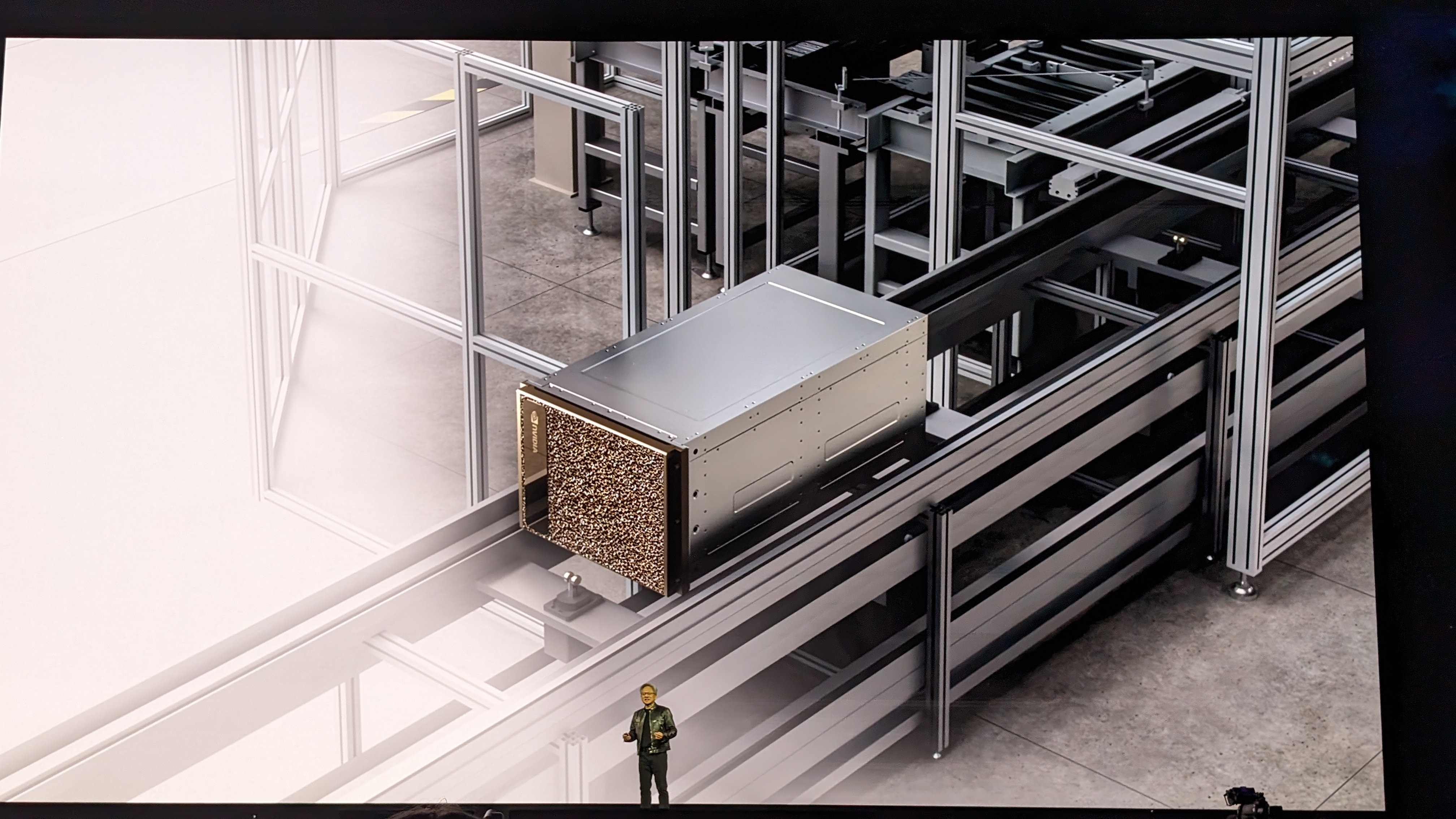

Say hello to Blackwell - "the engine of the new industrial revolution".

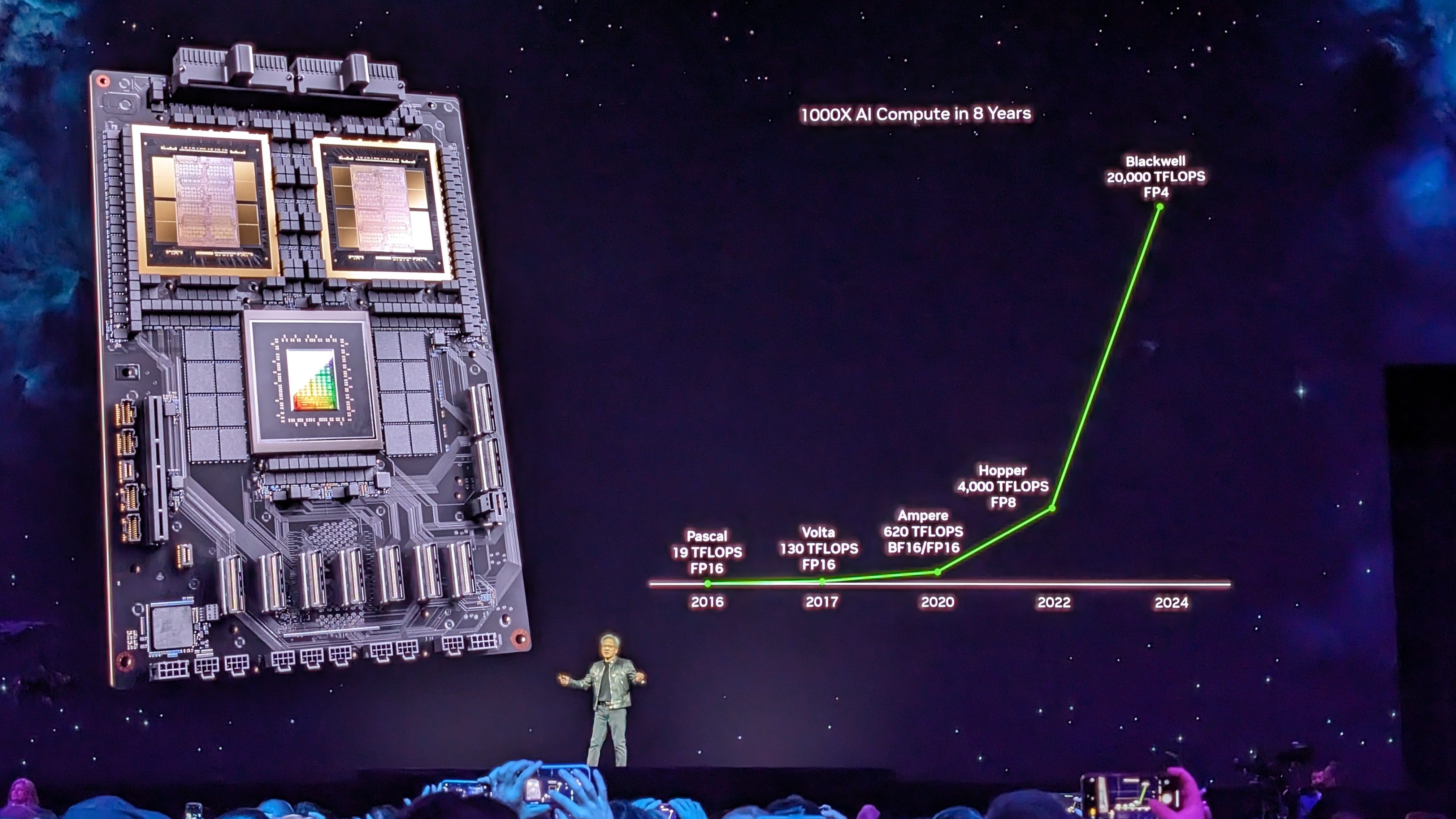

Twice the size of Hopper, the "AI superchip" packs 208 billion transistors, joining two dies together so that they behave as if they were one chip. "It's just one giant chip."

"People think we make GPUs, but GPUs don't look the way they used to," Huang notes.

Installing Blackwell is as simple as sliding out existing Hopper units, with new platforms scaling from a single board up to a full data center.

Huang talks about Blackwell like a proud parent - it's clear this is a huge upgrade for Nvidia, with some frankly staggering numbers behind it.

But wait - there's more!

Here's the future, people.

Blackwell also offers secure AI, with a 100% in-system self-testing RAS (reliability, availability and serviceability) service and full-performance encryption, so data is secure not just in transit, but also at rest and while it is being computed.

Secure AI - that's a major step forward all round.

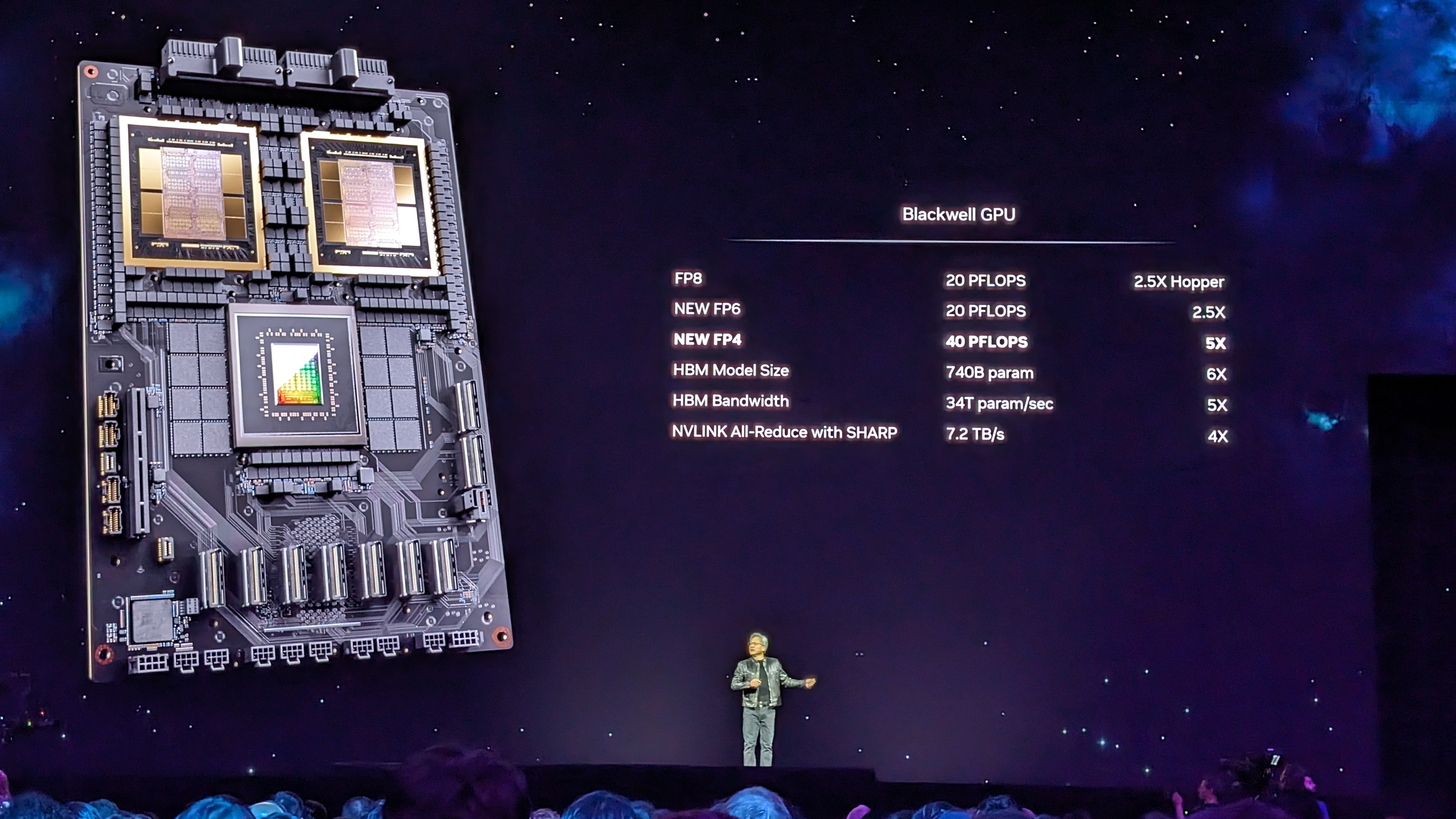

Some more frankly ridiculous numbers concerning Blackwell.

There's also a new NVLink Switch Chip, which lets all GPUs talk to each other at the same time.

It can be housed in the new DGX GB200 NVL72, which is essentially one giant GPU again, with some ridiculous numbers to match: 720 petaflops of training performance and 1.44 exaflops of inference. This is for seriously heavy lifting, with the ability to process 130TB/s, which Huang says is more than the bandwidth of the entire internet.

These are the systems that will train massive GPT-esque models, Huang notes, with thousands of GPUs coming together for a huge collection of computing - but at a fairly low energy cost.

When it comes to inference (that is, running models such as LLMs to generate output), though, Huang says Blackwell-powered units can again bring down computing costs and energy demands.

Blackwell is designed for trillion-parameter generative AI models, so unsurprisingly, it trounces Hopper in inference - with up to 30x greater output.

"Blackwell is going to be just an amazing system for generative AI," Huang notes, adding that he believes future data centers will primarily be factories for generative AI.

"The excitement for Blackwell is genuinely off the charts."

AWS, Google Cloud, Microsoft Azure and Oracle Cloud have already signed up for Blackwell, but so have a whole host of other companies, from AI experts to computing OEMs and telco powerhouses.

"Blackwell will be the most successful product launch for us in our history," Huang declares.

After all of those math- and tech-heavy announcements, it's time for something different, as we enter hour two of this keynote.

It's time for some digital twins. Nvidia has already created a digital twin of everything in its business, Huang notes, built using Omniverse APIs to help ensure successful factory rollouts and more.

So what else can generative AI do for us? It's time to hear about some of the ways the technology can benefit us today.

First up - weather forecasting. Extreme weather costs countries around the world billions, and Nvidia has today launched Earth-2, a set of APIs that can be used to build more accurate, higher-resolution models, giving better forecasts that can save lives.

Nvidia has already partnered with The Weather Company for better predictions and forecasts.

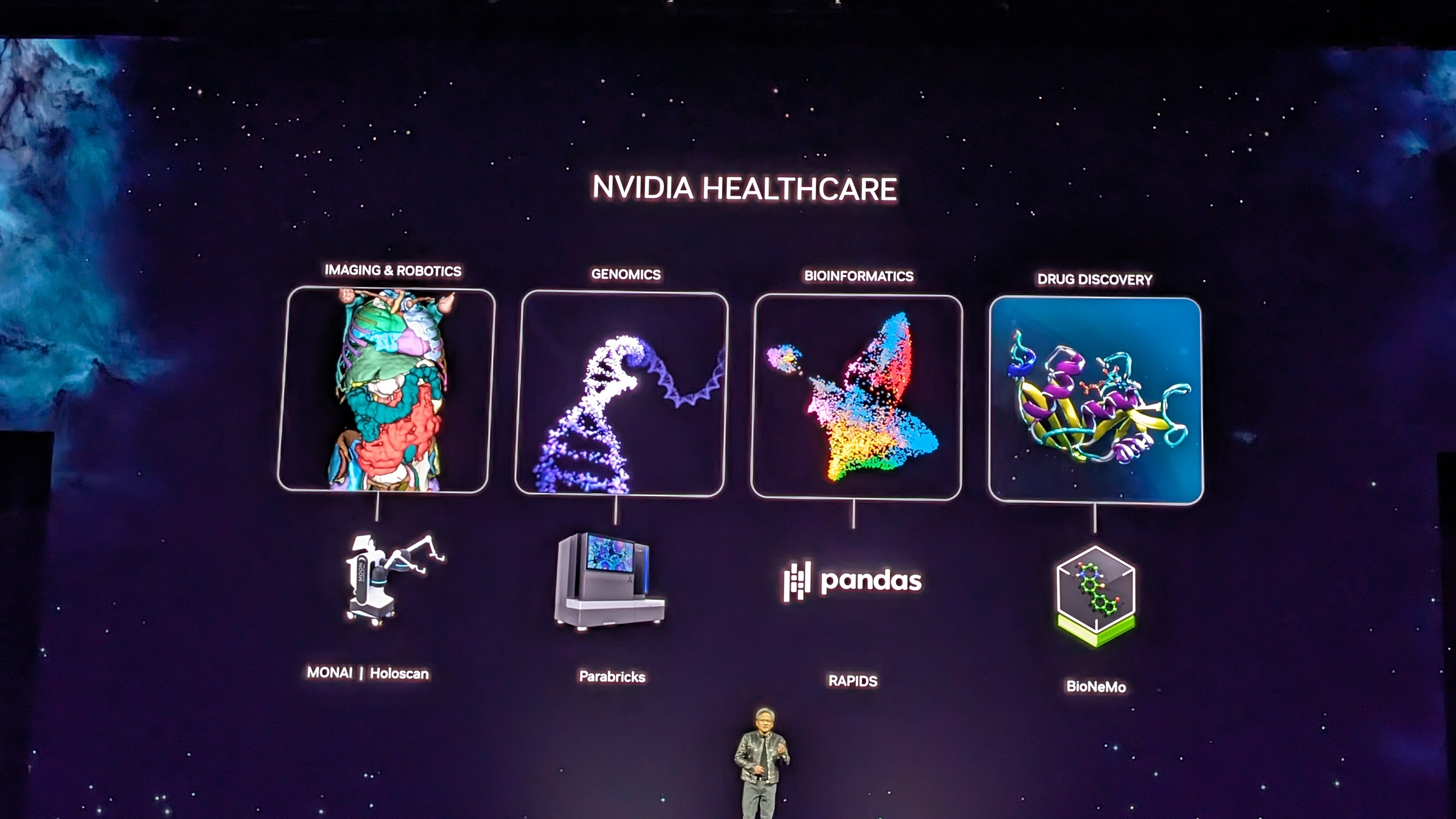

Now, on to healthcare. Huang highlights the vast amounts of work the company has already done, from imaging to genomics and drug discovery.

Today, Nvidia is going a step further, building models for researchers around the world, taking on a lot of the background heavy lifting and making drug discovery faster than ever.

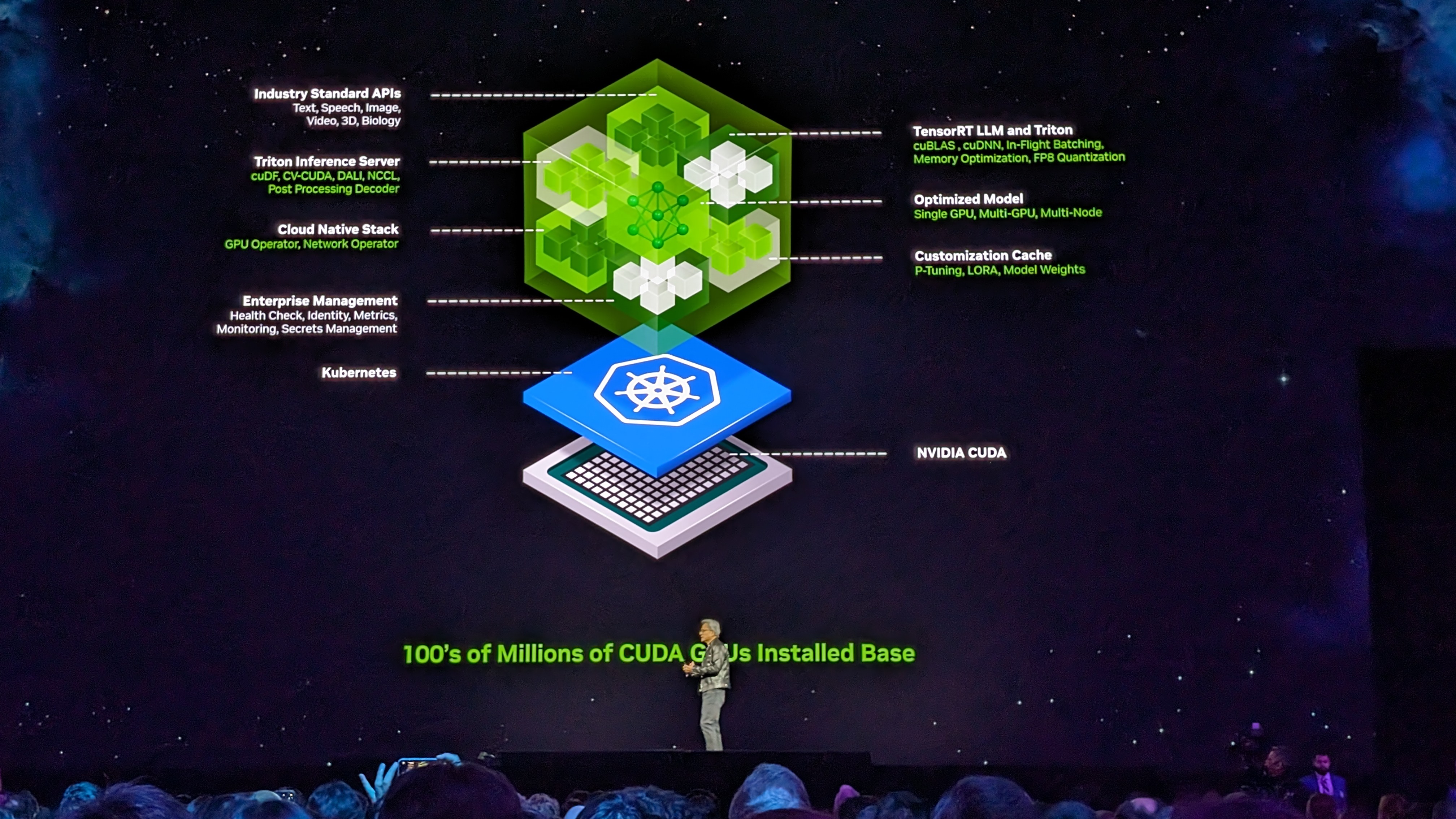

To make AI software easier to deploy and run, Nvidia has a new service - NIM.

Nvidia Inference Microservice, or NIM, brings together models and dependencies in one neat package - optimized depending on your stack, and connected with APIs that are simple to use.

NIMs can be downloaded and used anywhere, via the new ai.nvidia.com hub - a single location for all your AI software needs.

"This is how we're going to write software in the future," Huang notes - by assembling a bunch of AIs.

Huang runs us through how Nvidia is using one such NIM to create an internal chatbot designed to solve common problems encountered when building chips.

Although he admits there were some hiccups, it has greatly improved knowledge and capabilities across the board.

"The enterprise IT industry is sitting on a goldmine...they're sitting on a lot of data," Huang notes, saying these companies could turn it into chatbots.

Nvidia is going to work with SAP, Cohesity, Snowflake and ServiceNow to simplify the building of such chatbots, so it's clear this is only the beginning.

Now, we move on to robotics, or as Huang calls it, "physical AI".

Robotics goes along with AI and Omniverse/digital twin work as a key pillar for Nvidia, all working together to get the most out of the company's systems, he says.

The "ChatGPT moment" for robotics could be just around the corner, Huang says, and Nvidia wants to be up to speed and ready to roll when it comes.

"We need a simulation engine that represents the world digitally for a robot," he says - that's the Omniverse.

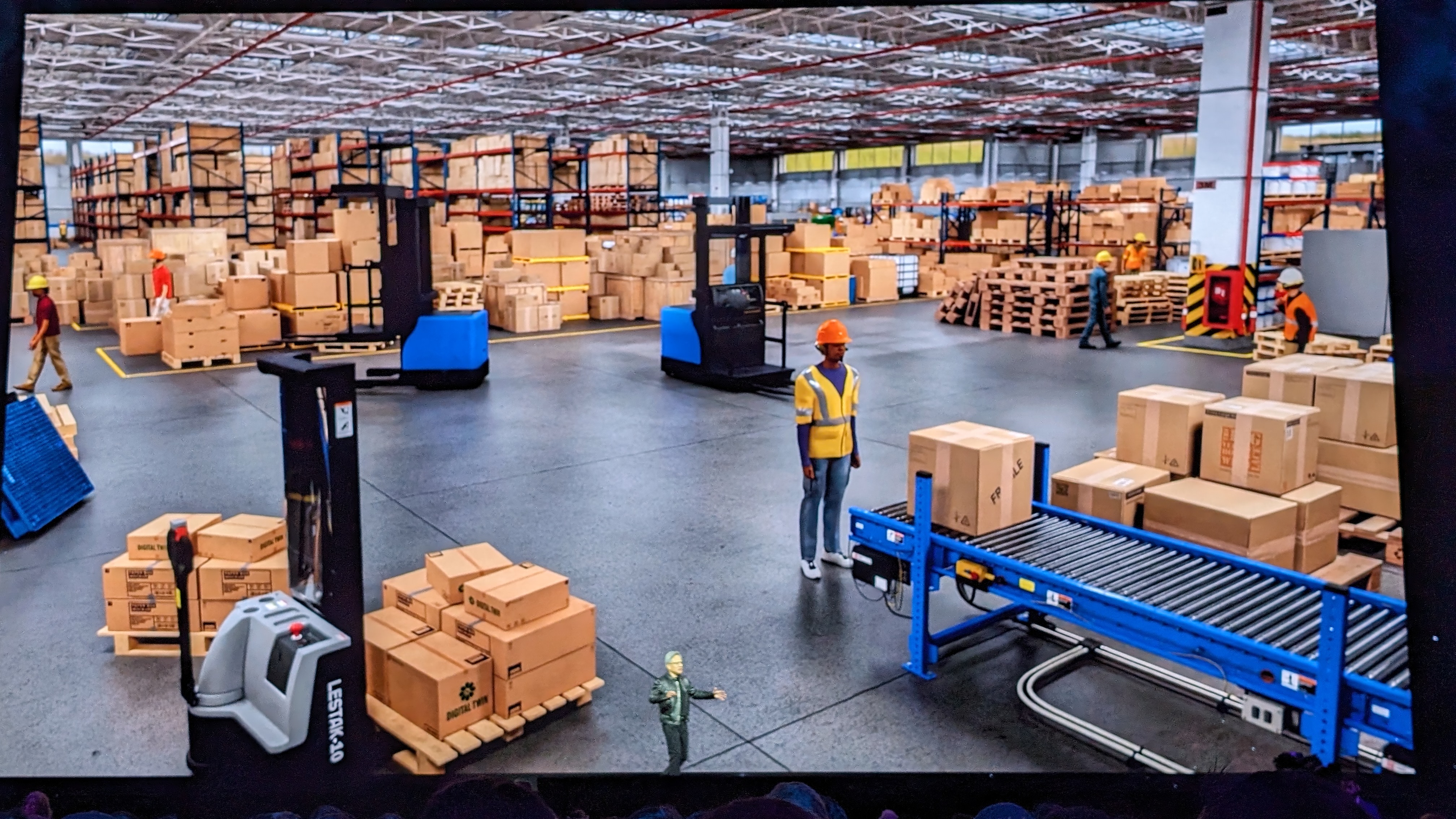

Huang introduces a demo of a virtual warehouse that blends together robotics and "human" systems, showing how the technology could work to boost productivity and efficiency.

It's a slightly disturbing, but comprehensive look at how industries of the future could work - Nvidia has already signed up industrial giant Siemens to implement it.

Product design and customization is another potentially huge market for AI - and now we're seeing how Nissan works with Nvidia to offer the ultimate personalization options when choosing a new car.

It's also being used in marketing and advertising campaigns - and there's even a little hint of the Apple Vision Pro...

Huang announces that Omniverse Cloud will now stream to the Apple Vision Pro!

He says he's used it himself, and it works, even if it's slightly embarrassing.

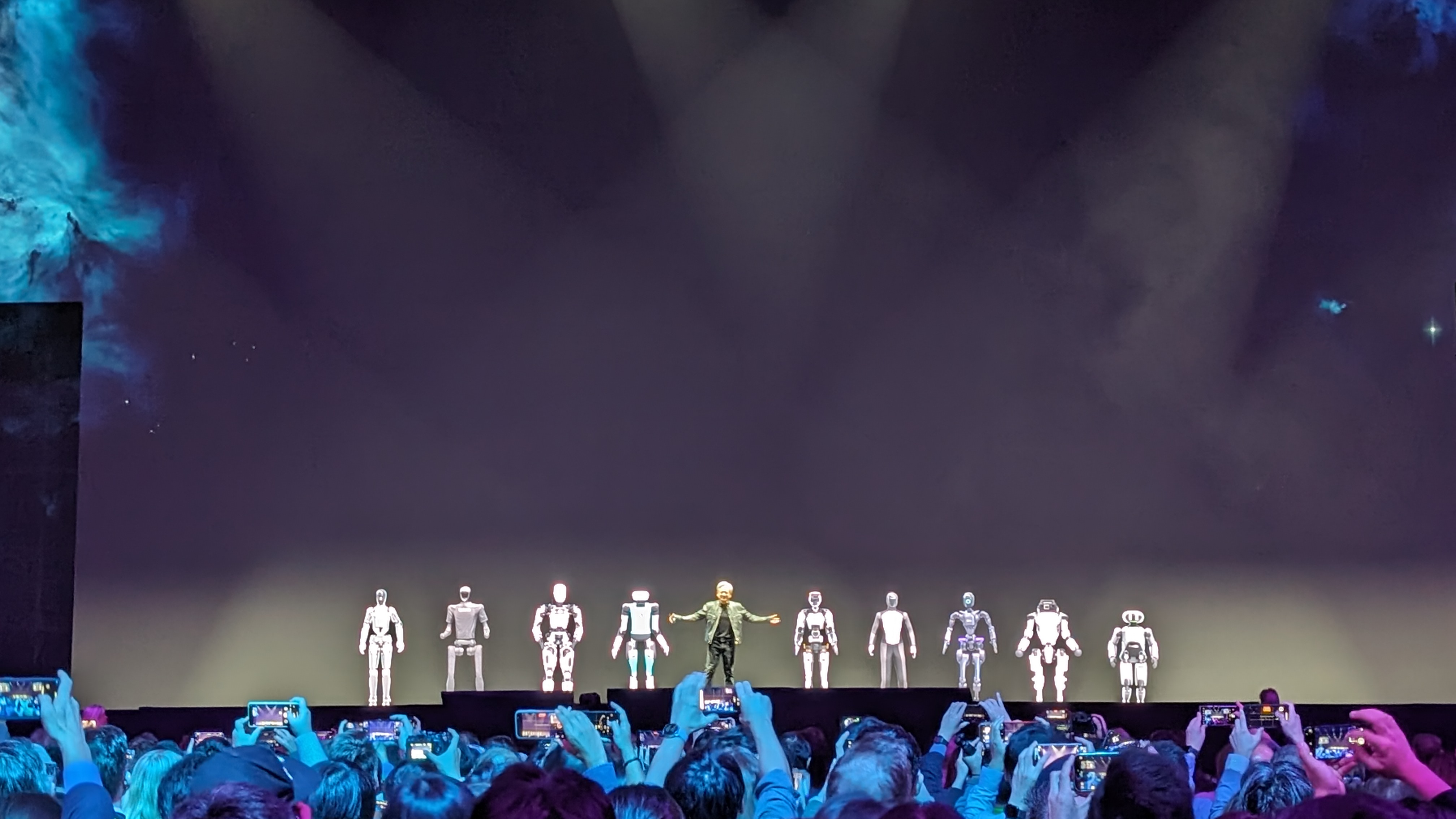

Back to robotics - and Nvidia has over 1,000 robotics developers, Huang notes.

The AI future has often conjured up visions of Terminator-esque robots wiping out humanity, but the reality is often a little more mundane.

There's a new SDK, Isaac Perceptor, aimed at robotic arms (known as "manipulators") and vehicles, giving such machines far more insight and intelligence.

Nvidia is looking to advance the development of humanoid robots with the release of Project GROOT, another new collection of APIs.

Huang says the model will learn from watching human examples, but also learn in a "library" that will help it learn about the real world - not creepy at all...

We're then treated to a video that shows off these humanoid robots in development, across factories, healthcare and science - and in operation in the real world, so the future may not be too far off after all.

For his big finish, Huang is joined by a whole host of Project GROOT (Generalist Robot 00 Technology) robots, and then by two famous friends from Star Wars as well!

"It's a new industrial revolution," Huang notes, powered by Blackwell and working alongside NIMs, all operating in conjunction with the Omniverse.

And that's a wrap! A whole lot to experience and digest - but some huge announcements when it comes to Blackwell, the future of AI and much more - we'll be publishing our thoughts and write-ups shortly, so stay tuned to TechRadar Pro for more soon!

Day two of Nvidia GTC 2024 is here! After a mega first day, TechRadar Pro is set for another load of news and announcements today.

We're also attending a media roundtable with Jensen Huang later on, so stay tuned for all of his insight.

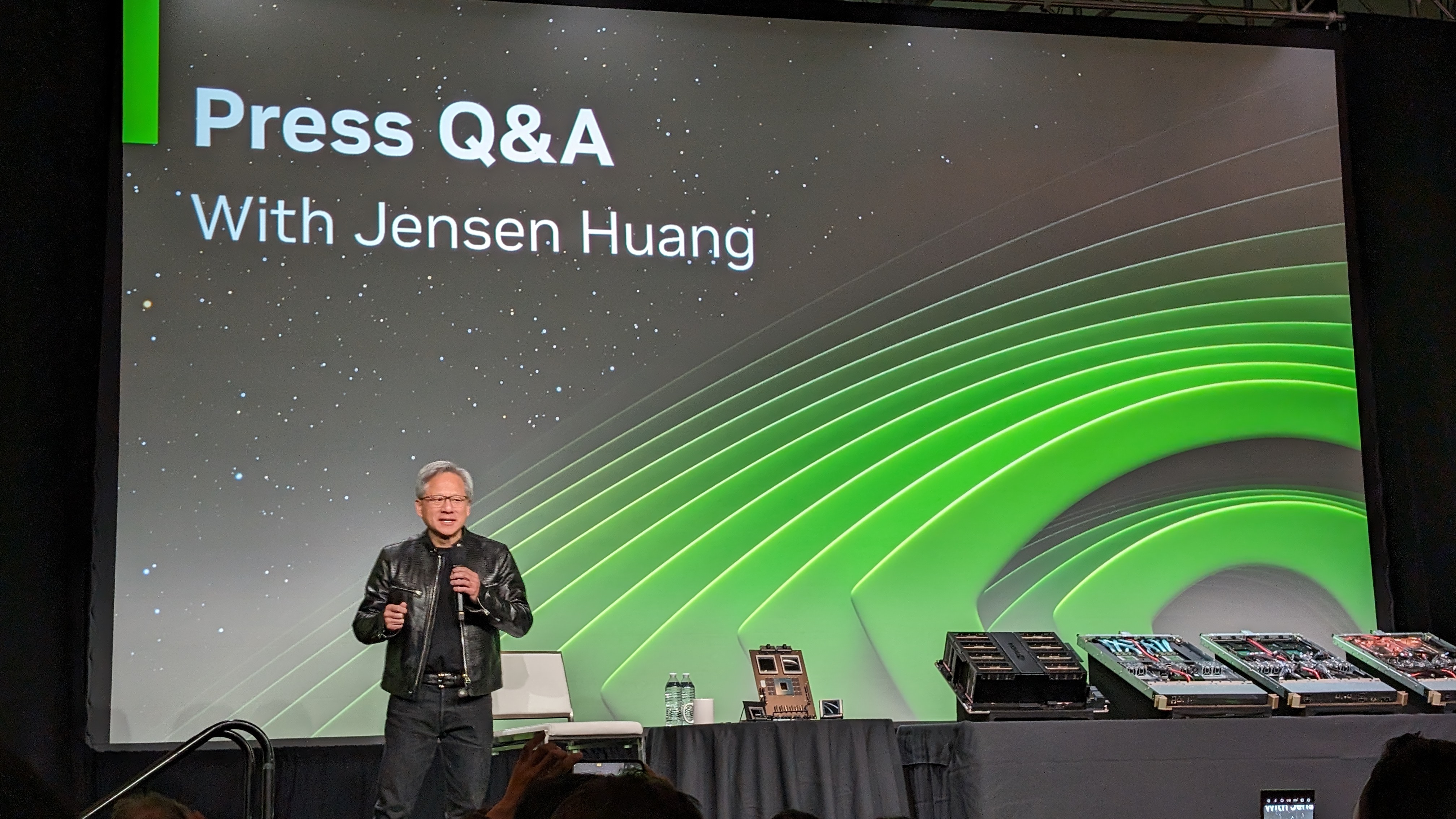

We're here for the press Q&A with Nvidia CEO Jensen Huang - unsurprisingly, it's a packed room, with media from all around the world keen to hear more after yesterday's bumper keynote.

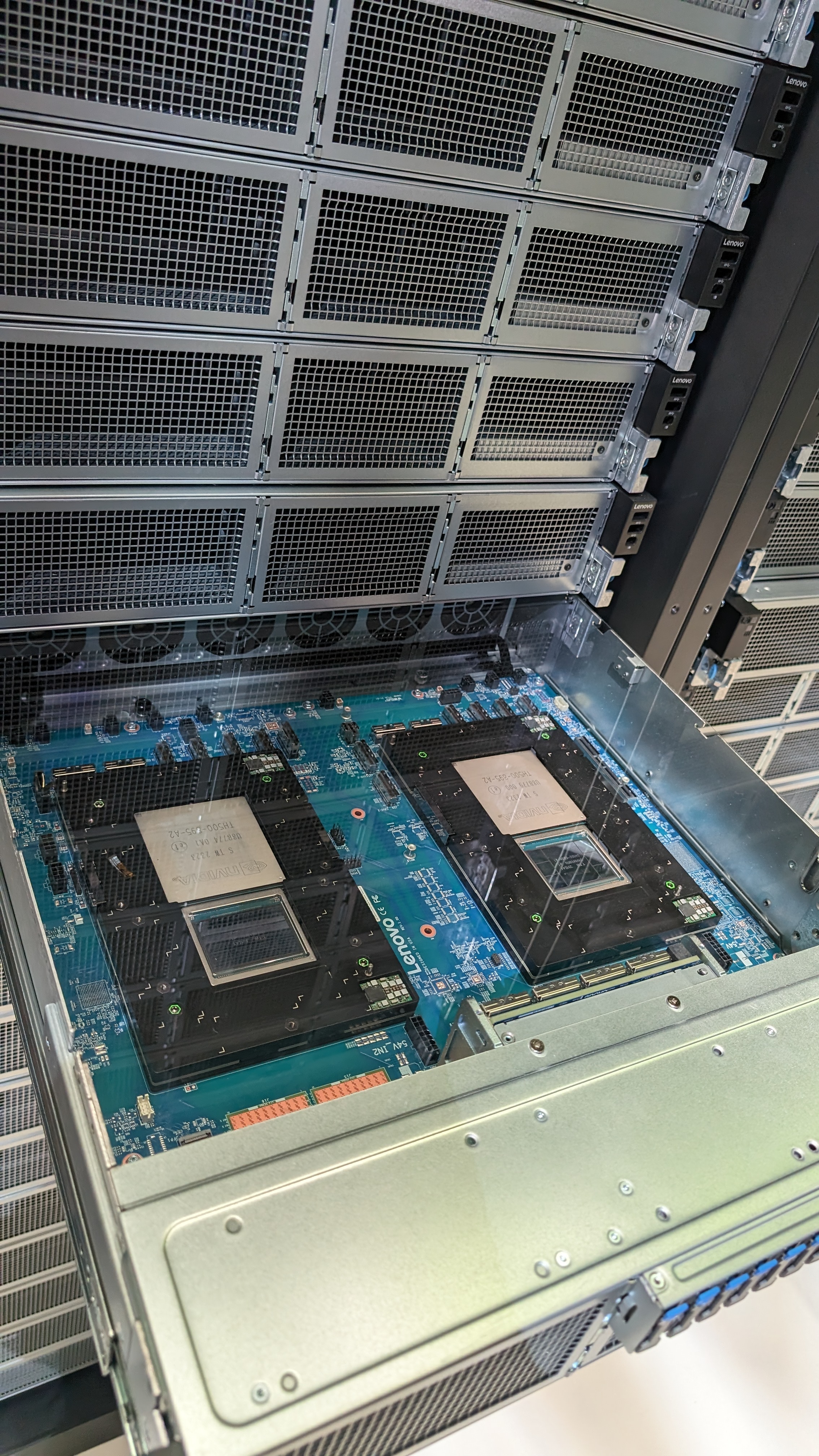

The stage is already populated with some of the Blackwell-powered hardware announced yesterday, from single racks all the way up to data center-ready units.

We'll be starting soon - wonder if Huang will have a different leather jacket on today?

A closer look at the new chips (and so much more)...

Here he is!

The computing industry is going through two main transformations, he notes - what computing can do, and how it is being built.

"It's a new industry - that's why we say there's a new industrial revolution," he notes, with data taking the place of water from the first industrial revolution.

With these changes, a whole new form of software is going to be needed, Huang adds, as it will need to learn in whole new ways.

"We created a whole new generation of compute," he says - Blackwell.

This is the first time people aren't seeing AI as just inference, Huang notes, as it is now designed to generate!

"This is the first time in the data center space that people are thinking of our GPUs in this way," he says - something that gaming fans are familiar with, as the company's GPUs have been popular the world over for some time.

"In the future, almost all of our computing will be generated," Huang says, with experiences of all kinds set to be generative. This will need a whole new set of hardware - once again, Blackwell steps up.

Huang moves on to NIMs - Nvidia's pitch to conquer the AI software world.

He notes that the launch aims to make creating applications much simpler and more straightforward, packaging up all the software you need in one handy download.

These can also be connected together, used off the shelf, and even customized via its AI Foundry.

"We can help almost any company build their custom AI," he declares.

New tools will also be needed, such as the Omniverse, along with new AI foundation models for robotics and digital twins, all helping companies move forward with their AI journey.

"I'm very happy with the success Omniverse is having in connecting to these tools," he declares.

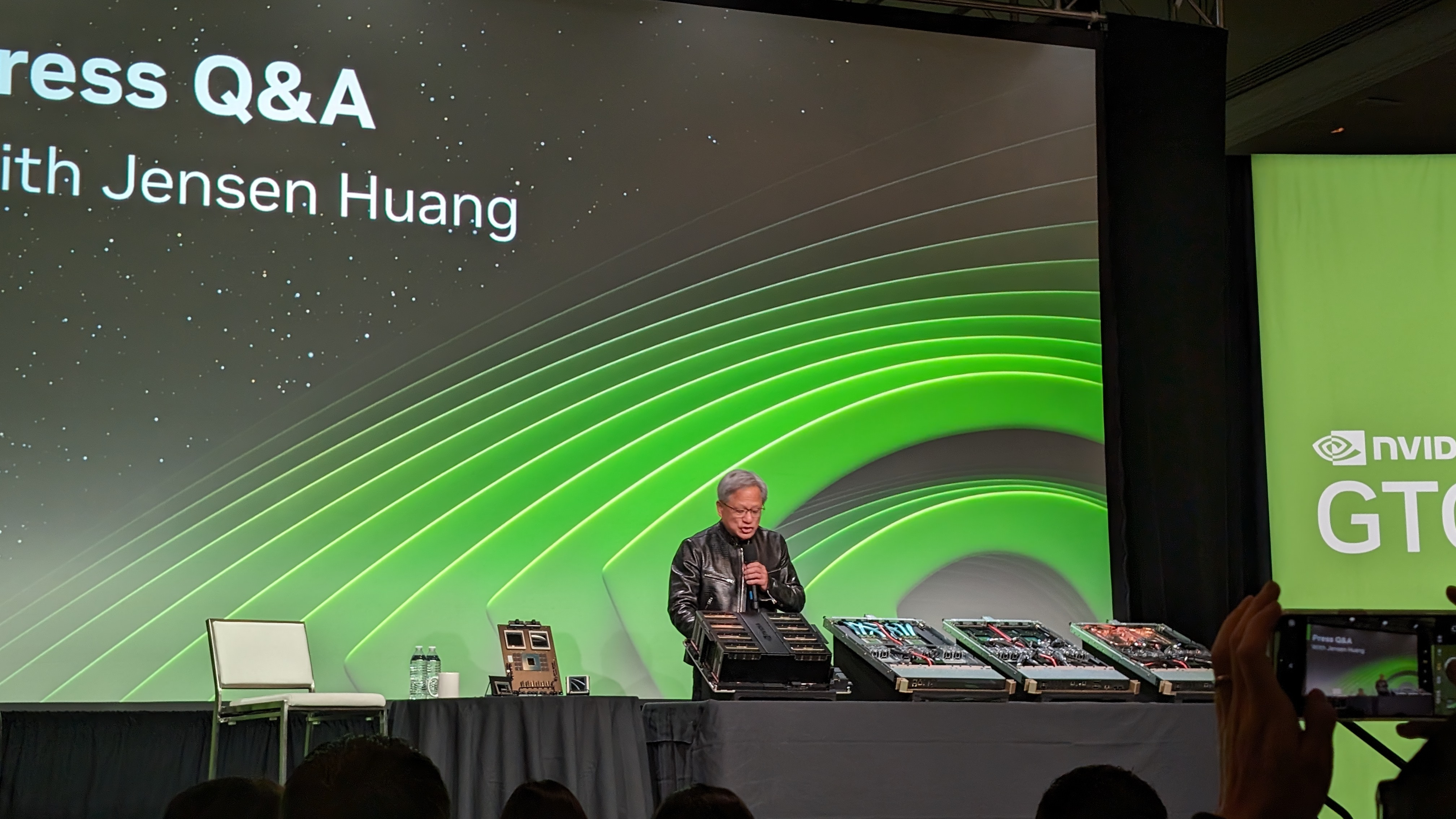

Huang now runs us through the various hardware options on stage, highlighting how the new and expanded architectures can open up entirely new dimensions for Nvidia.

They're all very modular, so components can be quickly and easily removed and added.

With that, it's on to Q&A.

Huang is asked about Nvidia's computing sales to China, and whether the company has made any considerations or alterations for that market.

Huang says what's been announced at GTC 2024 is what's available - and Chinese customers are welcome to purchase.

Next, the goals of AI Foundry.

"Nvidia is always a software company," Huang notes - saying this won't be changing any time soon.

NIMs are going to take precedence, and can benefit organizations of all kinds, he adds.

Now a question on pricing for Blackwell - any more precise information?

Huang says that's difficult to answer, as Nvidia doesn't sell the chip on its own, and each system is different and will require different resources, so pricing will vary markedly.

"Nvidia doesn't build chips - Nvidia builds data centers," he reminds us. "We let you decide how you want to buy it...we work with you."

"This is not buying chips the way we used to sell chips...our business model reflects that."

Nvidia's opportunity is now more towards the data center market, a $250bn a year market - which is shifting towards accelerated computing thanks to generative AI.

Now a question about whether Jensen has spoken to AI hero Sam Altman about the latter's plan to expand the AI industry.

Huang says he agrees with Altman about the size of the opportunity, and notes that the shift from retrieval towards generation is a significant one.

Next, it's a question about AI chip start-ups, specifically Elon Musk's Grok.

There's a pause - "he seems so angry," chuckles Huang, who admits he doesn't know enough about Grok to comment.

"The miracle of the CPU cannot be understated," he adds, "it has overcome everything else added to a motherboard over the years," where software engineers can develop and improve it.

"What AI is, is not a software problem - it's a chip problem...our job is to facilitate the invention of the next ChatGPT," he adds.

A follow-up question asks about Huang's comments around young people no longer needing to learn programming.

"I think it's great to learn skills...people ought to learn as many skills as they can - but programming isn't going to be essential to be a success."

"The first great thing AI has done is to close the technology divide...if somebody wants to learn, then please do - we're hiring programmers!"

He adds that AI has already made a huge contribution to society by closing the skills gap - and that learning how to prompt could be the next key skill for people everywhere.

A follow-up question asks more about the technological gap - is it going to grow as AI disrupts all kinds of industries, and how can Nvidia help them?

Huang notes that there are companies from all kinds of industries at GTC 2024, from healthcare to automotive to finance and many more - "and I'm fairly certain they're here because of AI."

Their industry domain is first, but computing is second - "and we've closed the technology divide for them," by making such services available, he notes.

What are the limits when it comes to simulations, he is asked, especially at scale.

Huang reminds us that LLMs are operating in an unstructured world, so how they generalize is the "magic" - the ChatGPT moment for robots might be right around the corner, he adds.

How can hallucinations be conquered, especially in critical industries such as healthcare, where correct answers are vital?

"Hallucinations are very solvable," Huang replies - using retrieval augmented generation is the key, telling the model to first do a search for the right answer before providing the answer.

"The chatbot is no longer just a chatbot - it is a research assistant," he says.
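Huang didn't go into implementation details, but the search-first, answer-second pattern he describes can be sketched in a few lines. The Python below is a toy illustration under stated assumptions, not Nvidia's method. A simple keyword-overlap search stands in for a real vector database, `generate` is a placeholder for whatever language model you would call, and all function names and documents are hypothetical.

```python
import re
from typing import Callable


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents that share the most words with the query.

    Keyword overlap is a hypothetical stand-in for real embedding search;
    documents with no overlap at all are dropped rather than returned.
    """
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return [d for d in ranked[:k] if query_words & tokenize(d)]


def build_prompt(query: str, passages: list[str]) -> str:
    """Ground the model by telling it to answer only from the retrieved sources."""
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}\nAnswer:"
    )


def answer(query: str, documents: list[str], generate: Callable[[str], str]) -> str:
    """Search first, then answer; decline instead of guessing if nothing is found."""
    passages = retrieve(query, documents)
    if not passages:
        return "No supporting source found."
    return generate(build_prompt(query, passages))


def echo_model(prompt: str) -> str:
    """Hypothetical placeholder 'model' that just returns the prompt it received."""
    return prompt


if __name__ == "__main__":
    docs = [
        "Blackwell packs 208 billion transistors across two dies.",
        "Earth-2 is a set of APIs for climate and weather simulation.",
        "NIM packages models together with their dependencies.",
    ]
    print(answer("How many transistors does Blackwell have?", docs, echo_model))
```

Swapping in a proper embedding model and a real LLM client would change the plumbing but not the shape. The system retrieves first, grounds the prompt in what it found, and declines when nothing relevant turns up.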

When it comes to Blackwell, how do you calculate the compute requirements, or is it just a race to infinite power?

"We have to figure out the physical limits first," Huang says, and then go beyond them by being energy efficient - "way more energy efficient".

Blackwell offers a major upgrade on Hopper for many tasks, and the boost in energy efficiency also means Nvidia can push the limits.

"Energy efficiency and cost efficiency is job one," he declares, "it's at the core of what we do."

Asked about "ChatGPT moments", Huang is pressed for an example of where he sees the next one happening.

Some are exciting for technical reasons, some for impact reasons, and some for "first contact reasons."

He names OpenAI's video-generator Sora as a particularly exciting example, and also highlights the work by Nvidia's own CorrDiff model for weather forecasts and prediction.

Generating new drugs and proteins is another hugely impressive potential use case, he says, "that's very impactful stuff" - as is robotics.

Next there's a question about quantum, and what Nvidia's plans are in this area.

"We are potentially the largest quantum computing company that doesn't build quantum computers...we don't feel the need to build another one," he adds.

Again, simulations are an area of interest, in terms of workloads and encryption, showing the wide-ranging possibilities there.

Now, a politically-themed question about the tensions between the US and China, and if this will affect Nvidia's path forward.

Nvidia has to understand all the policies to make sure it is compliant, but also boost its own resilience in the supply chain, Huang notes. The company's new chips are made of thousands of parts from all around the world, many of them from China, so the global supply chain is quite complicated. Even so, Huang says he is confident that the goals of the US and China are not adversarial.

Next, Huang is asked about Nvidia's relationship with TSMC.

"Our partnership with TSMC is one of the closest we have...the things we do are very hard, and they do it all very well," he notes. "The supply chain is not simple...but these large companies work together, on our behalf...and collaboration is very good across companies."

Next is a question about cloud strategies, especially as cloud companies are increasingly building chips themselves.

Nvidia works with cloud service providers to put its hardware in their clouds, Huang notes - "our goal is to bring customers to their cloud".

Nvidia is a computing platform company, he reminds us.

Next is a question about AGI - and also a note around whether Huang sees himself as the modern-day Oppenheimer.

He laughs off the latter part and, returning to the question, asks the room what exactly AGI is, noting that it cannot be a permanent, specific moment.

He asks how you would recognize when an AI model is doing tasks better than "most people", but hints that within the next five years, there could be a significant step forward.

"I believe that AGI - as I specify it - is set to arrive within the next five years," he says, but laughs that we have no idea how we specify each other.

Jensen has broken free from the stage! The lighting and temperature have forced him off and down into the audience.

"These are the worst working conditions ever," he jokes.

We're nearing the end here - a question about the Japanese market, which Huang says is benefitting hugely from boosted productivity thanks to Nvidia's partnership.

With essentially a front-row seat to Jensen now, we have to say - his leather jacket looks absolutely immaculate, and definitely top-of-the-range.

That's what being the CEO of the most exciting company in the world gets you, I suppose.

That's a wrap - it's been an exhaustive Q&A, but Huang seems like he could go on all day.

We're off to grab some lunch, and will be back with more shortly.

We've had a busy afternoon here, visiting Nvidia's massive HQ just outside of San Jose. Covering 10 buildings across a huge campus, we were given a tour of the Endeavor and Voyager buildings, but weren't allowed to take any inside photos.

We're working on a write-up of what we saw during the tour, but for now, we'll bid you farewell from Nvidia GTC 2024 day two - see you tomorrow for some final thoughts!

Hello from day three at Nvidia GTC 2024! It's the final day, and we've been invited on a special closed press tour of the Nvidia stand, getting a close-up look at some of the biggest announcements here without the crowds.

For all you rack fans, here's the impressive line-up of partner hardware coming to a data center near you soon - it's a seriously impressive sight.

Blackwell is set to provide a major breakthrough for a wide range of vendors...

The future of Nvidia - in one image?

And with that, it's a wrap on our time at Nvidia GTC 2024. It's been a packed few days, so we hope it's been interesting for all of you too!

This isn't the end of our coverage, as we'll have more articles coming soon, but for now, thanks for reading TechRadar Pro!